Bounded Confidence Revisited: What We Overlooked, Underestimated, and Got Wrong

Frankfurt School of Finance & Management, Germany

Journal of Artificial

Societies and Social Simulation 26 (4) 11

<https://www.jasss.org/26/4/11.html>

DOI: 10.18564/jasss.5257

Received: 11-Sep-2023 Accepted: 11-Sep-2023 Published: 31-Oct-2023

Abstract

In the bounded confidence model (BC-model) (Hegselmann and Krause 2002), period by period, each agent averages over all opinions that are no further away from their actual opinion than a given distance ε, i.e., their ‘bound of confidence’. With the benefit of hindsight, it is clear that we completely overlooked a crucial feature of our model back in 2002. That is for increasing values of ε, our analysis suggested smooth transitions in model behaviour. However, the transitions are in fact wild, chaotic and non-monotonic—as described by Lorenz (2006). The most dramatic example of these effects is a consensus that breaks down for larger values of ε. The core of this article is a fundamentally new approach to the analysis of the BC-model. This new approach makes the non-monotonicities unmissable. To understand this approach, we start with the question: how many different BC processes can we initiate with any given start distribution? The answer to this question is almost certainly for all possible start distributions and certainly in all cases analysed here, it is always a finite number of ε-values that make a difference for the processes we start. Moreover, there is an algorithm that finds, for any start distribution, the complete list of ε-values that make a difference. Using this list, we can then go directly through all the possible BC-processes given the start distribution. We can therefore check them for non-monotonicity of any kind, and will be able to find them all. This good news comes however with bad news. That is the algorithm that inevitably and without exception finds all the ϵ-values that matter requires exact arithmetics, without any rounding and without even the slightest rounding error. As a consequence, we have to abandon the usual floating-point arithmetic used in today’s computers and programming languages. What we need to use instead is absolutely exact fractional arithmetic with integers of arbitrary length. This numerical approach is feasible on all modern computers. The new analytical approach and results are likely to have implications for many applications of the BC-model.Introduction

Alfred Jules Ayer’s final remark 1978

in the BBC-Television series Men of Ideas.

Ayer was interviewed by Brian Magee;

cf. Magee (1982, 109).

The very essence of what now is known as the bounded confidence model (BC-model, for short) is simple: Period by period, all agents average over all opinions that are not further away from their actual opinion than a given distance \(\epsilon\), their ‘bound of confidence’. The model was described for the first time in Krause (1997 p. 47ff.), an article published in German. To refer to the model, the term bounded confidence was used for the first time by Krause in 1998, namely in a conference presentation in Warzaw, published as (Krause 2000 cf. 231). A first comprehensive analytical and computational analysis of the BC-model was given more than two decades ago in Hegselmann & Krause (2002).1 The model has a very close relative, namely the model in Deffuant et al. (2000). The main difference regards the updating procedure: In Hegselmann & Krause (2002) the updating happens simultaneously while Deffuant et al. (2000) use a pairwise sequential procedure.2 Soon it became a common practice to refer to both models as BC-models and to distinguish two variants: the Hegselmann-Krause model (HK-model for short) and the Deffuant-Weisbuch model (DW-model for short).

In terms of its definition, the BC-model is easy to understand—and it ‘invites’ all sorts of objections. But it is often easy to modify the model so that it covers the objection. This combination of features has made the model a kind of platform from which new projects can be started. And this probably explains, at least in part, why the model is highly cited (and still increasingly so). It is now even difficult to keep track of the survey articles in which the BC-model, its extensions, modifications or newly discovered analytical features are an important component.3

In the following, I will focus exclusively on the basics of the BC-model and our analysis of it back then in Hegselmann & Krause (2002). The retrospective diagnosis is: We completely overlooked a crucial feature of our model. For increasing values of the confidence level \(\epsilon\), our analysis at the time suggests smooth transitions in the model’s behaviour. But in fact the transitions are wild, chaotic and non-monotonic. For example, we thought that for increasing values of \(\epsilon\), the number of opinion clusters surviving after stabilisation would decrease monotonically. But this is wrong—it may well be the case that a larger \(\epsilon\) leads to more final clusters. The most dramatic example of such an effect is a consensus that falls apart for larger values of \(\epsilon\) (remember: the initial distribution is held constant). What is more, for increasing values of \(\epsilon\) such effects can occur many times.

Similarly, it is wrong to think that for a constant start distribution of opinions the width of the final stable opinion profile decreases monotonically as \(\epsilon\) increases. Again, for one and the same start distribution an increasing final profile width can occur many times. In Hegselmann & Krause (2002) we did not explicitly state what turned out to be wrong. But we did not write it down just because we thought it was obvious—the false belief was suggested in a very natural way by the way we were analysing at the time. Later in this article it will become clear how this could happen, and some lessons will become clear that can be taken away.

Jan Lorenz was the first to discover the wild, chaotic, and non-monotonic transitions. In his equally ingenious and insightful article Consensus Strikes Back in the Hegselmann-Krause Model of Continuous Opinion Dynamics Under Bounded Confidence (Lorenz 2006), he mentions and demonstrates all the non-monotonicities that I mentioned above. The title of his article hints at perhaps the most dramatic effect of this kind.4 My article focuses on all the non-monotonicities in BC-processes that Lorenz discovered. And in a sense, I also want to cover those that are still waiting to be discovered. To this end, I present a new and rather fundamental approach to the analysis of the BC-model. In the new approach, the non-monotonicities that we overlooked at the time become directly obvious; in a sense, they are even unmissable.

The new approach starts from the following simple, but fundamental question: Given a certain start distribution of opinions, where all opinions are real-valued numbers from the interval \([0,1]\), we can run the BC-dynamics for that start distribution with any of all the possible confidence levels \(\epsilon \in [0,1]\). This set of possible \(\epsilon\)-values is an uncountable infinity. Given all this, when we ‘play around’ with possible confidence levels, how many different BC-processes can we initiate with the given start distribution? The answer to the question is: Almost certainly for all possible start distributions, and certainly in all cases analysed below, it is always a finite number of \(\epsilon\)-values that make a difference for the processes that we start. Moreover, there is an algorithm that finds for any start distributions the complete list of \(\epsilon\)-values that make a difference. Such a list is invaluable. With it, we can directly go through all BC-processes that are possible given the start distribution. We can check them for non-monotonicities of any kind—and we will find them all.

This is obviously very good news. But it comes with some bad news: The algorithm that, inevitably and without exception, finds all \(\epsilon\)-values that matter, requires absolutly exact arithmetic without any rounding anywhere and without even the very slightest rounding error. As a consequence, we have to abandon the usual floating-point arithmetic as it is normally used in today’s computers and programming languages. What we have to use instead is an absolutely exact fractional arithmetic with integers of arbitrary length. This numerical approach is feasible on all modern computers (details later). But it comes at a high cost in terms of speed.

In what follows, I have put together a number of arguments that may seem unrelated at first. However, later on they merge into a common thread. The next section deals with the basics of BC-processes. I introduce some notational conventions and definitions. We will go through some application contexts and reflect on their status. I then describe the kind of dual visualisation of BC-processes that is used throughout the article. It is dual in the sense that it shows BC-processes simultaneously as a dynamical system with its trajectories evolving over time, and as a dynamical network with links emerging or disappearing over time. The new type of visualisation is a significant improvement on the old one in Hegselmann & Krause (2002), which did not show network structures.

Section 3 deals with the worst enemy of the BC-model. It is an enemy within, namely the ordinary floating-point arithmetic. I am afraid that no model is more susceptible to errors inherent in floating-point arithmetic than the BC-model. In the computation of BC-processes, it can easily happen that representational or operational floating-point errors (their order of magnitude is \(10^{-17}\)) cause subsequent errors of 16 orders of magnitude higher in the next period. We discuss four strategies for dealing with these numerical difficulties.

Section 4 introduces a concept that is key for our new type of analysis of BC-processes, namely \(\epsilon\)-switches (often called switches for short). They turn out to be the \(\epsilon\)-values that make a difference for BC-processes with a given constant start distribution. We apply the concept to an example and describe the algorithm that finds all \(\epsilon\)-switches for any start distribution. Based upon the discussion in Section 3, it is clear that the algorithm requires absolute numerical accuracy which floating-point arithmetic is inherently incapable of providing. There is no other choice but to abandon the usual floating-point arithmetic and to resort to an absolutely exact fractional arithmetic throughout.

To gain more general insights about switches beyond our simple example, we apply the search algorithm to a larger class of start distributions, each with \(n\) opinions equidistantly spaced in the unit interval \([0,1]\). Since we know all of their \(\epsilon\)-switches from the algorithmic search, we look at their total number, and then focus on non-monotonicities, in particiular consents that fall apart again under the next larger \(\epsilon\)-switch.

In Section 5 we introduce a new graphical research tool, namely \(\epsilon\)-switch diagrams. Since we know all the \(\epsilon\)-values that make a difference, we can focus on these and only these values (i.e., the \(\epsilon\)-switches) and directly visualise the final cluster structure they produce. Such \(\epsilon\)-switch diagrams make it (well, almost) impossible to miss anything that we missed back in Hegselmann & Krause (2002).

In the final Section 6 we draw together some of the strands of argument and insights that have emerged along the way (some of them incidentally and by the way).

There are three appendices to this article. The purpose of these appendices is to keep the main text, which is already very long, free of very technical and advanced details that are not essential for understanding the central line of argument. The core of Appendix A is a series of numbered Analytical Notes which, stated in a more formal and technical language, consider central properties of \(\epsilon\)-switches in general. Appendix B focuses both on universal properties of equidistant start distributions, presented as Analytical Notes as in Appendix A, and on figures showing additional universal properties not covered by the figures in the main text. Many properties of floating-point arithmetic play an important role in this article. I have included only the most important in the main body of the article. When working with the BC-model, modifying and extending it, one needs to know more. Therefore, there is an Appendix C which, in my opinion, contains the minimum of what (at least, but better not only) BC-modelers should know about floating-point arithmetic.

BC-Processes: Definition, Application, Visualisation

Notation and definitions

In a first step we introduce some terminology that finally will allow us to state precisely what we mean by a BC-process (or, equivalently, BC-dynamics):

- There is a set \(I\) of \(n\) agents; \(i, j = 1, \dots, n\); \(i, j \in I\).

- Time is discrete; \(t = 0, 1, 2, \dots\).

- Each individual starts at \(t=0\) with a certain opinion, given by a real number from the unit interval; \(x_{i}(0) \in [0,1]\).

- The profile of opinions at time \(t\) is

\[X(t)= x_{1}(t), \dots , x_{i}(t), \dots, x_{j}(t), \dots, x_{n}(t).\] \[(1)\] - Each agent \(i\) takes into account agents \(j\) whose opinions are not too far away, i.e., for which \(|x_{i}(t) - x_{j}(t)| \leq \epsilon\), where \(\epsilon\) is the confidence level.5 That level determines the size of \(i\)’s confidence interval \([x_{i}-\epsilon, x_{i} + \epsilon]\).6

- The set of all agents \(j\) that \(i\) ‘takes seriously’ at time \(t\) (and given \(\epsilon\)) is:

i.e., the set of all agents in \(i\)’s confidence interval. For short, we will often refer to \(I\bigl(i,X(t), \epsilon \bigr)\) as the set of \(i\)’s \(\epsilon\)-insiders. Correspondingly, the set of \(i\)’s \(\epsilon\)-outsiders is the complimentary set of \(I\bigl(i,X(t), \epsilon \bigr)\), namely\[ I\bigl(i,X(t), \epsilon \bigr)= \{j\big||x_{i}(t) - x_{j}(t)| \leq \epsilon \},\] \[(2)\] \[ O\bigl(i,X(t), \epsilon \bigr) = I\bigl(i,X(t), \epsilon \bigr)^C = \{j\big||x_{i}(t) - x_{j}(t)| > \epsilon \}.\] \[(3)\] - The updated opinion of agent \(i\) is the arithmetic mean of all opinions \(x_{j}(t)\) for which \(j \in I\bigl(i,X(t), \epsilon \bigr)\):

\[ x_{i}(t+1)= \frac{1} {\lvert I\big( i,X(t), \epsilon \big)\rvert} \sum_{j \in I\bigl(i,X(t),\epsilon \bigr)} x_{j}(t).\] \[(4)\]

Based upon (1)-(7), we can now define: A sequence of opinion profiles is a BC-process if and only if it is generated by the updating rule (Equation 4). Obviously, a BC-process is uniquely characterised by the start distribution \(X(0)\) together with a confidence level \(\epsilon\). Therefore, in the following we will often refer to a BC-process simply by the ordered pair \(\langle X(0),\epsilon \rangle\).7 Formally, the process is an \(n\)-dimensional dynamical system.

Some second-order reflection upon the syntactical and semantical status of (1)-(7) helps to avoid major confusions and misunderstandings: The very heart of the characterisation of BC-processes is given in Equation 4. The preceding introduction of the set \(I\), the discrete time \(t\) etc., that is all the preparatory provision of terms that are used in Equation 4. As such, Equation 4 is a mathematical formalism only. However, by a preceding sentence like “There is a set \(I\) of \(n\) agents; \(i, j \in I.\)”, we give the formalism an interpretation that, if only in a sketchy way, hints at an intended type of applications—an application on what we normally call ‘agents’. Another provision says that the components of a profile \(X(t)\) should be understood as opinions. Additionally, a time sequence is assumed. As a consequence, we can read Equation 4 as an exactly specified interaction process of agents and their opinions over time.8

Altogether, we thus obtain by (1)-(7) all that we need for a syntactically and semantically sufficiently clear definition of the predicate “... is a BC-process”. This definition explicitly introduces a new predicate. Whoever understands a predicate, understands a concept as the meaning of that predicate—and that is something that is the same in all the concrete, written or spoken occurrences of the predicate somewhere in time and space, including occurrences as a synonymous term in different languages. But, then, what is exactly meant by the term “BC-model”? Throughout this article, I use the expression “BC-model” as a (meta-language) name for the (object-language) predicate “... is a BC-process”.

One should also be clear that predicates (as well as the concepts that constitute their meaning), are not the kind of entities that can be said to be true or false—and that holds for a primitive predicate like “... is green” as well as for our less primitive predicate “... is a BC-process”. Empirical truth or empirical falsity comes into play by a claim that a particular real-world process is an instance of a predicate (or falls under the concept that constitutes the meaning of this predicate). Such assertion sentences express propositions, and only they, the propositions, are capable of being true or false. Given the definition and the whole mathematical apparatus logically connected with it, logical-mathematical propositions can also be formulated and possibly proved. However, these are logical-mathematical truths, not empirical truths.

Some application contexts

As far as the types of representable opinions are concerned, the BC-model is more general than one might think at first: It could be about the putative probabilities, which themselves may concern qualitative, comparative, or quantitative issues. The opinions may concern any real-valued quantitative problem, provided that one can reasonably normalize the range of possible opinions to the unit interval. The opinions could concern, for example, the length of a river or the lifetime of a car. Opinions could express the intensity or importance of a desire.9 They could be opinions about moral desirability (0: extremely bad; 0.5: neutral; 1: extremely good). Or the opinions could concern a desirable budget share. On the other hand, non-continuous opinions, e.g., discretized or binary opinions, are not covered.

Apart from the different types of opinions, there are quite different contexts of possible applications. Here some (not mutually exclusive) examples:

- Compromise contexts: There is a group of people that exchange their views. For reasons such as uncertainty, interest in compromise, a preference for conformity, or even some social pressure, everyone is willing to move into the direction of the others’ opinions. However, there are limits to the willingness to compromise: An individual \(i\) with view \(x_i(t)\) is willing to compromise with individuals with opinions that are not too far away—and over them is averaged.

- Social media contexts: There is a digital platform with a central algorithmic coordination that matches user \(i\) with users \(j\) whose opinions \(x_j(t)\) are not too far away from \(i\)’s opinion \(x_i(t)\). User \(i\) then averages over these opinions. In such a context, \(\epsilon\) is the distance tolerance of a centrally organized filter bubble. Another social media variant would be a digital platform that allows all users \(j\) to send their opinions \(x_j(t)\) to all other users \(i\). As a recipient, however, user \(i\) only takes note of opinions that are not too far removed from his or her opinion. The average is then formed over these values. In this variant, \(\epsilon\) is the distance tolerance of a decentralised echo chamber.10

- Expert-disagreement contexts: There is a group of experts on something. Each expert has a well-considered opinion about the problem in question. Nevertheless, they disagree. And then what? In social epistemology, a much-discussed resolution proposal is “Split the difference!” (Douven & Riegler 2010 cf. 148). But what does that mean, and where does it lead to? The BC-model can be seen as a formal specification of the conflict resolution advise. In that perspective, the BC-model becomes a tool to answer normative or technical questions in the now blossoming field of (computational) social epistemology: The qualitative advise “Split the difference!” becomes a quantitative advise with regard to bounds of confidence, i.e., \(\epsilon\)-values. Their very different effects can be precisely computed and, then, evaluated from the point of view of their epistemic desirability.

With regard to the first two contexts, the BC-model could be seen as a direct idealising definition of a mechanism that is likely to play a greater role in these contexts. The mechanism could explain how, under certain conditions, effects often described or explained by terms such as homophily, conformity or confirmation bias occur.

Another way of looking at the BC-model would be to see the model itself as a more precise, specified, and metric re-definition of what is addressed by broad and qualitative concepts such as homophily, confirmation or conformity bias, which themselves summarily ‘conceptualize’ findings from countless empirical studies. The re-definition is tailored to dynamical contexts, continuous opinions as the subject matter, and circumstances in which homophily, confirmation or conformity effects come in degrees and with limits. What we do is a kind of conceptual engineering and very similar to what Rudolf Carnap (1891–1990) called an explication.11 He describes the procedure in chapter one of his Logical Foundations of Probability (Carnap 1950 pp. 1–18).12 In Carnap’s view, an explication transforms a more or less ambiguous, prescientific concept, the explicandum, into an improved concept, the explicatum. Carnap formulates four adequacy conditions:

A concept must fulfill the following requirements in order to be an adequate explicatum for a given explicandum: (1) similarity to the explicandum, (2) exactness, (3) fruitfulness, (4) simplicity (1950 p. 5).

In Carnap’s case, the prescientific terms are ‘confirming evidence’, ‘degree of confirmation’ or ‘probability’; cf. (1950 p. 21). His explicatum is the precise concept ‘degree of confirmation’. We can look at ‘homophily’, ‘confirmation bias’ or ‘conformity bias’ as explicanda, and consider the definition of BC-processes as their explicatum. Carnap’s four adequacy conditions are relevant in our case as well. But then there may be a difference: Carnap calls the explicandum a prescientific concept (cf. 1950 p. 1 et passim). With regard to my explicanda, I do not want to talk like that. Rather, it is simply that the very broad and qualitative concepts of homophily, confirmation or conformity bias are specified, made more precise, and quantified through their explication. The explicandum gets transformed, but not with the intention to replace it in all contexts.

Obviously, the broad and qualitative concepts of homophily, confirmation or conformity bias can be explicated in other ways than through the BC-model. One could think of non-real-valued opinions, continuous time or non-simultaneous updating. Thus, even as a mere explication of ‘homophily’, ‘confirmation bias’ or ‘conformity bias’, the-BC model does not have any claim to exclusivity.

In terms of content, the BC-model describes a mechanism: certain parts, namely agents with certain opinions, interact in a precisely defined way and thereby cause certain phenomena to occur. In opinion formation processes, however, there are also plausible mechanisms of a quite different kind, for example those in which the distance \(|x_i - x_j|\) is irrelevant. Each agent \(i\) could assign a weight \(w_{ij}\) to each other agent \(j\) and also to himself, which expresses the power, authority, competence et cetera assumed by \(i\) in \(j\). Let the sum of the weights that \(i\) assigns always be \(1\), and let the assignment of weights be constant over time. The updating is then done as a weighted averaging:

| \[ x_{i}(t+1)= \sum_{j \in I} w_{ij} \cdot x_{j}(t) \text{ with } 0 \leq w_{ij} \leq 1 \text{ and } \sum_{j \in I} w_{ij}= 1 .\] | \[(5)\] |

The mechanism characterised in this way can be traced back to French Jr (1956), Harary (1959) and DeGroot (1974). Today it is often referred to as the DeGroot model. I will do the same and refer to it as the DG-model or DG-processes. There are other mechanisms that can lead to a repulsive drifting apart of opinions.

In real-world opinion-formation processes of groups or even entire societies, a multitude of different mechanisms are likely to be at play, both individually and collectively. This does not bode well for realistic agent-based models. Precisely this could be a reason to rely on a completely different kind of modelling, namely machine learning based on gigantic data sets (big data). Technically, however, the modelling is then only implicit, i.e., hidden in a neural network, and thus largely opaque to humans; in any case, massive problems arise with regard to transparency, interpretability and explainability of what the model actually does.13 However, even without explicit human understanding of such a model, it might still be possible to make extremely good predictions with the model. This creates an entirely new epistemic situation which, together with other breakthroughs in AI that are equally disruptive to the intellectual position of the homo sapiens, requires a fundamental rethinking and redefinition of desirable epistemic ideals for beings like us.

Be that as it may, the BC-model is in any case epistemically old-fashioned: it aims at explicit understanding14,is deliberately kept simple, and precisely defines a certain mechanism, which is then examined in isolation and with conscious disregard of all other influences.15 It is clear from the outset that countless other mechanisms may be at play in actual opinion formation processes. With regard to what can be achieved with the model in descriptive terms, epistemic modesty is called for: The BC-model tells us something about what happens in a context in which it describes the predominant mechanism. Depending on the accuracy with which one knows the initial conditions in such a context, one can then describe, explain or predict accordingly. However, all of this is only possible under the condition that the mechanism in the given context is actually predominant. But this condition may not be fulfilled. Other mechanisms could be predominant, there could be an interplay of different mechanisms. The BC-model is therefore not the universally applicable opinion formation model, but merely one of many.16

Different from the first two contexts of application, in the expert-disagreement context (the third context listed above), the issue is no longer descriptive, explanatory or predictive—at least not in the first place. The paradigmatic problem is that of a group of experts, each of whom has a subjective assessment of an unknown probability, but who then have to act as a team; or, alternatively, a single decision-maker who has to decide on the basis of the different assessments of a group of experts. In both cases, the question is: are there ways to aggregate the divergent opinions into a single, reasonable, consensual assessment?

This problem already inspired the development of formal models in the 1950s and 1960s. The models defined—or better: designed—mechanisms to form consent out of dissent in a somehow reasonable manner. Winkler (1968) gives an early, very precise overview and calls the problem “the consensus problem” (1968 p. B63).17 The very simple solution via the arithmetic mean is ruled out, since this would give all experts the same weight (cf. Winkler 1968 p. B63ff.). But only in very rare cases are the members of expert groups likely to consider themselves equally good and therefore willing to aggregate their opinions with equal weight. The same will analogously apply to an individual decision-maker with regard to the experts advising him or her. But then what?

In the mid-1960s, in a different context and from a different perspective, there was a second, very different variant of a consensus problem. It was succinctly formulated by Robert P. Abelson (1928-2005) in his article Mathematical Models of the Distribution of Attitudes Under Controversy (Abelson 1964). At that time there was already a whole range of mathematically precisely formulated opinion dynamics. These included, for example, the DG-model defined above by Equation 5. In practically all of these models, the same holds as in the DG-model: even under very weak conditions, a final consensus is inevitable. On the other hand, numerous empirical studies had shown that bimodal distributions, polarisation, and community cleavage were quite common real-world outcomes. With this situation in mind, Abelson then writes:

Since universal ultimate agreement is an ubiquitous outcome of a very broad class of mathematical models, we are naturally led to inquire what on earth one must assume in order to generate the bimodal outcome of community cleavage studies (Abelson 1964 p. 153).

So the first consensus problem is too little consensus among experts, the second too much consensus as a result of models.

The BC-processes defined above were originally primarily conceived by Krause and myself as a stylised procedure for analysing and perhaps partially solving the consensus problem of the first type.18 But in addition, as a kind of side effect, it would also solve the second consensus problem. Regarding this second consensus problem, it is clear that the BC-model produces polarisation in a certain range of \(\epsilon\)-values (details and complications later). With regard to the first consensus problem: Couldn’t a consensus be reached by experts who, when iteratively updating their opinions, only take into account those other experts who are within a certain \(\epsilon\) of their own opinion, but who otherwise know nothing about the others? The abstract communication structure (iteration, anonymity) thus corresponds to the Delphi study format developed in the 1950s within the RAND cooperation, which serves the goal “to obtain the most reliable consensus of opinion of a group of experts” (Dalkey & Helmer 1963 p. 458).19

The much older DG-model has been considered and analysed as a possible solution to the consensus problem much earlier, at the latest in the 1960s: What would have to be the case with regard to the mutually attributed weights \(w_{ij}\) for a DG-process to eventually lead to a consensus among experts? While French Jr (1956) and Harary (1959) had empirical and descriptive applications in mind, DeGroot (1974) clearly envisages a consensus technology in the DG-processes.20 Lehrer and Wagner then even upgraded DG-processes to the unique rational solution of the consensus problem.21

In the third application context considered here, there is obviously a very fundamental change of perspective. It is now about the design of a mechanism that could be suitable to achieve a certain goal, namely the consensual aggregation of expert opinions. In such a perspective, neither the actual use nor the actual value of the confidence level \(\epsilon\) (or the weights \(w_{ij}\)) is a matter of fact, but rather a matter of individual or collective choice. More generally, in the third type of applications we take a normative or technical stance: Given certain epistemic goals, the model is used to develop efficient epistemic policies.22

Visualising a dual nature: BC-processes as dynamical systems and dynamical networks

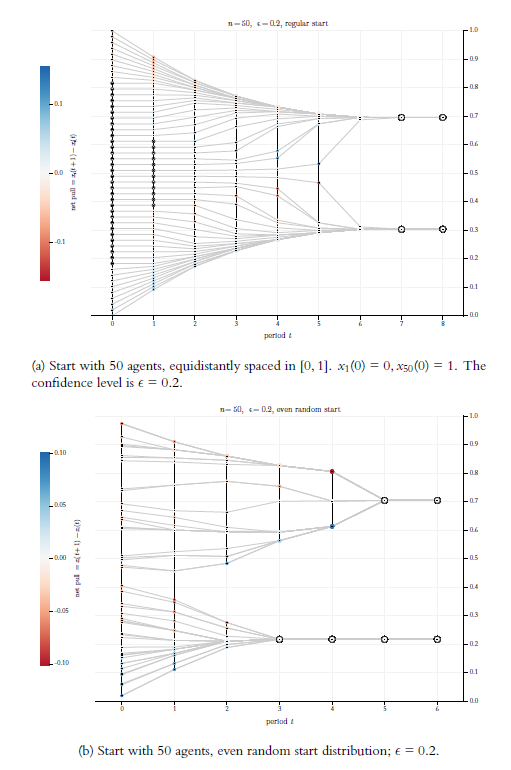

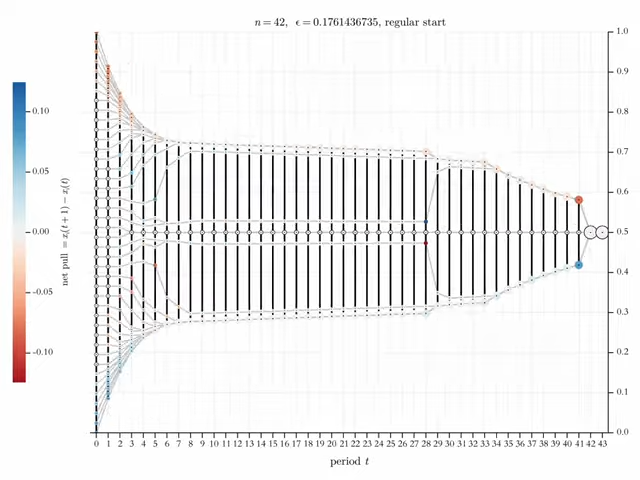

Figure 1 shows two visualisations of BC-processes. The visualisations are done in a specific style that will be used throughout in this article: Grey lines are the pure trajectories of the opinions over time \(t\) (\(x\)-axis). In each period, each trajectory is marked by a filled, colored or light grey circle of a certain size. The colors inform about the net balance of upward and downward directed forces that act on an opinion \(x_i(t)\). Acting forces in an BC-process are the opinions \(x_j(t)\) within \(i\)’s confidence interval. Upward directed forces are exerted by opinions above \(x_i(t)\); the opinions below \(x_i(t)\) pull in downward direction. As a consequence, the net pull on an opinion \(x_i(t)\) is simply \(x_i(t+1)- x_i(t)\). And that is what we color according to the legend to the left of the diagram. Thus, shades of red indicate a downward net pull, shades of blue an upward net pull. Darker shades mean stronger pulls. A net pull of zero is marked specifically: We border the circle (filled with a very light grey) with an outer black line.

The size of the colored or grey circles indicates cluster size. A cluster is a group of agents that have the same opinion. A ‘lonely’ agent whose opinion nobody shares, is considered as a cluster of size one. Increasing circle size, means increasing cluster size.

Then there are vertical black lines. For an accurate understanding of the way they work, we introduce some terminology: A profile \(X(t)\) is an ordered profile iff

| \[ x_{1}(t) \leq x_{2}(t) \dots \leq x_{i}(t) \leq x_{i+1}(t) \dots \leq x_{n}(t)\] | \[(6)\] |

\(X(t)\) is a strictly ordered profile iff

| \[ x_{1}(t) < x_{2}(t) \dots < x_{i}(t) < x_{i+1}(t) \dots < x_{n}(t)\] | \[(7)\] |

In the following we will always start with profiles \(X(0)\) that are strictly ordered. BC-processes that start strictly ordered, always lead to ordered profiles, but these are usually no longer strictly ordered.

Now back to the black vertical lines. Suppose an ordered profile. Then the vertical lines are drawn between neighboring opinions \(x_i(t)\) and \(x_{i+1}(t)\) step by step if and only if their distance is not greater than \(\epsilon\). Thus, a vertical black line between two opinions indicates that they mutually influence each other.23

There are graphical issues: In an ordered profile, graphically, opinions that are the same, get piled up on top of each other. From the stacked opinions, we see only the top one. But we know that all the opinions below have exactly the same properties as the top one, for instance the same cluster size or the same \(\epsilon\)-insiders and outsiders. The consequence is: As long as we see an uninterrupted vertical line, there is a network in which – directly or indirectly – all agents are connected to each other. The vertical line guarantees the existence of the corresponding network paths. Thus, the visual impression is completely right—despite the fact that, technically, the vertical lines are drawn stepwise, following the indices in the ordered profile. However, there is often a problem with distinct, but very close opinions. They may be that close (and thereby mutually being among their \(\epsilon\)-insiders) that one can’t see any more the black vertical connections between them. In Figure 1a we have this problem in some densely populated, outer regions of the profiles. In this case, we have to correct the graphical impression by what we know.

The grey horizontal lines visualise trajectories and thereby take the dynamical system perspective. The black vertical lines take a network perspective: They are links between agents that mutually influence each other. Whoever is an agent on a continuous vertical black line, is a member of the same network. Both BC-processes in Figure 1 start as one network. But BC-processes are dynamical networks in which the links change over time: As the missing black line indicates, the network in Figure 1a falls apart in period \(t=6\); in Figure 1b the same happens already in \(t=2\).

To describe phenomena as the ones we see in Figure 1, let’s introduce explicitly two additional concepts that will prove useful later: An ordered profile \(X(t)\) is an \(\epsilon\)-profile (at time \(t\)) iff

| \[{} |x_{i+1}(t) - x_{i}(t)| \leq \epsilon, \text{ for } i = 1, \dots, (n-1).\] | \[(8)\] |

Without going here very much into details, one can see in the Figures 1a and 1b some typical phenomena of BC-processes: Extreme opinions are under a one-sided influence and move direction center. Therefore, the range of the profile starts to shrink. At the extremes of the shrinking (sub-)profiles, the opinions condense. Condensed regions attract opinions from less populated areas within their \(\epsilon\)-reach. In the center some opinions are pulled upwards, while others are pulled downwards. At some point \(t\), the network falls apart, the profile splits. The split sub-profiles, the two networks respectively, constitute different ‘opinion worlds’, i.e., two communities without any influence on each other. In the two split off sub-profiles opinions contract. At some point in time, in the two sub-profiles all opinions have all opinions within their confidence interval. In the next period all opinions merge into one. The consequence is stability. (Already in Hegselmann & Krause (2002) we proved that a BC-process always stabilises in finite time \(\bar t\) in the usual sense of \(X(\bar t -1)= X(\bar t)\).)

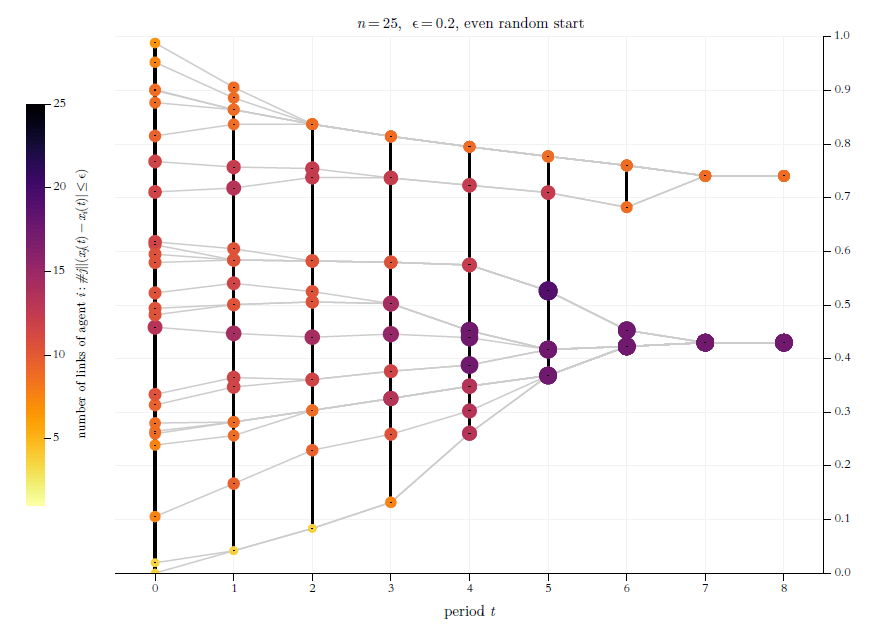

Our approach to the visualisation of BC-processes allows a two-dimensional representation of the network dynamics, whereas normally already the representation of the network for a certain time \(t\) requires two-dimensionality. As a consequence, the network dynamics itself can then only be visualised as a sequence of two-dimensional representations. Obviously, for the visualisation of BC-processes, we can manage with one dimension less. Ultimately, this is possible because all agents who have exactly the same opinion, then also have the same properties, such as their respective cluster size, links to other agents, and so on. In contrast to what is usually the case, we can therefore also stack agents that have the same opinion on top of each other without any problems: Although one then actually only sees the visualised properties of the topmost agent, one knows that all the agents below have the same properties. Figure 2 shows, how this approach can easily be used in order to visualise network (centrality) measures. As an example, Figure 2 visualises the agents’ total number of links to others.

The BC-model’s Worst Enemy: Floating-Point Arithmetic

To compute a BC-process requires to decide over and over again on sets of \(\epsilon\)-insiders and \(\epsilon\)-outsiders: Is \(|x_{i}(t) - x_{j}(t)| \leq \epsilon\), that is the decisive question. Back then in 2002 (and even quite a few years later), we considered that as a simple question, no problem for a computer. This turned out to be wrong.

Some examples of numerical disasters

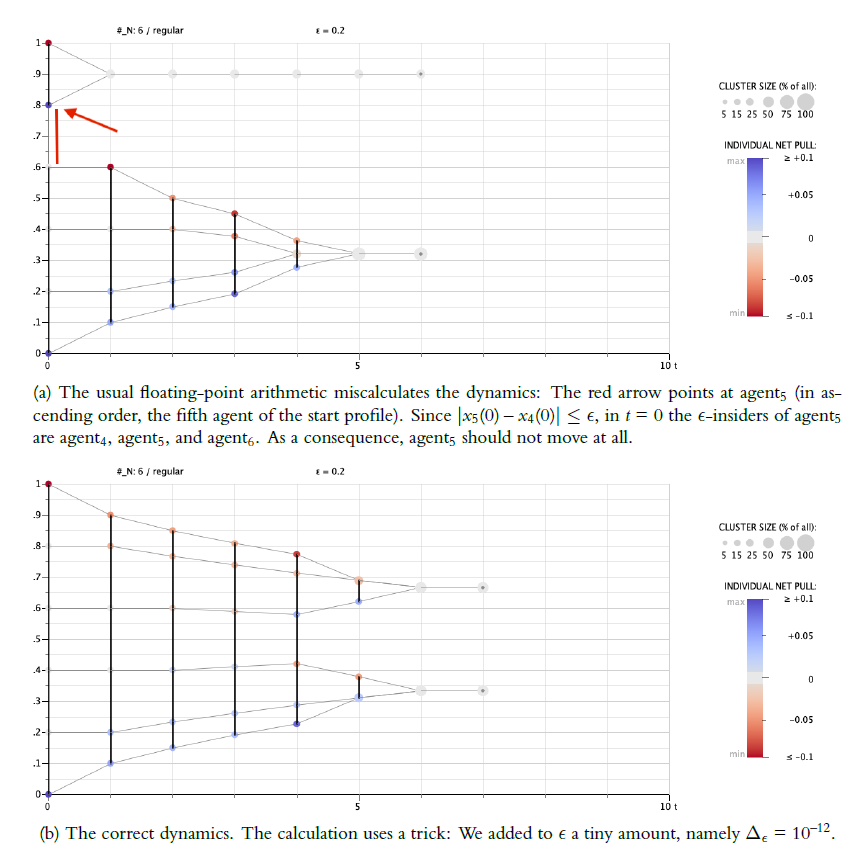

Figure 3a, top illustrates a case where the computer miscalculates the set of \(\epsilon\)-insiders. For \(X(0)\) we assume what we will call a regular start profile. In such a profile \(n\) opinions are equidistantly distributed in the unit interval \([0,1]\) according

| \[{} x_{i}(0) = \frac{i-1}{n-1}, \text{ for } i = 1, \dots, n.\] | \[(9)\] |

Our example is \(n=6\) and, thus, \(X(0)= \langle 0, 0.2, 0.4, 0.6, 0.8, 1 \rangle\).24 The confidence level is \(\epsilon = 0.2\), which is exactly the distance between neighbouring opinions in \(X(0)\). As a consequence, \(X(0)\) is an \(\epsilon\)-profile as defined above, and any two neighbouring opinions are for \(t=0\) mutually members of their sets of \(\epsilon\)-insiders. The red arrow in Figure 3, top points to a segment in the profile where something went wrong: The computer miscalculates the distance between agent\(_4\) (in ascending order, the fourth agent in the start profile) and agent\(_5\), and takes the two agents that obviously are mutually \(\epsilon\)-insiders as \(\epsilon\)-outsiders. As a consequence, from \(t=1\) onwards, the whole BC-process is numerically corrupted. The correct computation is shown in Figure 3b.

The computations that lead to the obvious mistake were done by a NETLOGO program. For the subtraction \(0.8 - 0.6\) one gets the faulty result \(0.20000000000000007\) instead of \(0.2\). The program gets it wrong by a tiny margin: \(2^{-53}\), \(10^{-17}\) respectively, that is the magnitude of the error. But that is sufficient to miss one element in two agents’ insider sets, which, then, causes follow-up errors that are 16 decimal magnitudes higher.25

What we see here, is not a problem specific to NETLOGO; it is simply an effect of the IEEE 754 standard for floating-point arithmetic, and that is the arithmetic that computers use by default.26 Floating-point arithmetic is an arithmetic with engineered numbers, often simply called floats, that approximate the uncountably infinite set of real numbers by a huge but finite set of numbers that are represented by a bit string of a predefined length, today usually 64 bits. As a consequence, almost all real numbers can’t be represented exactly, and rounding to the nearest representable number becomes ubiquitous. That, then, has consequences as we see them in Figure 3a.

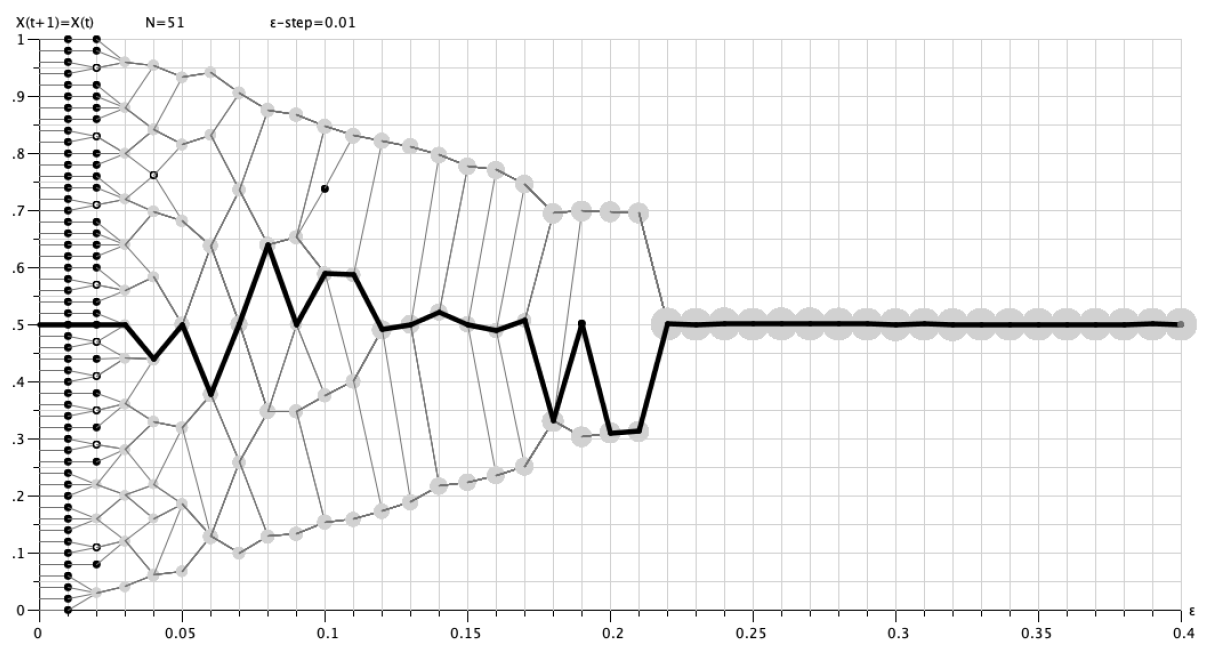

One might think that the computational error in Figure 3a is a somehow artificial and rare event. Figure 4 destroys such an impression: For \(n = 51\), we set up a regular start distribution according to Equation 9. Thus, our start opinions are the decimals \(0, \ 0.02,\ 0.04,\ \dots, \ 1\). Then we compute the resulting dynamics for the decimal \(\epsilon\)-values \(0,\ 0.01,\ 0.02,\ \dots,\ 0.4\). Each process is computed until it is stabilised. Then we display the results as a diagram that we call \(\epsilon\)-diagram: Along the \(x\)-axis we have the increasing \(\epsilon\)-values, the \(y\)-axis displays for each \(\epsilon\)-value the final stable profile \(X(\bar t)\).

Given that setting, we know two things in advance: First, whatever the \(\epsilon\)-value, since the start profile is regular, there has to be a mirror symmetry along the line \(y=0.5\) in all finally stabilised profiles \(X(\bar t)\). Second, there is one agent, namely agent\(_{26}\), whose opinion should never ever change: For all \(\epsilon\)-values, and for all periods \(t=0,\ 1, \ \dots, \ \bar t\), given the symmetry of the start distribution, the opinion value of agent\(_{26}\) should always be \(0.5\)—a centrist with the centrist position (and for whom, trivially, the upward and downward pull is always balanced to zero). That is how it obviously should be. Any violation of the symmetry, any aberration of \(x_{26}(\bar t)=0.5\), is a guarantee that numerically something went wrong. (But note, and keep in mind: This kind of symmetry is only a necessary, not a sufficient condition of numerical correctness.)

Figure 4 shows what floating-point arithmetic in our setting does—and it is a numerical catastrophy: In horizontal direction, as a kind of pseudo trajectory, light grey lines connect the final positions of the \(i\)th opinion for the stepwise increasing \(\epsilon\)-values. The thick black line is the ‘trajectory’ of the centrist agent\(_{26}\), whose opinion in numerically correct calculations will always be \(0.5\), but now—except for very small and very large \(\epsilon\)-values—almost never is. Additionally, a numerically correct computation is mirror-symmetric with regard to \(y=0.5\). But a visual inspection reveals symmetry violations all over. For \(\epsilon = 0.02\), the stabilised opinion of agent\(_{26}\) seems to be computed correctly, but other elements of \(X(\bar t)\) are not: The small filled black circle indicates a ‘cluster’ of just one agent, a black circle with a white dot inside, is a cluster of two agents. Scaled grey circles indicate cluster sizes of clusters \(\geq 3\) (for the scaling see the legend of Figure 3). For \(\epsilon = 0.02\), the cluster structure above and below agent\(_{26}\) is completely different. It is easy to extend the list of symmetry violations. In short: Figure 4 documents a major numerical disaster.27

Four computational strategies to escape numerical disaster

How to get out of the mess? There are at least four different options, three of them risky, but cheap; one of them completely safe, but costly:

- We stick to the floating-point arithmetic, but try to avoid numerical constellations that may cause numerical disasters, namely opinion values \(x_i, x_j\) with \(|x_i - x_j| = \epsilon\). We can try to avoid such constellations by an exclusive use of random start distributions: For such a distribution, the probability that there are opinion values \(x_i(0), x_j(0)\) with \(|x_i(0) - x_j(0)| = \epsilon\), equals zero. Exclusive use of random start distributions was the computational strategy in Hegselmann & Krause (2002). The approach avoids a numerical disaster in the very first updating step. However, that is no guarantee that in later periods the \(\epsilon\)-insider/outsider distinction is always correctly computed. Because of the random start, we will never see any violated asymmetries that indicate miscalculations. But indetectability does not mean non-existence. As a consequence, we get a kind of unintended and uncontrolled noise.

- We use the floating-point arithmetic even together with equidistant start distributions, but try again to avoid the critical constellations, i.e., opinions \(x_i, x_j\), such that \(|x_i- x_j| = \epsilon\). That was our computational strategy in Hegselmann & Krause (2015). There we used a very special equidistant start distribution, that we called expected value start distribution. The expected value start distribution idealises directly and ‘deterministically’ a uniform random start distribution over the range \([0,1]\): the \(i^{th}\) opinion is exactly the value, that we would get as the average of the \(i^{th}\) opinion over infinitely repeated and then ordered uniform random start distributions of \(n\) opinions. An expected value distribution of \(n\) opinions starts with

Working with an expected value start distribution instead of repeated randomly started runs, is sometimes a fruitful methodological approach: It minimises the danger to blurr interesting effects by averaging. Additionally, if there is an effect that needs explanation, one can directly study the unique single runs that produced the effect. In Hegselmann & Krause (2015) we applied that approach (as we think successfully) and used a start distribution according to Equation 10 with \(n=50\). As a consequence, the distance between neighbouring opinions is always \(1/51 = 0.\overline{0196078431372549}\). Given that distance, none of the 50 opinions in \(X(0)\) is for any \(\epsilon = 0.01,0.02,...,0.4\) at the bounds of confidence of any other opinion. As to the very first updating step, we are therefore computing on safe ground. And as to the later periods, there is some evidence for justified hope: If we calculate an \(\epsilon\)-diagram as the one in Figure 4, but now for an expected value start distribution with \(n = 50\), then we get a diagram that is completely mirror symmetric (cf. 2015 p. 487). As said, however, symmetry is only a necessary, not a sufficient condition for numerical correctness. Additionally, the symmetry check is a check by visual inspection only, and there may be asymmetries that are too small to catch the eye.28\[ x_{i}(0) = \frac{i}{n+1}, \text{ for } i = 1, \dots, n.\] \[(10)\] - The problem in the dynamics in Figure 3 is that an \(\epsilon\)-insider is mistaken as an outsider; the problem is not that an \(\epsilon\)-outsider is mistaken as an insider. This asymmetry is typical and suggests a numerical trick: We stick to floating-point arithmetic, but as a precaution we always add a tiny amount \(\Delta_\epsilon\) to \(\epsilon\)—sufficiently much to get the set of \(\epsilon\)-insiders right. The precaution works astonishingly well. In the last years, in many contexts and almost routinely, we used a \(\Delta_\epsilon = 10^{-12}\). The (correct) process in Figure 3, bottom is calculated by a NETLOGO program that uses the trick.29 That the trick works, can be checked by a safe method that does not use the trick. The method will be described below. But here and in general we would like to know (and often have to know) why, when and for which \(\Delta_\epsilon\)-values the trick works without producing the complimentary mistake: taking an \(\epsilon\)-outsider for an insider. It is a trivial task, to calculate for a process \(\langle X(0),\epsilon \rangle\) a value for \(\Delta_\epsilon\) that would numerically corrupt the process right from the start. If \(X(0)\) is an equidistant start distribution, it might even happen that the numerical corruption that is caused by a too large \(\Delta_\epsilon\), works symmetrically and, therefore, is practically undetectable.

- We abandon floating-point arithmetic altogether, and resort to an exact alternative: fractional arithmetic that restricts itself to the exclusive use of rational numbers as fractions of integers. Such numbers can always be exactly represented. All computations are then done as fractional operations. In the computation of a BC-process that easily leads to numerators and denominators in the hundreds of millions—an expression swell (a well known general problem of the exact fractional approach) that requires a permanent and complicated search for expression simplifications. To avoid necessities for rounding since otherwise numerators and denominators become too big to be representable by a bit string of predefined length (for instance the usual 64 bits format), we need to be able to use integers of arbitrary length.

Such a fractional approach with integers of arbitrary length is technically possible. With fractional arithmetic, we operate numerically on completely safe ground. But the solution comes at a cost in terms of computation speed: Fractional arithmetic is comparatively slow and takes much more time than floating-point arithmetic. For tasks that can reasonably be done by both methods, the safe fractional method may easily need one or two orders of magnitudes more time. MATHEMATICA and JULIA allow computations in fractional arithmetic. NETLOGO does not.

In the next section we will turn to the non-monotonicities that we overlooked in Hegselmann & Krause (2002). Soon it will become clear, that analysis and understanding of the non-monotonicities requires computationally a fractional approach.

A New Key Concept: \(\epsilon\)-Switches

The best way to understand the wild behavior of BC-processes \(\langle X(0),\epsilon \rangle\) in detail, is to start with very simple (and seemingly unrelated) questions: Given a certain start distribution \(X(0)\), how many different processes \(\langle X(0),\epsilon \rangle\) exist? How many values of \(\epsilon\) are there that make a difference? Is it a finite number, an infinite number? And how can we find them all, or at least some of them?

The example \(X(0) = \langle 0, 0.18, 0.36, 0.68, 1.0 \rangle\)

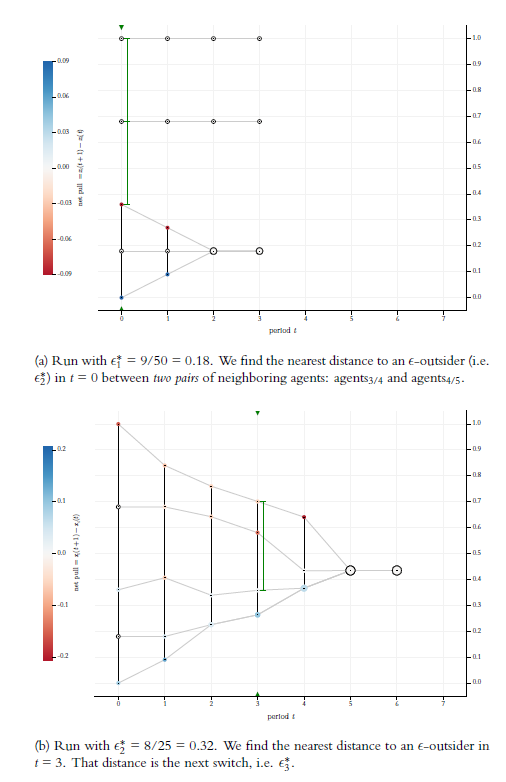

As an example, let us take the start distribution \(X(0) = \langle 0, 0.18, 0.36, 0.68, 1.0 \rangle\).30 For \(\epsilon = 0\), simply nothing will happen. Since \(X(0)=X(1)\), the dynamics is stable in \(t=1\). Now we start to increase the value of \(\epsilon\). What is the smallest value of \(\epsilon\) that makes a difference? An \(\epsilon = 0.1\) would not make any difference—-still, nothing would happen, the same sets of \(\epsilon\)-insiders, the same trajectories as for \(\epsilon = 0\). Obviously, the very first strictly positive \(\epsilon\)-value that really makes a difference, is an \(\epsilon\)-value that equals the distance to a nearest \(\epsilon\)-outsider in the profile \(X(0)\). In our example that is the distance \(0.18\). We find this distance between two pairs, namely for \(|x_2(0) - x_1(0)|\) and \(|x_3(0) - x_2(0)|\). Figure 5a shows the dynamics for \(\epsilon = 0.18\). Compared to the situation for \(\epsilon = 0\), the value \(\epsilon^*= 0.18\) is a kind of switch for the given start distribution: Once \(\epsilon\) reaches that value, the insider/outsider composition and thereby the network structure changes. At least one new link between two agents is established that was not there before. As a consequence, we get, compared to the process \(\langle X(0),\epsilon=0 \rangle\), a different BC-process, namely the one in Figure 5a.

By inspection of Figure 5a it is clear, that a further increase of \(\epsilon\) to \(\epsilon = 0.2\), would not make any difference—we would get exactly the same process that we see already in Figure 5a, the process that is generated by the first switch. The next and nearest larger \(\epsilon\) that again changes the process, is the one that is equal to the distance to the nearest \(\epsilon\)-outsider that we can find in the entire process \(\langle X(0),\epsilon = 0.18 \rangle\) of Figure 5a. Such an \(\epsilon\) is a second switch, that again establishes a new link that did not exist before. By inspection of Figure 5a, it is clear which \(\epsilon\)-value that is: It is an \(\epsilon\) that equals the distance between agent\(_3\) and agent\(_4\) and the distance between the agent\(_4\) and agent\(_5\) right at the start, namely \(|x_5(0) - x_4(0)| = |x_5(0) - x_4(0)| = 0.32\). The green vertical lines in Figure 5a show the two distances to nearest \(\epsilon\)-outsiders. We make this value our new starting point for finding the nearest larger \(\epsilon\)-value that makes a difference, and so forth.

More precisely, the next and nearest \(\epsilon\)-outsider we are looking for, is the minimum element in the set of all distances to \(\epsilon\)-outsiders of all agents over all periods \(t\) for the BC-process \(\langle X(0), \epsilon \rangle\). To that minimum element we will refer as \(\delta_{min}^{out} \bigl(X(0), \epsilon \bigr)\). In formal terms:

| \[ \delta_{min}^{out} \bigl(X(0), \epsilon \bigr) = min \Big\{|x_{i}(t) - x_{j}(t)| \Big \vert \, t = 0, 1, \dots, \bar t \,; \, i = 1,\dots, n; \, j \in O\bigl(i,X(t), \epsilon \bigr) \Big\}\] | \[(11)\] |

Search for \(\epsilon\)-switches: The algorithm that finds them all

At this point, probably inevitably, one gets the idea of an algorithmic search for minimal distances to \(\epsilon\) outsiders. For a given start distribution \(X(0)\) we start with \(\epsilon = 0\), and then search for \(\delta_{min}^{out}\), the distance to a nearest \(\epsilon\)-outsider. Doing that, we find \(\epsilon_1^*\), the first switch that, compared to the process \(\langle X(0), \epsilon = 0 \rangle\), makes a difference. For our start distribution we find \(\epsilon^*_1 = 0.18\). That is the start of a loop. Now we search \(\delta_{min}^{out}\) in \(\langle X(0), \epsilon_1^* \rangle\) and thereby find the second switch \(\epsilon_2^* = 0.32\). This second switch generates the BC-process \(\langle X(0),\epsilon^*_2 \rangle\) shown in Figure 5b. If we go, period by period, through all the distances to \(\epsilon\)-outsiders, we will find (marked by the green vertical line) the distance to the nearest \(\epsilon\)-outsider in \(t=3\), namely the distance between \(x_5(3)\) and \(x_3(3)\). That distance is the third switch \(\epsilon_3^*\). We use that switch to compute the process \(\langle X(0),\epsilon^*_3 \rangle\), in which, then, we search for \(\delta_{min}^{out}= \epsilon^*_4\), and so the loop goes on.

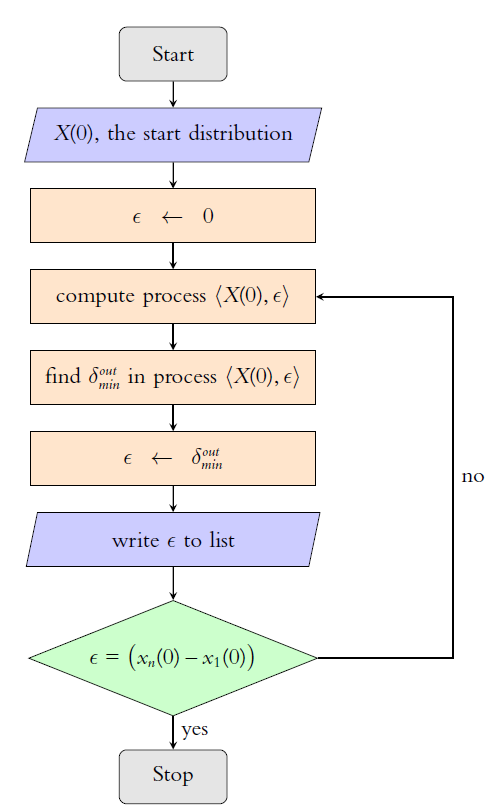

As we know directly from \(X(0)\) the first switch, so we know directly from \(X(0)\) the largest switch, namely the distance \(x_n(0)- x_1(0)\), the width of the start profile. For \(\epsilon^* = \big(x_n(0)- x_1(0)\big)\), all agents are linked to all agents already in \(t=0\). In \(t=1\) they have all the same opinion, and the process is stable in \(t=2\). All \(\epsilon > \epsilon^*\) have the same effect, and can’t make any further difference. Therefore, the search algorithm can stop once \(\epsilon^* = \big(x_n(0)- x_1(0)\big)\) is reached. The flowchart in Figure 6 visualises the algorithm.

Here we should stop immediately because of flashing red lights: In Section 3.1 we saw, that the most numerically dangerous situation is a constellation in which opinions lie exactly on the bounds of confidence of other opinions. But obviously our search algorithm creates exactly this type of situation over and over again: Each switch generates a BC-process in which at least two agents have opinions that are exactly and mutually at the upper or lower bounds of their confidence interval. In other words: Without precautions, our algorithm is a recipe for a numerical disaster.

What to do? Of the four options we discussed as possible solutions in Section 3.2, the first two are not applicable: By design and for good reason, our algorithm creates the constellation that the two options try to avoid. The third option adds a tiny amount \(\Delta_\epsilon\) to \(\epsilon\) to avoid the error of missing an \(\epsilon\)-insider. But this can lead to a complementary problem, namely mistaking an \(\epsilon\)-outsider for an \(\epsilon\)-insider—and we simply do not know what \(\Delta_\epsilon\) would be too much. Consequently, there is no other option than the costly solution of option four: All computations of our algorithm have to be done as fractional arithmetic, and it has to be done with integers of arbitrary length. This is exactly what we are going to do. And it is also what we have already done without mentioning it in the calculation of the processes in Figure 5above. Otherwise we would be heading for numerical disaster with the second switch: Looking at 5a, we see that the second switch equals \(0.32\). But when using floating-point arithmetic, the computer get’s it wrong. For \(|1.0 - 0.68|\), i.e., the distance between agent\(_5\) and agent\(_4\) in \(t=0\), we get \(0.31999999999999995\); for \(|0.68 - 0.36|\), i.e., the distance between agent\(_4\) and agent\(_3\), we get \(0.32000000000000006\). Both results are obviously wrong. Since the first distance is smaller than the second, the algorithm would return \(\epsilon_2^* = 0.31999999999999995\). In the next step we would get \(\epsilon_3^* = 0.32000000000000006\)—and we have a completely corrupted list of switches right from the start.

Therefore, from now on, we will compute consistently on numerically safe ground: fractional arithmetic, and nothing else. Table 1 gives an example. It shows all exact fractional results for the BC-process that starts with our example start distribution and an \(\epsilon\)-value that equals \(0.32\), in fractional terms \(8/25\), a value that we already identified as \(\epsilon_2^*\), the second switch for the given start distribution.

| agent | \(t=0\) | \(t=1\) | \(t=2\) | \(t=3\) | \(t=4\) | \(t=5\) | \(t=6\) |

|---|---|---|---|---|---|---|---|

| 1 | 0/1 | 9/100 | 203/900 | 569/2160 | 63307/172800 | 1669/3840 | 1669/3840 |

| 2 | 9/50 | 9/50 | 203/900 | 569/2160 | 63307/172800 | 1669/3840 | 1669/3840 |

| 3 | 9/25 | 61/150 | 407/1200 | 573/1600 | 63307/172800 | 1669/3840 | 1669/3840 |

| 4 | 17/25 | 17/25 | 289/450 | 6269/10800 | 18719/43200 | 1669/3840 | 1669/3840 |

| 5 | 1/1 | 21/25 | 19/25 | 631/900 | 13841/21600 | 1669/3840 | 1669/3840 |

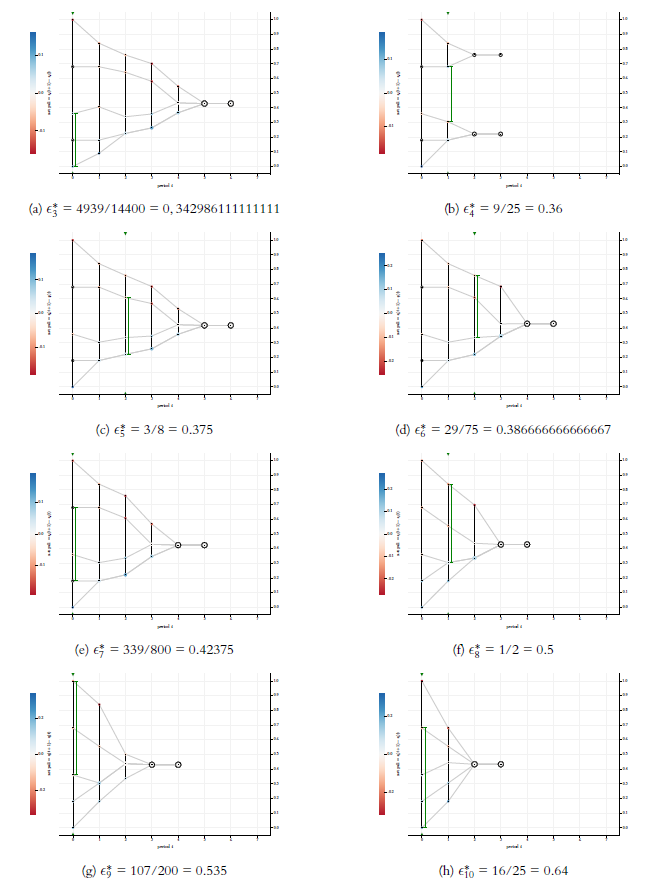

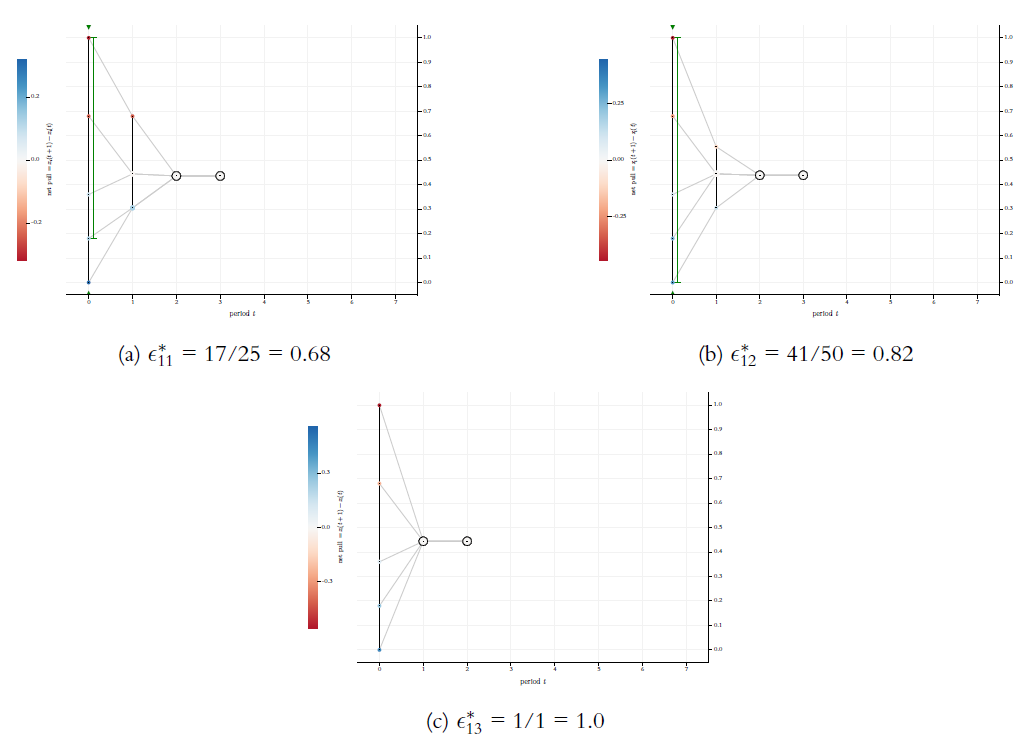

When we run the algorithmic search for the \(\epsilon\)-switches of our example start distribution \(X(0)\), we get a total of 13 switches. They are listed in Table 2. The table displays the exact rational values and their decimal representation as floating-point numbers (64 bits). The 13 switches allow to initialise 13 different BC-processes. The first two are shown in Figure 5. Figures 7 and 8 show the remaining 11 possible BC-processes. The 13 processes exhaust all possibilities for BC-processes that start with \(X(0) = \langle 0, 0.18, 0.36, 0.68, 1.0 \rangle\)—-nothing else is possible.

| switch | exact | float64 |

|---|---|---|

| \(\epsilon_1^*\) | 9/50 | \(0.18\) |

| \(\epsilon_2^*\) | 8/25 | \(0.32\) |

| \(\epsilon_3^*\) | 4939/14400 | \(0,342986111111111\) |

| \(\epsilon_4^*\) | 9/25 | \(0.36\) |

| \(\epsilon_5^*\) | 3/8 | \(0.375\) |

| \(\epsilon_6^*\) | 29/75 | \(0.386666666666667\) |

| \(\epsilon_7^*\) | 339/800 | \(0.42375\) |

| \(\epsilon_8^*\) | 1/2 | \(0.5\) |

| \(\epsilon_9^*\) | 107/200 | \(0.535\) |

| \(\epsilon_{10}^*\) | 16/25 | \(0.64\) |

| \(\epsilon_{11}^*\) | 17/25 | \(0.68\) |

| \(\epsilon_{12}^*\) | 41/50 | \(0.82\) |

| \(\epsilon_{13}^*\) | 1/1 | \(1.0\) |

Comparing the 13 possible processes, the probably most surprising phenomenon regards the switches \(\epsilon_3^*\), \(\epsilon_4^*\), and \(\epsilon_5^*\) (cf. Figures 7a, 7b, and 7c): For \(\epsilon_3^*\) the process reaches a consensus. But for the larger \(\epsilon_4^*\) the consensus is breaking up—a clear cut case of a counter intuitive non-monotonicity. For the next switch \(\epsilon_5^*\) we get consensus again. Though in a less spectacular way, all 13 switches make a difference for the trajectories of the BC-processes that they generate. Going through the 13 runs in Figures 5, 7, and 8, we see a huge variety of differences:

- The number of final clusters may decrease (\(\epsilon_1^* \rightarrow \epsilon_2^*\), \(\epsilon_4^* \rightarrow \epsilon_5^*\)) or increase (\(\epsilon_3^* \rightarrow \epsilon_4^*\)).

- The width of the final cluster structure, i.e., \(x_n(\bar t)-x_1(\bar t)\), may decrease (\(\epsilon_1^* \rightarrow \epsilon_2^*\), \(\epsilon_4^* \rightarrow \epsilon_5^*\)) or increase (\(\epsilon_3^* \rightarrow \epsilon_4^*\)).

- The exact position of a consensus in \([0,1]\) may change (for instance \(\epsilon_5^* \rightarrow \epsilon_6^*\), \(\epsilon_{11}^* \rightarrow \epsilon_{12}^*\)).

- For some \(t < \bar t\), the width of \(X(t)\), i.e., \(x_n(t)-x_1(t)\), may decrease (for instance \(\epsilon_2^* \rightarrow \epsilon_3^*\) for \(t=4\)) or increase (for instance \(\epsilon_3^* \rightarrow \epsilon_4^*\) for \(t=3\)).

- A profile \(X(t)\) that was an \(\epsilon\)-profile beforehand, may get an \(\epsilon\)-split (for instance \(\epsilon_3^* \rightarrow \epsilon_4^*\) for \(t=1\)); a profile that had an \(\epsilon\)-split, may become an \(\epsilon\)-profile (for instance \(\epsilon_4^* \rightarrow \epsilon_5^*\) for \(t=1\)).

- The time to stabilisation may decrease (\(\epsilon_3^* \rightarrow \epsilon_4^*\), \(\epsilon_5^* \rightarrow \epsilon_6^*\), \(\epsilon_7^* \rightarrow \epsilon_8^*\), \(\epsilon_9^* \rightarrow \epsilon_{10}^*\), \(\epsilon_{12}^* \rightarrow \epsilon_{13}^*\)) or increase (\(\epsilon_1^* \rightarrow \epsilon_2^*\), \(\epsilon_4^* \rightarrow \epsilon_5^*\)).

The observed differences in the trajectories are not mutually exclusive, and probably there are more differences than the ones that I listed.

Beyond the example: General observations and conjectures on \(\epsilon\)-switches

The algorithm that finds the \(\epsilon\)-values that make a difference in our example start distribution can be applied to any start distribution. I have applied it to countless start distributions—random, regular, expected value, lots and lots of start distributions I had found interesting for some reason. Result: the algorithm always listed finitely many increasingly large switches, and then stopped. As analytical reflections already showed for the example in Section 4.1, the first switch equals the smallest distance between neighbouring opinions in the (strictly ordered) start profile. The last switch found and at the same time the largest switch equals the width \(x_n(0)-x_1(0)\) of the start profile. The fact that the algorithm stops, and thereby leads to a finite list of switches, is not self-evident (at least not for me): perhaps there could be an infinite number of switches between a smallest and a largest switch. However, in none of my computational experiments was this the case. But I have no proof that the number of switches is always finite.

The finite list of strictly increasing \(\epsilon\)-switches that the algorithm finds, leads to a complete segmentation of \([0,1]\) by the following sequence of intervals (right-open except for the last one):

| \[ \langle \ [0,\epsilon_1^*)\ , \ [\epsilon_1^*,\epsilon_2^*)\ ,\ \dots, \ [\epsilon_{s-1}^*,\epsilon_s^*) \ , \ [\epsilon_s^*,1] \ \rangle \ \text{with} \ 0 < \epsilon_1^* < \epsilon_2^*,\ \dots,\ \epsilon_s^* \leq 1.\] | \[(12)\] |

The list of \(\epsilon\)-switches yields a gapless sequence of segments \([\epsilon_k^*, \epsilon_{k+1}^*)\). In what follows, we will often speak of predecessor or successor switches. That then refers to the switch \(\epsilon_{k-1}^*\) or \(\epsilon_{k+1}^*\) that in the ordered list of switches precedes or succeeds \(\epsilon_k^*\).

For any given start distribution, all \(\epsilon\)-values from the same segment lead to exactly the same BC-process; processes with \(\epsilon\)-values from different segments, on the other hand, are never the same. Overall, for any given start distribution, it is possible to get a complete overview of which BC-processes are possible at all. We can, switch by switch, go through all possible processes and look for properties that interest us:

- Is a consensus being destroyed again?

- Is the number of final clusters increasing again?

- Is the final profile width increasing again?

- How long does it take to stabilise?

- Which switch produces the first consensus that is not destroyed by any subsequent switch?

- In which period was the next switch found?

The answers to all these questions can be generalised in a precise sense: Assuming that the \(k^{th}\) switch \(\epsilon_k^*\) of a start distribution \(X(0)= x_1(0), \dots , x_i(0), \dots, x_{n}(0)\) destroys a consensus, then this is also true for the \(k^{th}\) \(\epsilon\)-switch of a start distribution \(X^\diamond (0)\), which we get from \(X(0)\) by a transformation of the form

| \[ x_i^\diamond(0) = \alpha \cdot x_i(0) + \beta, \text{ with } \alpha > 0 \text{ and } \alpha, \beta \in \mathbf{R}\] | \[(13)\] |

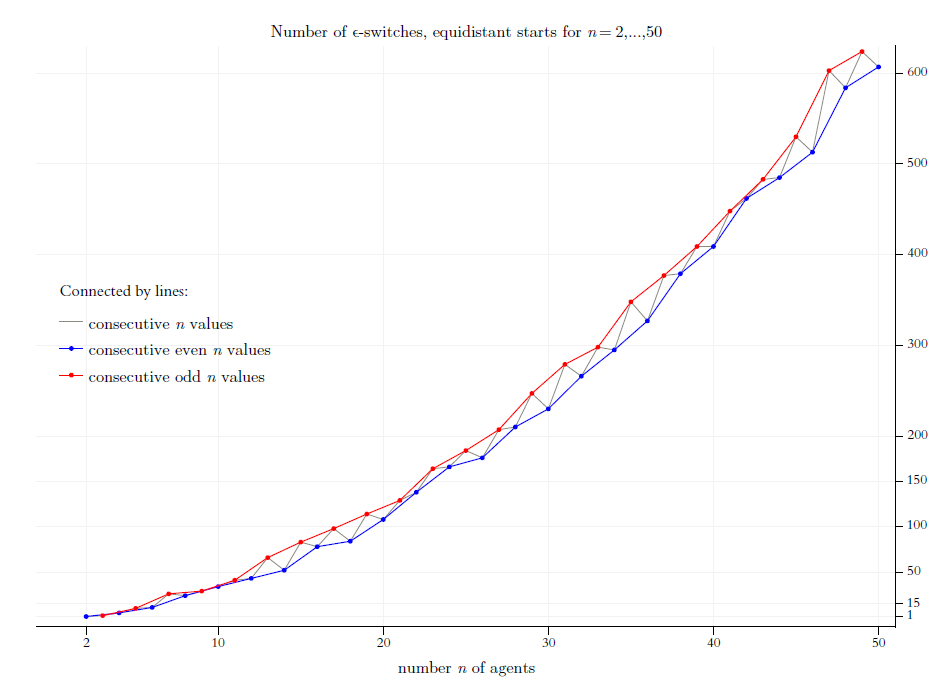

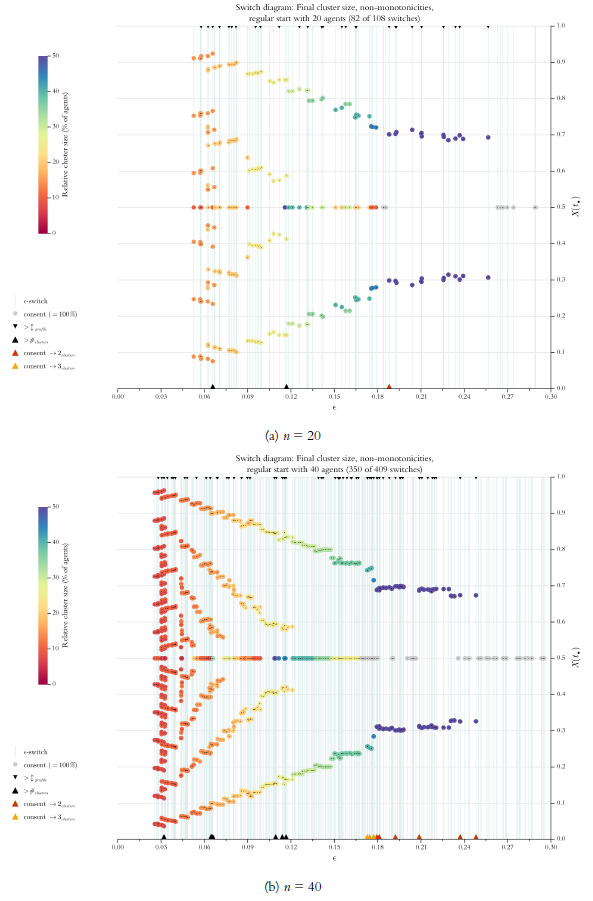

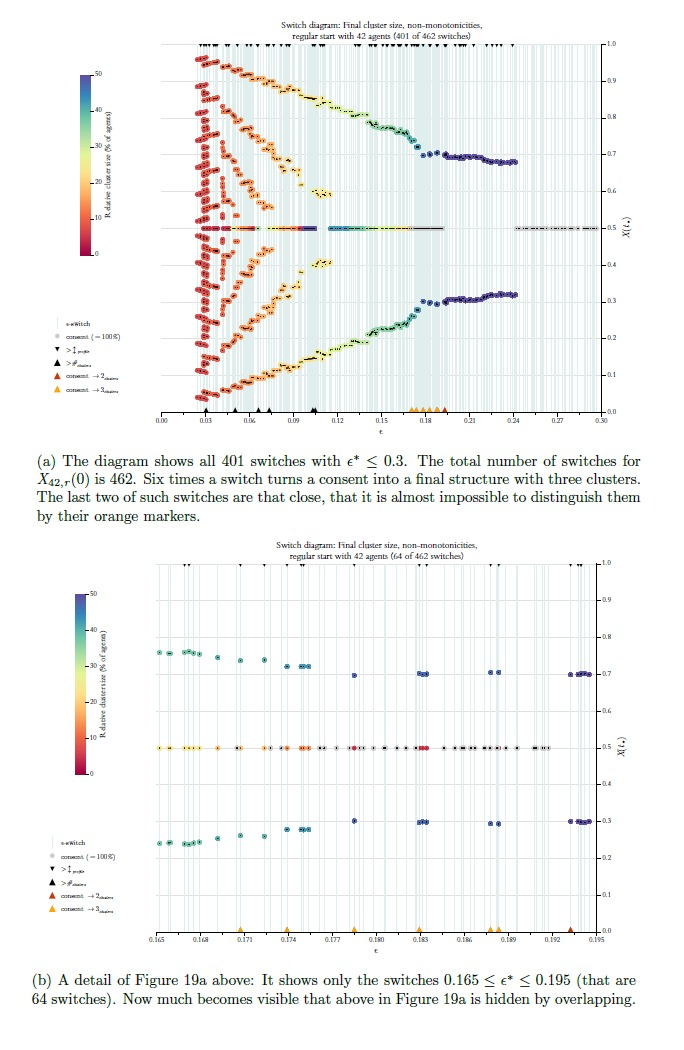

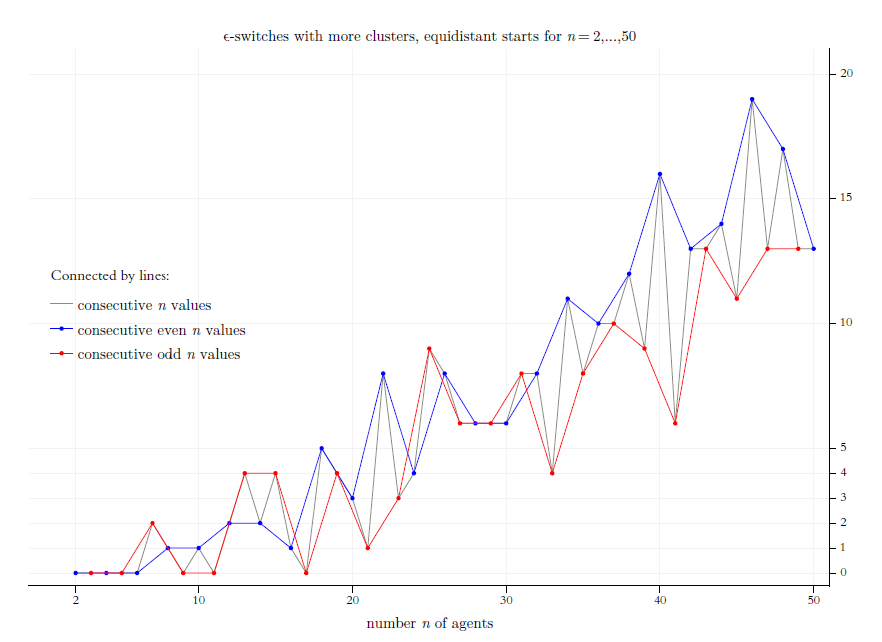

In our example above in Section 4.1, only once a consensus is destroyed by the next larger switch. There are much wilder BC-processes: For the same start distribution, it may happen several times that a consensus is destroyed again by a successor switch. There are several ways to demonstrate that, for instance by the analysis of a major set of random start distributions. Here in this article I will use another strategy: We will look at all regular start distributions for \(n=2, \dots, 50\). Throughout the text, a regular start profile with \(n\) agents will be occasionally referred to as \(X_{r,n}(0)\). In a first step, Figure 9 shows their respective number of \(\epsilon\)-switches. The \(x\)-axis shows the \(n\)-values, the \(y\)-axis the number of \(\epsilon\)-switches that our algorithm finds for a regular start distribution with the respective \(n\). As shown and motivated in Appendix B, Universal characteristics of equidistant start distributions, the number of switches is universal: Whatever the specific equidistance \(c\), whatever the range of the profile, any equidistant start profile with \(n\) agents has the same number of switches. And even more: For all equidistant start distributions with a given \(n\), the properties of the \(k^{th}\) of the \(s\) switches are always the same.31 Thus for example, what we see in Figure 9 also holds for an expected value start distribution as it would for a start distribution with an equidistance of \(c=1\), thereby leaving the unit interval as our opinion space.32

In Figure 9 the blue graph connects the numbers of \(\epsilon\)-switches for consecutive even numbers of \(n\); the red graph does that for odd numbers. The grey graph connects directly the \(y\)-values for consecutive values of \(n\). For \(n=49\) the algorithm finds \(624\) switches, for \(n=50\) there are \(607\).

By inspection of Figure 9 we see: For increasing even values of \(n\), and as well – but separately – for increasing odd values of \(n\), the number of switches increases monotonically. And in both cases the increase is more than linear.

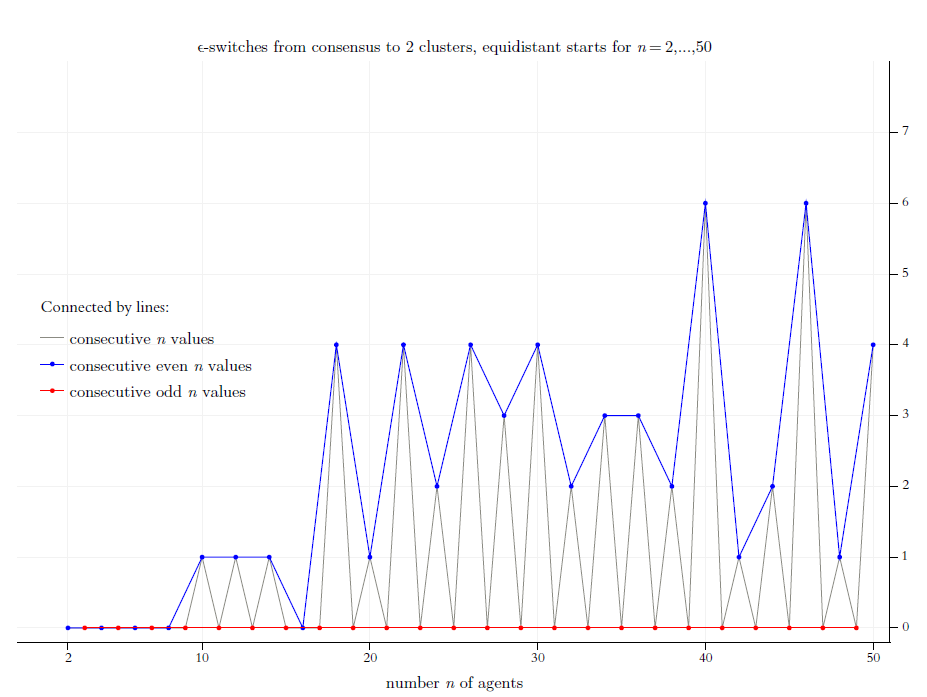

In Figure 10, we start focusing on the ‘wild’ behavior of BC-processes: We look for switches that break up a consensus and turn the consensus into strict polarisation (i.e., a final stable cluster structure with exactly two clusters). Figure 10 shows how many such switches there are. As in Figure 9, the \(x\)-axis shows the increasing values \(n=2, \dots, 50\) of the regular start distributions that the algorithm searches for such switches. The \(y\)-axis shows the results. Again, via the colors blue and red, we distinguish even and odd values of \(n\). If you look at Figure 10, two things immediately jump to the eye: For even values of \(n\) there are quite a lot of destroyed consents; for odd values there is none. As to the even \(n\)-values, for \(n=40\) and \(n=46\) it is six times each that a consecutive switch leads to polarisation while the predecessor switches generated consensus. And, by extrapolation, it looks as if (though stepwise) for increasing (even) values of \(n\) there are higher numbers of such cases.

Why are there no such cases for odd values of \(n\)? The explanation is closely related to our earlier discussion of symmetrical start profiles (cf. Section 3.1): If we start with a regular profile, and \(n\) being an odd value, then there is always a centrist agent exactly in the middle of the opinion profile (possibly as a member of a centrist cluster \(>1\)). Whatever the confidence level \(\epsilon\), the centrist agent will always stay in the center. As a consequence, a constellation with an empty center, as required for a strict polarisation, is not possible.

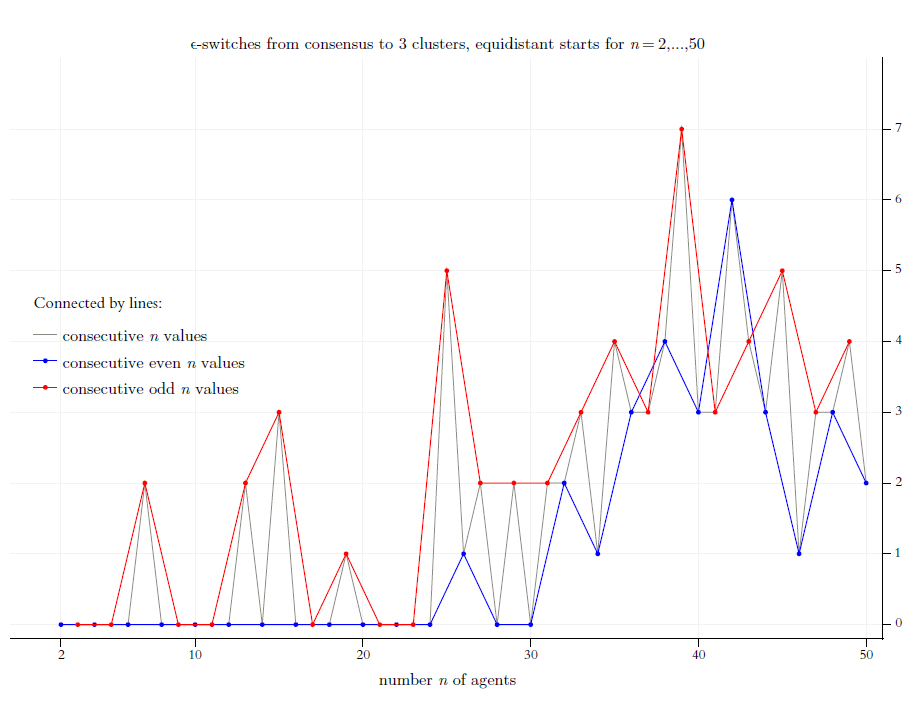

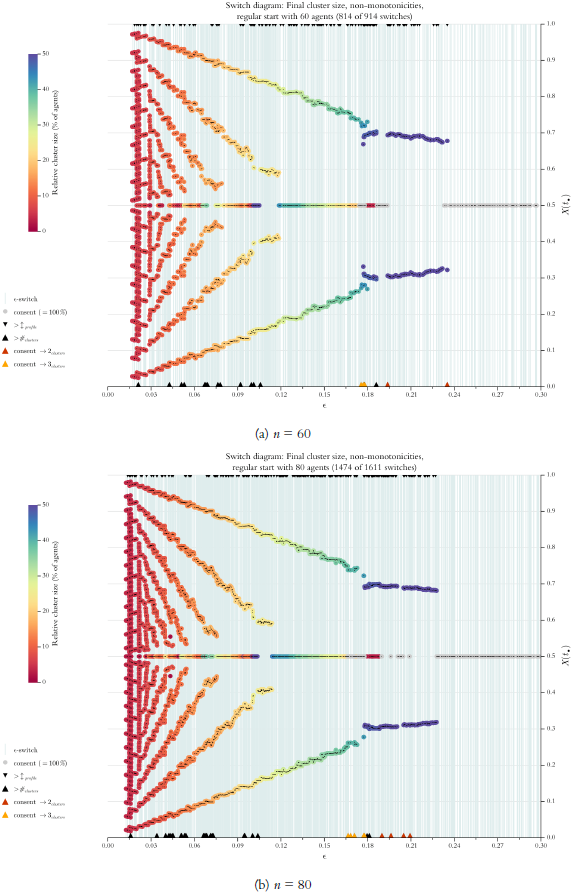

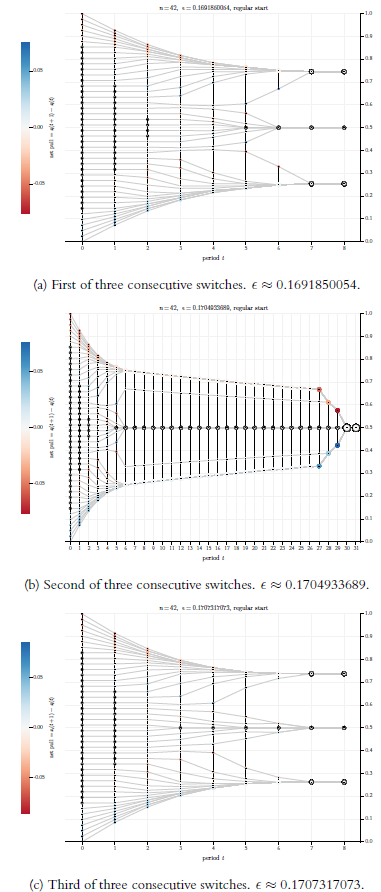

Figure 11 focuses on a second, somehow even more dramatic type of destroyed consent: The consecutive switch generates three final clusters. Now the odd values of \(n\) seem to be much better in producing such a structure. For even \(n\)-values \(<26\) it never occurs that after a consent the next switch generates a final structure of three clusters. However, for \(n=42\) it happens six times. Based upon our perfect and complete knowledge of all switches, we can also look for switches that turn a consent into a final cluster structure with more than three clusters. (But so far I never found such a case in any regular start distribution.)

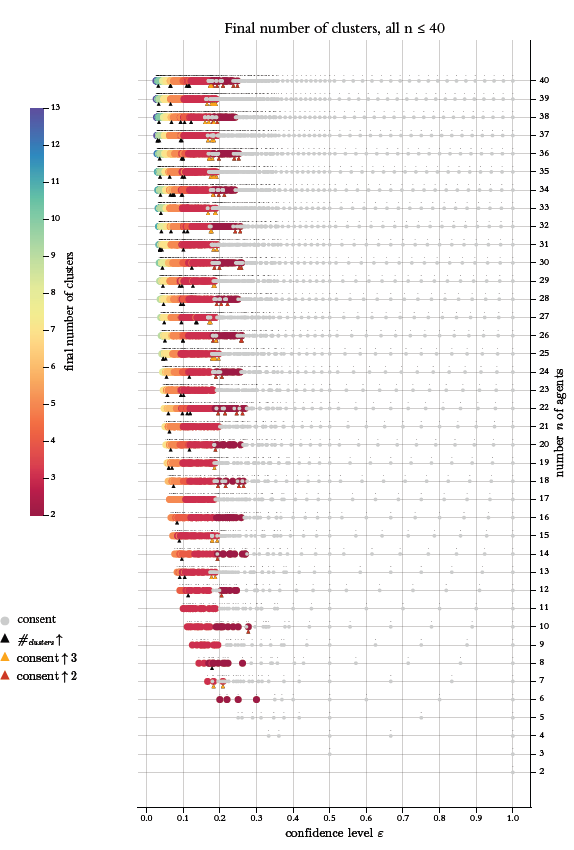

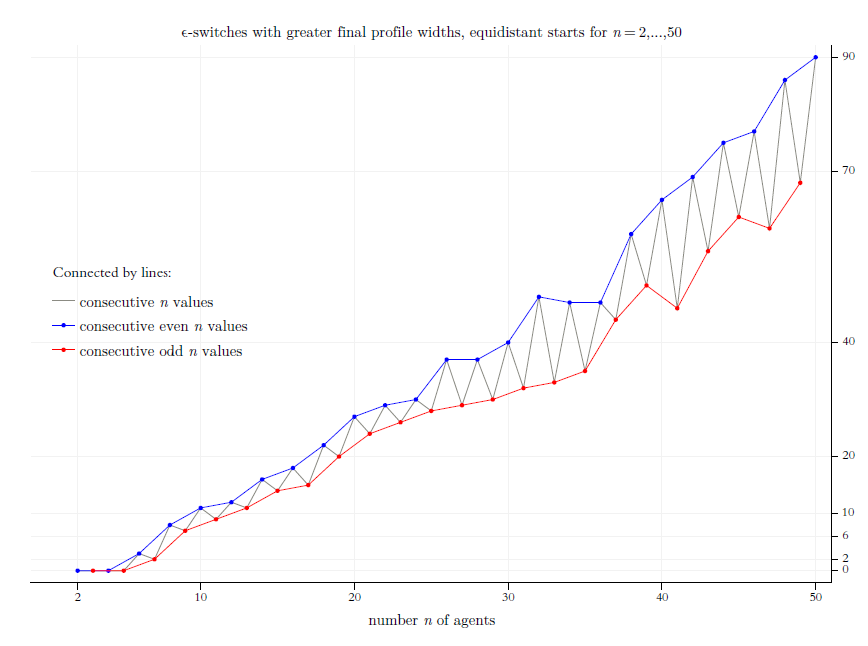

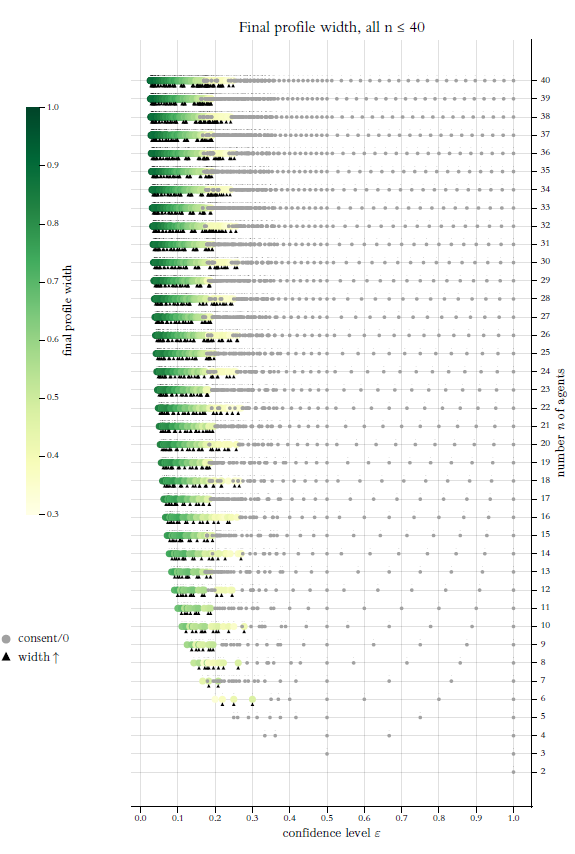

The Figures 9 to 11 show for regular start profiles \((n=2,3, \dots, 50)\) the number of their \(\epsilon\)-switches and how many of them destroy consents. But the figures do not give any information about the exact positions of the switches in the interval \([0,1]\). Figure 12 shows by a new type of diagram all sorts of non-monotonicities together with the positions of the switches that are generating them. On the \(x\)-axis of Figure 12 we have the complete range of possible \(\epsilon^*\)-values, i.e., \([0,1]\). The \(y\)-axis shows the increasing values of the number \(n\) of agents in a regular start profile \(X_{n,r}(0)\). Thus, each switch is a certain point \(\langle x,y \rangle\) in the coordinate system thus given.

At this point, we place a colored circle that indicates by its color the specific feature that we want to visualise with the diagram, namely the final number of clusters. As the \(y\)-axis represents the \(n\) values, all the switches of a start distribution for a given \(n\) are lined up horizontally. The colormap together with legends (both to the left) give the information how to read the specific diagram. A bit above the often overlapping, horizontally lined up circles, there are very small black dots. The dots indicate the exact position of the switch directly below. Due to the minimal size of the black dots, they overlap much less (if at all) than the colored circles. As a consequence, we get a sense for the different densities with which the switches are distributed in the interval \([0,1]\).

In Figures 9 to 11 we looked at regular start profiles with \(n=2,3, \dots, 50\); in Figure 12 we reduce the range to \(n=2,3, \dots, 40\). The reason is better visibility and readability of details. (Admittedly, even after the range reduction the graphics is a bit packed.)

Already a cursory glance at Figure 12 shows that the positional structure of the switches clearly has a pattern. For each \(n\), the very first switch has an \(x\)-axis position at \(\epsilon^*_1 = 1/(n+1)\), i.e., the characteristic equidistance of the regular start distribution with \(n\) agents (cf. Equations 9 and 23). That equidistance is, obviously, at the same time the minimum distance to an \(\epsilon\)-outsider in all processes \(\langle X(0), \epsilon=0 \rangle\). As a consequence, for increasing values of \(n\), the first switch gets smaller and smaller, moves left direction \(0\), and, thereby, produces a convex-shaped curve with regard to the positions of the very first switches. As well trivial is the position of the largest switch: from our analytical reflections above we know that the largest switch of a regular start distribution is always \(1\). What stands out most clearly, is a certain positional pattern in between the first and the last switch: At the beginning the positions of the switches look chaotically distributed. But, as we get farther right, the positional distribution becomes completely regular. At least for \(n \geq 10\), a first type of positional regularity starts for switches greater \(\approx 0.4\): There the distance between consecutive switches becomes equidistant, and the size of the equidistance seems to depend upon \(n\), namely decreasing with \(n\). A second type of regularity then starts a bit farther right, namely for switches greater than \(0.5\): Consecutive switches are positioned equidistantly, but with (about?)the size of the equidistance that we see to the left of \(0.5\). Again, the size of the equidistance seems to decrease with \(n\). And finally, whatever the value of \(n\), \(\epsilon = 0.5\), i.e., the middle of the range of opinions for a regular start profiles, is always a switch. In terms of their density, the switches are very much concentrated in the initial range of possible values between \(1/(n+1)\) and \(\approx 0.35\). At the same time, in that region their positional distribution does not seem to follow any type of a regular pattern.

Figure 12 focuses on the final number of clusters that the switches generate. That number is indicated by a color according to the colormap to the left. Consents, i.e., the cases of just one final cluster, get a special treatment: They are indicated by grey circles that are also a little bit smaller than the other colored circles. And the grey circles are drawn last, after all other circles are already drawn. That makes consents more easily visible. Then there are upwards directed triangles in Figure 12. They mark switches that produce specific non-monotonicities: Black triangles hint to switches that lead to final cluster structures with more clusters than the predecessor switch did. An orange triangle marks the switches that, after a consent under the predecessor switch, lead to three clusters. A red triangle hints to a switch that, after a consensus, leads to strict polarisation, i.e., two clusters. The black triangles are always drawn first. As a consequence (and on purpose), they are overdrawn by orange or red triangles in cases of destroyed consents. Thus, the markers of these cases are always visible.

A careful inspection of Figure 12 makes it very clear: For all \(n\), there is always a switch that leads to a consensus that is final in the sense: No successor switch destroys the consent.33 However, the transition from a final plurality (i.e., a major number) of clusters to a final consensus (just one cluster) is wild, chaotic, and non-monotonic. Only for a few values of \(n\), the first consensus switch is also the final one. Normally, from switch to switch, many times the number of final clusters decreases and increases again. The most dramatic cases of this type are the many cases of a back-and-forth of consensus and dissent (the latter in the sense of polarisation or a final structure with three clusters). And there is a difference between even and odd values of \(n\): For odd values the final consensus switch comes for significantly smaller \(\epsilon\)-values.

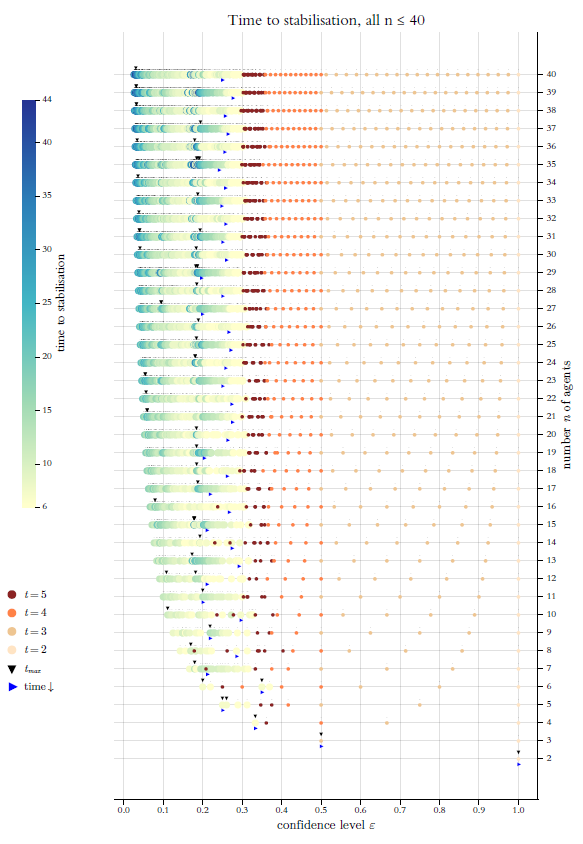

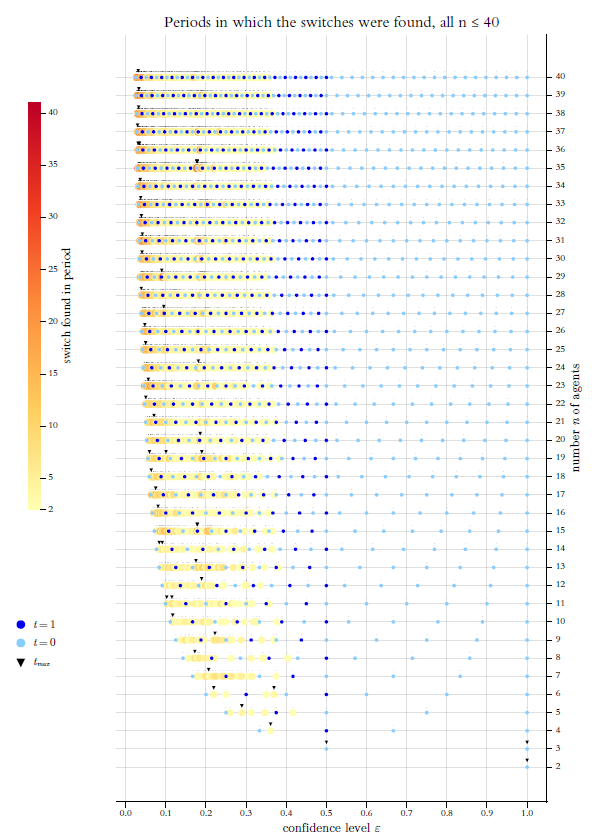

Separate from the main text, Appendix B summarises, in a more formal and technical language, analytical notes and observations on universal characteristics of equidistant start distributions. The appendix also contains additional figures. In the style of Figures 9 to 11: (a) the number of switches that lead to more final clusters than under the respective predecessor switch; (b) the number of switches that lead to final profile widths that are greater than under the predecessor switch. Three further figures, similar in style and structure to Figure 12, show for all switches (a) the final profile widths, (b) the times to stabilisation, and (c) the period in which the switch was found. (Again, what follows in the main text will be understandable without a reading of the appendix.)

A new BC-research tool: \(\epsilon\)-Switch diagrams

Figure 12 gives a general overview: We see the positions of switches and get to know especially the ones that are responsible for certain non-monotonicity effects. But we do not see in detail where exactly the final clusters are located or what the width of a final profile is. For that we need another type of diagram.

From \(\epsilon\)-diagrams to \(\epsilon\)-switch-diagrams

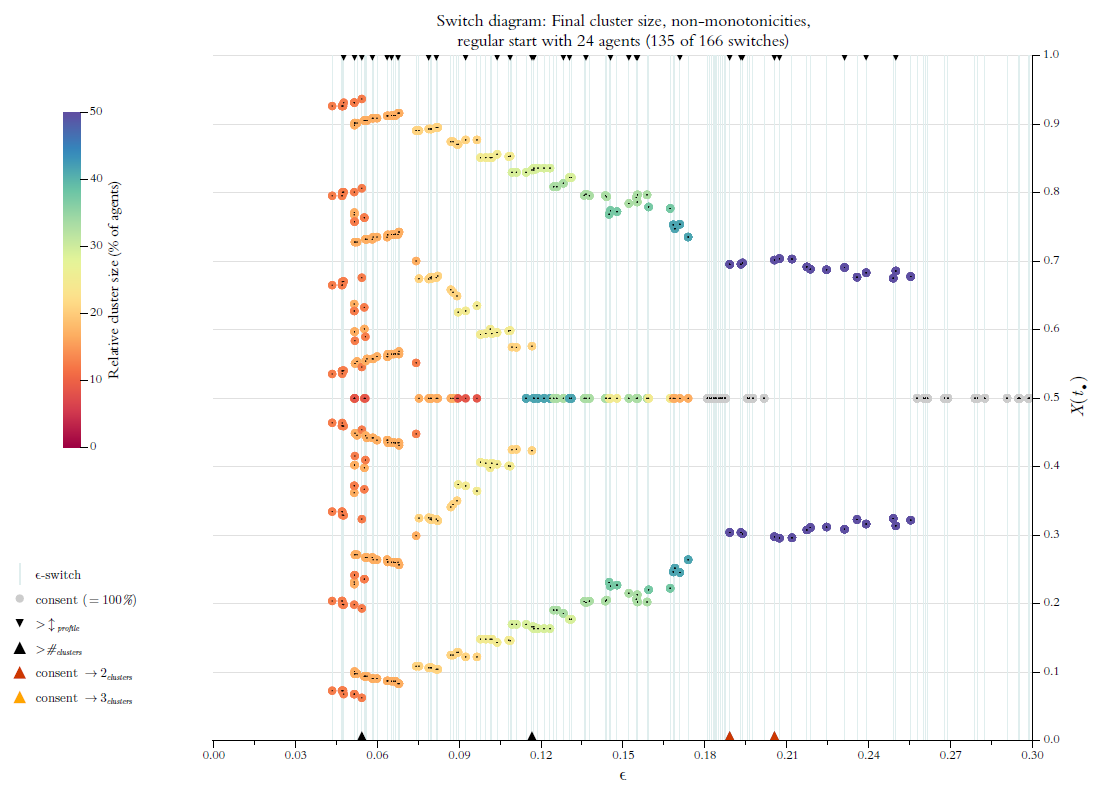

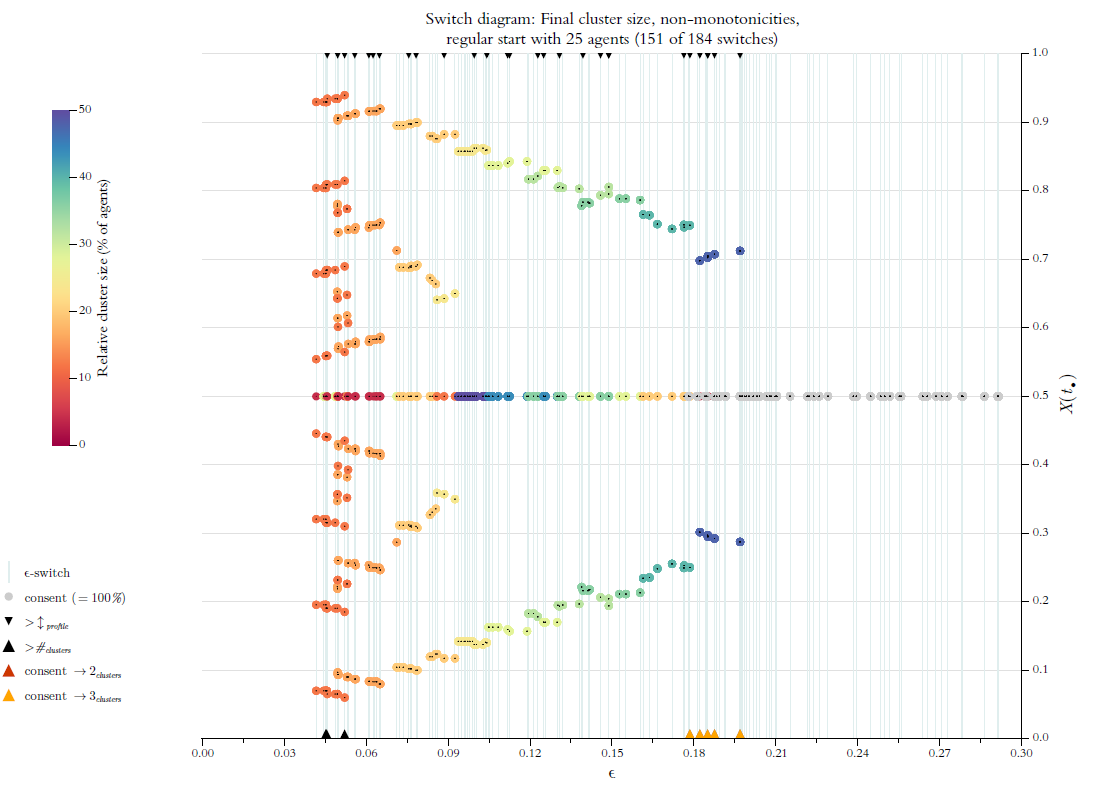

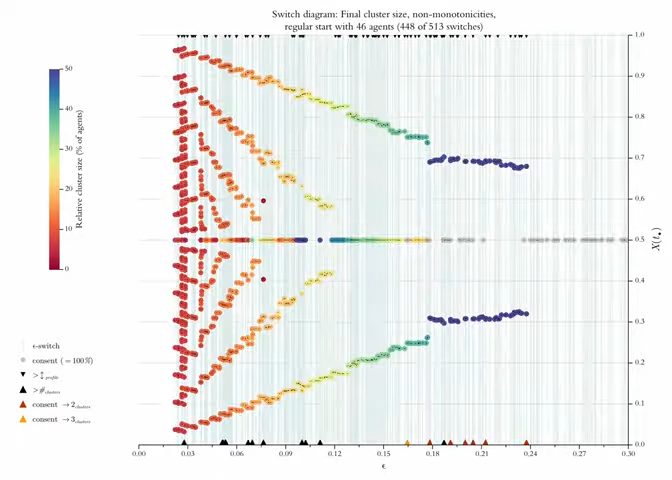

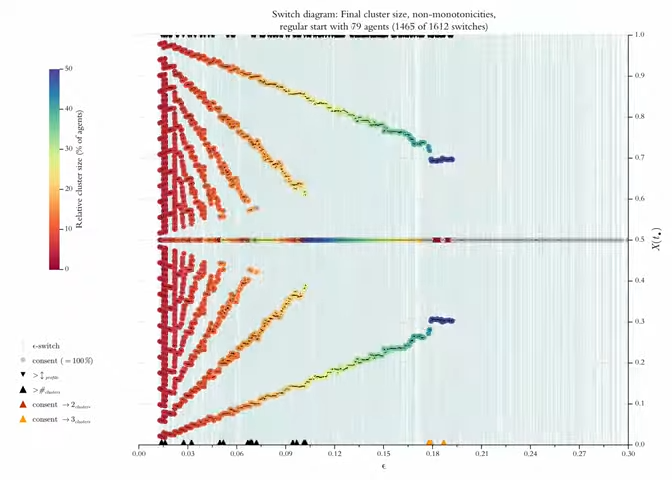

Above, in Figure 4 we used a so called \(\epsilon\)-diagram: For stepwise increasing \(\epsilon\)-values (\(x\)-axis, step size is \(0.1\)) it shows the final cluster structure (\(y\)-axis). Given what we know now, an improved version of this diagram could show the details much more to the point (even in a very literal sense): By our search algorithm we get perfect knowledge of all \(\epsilon\)-switches, and we can compute such a list for any profile \(X(0)\). But then we should also show the final cluster structure solely and exclusively for the \(\epsilon\)-switches, i.e., the \(\epsilon\)-values that make a difference. We will call the improved diagram an \(\epsilon\)-switch diagram (for short switch diagram). Figure 13 is an example.

The thin vertical lines indicate the positions of switches. Colored circles show the position and the relative size of the final clusters. A consensus cluster is colored grey and drawn last. Tiny black dots in the centre of the circles mark the exact position of the respective cluster. At the same time the dots help to distinguish and separate clusters even if their circles overlap. The dots are drawn last. The diagram only shows the switches \(\leq 0.3\). Here that regards \(135\) of a total of \(166\) switches. From Figure 12 we know that with \(\epsilon^* \leq 0.3\) we are already behind the final consensus switch.