The Wisdom of the Small Crowd: Myside Bias and Group Discussion

, ,

and

aUniversity of Groningen, Netherlands; bLudwig-Maximilians-Universität München, Germany

Journal of Artificial

Societies and Social Simulation 26 (4) 7

<https://www.jasss.org/26/4/7.html>

DOI: 10.18564/jasss.5184

Received: 14-Nov-2022 Accepted: 11-Jul-2023 Published: 31-Oct-2023

Abstract

The my-side bias is a well-documented cognitive bias in the evaluation of arguments, in which reasoners in a discussion tend to overvalue arguments that confirm their prior beliefs, while undervaluing arguments that attack their prior beliefs. The first part of this paper develops and justifies a Bayesian model of myside bias at the level of individual reasoning. In the second part, this Bayesian model is implemented in an agent-based model of group discussion among myside-biased agents. The agent-based model is then used to perform a number of experiments with the objective to study whether the myside bias hinders or enhances the ability of groups to collectively track the truth, that is, to reach the correct answer to a given binary issue. An analysis of the results suggests the following: First, whether the truth-tracking ability of groups is helped or hindered by myside bias crucially depends on how the strength of myside bias is differentially distributed across subgroups of discussants holding different beliefs. Second, small groups are more likely to track the truth than larger groups, suggesting that increasing group size has a detrimental effect on collective truth-tracking through discussion.Introduction

Group discussion in general, and argumentation in particular, often plays a critical role in political, social, and scientific communities and can play a key role in decision making and scientific research. Therefore, the understanding of argumentation is the subject of numerous scientific investigations in different research areas in the cognitive and social sciences (Hornikx & Hahn 2012; Oaksford & Chater 2020). These investigations have shown that argumentative contexts are very complex and that it can be more difficult than expected to convince other people with one’s arguments. This is especially true when the participants in a discussion have different prior assumptions about the topic under discussion. Indeed, research has shown that participants in a discussion are influenced by their prior beliefs to such a degree that they favor them over alternatives both in finding new arguments and in evaluating other people’s arguments (Čavojová et al. 2018; Edwards & Smith 1996; Kuhn 1991; McKenzie 2004; Nickerson 1998; Perkins 1985; Stanovich 2021; Taber & Lodge 2006; Wolfe & Britt 2008).

In this paper, following Stanovich (2021), we use the term “myside bias" to refer to the influence of an agent’s prior beliefs on the evaluation and production of information in general, and of arguments in particular. By using this term, we aim to bring together the extensive body of work that has been conducted on the topic of prior belief biases, displaying a variety of terms to refer to such bias in one or another specific context, e.g., either argument production or argument evaluation or information search. Among others, some of the most closely related terms that are in use are: ”confirmation bias" (Gabriel & O’Connor 2022; Rabin & Schrag 1999), “biased assimilation" (Corner et al. 2012; Dandekar et al. 2013; Lord et al. 1979), ”biased/selective processing" (Newman et al. 2018; Shamon et al. 2019), “attitude congruence bias" (Taber et al. 2009), ”refutational processing" (Liu et al. 2015), “defensive processing" (Wood et al. 1995), and ”motivated cognition" (Lorenz et al. 2021). The existence of such a diverse terminology risks concealing the common subject-matter. We hope that our work will contribute to a more unified terminology in the field.

The main focus of this paper is myside-bias in the evaluation of arguments. In this context, research has uncovered the following two effects. On the one hand, discussants overestimate the strength of arguments that support their own prior beliefs or that attack opposing beliefs; on the other hand, discussants underestimate arguments that attack their own prior beliefs or that support opposing beliefs (Corner et al. 2012; Liu et al. 2015; Lord et al. 1979; Newman et al. 2018; Nickerson 1998; Shamon et al. 2019; Stanovich et al. 2013; Stanovich 2021; Stanovich & West 2007; Stanovich & West 2008; Taber et al. 2009; Taber & Lodge 2006; Toplak & Stanovich 2003).

For these reasons, it has been argued that myside-biased individuals risk becoming overconfident in their beliefs and are less willing to revise them, regardless of the truth value of the beliefs in question (Mercier 2017; Mercier & Sperber 2017; Stanovich 2021). Moreover, it has been argued that myside bias contributes to undesirable social phenomena such as attitude polarization (Lord et al. 1979; Newman et al. 2018; Stanovich 2021; Taber et al. 2009). Accordingly, myside bias is expected to have a detrimental effect on the ability of groups of discussants to get to the truth, i.e., on their ability to collectively find the correct answer to a given question.

However, this account ignores the fact that group discussions can provide a deceptive platform for unbiased reasoners who are easily led to hold false beliefs. This point is central to recent theories of reasoning that focus on reasoning in the context of human evolution (Mercier & Sperber 2017; Sperber et al. 2010). From an evolutionary perspective, cognitive devices such as the myside bias, which tests how new information fits with pre-existing beliefs, may provide agents with a mechanism that prevents them from falling prey to deceptive information (Mercier & Sperber 2011; Mercier & Sperber 2017).

The debate about the effects of myside bias also touches on the related and very timely debate about the extent to which group discussions enhance or diminish the collective wisdom derived from the wisdom-of-the-crowds tradition (Surowiecki 2005), and is currently a focus of researchers using both empirical methods (Claidière et al. 2017; Mercier & Claidière 2022; Navajas et al. 2018; Trouche et al. 2014) and formal methods (Hahn et al. 2019, 2020; Hahn 2022; Hartmann & Rafiee Rad 2018, 2020).

The main starting point of this debate is Condorcet’s Jury Theorem (Condorcet 1785), which is about the ability of groups of voters to find the true answer to a binary problem by majority voting. This theorem shows that the probability of the group finding the true answer by majority voting converges to \(1\) as a function of group size, provided a number of conditions are met. In the simplest case, voters should vote non-strategically and independently, and individually vote for the correct answer with probability greater than \(1/2\) (i.e., better than chance). For an overview of jury theorems, see Dietrich & Spiekermann (2022).

Most notably, any communication among a group of discussants destroys the independence of their opinions, thus violating a crucial assumption of Condorcet’s Jury Theorem. As a consequence, one would expect that, all other things being equal, group discussion decreases the ability of groups to track the truth. In this regard, a number of studies have shown that communication does indeed harm collective wisdom (Hahn et al. 2019; Lorenz et al. 2011). In other words, collectives can better track the truth if they simply aggregate their opinions into a single answer without discussing. This would imply that there is a higher chance for a collective to cast a majority of correct votes if the members of the collective do not communicate.

Recently, however, discussions were found, on the contrary, to improve group answers, compared to simply aggregating the opinions that group members originally held. In particular, Mercier & Claidière (2022) found that discussions led to better overall answers for mathematical or factual problems in large groups. This suggests that correct majorities are more likely after discussions than when agents’ original opinions are simply aggregated.

In this regard, researchers have suggested that the myside bias constitutes a driving mechanism that makes group discussions beneficial for collective wisdom. Some have indeed argued that the myside bias can improve the truth-tracking ability of a group of discussants, by increasing the agents’ stubbornness, preventing correct agents from abandoning their correct belief too early and thus fostering a thorough exploration of the different beliefs under considerations (Gabriel & O’Connor 2022). Indeed, while non-biased discussants might easily fall prey to false beliefs, the myside-biased agent attentively assesses external arguments and is only convinced by good enough arguments, checking them against their prior beliefs. Others have suggested that, during a discussion, the myside bias could prompt a fruitful cognitive division of labor between agents at the opposite sides of an issue (Landemore 2012; Mercier 2016; Mercier & Sperber 2017): agents from each side can carefully evaluate arguments that attack their own view and produce counterarguments, thus reducing the likelihood of incorrect beliefs to spread among the discussants.

The above considerations outline two parallel debates, the first one on the effects of myside bias and the second one on the effects of group discussion on collective wisdom, suggesting that there is not yet a clear answer to either of the following two questions:

- Does myside bias help or hinder the ability of groups to track the truth via discussion?

- Does group discussion help or hinder the ability of groups to track the truth?

This paper starts from a modified version of Baccini & Hartmann’s (2022) model of individual myside bias and extends it to develop an agent-based model for group discussions between myside-biased agents that allows us to answer these two questions in detail.

In this regard, our paper provides an epistemic analysis of group discussion, i.e., an analysis of the belief/opinion dynamics of agents in a group, that is primarily concerned with the correctness or incorrectness of the agents’ opinions/beliefs, similar to, for instance, the analyses in Hegselmann & Krause (2006), Zollman (2007), Douven & Kelp (2011), Brousmiche et al. (2016), Hahn et al. (2020), Gabriel & O’Connor (2022). This also means that our main focus will not be around opinion polarisation or opinion clustering, even though they constitute an important research direction in the study of opinion dynamics (see, e.g., Hegselmann & Krause 2002; Alvim et al. 2021; Flache et al. 2017; Kopecky 2022; Kurahashi-Nakamura et al. 2016; Schweighofer et al. 2020; Urbig et al. 2008).

We proceed in a twofold way. First, we propose a Bayesian model that adequately captures three important features of myside bias in argument evaluation at the individual level and provides a Bayesian justification of this model, thus showing that myside bias has a rational Bayesian explanation under certain conditions. In doing so, this paper fills a gap in the literature, because despite the ever-growing literature on Bayesian approaches to reasoning and argumentation (for overviews, see (Chater & Oaksford 2008; Oaksford & Chater 2020; Zenker 2013)), there is still no systematic Bayesian model of myside bias in argument evaluation.

Second, we implement this Bayesian model of myside bias into an agent-based model of group discussion in order to study the impact of myside bias on the ability of groups to track the truth. In this respect, we are particularly interested in deliberative contexts, where agents have to produce and evaluate arguments to reach a collective conclusion. Our perspective is motivated by a number of recent empirical and formal studies that investigate whether group discussion improves on the initial aggregate answers of the agents to a binary decision problem (Claidière et al. 2017; Hartmann & Rafiee Rad 2018; Mercier & Claidière 2022; Trouche et al. 2014).

The paper is structured as follows. In Section 2, we review a number of empirical studies and formal models that are directly concerned with group discussion or myside bias or both. In Section 3, we present a Bayesian model of myside bias, discuss its implications for argument evaluation and provide an epistemic justification for it. In Section 4, we present an agent-based model of group discussion with myside-biased agents, and explain in detail the notion of group truth-tracking that we want to investigate with it. In Section 5, we present and discuss the results of a number of experiments that we performed using the agent-based model, varying the ways in which the myside bias is distributed across the group of discussants. In Section 6, we discuss the implications of our findings in the context of the relevant literature, as well as some limitations of our work and directions for further research. Finally, in Section 7, we draw our conclusions.

Related Work

In this section, we provide an overview of some relevant work. First, we present empirical findings on the characteristics of myside bias in argument evaluation. We then discuss a number of formal models of opinion dynamics in groups that involve some form of myside bias.

Myside bias in argument evaluation

Myside bias in argument evaluation has been studied both in the context of formal argumentation, in which participants are asked to evaluate the conclusions of inferences that have a clear logical structure, and in the context of informal argumentation, in which subjects are asked to evaluate informal arguments that resemble real-world discussions (Čavojová et al. 2018). Overall, both lines of research show that the correspondence between arguers’ prior beliefs and the content of a conclusion or proposition influences how arguers perceive the truth value of a conclusion or the strength of an argument.

In the case of the study of formal arguments, early research on belief bias shows that the prior credibility of the conclusion of a deductive inference influences the arguer’s judgment about the validity of the inference (Evans 1989, 2002, 2007; Evans et al. 1993). Further research has shown that the credibility of an argument’s conclusion in light of one’s prior beliefs is a predictor of whether an actor judges a conclusion to be true or false. For example, Čavojová et al. (2018) found that participants had difficulty accepting the conclusions of logically valid arguments about abortion whose content conflicted with their prior beliefs about abortion. At the same time, participants had difficulty rejecting invalid arguments with the conclusions of which they agreed (Čavojová et al. 2018).

Much of the experimental research on myside bias has been conducted in an informal argumentation framework (Čavojová et al. 2018), and myside bias in argument evaluation has been documented in a substantial number of experiments (Corner et al. 2012; Edwards & Smith 1996; Liu et al. 2015; Lord et al. 1979; Newman et al. 2018; Nickerson 1998; Shamon et al. 2019; Stanovich et al. 2013; Stanovich & West 2007, 2008; Stanovich & West 2008; Taber et al. 2009; Taber & Lodge 2006). A commonly used experimental paradigm consists in initially letting participants express their opinions on a particular issue, such as abortion or public policy; afterwards, participants are exposed to a set of arguments relevant to the issue and asked to rate the strength of arguments from a set of arguments both for and against the participants’ positions on an issue (Edwards & Smith 1996; Liu et al. 2015; Shamon et al. 2019; Stanovich et al. 2013; Stanovich & West 2007; Stanovich & West 2008; Taber & Lodge 2006).

Within this framework, the myside bias has been studied in relation to a variety of research topics in the cognitive and social sciences, such as individual and group reasoning (Mercier 2017; Mercier 2018), scientific thinking (Evans 2002; Mercier & Heintz 2014), intelligence and cognitive abilities (Stanovich et al. 2013; Stanovich & West 2007; Stanovich & West 2008), human evolution (Mercier & Sperber 2011; Mercier & Sperber 2017; Peters 2020), public policies and political thinking (Mercier & Landemore 2012; Shamon et al. 2019; Stanovich 2021; Taber et al. 2009; Taber & Lodge 2006), and climate change (Corner et al. 2012; Newman et al. 2018). Overall, this body of work provides us with a rather coherent picture of how an agent’s prior beliefs impact on the agent’s judgement about the persuasiveness of different arguments.

As briefly mentioned above, an agent judges the strength of an argument depending on whether the argument is compatible or not with the agent’s prior position on the issue that is considered. For instance, in line with Edwards & Smith (1996), Taber et al. (2009) found that arguments compatible with the participants’ prior political stances were deemed stronger than incompatible ones; in addition, participants with increasingly stronger initial attitude commited a stronger bias, and participants with more prior knowledge would spend more time in analysing and counter-arguing constrastive arguments to their prior beliefs.

Recently, Liu et al. (2015) developed what they called a congruence model, according to which an agent’s judgement about the strength of an argument is determined by two dimensions: the compatibility of the argument with the agent’s prior view, and the intrinsic quality of the argument. Liu et al. (2015), and more recently Shamon et al. (2019), found that both these dimensions, and not just the compatibility with prior beliefs, are relevant for explaining people’s assessment of argument strength. In addition, Shamon et al. (2019) also found that arguments judged as familiar are rated as stronger than less familiar arguments.

A number of studies also support the claim that the myside bias in argument evaluation is a driving mechanism of attitude polarisation, i.e., the fact that participants' prior attitudes become more extreme after exposure to conflicting arguments (Lord et al. 1979; Newman et al. 2018; Stanovich 2021; Taber et al. 2009). In this regard, experiments in Corner et al. (2012) and (Shamon et al. 2019) suggest that attitude polarisation is not a necessary consequence of myside bias in argument evaluation, and that mysided reasoners might not always end up entertaining more extreme beliefs after discussion.

Overall, three salient features of myside bias in the evaluation of arguments stand out:

- Arguments that favor their own prior beliefs and disfavor opposing views are overweighted (Liu et al. 2015; Lord et al. 1979; Shamon et al. 2019; Stanovich et al. 2013; Stanovich 2021; Stanovich & West 2007; Stanovich & West 2008). At the same time, an argument that attacks the prior opinion of the arguer or confirms contrary views is generally classified as a weak argument (Nickerson 1998).

- Reasoners who are neutral toward the topic under discussion tend not to exhibit a myside bias in evaluation tasks (Shamon et al. 2019; Taber & Lodge 2006).

- The myside bias occurs in various gradations: Proponents who believe more firmly in their point of view tend to have a stronger bias than proponents who hold a milder view (Shamon et al. 2019; Stanovich & West 2008; Taber et al. 2009). In other words, two arguers who are on the same side of an issue may show stronger or weaker bias depending on their beliefs.

In summary, myside bias can be interpreted as a difference of opinion about the extent to which an argument confirms (or refutes) a belief (or its opposite) between an agent who is neutral toward the belief and the case in which the agent supports either the truth or falsity of the belief. Finally, note that the model we develop in Section 3 accounts for all three empirically observed features of the bias described above.

Formal models of myside bias

A number of formal frameworks has been developed to study the effect of myside bias at the individual level. For instance, Rabin & Schrag (1999) developed a Bayesian model of belief update with confirmation bias. In their framework, confirmation bias works in the following way: agents that are more convinced of either the truth or the falsity of a proposition can mistakenly evaluate contrasting signals as supporting signals of their favoured alternative with a fixed probability. While representing the first approach to modelling myside bias within a Bayesian framework, their model falls short of giving a sufficiently fine-grained formal representation of myside bias. Admittedly, there is no prior-dependent modulation of the bias: the bias has the same intensity independently of the agents’ priors. Furthermore, agents do not underweight or overweight arguments, but only mistake disconfirming arguments for confirming ones with a fixed probability.

More recently, Nishi & Masuda (2013) have implemented the model of Rabin & Schrag (1999) in a multi-agent context, and studied the emergence of macro-level phenomena such as consensus and bi-polarisation as a result of a myside bias in the evaluation of evidence. As such, their work suffers from the same limitations we identified above in the work of Rabin & Schrag (1999). The model that we propose retains the Bayesian approach of Rabin & Schrag (1999) while also allowing for a more realistic representation of the myside bias, taking into account the three above-mentioned empirically observed properties.

Other models of myside-biased individual argument evaluation have been proposed. For instance, building on the Argument Communication Framework of Mäs & Flache (2013) and on the empirical findings in Shamon et al. (2019), Banisch & Shamon (2021) developed an individual-level model of myside bias which accounts for the representation of an attitude-dependent gradation of the bias via a strength of biased processing parameter. In their model, an agent accepts/rejects arguments with a certain probability that depends on whether the arguments cohere or not with the prior attitude of an agent, with coherent arguments being more likely to be accepted. On top of this, the strength of biased processing parameter controls how much more likely it is for an agent to accept arguments that cohere with its prior attitude, compared to accepting arguments that do not.

Starting from the work of Hunter et al. (1984), Lorenz et al. (2021) develop a very general model of individual attitude that combines a variety of cognitive mechanisms driving an agent’s attitude dynamics. Among other mechanisms, the model incorporates what they call motivated cognition, which makes an agent’s evaluation of a received piece of information dependent on the absolute value of the difference between the agent’s attitude and the message, where both attitude and message are real numbers.

Let us highlight some relevant distinctions between the model that we will propose on the one hand, and the models in Banisch & Olbrich (2021) and Lorenz et al. (2021) on the other. First, neither of these two models is Bayesian: in Banisch & Olbrich (2021), the opinion of an agent is the sum of the arguments that it accepts, where arguments can take either value \(1\) or \(-1\), and the changes in an agent’s opinion are determined by changes in the set of arguments that it accepts or rejects after interacting with others; in Lorenz et al. (2021), the agents’ attitudes are real numbers (positive or negative) and the pieces of information that they exchange are the values of their attitudes, rather than pieces of evidence or arguments.

Second, as for the case of Mäs & Flache (2013), the agents in Banisch & Shamon (2021) can only exchange arguments that, although of possibly different polarity (i.e., supporting either one of two sides of an issue), are all equally strong. In this regard, the Bayesian setting that we employ allows for a more fine-grained representation of arguments, where each argument is associated with a diagnostic value that specifies how much the argument supports one side of the issue against the other.

Both Banisch & Shamon (2021) and Lorenz et al. (2021) also implemented their individual-level models in a multi-agent environment and studied the opinion patterns emerging at the collective level as a result of the interaction between myside-biased agents. In this regard, Banisch & Shamon (2021) found that, if the agents’ bias is weak, the group converges to a consensus more rapidly; conversely, as the agents’ bias becomes stronger, a persistent state of bi-polarisation at the collective level is reached, i.e., a state where agents can be divided into two groups characterised by extreme but mutually opposed beliefs. Similarly, Lorenz et al. (2021) found that motivated processing produces patterns of bi-polarisation. Note that neither of these models is concerned with the problem of truth-tracking.

Other multi-agent models of belief formation implement some form of myside bias in evaluation and investigate its relation to macro-level societal patterns, such as convergence to consensus or polarisation. For instance, Alvim et al. (2019, 2021) propose a model of polarisation in social networks with confirmation bias, which is inspired by the work on bounded confidence models of Hegselmann & Krause (2002). These models in turn generalise the studies on iterative opinion pooling (DeGroot 1974; French Jr 1956; Lehrer & Wagner 1981), where agents update their opinions by a weighted average of the opinions of neighbouring agents.

The models in Alvim et al. (2019), Alvim et al. (2021) embed a confirmation bias factor as a function of the distance between the prior beliefs of two neighbouring agents. When updating their beliefs, agents compute a weighted average of their neighbours, where the weights of the underlying network structure are themselves weighted by the confirmation bias factor. Note, however, that Alvim et al. (2019), Alvim et al. (2021) are concerned with group polarization, and not group truth-tracking. While a number of generalizations of bounded-confidence models have been proposed to address problems of truth-tracking (see, e.g., Hegselmann & Krause 2006, 2015; Douven & Riegler 2009), we believe that other formal frameworks are more apt to specifically model group discussion, in particular with respect to agents exchanging stronger or weaker arguments in support of alternatives to an issue.

Let us conclude this section by discussing the very recent Bayesian model of group learning in social networks with confirmation bias developed by Gabriel & O’Connor (2022). Their model builds on the two-armed bandit model developed in Bala & Goyal (1998) and on the network models of Zollman (2007), Zollman (2010) to study group learning. Without going into the details of the two-armed bandit model, it should be said that agents in the learning process can learn both by receiving signals from the world and by communicating with their neighbours. Within this framework, Gabriel & O’Connor (2022) consider two different ways of modeling confirmation bias. First, moderately biased agents are given an intolerance parameter that sets for each agent the probability of ignoring information from its neighbours, based on the likelihood of the information in light of its prior beliefs. Second, agents that are strongly biased completely ignore information received from neighbours for which the probability of the information in light of their own beliefs is less than a certain threshold.

Gabriel & O’Connor (2022) found that moderate confirmation bias increases the probability that agents converge to the correct consensus; this effect is attributed to the fact that a moderate bias allows agents to explore different relevant options for a longer time, thus making more likely that truth will ultimately result from the group learning process. On the other hand, with strong confirmation bias, as the strength of the bias increases, consensus becomes less likely, and more agents in the network stabilise on choosing the wrong option.

Our model differs from Gabriel & O’Connor’s (2022) approach in three respects: First, neither the moderate nor the strong confirmation bias in Gabriel & O’Connor (2022) correspond to a prior-dependent underweighting or overweighting of argument strength. In both their moderate and their strong bias case, agents either update on the evidence as they receive it, or completely ignore the evidence received. Therefore, their model omits what was considered one of the key elements of myside bias.

Second, while the analysis in Gabriel & O’Connor (2022) focuses on groups of relatively small size (up to \(25\) agents), we want to explore the effect of myside bias on larger groups as well. This is motivated by our interest in deliberative contexts that can involve larger groups, such as interaction in social media groups or state legislatures.

Third and finally, while Gabriel & O’Connor (2022) focus only on homogeneously biased groups, much of our analysis relies on modeling heterogeneous groups in which the myside bias is distributed differently across the group of discussants. We will show that a number of interesting types of group dynamics emerge in these groups that are important for finding the truth in a discussion.

A Bayesian Model of Myside Bias in Argument Evaluation

To provide a Bayesian model of myside bias, we introduce binary propositional variables \(A\) and \(B\) (in italic script) which have the values A and \(\neg\)A, and B and \(\neg\)B (in roman script), respectively, with a prior probability distribution \(P\) defined over them. In the present context, B is the target proposition and A is an argument in support of B. A and B are contingent propositions and we assume that \(P({\rm A}), P({\rm B}) \in (0,1)\). Here, \((0,1)\) is the interval containing all real numbers between 0 and 1, excluding the endpoints. \(P\) represents the subjective probability function of an agent and \(P({\rm A})\) measures how strongly they believe in A. See Sprenger & Hartmann (2019) for a philosophical justification of the Bayesian framework.

Next, we are interested in the posterior probability of B after learning A. According to Bayes’ theorem, it is given by \(P^{\ast}({\rm B}) := P({\rm B \vert A})\) which can also be written as:

| \[ P^{\ast}({\rm B}) = \frac{P({\rm B})}{P({\rm B}) + x\cdot P({\rm \neg B})} .\] | \[(1)\] |

| \[ x := \frac{P({\rm A \vert \neg B})}{P({\rm A \vert B})},\] | \[(2)\] |

This proposition directly relates confirmation and disconfirmation of one’s own beliefs to the likelihood ratio \(x\): if \(x < 1\), then the agent’s degree of belief in B increases, and therefore the argument A confirms the target belief B; if \(x > 1\), then the agent’s degree of belief in B decreases, and therefore the argument A disconfirms the target belief B (“A attacks B”); if \(x = 1\), then learning A does not make any difference for the agent’s degree of belief in B, which means that A is not relevant for the truth or falsity of B. The likelihood ratio \(x\) is also referred to as the diagnosticity of an argument A relative to a belief B. Here the term “diagnosticity” refers to the fact that the likelihood ratio measures how much A specifically supports the truth of B against its falsity. For instance, consider a case in which an argument A is more likely to be true if B is true than if \(\neg\)B is true, i.e., when \(P({\rm A \vert B}) > P({\rm A \vert \neg B})\): then \(x < 1\) and, by Proposition 1, the argument A will increase the degree of belief in the target proposition B. The more likely it is that an argument A is true if B is true, compared to the case where \(\neg\)B is true, the smaller \(x\) is and the higher the confirmation of B is.

Another important property to be spelled out is the following:

This proposition captures the notion that the change in an agent’s degree of belief in B triggered by argument \(x\) is, in absolute value, the same as the change triggered in an agent’s equally strong degree of belief in the alternative \(\neg\)B triggered by the inverse of \(x\). In other words, if we take two agents with equally strong degrees of beliefs, but towards opposite alternatives of an issue, the likelihood ratio \(x\) and its inverse \(1/x\) determine equal changes in their degrees of beliefs.

The perceived likelihood ratio

The central idea of the proposed model is that the myside bias affects the way an agent judges the diagnosticity of an argument A relative to B. This, in turn, affects the way the agent updates the strength of their belief in B based on the argument A. See also Nickerson (1998).

Therefore, we model the myside bias as a distortion of the (pure) likelihood ratio \(x\) of A relative to B, via a perceived likelihood ratio function \(x'\). If an agent assigns a high degree of belief to B, then using \(x'\) yields more confirmation than using the (pure) likelihood ratio \(x\), provided that \(x\) is confirmatory (i.e., \(x < 1\)); on the other hand, if \(x\) is disconfirmatory (i.e., \(x > 1\)), then \(x'\) yields less confirmation than \(x\). More specifically, we propose the following functional form:

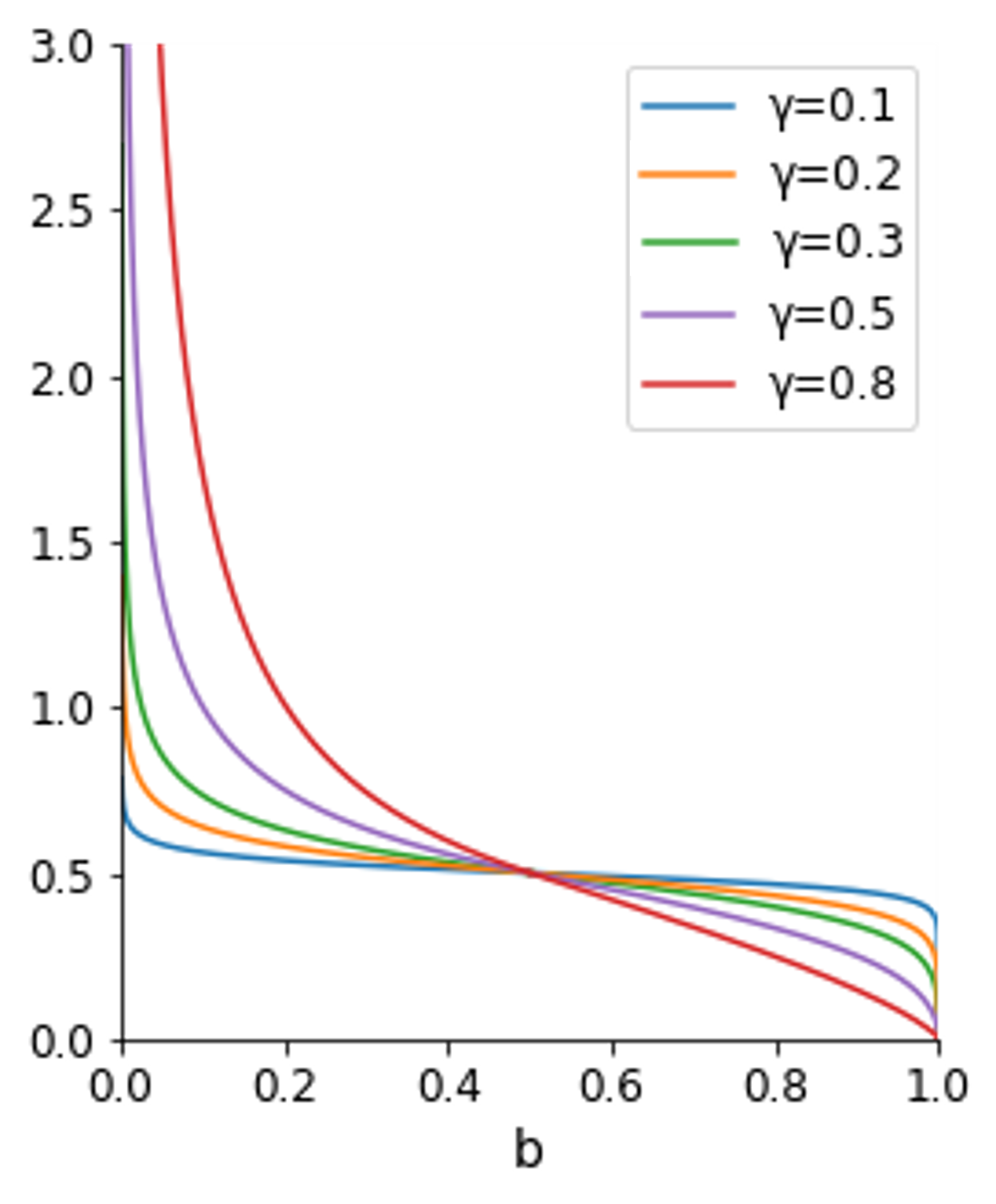

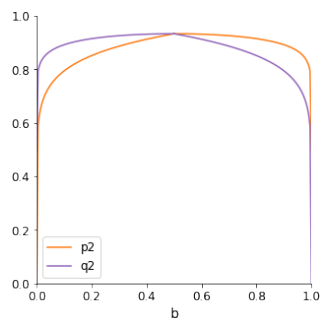

Note that the perceived likelihood ratio \(x'\) is a function of the prior probability of the target proposition, as opposed to the pure likelihood ratio \(x\), which is considered independent of the prior probability. Figure 1 shows the perceived likelihood ratio \(x'\) as a function of the agent’s prior degree of belief \(b\). We see that \(x' < x\) if \(b > 1/2\) and \(x' > x\) if \(b < 1/2\). Furthermore, we take the parameter \(\gamma\), which determines the convexity of the function, to characterise different ways in which agents can be more or less radically biased. This is motivated by the fact that, for a fixed agent’s prior belief \(b\), as the value of \(\gamma\) increases, the distortion of the (pure) likelihood ratio becomes stronger. In other words, \(\gamma\) can account for the agents’ individual differences in the way they more or less strongly distort arguments, which are independent from the agents’ prior degrees of belief. We will refer to \(\gamma\) also as the radicality parameter. We will see below that \(\gamma\) has to be in the open interval \((0, 1)\). Then the distortion is much stronger for values of \(b\) close to the extremes (i.e., \(0\) and \(1\)), than for middling values of \(b\).

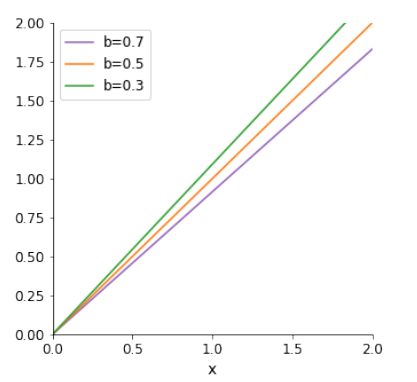

Figure 2 plots the perceived likelihood ratio \(x'\) as a function of the (pure) likelihood ratio \(x\) for fixed values of \(b\) and \(\gamma\). In this case, \(x'\) is a linear function of \(x\), where \(x' > x\) if \(b > 1/2\). Similarly, \(x' < x\) if \(b < 1/2\).

We summarise our findings in three propositions:

Propositions 3 and 4 demonstrate that the perceived likelihood ratio \(x'(x,b)\) is adequate to represent the myside bias, because it incorporates the three salient features of myside biased identified above. In particular, Proposition 3 shows that if the agent is more convinced of B than of \(\neg\)B, any argument will be perceived as more confirmatory or less disconfirmatory compared to the evaluation of a neutral observer (who uses the pure likelihood ratio \(x\)). On the other hand, if an agent is prone to believe that the target proposition is false, they will tend to perceive arguments as less confirmatory or more disconfirmatory than a neutral observer. Furthermore, an agent who is indifferent between B and \(\neg\)B will not be biased towards either of the two sides. This is consistent with the first two salient features of myside bias.

In addition, Proposition 4 shows that, all things being equal, the perceived likelihood ratio decreases as the strength of belief in B increases, and increases as the strength of belief in B decreases. Intuitively, this means that myside bias gets more pronounced as the degree of belief in the target proposition increases, in accordance with the third salient feature of myside bias.

Proposition 5 guarantees that the distortion preserves the property described in Proposition 2, according to which a likelihood ratio \(x\) determines the same change in an agent’s degree of belief in, say, \(\rm B\), as the change determined by the inverse of \(x\) in an agent whose degree of belief in \(\neg{\rm B}\) is equally strong. If this were not the case and our function would map two pure likelihood ratios \(x,y\) such that \(x=1/y\) to values that are not the inverse of one another, this would determine an asymmetry in the way agents with equally strong degrees of belief in opposite sides of an issue distort equally strong (pure) likelihood ratios. This would be equivalent to unjustifiably assuming that the distortion of arguments can be stronger or weaker dependently on which side of the issue an agent is on.

The myside bias update

An agent who commits the myside bias does not update with the (pure) likelihood ratio \(x\), but with the perceived likelihood ratio \(x'(x, b)\) provided in Definition 1. Using Bayes’ theorem with \(x'\) instead of \(x\), the posterior degree of belief in the target proposition B, after updating on the argument A, is then given by:

| \[ P^{\ast \ast}({\rm B}) = \frac{b}{b + x'(x, b) \cdot \bar{b}} .\] | \[(3)\] |

Therefore, our model predicts that agents’ posterior degrees of belief will be more extreme than those of an agent who uses Equation (1) to calculate their posterior degree of belief (unless they are indifferent to the target statement). More specifically, agents who rate \(\neg\)B as more likely than B will have a lower posterior degree of belief than that obtained using Equation (1). Conversely, agents who believe B more strongly than \(\neg\)B will have a higher posterior degree of belief than an agent who uses Equation (1). This prediction is consistent with recent findings presented in Bains & Petkowski (2021).

Another interesting consequence of the proposed model is that the new updating rule (i.e., Equation 3) is non-commutative, i.e., the result of an update on two or more arguments depends on the order in which the update takes place. Figure 3 illustrates this point. Here we consider an agent who is initially indifferent between B and \(\neg\)B (and therefore sets \(b= 1/2\)). Subsequently, the agent is presented with two arguments, \({\rm A_1}\) (with the pure likelihood ratio \(x_1\)) and \({\rm A_2}\) (with the pure likelihood ratio \(x_2\)), such that \(x_1 < 1 < x_2\), i.e., the first argument (\({\rm A_1}\)) is confirmatory and the second argument (\({\rm A_2}\)) is disconfirmatory. If the agent first updates on \({\rm A_1}\), then their degree of belief in B will increase; in turn, this will determine an underweighting of the disconfirmatory strength of \({\rm A_2}\), since \(P^{\ast \ast}({\rm B}) > 1/2\), by Proposition 3. However, if the agent first updates on \({\rm A_2}\), then \(P^{\ast \ast}({\rm B}) < 1/2\) and their second update (on \({\rm A_1}\)) uses the perceived likelihood ratio \(x_1' > x_1\), again by Proposition 3. Thus, the agent assigns a higher posterior degree of belief to B if they first update on the stronger argument \({\rm A_1}\), followed by a second update on the weaker argument \({\rm A_2}\).

Mathematically, the reason for the non-commutativity of the new updating rule is the fact that the perceived likelihood ratio is a function of the prior probability. (While updating by Bayes’ theorem is commutative, it is well known that updating by Jeffrey conditionalisation is non-commutative, however, for a different reason.) It is worth noting that likelihood ratios that depend on the prior probability of the hypothesis being tested, while unusual, are not uncommon in the literature. See, e.g., Chapter 5 of Bovens & Hartmann (2003) for a discussion.

We summarise our findings on the non-commutativity of myside-biased updating in the following proposition.

Hence, it is epistemically advantageous for a myside-biased agent to first update on the stronger argument, i.e., on the argument with the smaller (pure) likelihood ratio.

Our model also predicts that reasoners are easily persuaded of their own position and harder to change. For instance, the stronger an agent’s prior degree of belief becomes, the stronger contrasting arguments need to be in order to sway the reasoner. In contrast with this view, Mercier (2017), Mercier (2020) and Mercier & Sperber (2017) argue that myside bias does not directly affect an agent’s evaluation of external arguments, and that reasoners are able to accept good arguments even when they challenge their own view. Within this framework, one would not expect to observe differences in argument evaluation between reasoners differing in their prior degrees of beliefs. This contrasts with our prediction that argument evaluation changes as a function of an arguer’s prior degree of belief.

While the correctness of one or the other of these predictions remains an open question, our model has the advantage that it intuitively explains harmful group-level phenomena, such as polarization in peer groups and communication difficulties between polarized groups as an effect of one-sided exchanges and evaluations of arguments (Stanovich 2021).

Justifying the model

So far, we have presented a model that is consistent with the three salient features of myside bias. The model is Bayesian because it models the bias in a Bayesian way: The agent assigns a prior probability to B, is then presented with an argument A, and updates B accordingly. This requires specifying a likelihood ratio, and our model identifies an appropriate choice, viz., \(x'(x,b)\). However, this choice must be justified. Otherwise, the model would be a purely ad hoc solution. So how can the choice and the proposed functional form of \(x'(x,b)\) be justified?

To address this question, we introduce a new propositional variable \(E\) and argue that the agent does not only learn A, but also E. In the present context, the appropriate posterior probability of B is therefore \(P^{\ast \ast \ast}({\rm B}) = P({\rm B \vert A, E})\). We will then see that, under certain conditions, \(P^{\ast \ast \ast}({\rm B}) = P^{\ast \ast}({\rm B})\).

The new propositional variable \(E\) has the values E: “The target belief coheres with the background beliefs” and \(\neg\)E: “The target belief does not cohere with the background beliefs” We take E to be supporting evidence for B. That is, it is rational to assign a higher degree of belief to a proposition that fits well to one’s background beliefs than to a proposition that does not. Hence, it is rational that \(P({\rm B \vert E}) > P({\rm B \vert \neg E})\).

It has already been suggested that the link between an agent’s beliefs and their background beliefs justifies their putative bias in evaluating arguments (Evans 2002; Evans & Over 1996). For example, Evans (2002) argues that a broadly coherent system of beliefs is necessary to make sense of the world, and that this justifies an individual’s biased attitude toward her or his own view and toward alternatives. Our proposal is in line with this research.

Before proceeding, it is important to note that the agent considers proposition E on the basis of the argument A put forward. Considerations of coherence with background beliefs also play a role, of course, in an agent’s determination of the prior probability of B. Here, however, the focus is on the following question: does B cohere with the agent’s background beliefs in light of A?

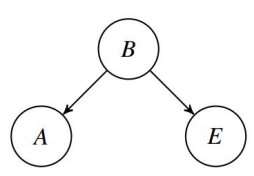

Next, we note that \(A \perp \!\!\! \perp E \vert B\). That is, once we know that B, learning E will not change the degree of belief an agent assigns to A: The truth (or falsity) of the argument only depends on the target belief. This plausible assumption then suggests the Bayesian network represented in Figure 4. Note that there are arcs from \(B\) to \(A\) and from \(B\) to \(E\), indicating that the corresponding propositional variables are directly probabilistically dependent on each other. For an introduction to the theory of Bayesian networks, see Hartmann (2021) and Neapolitan (2003).

To complete the Bayesian network, we have to specify the prior probability of the root node \(B\), i.e.,

| \[ P({\rm B}) = b,\] | \[(4)\] |

| \[ \begin{eqnarray} \label{prior2} P({\rm A \vert B}) := p_1 \quad &,& \quad P({\rm A \vert \neg B}) := q_1 \nonumber \\ P({\rm E \vert B}) := p_2 \quad &,& \quad P({\rm E \vert \neg B}) := q_2 . \end{eqnarray} \] | \[(5)\] |

Using this, we can calculate the posterior probability of B after learning A and E.

To establish that \(P^{\ast \ast \ast}({\rm B}) = P({\rm B \vert A, E}) = P^{\ast \ast}({\rm B})\), we need to show that

| \[ x_E := q_2/p_2 = \begin{cases} \frac{2 \, \bar{b}^\gamma}{b^\gamma + \bar{b}^\gamma} & {\rm for} \ b\geq 1/2 \\ \\ \frac{b^\gamma + \bar{b}^\gamma}{2 \, b^\gamma} & {\rm otherwise} \\ \end{cases}\] |

| \[ \begin{eqnarray} p_2 &:=& \begin{cases} 1/2 \cdot \left(b^\gamma + \overline{b}^\gamma \right) & \textrm{ for } b\geq 1/2 \\ \\ b^\gamma & \textrm{ otherwise, and} \end{cases} \label{p2q2like1} \end{eqnarray} \] | \[(6)\] |

| \[ \begin{eqnarray} q_2 &:=& \begin{cases} \bar{b}^\gamma & \textrm{ for } b\geq 1/2 \\ \\ 1/2 \cdot \left(b^\gamma + \overline{b}^\gamma \right) & \textrm{ otherwise} \label{p2q2like2} \end{cases} \end{eqnarray} \] | \[(7)\] |

Third, both \(p_2\) and \(q_2\) have a maximum at \(b =1/2\) if \(\gamma <1\). See also Figure 4. This is plausible, because a proposition with a middling prior probability is most “flexible” and one would expect it to easily fit into a system of background beliefs. This is not to be expected with a proposition of whose truth or falsity one is much more convinced. For \(\gamma > 1\), \(p_2\) has a minimum at \(b =1/2\). Because this is not plausible (given the above considerations), we restrict the range of \(\gamma\) to the open interval \((0,1)\) (see Definition 1)).

The crucial idea of the present proposal is that an agent who holds a belief B and who is confronted with an argument A for or against B does not only update their strength of belief in A but also investigates, prompted by the argument A, whether B fits to their background beliefs. This will lead to an increase or decrease of the agent’s strength of belief in B—the myside bias—which then, under these assumptions, turns out to be a rational response. The suggestion we make here thus bears similarity with other proposals in the literature that tie the notion of coherence to prior-belief effects in the evaluation of arguments, evidence and information, e.g., Thagard (2006), Rodriguez (2016), Wolf et al. (2015).

In closing this section, let us shortly comment on the notion of coherence that is used here. “Coherence” is a notoriously vague term that plays a key role in the coherence theory of justification in epistemology (see, e.g., BonJour (1985)). It refers to the property of an information set to “hang together well” which is often taken to be a sign of its truth. Witness reports in murder cases are good illustrations of this. But while we have a good intuitive sense of which information sets are coherent and which are not (and which of two information sets is more coherent), it is notoriously hard to make precise what coherence means and to substantiate the claim that coherence is, under certain conditions, truth-conducive (or at least probability-conducive) in the sense that a more coherent set is, given certain conditions, more likely to be true (or has a higher posterior probability). These questions have been addressed in the literature in formal epistemology. See, e.g., Bovens & Hartmann (2003), Douven & Meijs (2007) and Olsson (2022). It will be interesting to relate the qualitative proposal made in this paper to that literature. This will allow for a more fundamental derivation of the perceived likelihood ratio proposed in this paper. We leave this task for another occasion.

An Agent-Based Model of Myside Bias in Group Discussion

To investigate the effects of myside bias in group discussions, we created an agent-based model in NetLogo (Wilensky 1999). This model simulates a group discussion in which the agents debate a binary issue. The debated issue has a correct/true alternative and an incorrect/false alternative. Our goal is to evaluate the impact of myside bias by determining what effect it has on the ability to track the truth in discussions between agents with a myside bias compared to agents without this bias. The model code is available at https://www.comses.net/codebases/68a53ba2-8cfd-4805-bb16-5e8bd6840d25/releases/1.1.0/.

The setup

Our model consists of \(n\) agents and a unique propositional variable, which can either assume value "true" or value "false". This propositional variable represents the issue that the agents have to settle during their discussion. Note that we assume that the issue has only one correct answer, namely "true", while the other answer is incorrect.

At the start, each agent is randomly assigned a prior probability distribution over the propositional variable, by randomly generating, for each agent, its prior degree of belief in the correct alternative of the variable from a uniform probability distribution. In this paper, we will be concerned with groups of decision makers that are competent on average, and thus we will assume that at the beginning of the discussion, the average belief of the discussants in the correct answer to the question is strictly above chance. We do this by generating distributions of priors over the agents whose average is strictly greater than \(1/2\).

This choice is inspired by some variants of the Condorcet’s Jury Theorem, which we briefly mentioned in Section 1. While the simplest version of this theorem considers groups of voters that all have the same competence (i.e., the same probability of casting a correct vote), these variants focus on more realistic scenarios in which voters may have different competences, and in which some voters might possibly be incompetent, i.e., more likely to vote for the wrong option (probability < \(0.5\)) (Dietrich 2008; Owen et al. 1989).

Discussion groups that are competent on average have particularly interesting features to analyse from a truth-tracking perspective. First, such groups can contain agents that have a higher degree of belief in the incorrect answer: in the context of tracking truth, we can then investigate under which conditions group discussion leads those initially incorrect agents to strengthen, weaken or reverse their prior incorrect beliefs. Second, on average competent groups can contain an initial majority of agents that support the incorrect side of the issue: this will allow us to investigate under which conditions initially incorrect majorities are overturned or retained as a result of discussion.

Since we mentioned Condorcet’s Jury Theorem, it is important to note that we do not interpret agents’ degrees of belief as competence in the sense of this theorem, i.e., competence as a person’s probability of voting for the correct answer. In our setting, we take an agent’s degree of belief as an indicator of accurate voting, namely correct if their belief level is higher than \(1/2\), otherwise incorrect.

Before the start of the discussion, each agent is also assigned a specific positive number strictly smaller than \(1\), representing its individual radicality parameter \(\gamma\) discussed in the previous section. The model encodes three distinct procedures for assigning values of \(\gamma\) to the discussants. The first option consists in assigning the same value for \(\gamma\) to all agents; this way the resulting group of individuals is homogeneous with respect to the radicality parameter.

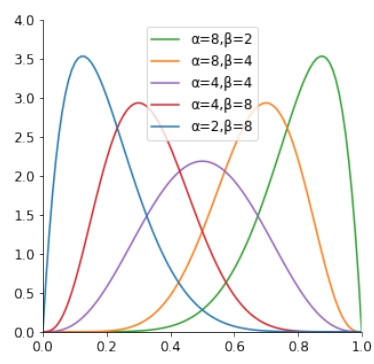

The second option is to assign agents values drawn from a \(\beta\)-distribution, that can be fixed by assigning values to the parameters \(\alpha\) and \(\beta\). In this way, we can generate a group of discussants that is heterogeneous with respect to the radicality parameter, where the values for \(\gamma\) are drawn from a distribution which is the same for the entire group of discussants. Figure 6 plots examples of \(\beta\)-distributions produced by different values for the parameters \(\alpha\) and \(\beta\).

The third option is to generate two distinct \(\beta\)-distributions, one fixing the distribution of the radicality parameter in the group of agents having a higher prior degree of belief in the correct alternative, and the other fixing the distribution of the parameter in the group of agents with a stronger degree of belief in the incorrect answer. This option allows us to model situations in which the correct/incorrect subgroups of discussants show different radicality of the myside bias.

The discussion process

Discussion among agents is modelled in a novel way compared to the preexisting models that we have mentioned in Section 2. This is so for two reasons. First, the existing models are often enriched with a multiplicity of aspects characterising real-world argumentative dynamics (e.g., different network topologies Zollman 2007; Alvim et al. 2021; Gabriel & O’Connor 2022, or the reliability of agents and information Hartmann & Rafiee Rad 2018; Hahn 2022). Including these refinements in our modelling could hinder our scope to specifically analyse the effect of myside bias on group discussion, and therefore a simpler model may better serve our purpose.

Second, our model can provide a novel basic framework within which to study argumentative dynamics in groups. As mentioned in the previous section, in our framework, arguments are propositional random variables, each of which is associated with a likelihood ratio representing its diagnostic value. In our model, the agents discuss by directly exchanging these likelihood ratios with one another. This approach is rather novel compared to other computational models of group discussion or information exchange between agents, where they typically exchange positive/negative signals of equal strength (see, e.g., Ding & Pivato 2021; Mäs & Flache 2013), or simply take as information one another’s beliefs (see, e.g., Hegselmann & Krause 2002 and Alvim et al. 2019).

The discussion begins by randomly drawing a first speaker. The first speaker draws an argument and presents it to the other agents. Depending on its prior beliefs, the agent presents:

- an argument in support of the correct alternative (likelihood ratio strictly smaller than \(1\)), if the agent’s prior is \(b> 1/2\);

- an argument in support of the incorrect alternative (likelihood ratio strictly bigger than \(1\)) if the agent’s prior is \(b< 1/2\);

- no argument if the agent’s prior is \(b= 1/2\).

In our setting, agents pick their arguments by drawing the corresponding likelihood ratio from a distribution that is fixed at the start, and that is common for all agents. We can think of a specific distribution over the space of likelihood ratios as determining the argumentative competence of the agents, i.e. the ability of an agents to present stronger or weaker arguments in support of their preferred alternative. Indeed, the distribution determines which likelihood ratios are more likely to occur and which are less likely: this way, stronger arguments might be made more frequent than weaker arguments, or vice versa, by manipulating the parameters of the distribution.

For the specific results that we present in this paper, we assume that stronger and weaker arguments are equally likely to be drawn by a given agent; to put it differently, very convincing arguments can occur as often as weakly convincing arguments. We furthermore assume that this is the case for both initially correct and initially incorrect agents, i.e., agents with \(b>0.5\) can pick any argument confirming the correct side of the issue, and agents with \(b<0.5\) can pick any argument disconfirming the correct side of the issue. Furthermore, we do not assume any difference in competence between agents with \(b>0.5\) and agents with \(b<0.5\), i.e., we ensure that the likelihood for a correct agent to pick a confirming argument with corresponding likelihood ratio \(x<1\), is the same as the likelihood for an incorrect agent to draw a disconfirming argument with corresponding likelihood ratio \(1/x>1\). We will have more to say about these specific choices in Section 6.

Let us now comment on our modelling choice concerning the mechanism with which agents present arguments relevant for the issue under discussion. In particular, note that agents that prefer either one of the alternatives to the target issue will exclusively present arguments in favour of the alternative they prefer. Note that, modelled this way, argument production is itself subjected to a form of myside bias: the prior beliefs of an agent determine the set of possible arguments that an agent can share with the other discussants (Mercier 2017; Mercier & Sperber 2017).

Our choice to model argument production in this way is rooted in the argumentative theory of reasoning developed in Mercier & Sperber (2017), which claims that participants in a discussion aim at persuading others of their own belief, rather than cooperatively evaluating reasons in favour and against the target issue together with the other discussants.

As a consequence, this theory predicts that participants in a discussion only present arguments that favour their own prior position (or disfavour contrasting positions) on the discussion topic, while avoiding contrasting arguments. Indeed, by presenting this latter type of arguments, an agent would risk swaying the rest of the discussants towards opposite views to its own, thus not serving the purposes to convince other discussants of its own view. This prediction has been observed in a number of empirical studies (Mercier 2017; Mercier & Sperber 2017; Trouche et al. 2014).

As already mentioned in Banisch & Olbrich (2021), we remark that a more general way to model argument production would be to implement a parameter that regulates how much more likely an agent is to present prior-compatible arguments than to present prior-incompatible arguments. Furthermore, this parameter could be made prior-dependent so as to model different degrees of biased argument production, where, for instance, agents with more extreme opinions are more biased than agents with milder opinions. Including such a mechanism of argument production in our model would allow for a more fine-grained analysis of the interaction between myside-bias in argument evaluation and myside-bias in argument production. We leave an extension of our model in this direction for future work.

After the argument is presented, all the other agents update their prior belief in light of the given argument, according to the update rule given in Equation 3. The discussion proceeds by repeating this process, until any further change in the agents’ beliefs is highly unlikely to occur. More precisely, we stop the simulation when: all agents with \(b > 1/2\) have degree of belief \(b>0.99999\); and all agents with \(b < 1/2\) have degree of belief \(b < 0.00001\); and there is no agent with degree of belief \(b = 1/2\).

Tracking truth and monitoring discussion

As mentioned earlier, we are primarily interested in the truth-tracking ability of groups of myside-biased agents, and on whether group discussion is epistemically detrimental or beneficial.

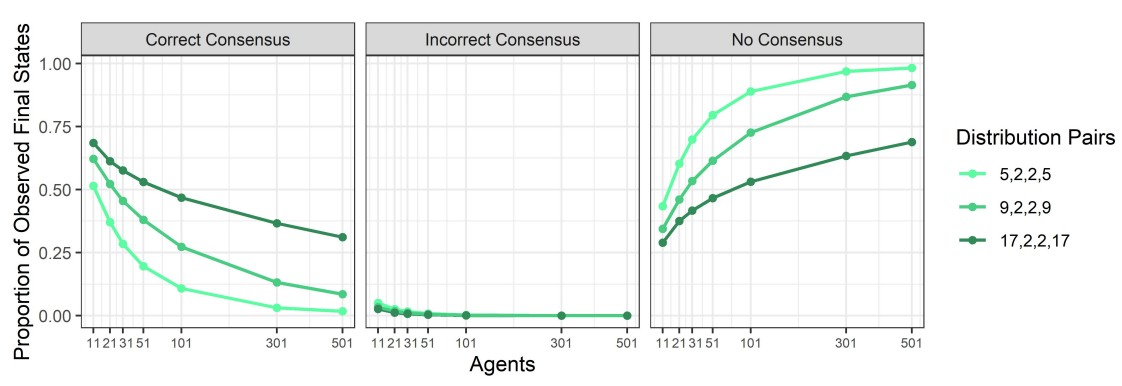

Note that, in our framework, the truth-tracking ability of a group cannot simply be defined as its ability to converge unanimously to the correct answer such as in the case of the moderate bias in Gabriel & O’Connor (2022), or in Zollman (2007), Hegselmann & Krause (2002). Indeed, in our setting, a group discussion might end up in one of the following three states: correct consensus, in which all agents agree on the correct answer; incorrect consensus, in which all agents agree on the incorrect answer; no consensus, in which all agents strongly believe in different alternatives. By consequence, there might be situations in which some agents entertain extremely high degrees of belief in the correct answer, while other agents simultaneously entertain extremely high degrees of belief in the incorrect answer.

Directly mirroring the experiments in Mercier & Claidière (2022), and in the spirit of Condorcet, we decide to focus on the effect of myside-biased discussion on belief aggregation via the majority rule. In other words, we investigate under which condition discussion is beneficial or detrimental for a majority of the agents to entertain the correct belief. Within this perspective, we can think of our model as a model of collective decision making in the sense of Dryzek & List (2003), Dietrich & Spiekermann (2022), where agents undergo a deliberation phase, during which they communicate and revise their beliefs, and a post-deliberation phase, during which the agents’ revised beliefs are aggregated according to a decision rule, the majority rule in our case.

From the perspective of belief aggregation via majority rule, we will investigate the following: first, under which conditions initially correct majorities are retained and, simultaneously, initially incorrect majorities overturned; second, under which conditions correct majorities are more likely before or after discussion.

To explain the epistemic effects of discussion in biased groups at the level of belief aggregation via the majority rule, it is also crucial to monitor some more general aspects of group discussion. In particular, we consider how many agents change their mind, both among agents initially holding correct beliefs and among those initially holding incorrect beliefs. This is crucial, because it allows us to understand what types of conversation produce the epistemic effects observed at the level of belief aggregation via the majority rule. For instance, this type of information enables us to know whether, for a given discussion group, changes in the likelihood of correct/incorrect majorities after discussion are to be attributed to an effective and virtuous group argument exchange of the kind envisioned by Mercier & Sperber (2017) and Gabriel & O’Connor (2022), or to an effective but vicious group discussion where correct agents are swayed to prefer the incorrect beliefs as in Hahn et al. (2019), or to a context of ineffective argument exchange in which agents do not change their minds and simply strengthen their initial stance.

Results

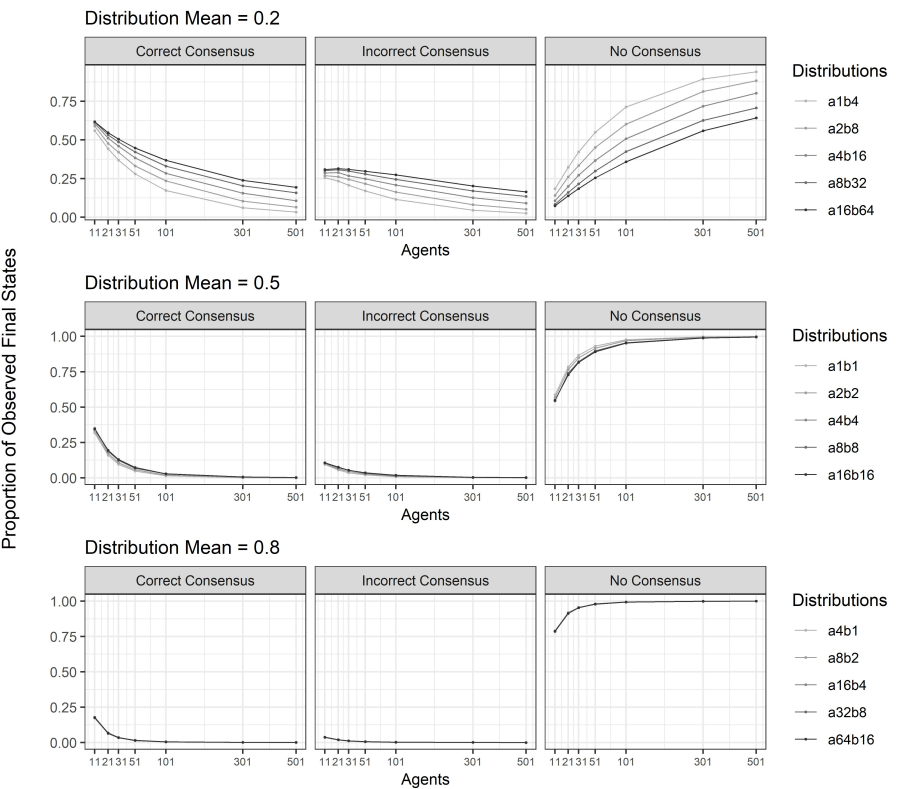

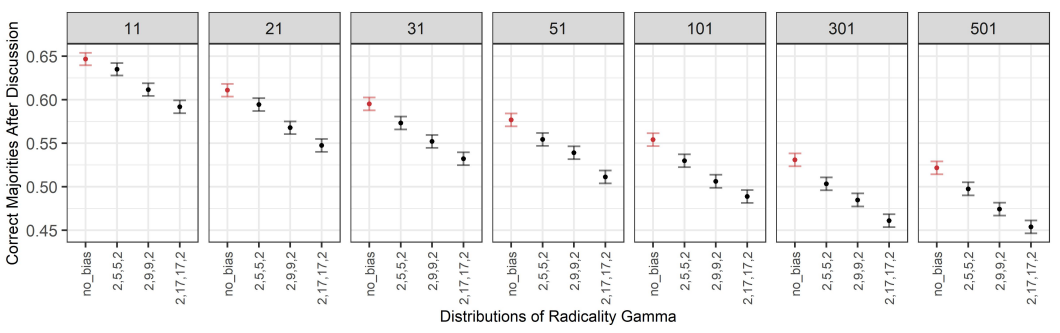

In this section, we present the results of a number of experiments performed using the agent-based model of group discussion that we have just presented. We will proceed in the following three steps. First, we present the results of experiments of groups in which the radicality parameter \(\gamma\) is distributed homogeneously among the agents, i.e., all agents are assigned the same value of \(\gamma\). Second, we present a number of results from experiments on heterogeneous groups in which the parameter \(\gamma\) is distributed according to a given initial distribution that is the same across the group of initially correct agents, i.e., those agents that hold the correct belief before the discussion, as well as across the group of initially incorrect agents, i.e., those agents that initially hold the incorrect belief. Finally, we present results performed on heterogeneous groups of agents in which the parameter \(\gamma\) is distributed differently across initially correct agents and initially incorrect agents.

Let us briefly recall that in the experiments below, we considered on average competent groups of agents, whose prior beliefs are drawn from a uniform distribution. This implies that already at the start of the conversation some agents might be rather opinionated, i.e., their beliefs might be closer to the extremes \(0\) or \(1\) than to the neutral point \(0.5\). In this regard, we remark that a preliminary exploratory analysis conducted on groups whose priors are drawn from a \(\beta\)-distribution more or less sparse around the average \(0.5\) suggested qualitatively alike results to the ones we present below. As an example of a difference in magnitude, let us mention that groups whose priors are drawn from a \(\beta\)-distribution less sparse around the average \(0.5\) are more likely to reach a consensus after discussion compared to the groups that we consider below. This is an unsurprising consequence of the fact that, as the agents’ priors become more similar and approach the neutral point \(0.5\), the disagreement between agents in the evaluation of the evidence decreases.

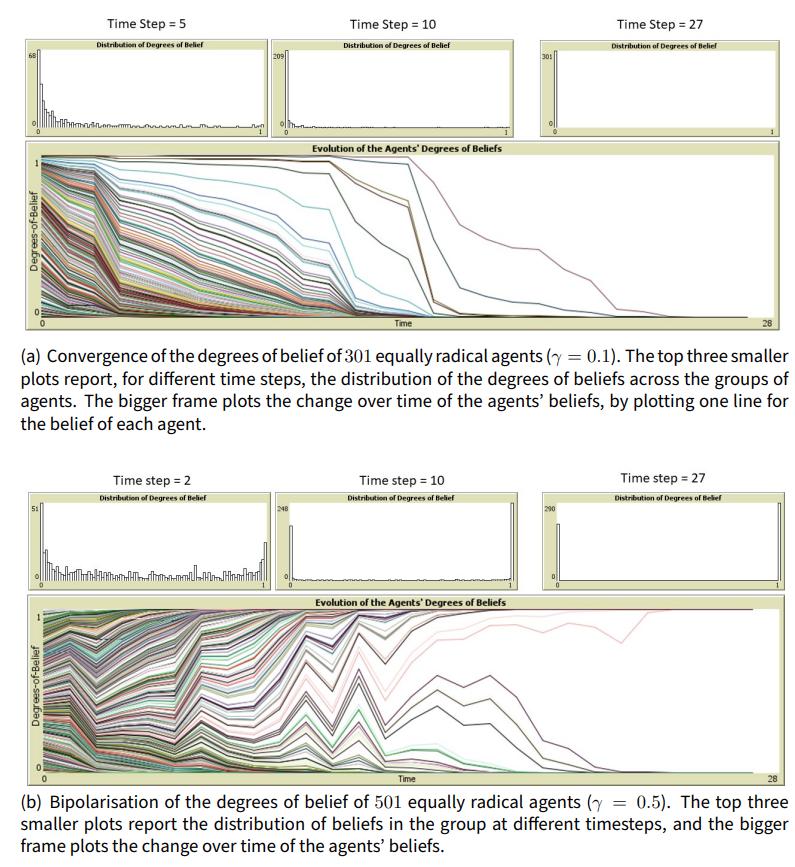

Two kinds of pattern can characterise the macro-level dynamics of group discussion for all types of groups that we considered (homogeneous groups, heterogeneous groups with a common radicality distribution, and heterogeneous groups with two distinct radicality distributions). The first kind of macro-level pattern is characterised by the convergence of the agents’ beliefs towards very high or very low degrees of belief in the correct answer. This pattern typically results from discussion in groups of smaller size with only mildly radical agents; for instance, as illustrated in Figure 7(a), in homogeneous groups this pattern is frequent for small group sizes where the radicality parameter \(\gamma\) is smaller than \(0.3\).

The second pattern that we registered is that of bipolarisation, illustrated in Figure 7(b), which occurs in larger discussion groups, or in groups with strongly biased agents. For the case of homogeneous groups, bipolarisation becomes the most frequent pattern, for instance, if a group of mildly biased agent (generally, \(\gamma<=0.3\)) is large enough, or if agents become more radical.

We also note that in the experiments we conducted, conversations can be rather short also in larger groups and they can converge to a consensus or a non-consensus state quite fast. This is an unsurprising consequence of the fact that agents are as likely to present stronger arguments as to present weaker arguments in support of their case: when very strong reasons are presented, then conversations can be rather quick and sway many discussants to support the arguer’s side. On the other hand, when less decisive arguments are provided, the conversation can last longer and be more divisive. Again, we found that simulations where weaker or stronger arguments (confirmatory and disconfirmatory) were made more or less frequently showed qualitatively alike trends to the cases we analyse below, in which arguments of different strength are equally likely to occur.

In each of the experiments below, we analyse the data taken from 30,000 iterations of the simulation for each given pair of group size and distribution of the radicality parameter \(\gamma\).

Experiment 1: Homogeneous groups

As stated above, we conducted a first experiment on homogeneous groups of agents, where all agents are assigned the same value of the radicality parameter \(\gamma\). Recall also that the priors of the agents are drawn from uniform distributions, and that arguments of all strengths are equally likely to be drawn. We run simulations for groups of different sizes (\(11, 21, 31, 51, 101, 301\) and \(501\)) and different values of \(\gamma\) (\(0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8\) and \(0.9\)), where \(0\) means ‘no bias’. Note indeed that Equation (3) and Equation (1) are equivalent if we assign the value \(0\) to \(\gamma\). Indeed, if we plug in \(0\) for \(\gamma\) in the expression of the perceived likelihood ratio \(x'\) given in Definition 1, we obtain that \(x'(x,b) = x\), for any value of \(b\). In turn, by plugging in \(x\) for \(x'(x,b)\) in Equation (3), we obtain Equation (1). Thus the case of a non-biased update can be recovered as a myside-biased update where \(\gamma\) is assigned value \(0\).

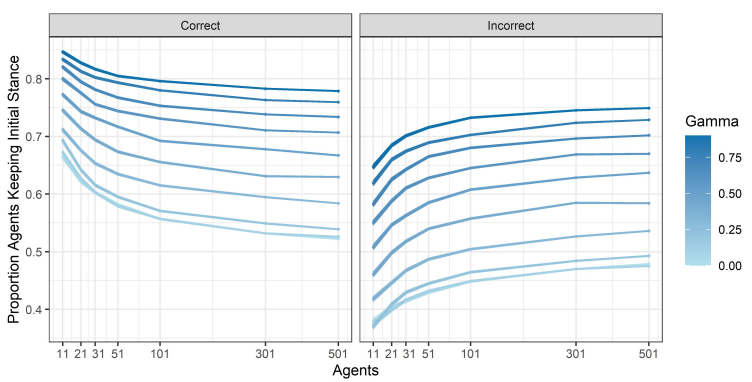

Effects of myside bias and group size on the discussion

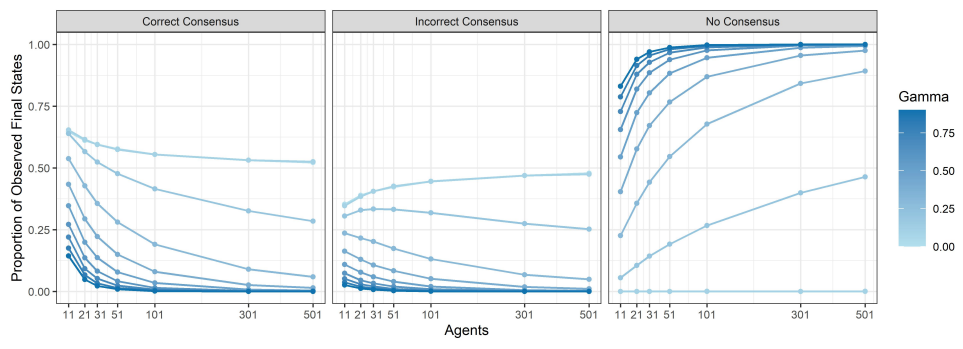

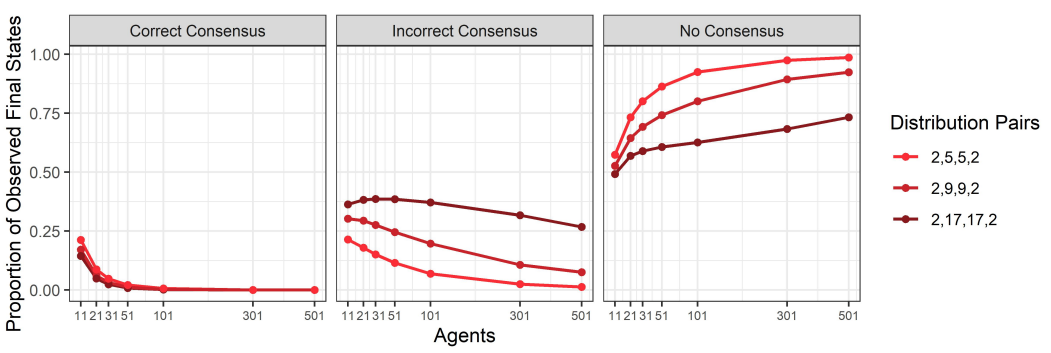

Let us now analyse the effects of myside bias on several features of the discussions that agents conduct. We start by looking at the distribution of correct, incorrect and no consensuses for each combination of group size and value of \(\gamma\), which is summarised in Figure 8.

Two effects can be clearly observed, one determined by the parameter \(\gamma\) and the other determined by group size. First, for a fixed value of group size, as the value of \(\gamma\) increases (moving from lighter to darker shades of blue), non-consensus states become generally more frequent. It is, however, worth noticing that there is no substantial difference between the no-bias case and the case in which \(\gamma=0.1\) (the two lines overlap), although for \(\gamma=0.1\), a minuscule number of non-consensus states has been observed. This is consistent with the fact that cases without bias and cases with \(\gamma > 0\) are qualitatively different in that if there is no bias, then a consensus state will always be reached.

Second, for a fixed value of \(\gamma > 0\), as group size increases, non-consensus states become more likely, with both correct consensuses and incorrect consensuses becoming less frequent. Nevertheless, if we look at the case of \(\gamma = 0.1\), non-consensus states are hardly ever reached. In such a case, as group size increases, the number of correct consensuses decreases, while the number of incorrect consensuses increases.

The considerations above suggest the following picture. First, the more radical actors become, the more difficult it is for them to agree on one side of the issue, whether it is the right side or the wrong side. Moreover, this inability to reach consensus seems to have a similar effect on the ability to converge to a correct answer as it does on the ability to converge to an incorrect answer, i.e., for a fixed group size, there is no noticeable difference in the way the number of correct consensuses and incorrect consensuses decreases with increasing radicality. On the other hand, at least for low levels of radicality, it appears that increasing the group size of discussants gives the incorrect consensus an advantage over the correct consensus.

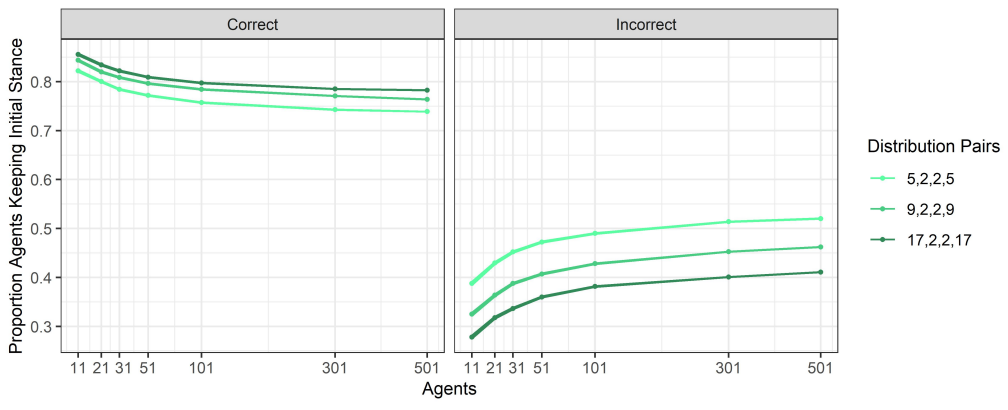

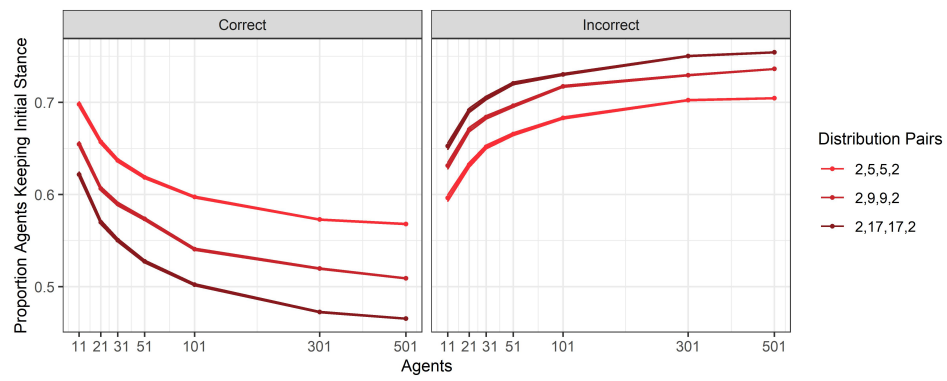

We can better understand these effects by considering how discussion changes for different values of \(\gamma\) and group sizes, which we summarise in Figure 9. Figure 9 shows, for each combination of \(\gamma\) and group size, the proportion of correct and incorrect discussants at the beginning of the conversation who maintained their initial position on the topic at the end of the discussion.1 Again, two clear effects can be seen, one determined by the value of \(\gamma\) and one by group size.

First, it is clear that, for fixed group size, as the value of \(\gamma\) increases (moving from lighter to darker shades of blue), more and more agents keep their initial stance. This is true for both those agents that are initially correct and for those agents that are initially incorrect.

So far, it seems that the effect of increasing the radicality of the agents mainly impacts the effectiveness of the discussion to actually change the agents’ minds. As agents become more radical, arguments become less of a determinant factor in the way agents form their beliefs.

The second effect has to do with group size. In particular, we observe that, for a fixed value of \(\gamma\), as group size increases, the proportion of initially correct agents that mantain their initial correct belief decreases (left panel of Figure 9). This means that, as group size increases, the proportion of initially correct agents that are swayed into adopting the incorrect belief increases; at the same time, the proportion of initially incorrect agents that stick with their initially incorrect belief increases (right panel of Figure 9).

As the size of the discussion group increases, no matter how radical in their bias the agents are, increasingly more agents that are correct are persuaded of the incorrect alternative, while less incorrect agents are persuaded to switch to the correct alternative. This is an effect of the fact that, while in smaller groups, the proportion of initially correct agents is often considerably larger than that of the initially incorrect agents, on average competent groups of larger sizes do not have this feature. Thus, as group size increases, incorrect agents become increasingly more likely to speak at the beginning of the conversation. As a consequence, in larger groups, initially incorrect agents are more often able to anchor agents with milder preferences for the correct side of the issue into favouring the incorrect side of the issue. This anchoring effect is a result of the non-commutativity of the update rule determined by Equation 3, which we will discuss in more detail in the Section 6.

To sum up, our findings on the results of myside bias and group size in group discussions were two-fold: first, we noticed that increasing the radicality of the bias leads on average more agents to stick with their initial beliefs, which in turns translates into non-consensus states being the increasingly more frequent outcome of a discussion; second, we found that as group size increases, initially correct agents tend on average to be more often swayed to the incorrect side of the issue, which has the effect of making correct consensuses increasingly less frequent in favour of incorrect consensuses.

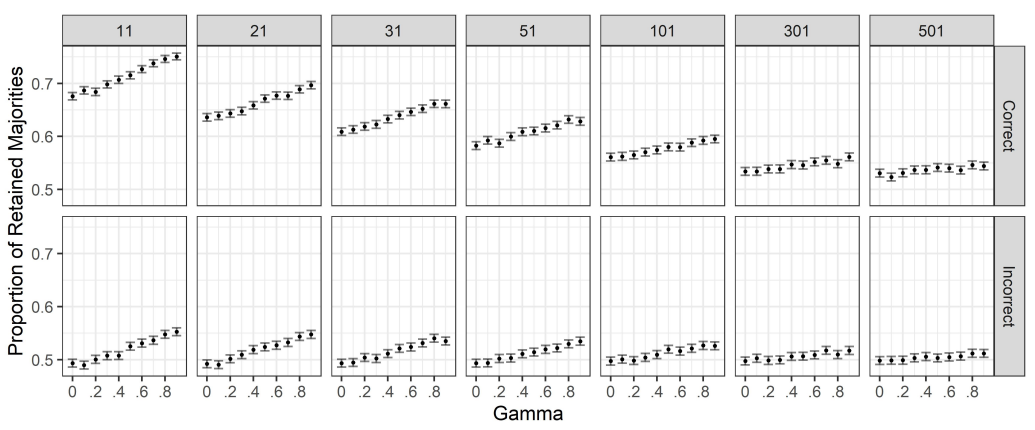

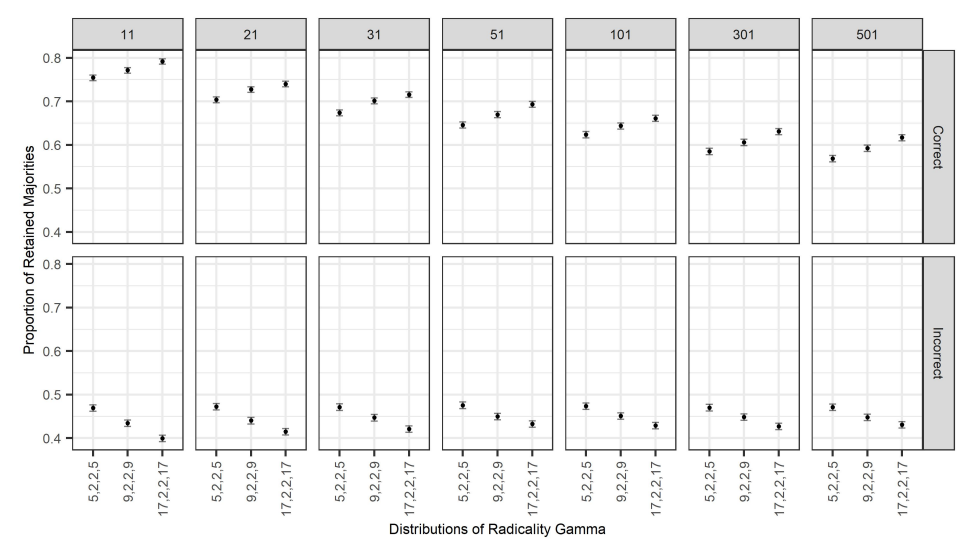

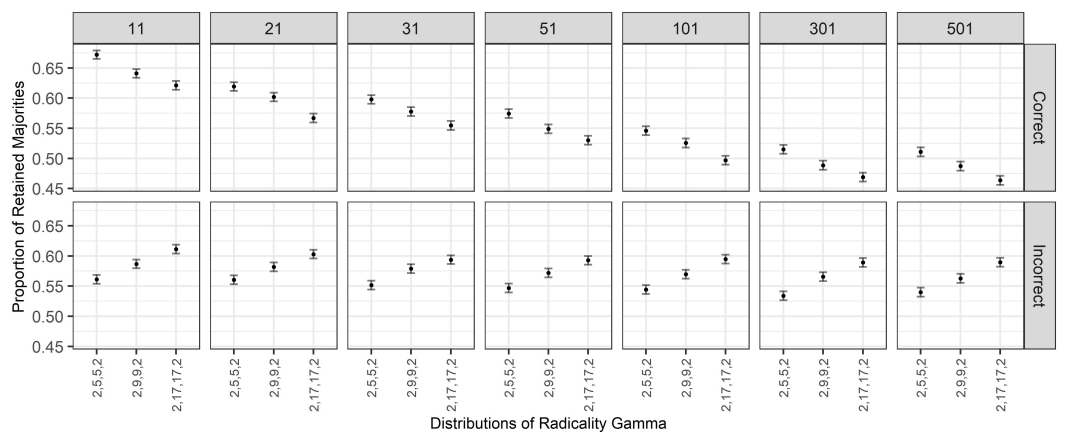

The effects on truth-tracking

Recall that we are interested in the impact of group discussion on belief aggregation based on majority rule. In particular, we are interested in understanding whether discussion is detrimental or beneficial to collective decisions determined by majority rule. In this direction, we start by looking at the average proportion of correct majorities that are retained after discussions, and the number of correct majorities that are lost after discussions. We do the same for those majorities that are incorrect at the start, i.e., for those cases in which the majority of the group favours the incorrect alternative. This way we obtain an overview of the ability or inability of group discussion to retain old correct majorities and acquire new correct majorities.

Results are shown in Figure 10. As for the case of the feature of the discussion, we unsurprisingly observe a clear effect of group size on the proportion of retained correct majorities. Indeed, for a fixed value of the radicality of the bias, we see that, as group size increases (from the leftmost panel to rightmost), the proportion of initially correct majorities that are retained decreases. On the other hand, increasing group size seemingly leaves the proportion of the retained incorrect majorities almost unaltered, except for high values of \(\gamma\).

This suggests that larger groups perform worse than smaller groups when it comes to obtaining correct majorities during a discussion. This is consistent with the fact mentioned above that as group size increases, it becomes more likely that an originally correct representative with a less strong conviction will switch to the wrong alternative.

We also find that for smaller group sizes, the number of correct majorities that are retained increases as the bias of the agents becomes more radical; simultaneously, we observe that this effect disappears in larger groups. Similarly, the number of false majorities that are retained also appears to increase with the same trend. Again, this points to the fact observed above that the discussion becomes less effective as the representatives become more radical. On the other hand, as group size increases, the proportion of both correct and incorrect majorities that are retained does not change with the value of \(\gamma\).

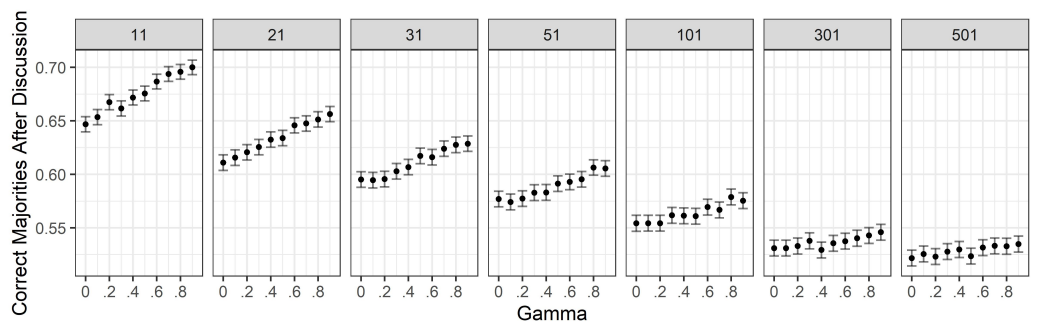

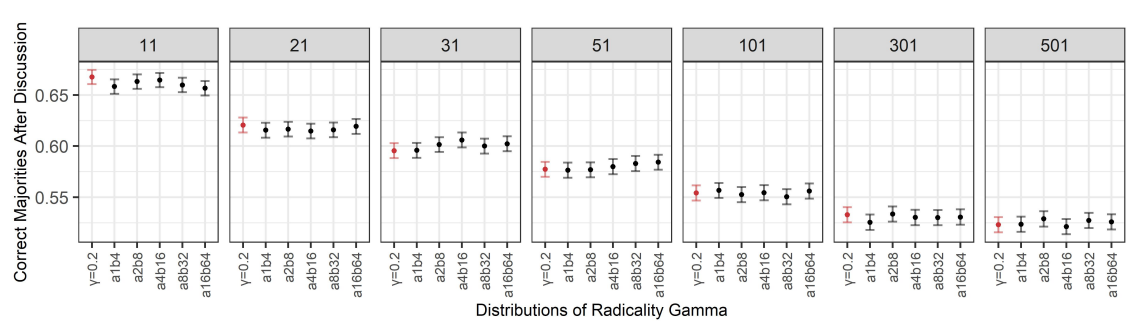

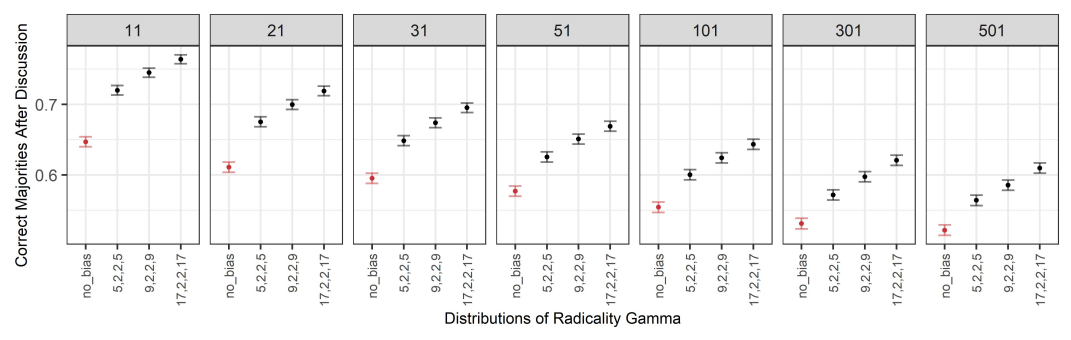

Finally, we measured the proportion of correct majorities after discussion (Figure 11), and we then compared it with the proportion of correct majorities before discussion. Comparing the proportion of correct majorities before discussion to the proportion of correct majorities after discussion is useful in giving us an indication of how likely it is to have a correct majority before the conversation versus after the conversation. This helps us to check under which conditions, if any, discussion in groups is more beneficial than mere belief aggregation via majority rule before discussion.

In order to estimate the likelihood of correct majorities before discussion, we calculated, for each group size, the proportion of correct majorities before the start of the discussion and checked whether there were statistically significant differences in their values across different group sizes. The proportions of correct majorities shows a decreasing tendency with increasing group size; the difference in the proportions across group sizes was found to be significant between groups of \(11\) agents and group sizes greater than or equal to \(31\) agents, and between groups of \(21\) agents and group sizes greater than or equal to \(101\).2

We then noticed that the range of the values assumed by the proportion of correct majorities before discussion across different group sizes is very small (\(0.842\pm0.002\) (\(99\%\) confidence) for \(11\) agents, and \(0.833\pm0.002\) (\(99\%\) confidence) for \(501\) agents), while the range of the values assumed by the proportion of correct majorities after discussion across different group-sizes is much larger (for instance, for \(\gamma=0\), \(0.647\pm0.007\) (\(99\%\) confidence) for \(11\) agents, and \(0.522\pm0.007\) (\(99\%\) confidence) for \(501\) agents). For these reasons, in order to simplify our analysis, we computed the proportion of correct majorities at the start over the aggregate data of all group sizes, and found it to be approximately \(0.836\) (\(\pm0.001\), \(99\%\) confidence). We then took this aggregate value to represent the likelihood of a correct majority before discussion for any group size, and used it as a benchmark against which to compare the likelihood of correct majorities after discussion. Indeed, given the much larger scale of the differences in the proportion of correct majorities after discussion (compared to the differences before discussion) across different group sizes, this aggregate value still allowed us to meaningfully detect group-size dependent effects on the likelihood of correct majorities after discussion compared to their likelihood before discussion.

The proportion of correct majorities after discussion is reported in Figure 11. First, note that for all combinations of group size and radicality of the bias, the proportion of correct majorities after discussion is never above the aggregate average value of \(0.836\). This means that correct majorities are always less likely after a discussion than before the discussion. This is true for groups with unbiased discussants as well as for groups with biased discussants.