Stakeholder Engagement: An Expertise-Centred Approach

University College Dublin, Ireland

Journal of Artificial Societies and Social Simulation 28 (3) 10

<https://www.jasss.org/28/3/10.html>

DOI: 10.18564/jasss.5741

Received: 27-Jun-2024 Accepted: 20-Jun-2025 Published: 30-Jun-2025

Abstract

With the popularisation of empirically calibrated models and the increasing interest in making computer simulation more useful and impactful, engaging with stakeholders has progressively become an attractive alternative during the modelling process in agent-based social simulation. A common justification for involving stakeholders is that they contribute expert knowledge. While common, the text argues, this justification is somewhat misleading, for it is informed by an account of expertise primarily centred on subject-matter competence and superior performance. This article, thus, takes an expertise-centred approach to analyse more broadly what makes the involvement of stakeholders warranted and how their expertise can be better incorporated into the modelling process. The analysis suggests that the current conceptualisation of stakeholder engagement in agent-based social simulation could greatly benefit from further clarifying: (i) the multiple sources and contents of stakeholder expertise, (ii) the role that computational models play in the retrieval and enactment of expertise, and (iii) the impact of recognition and attribution of expertise on the modelling process.

Introduction

With the popularisation of empirically calibrated models and the increasing interest in making computer simulation more useful and impactful, engaging with stakeholders has progressively become an attractive alternative during the modelling process in agent-based social simulation. These stakeholders, it is often argued, can help overcome crucial methodological challenges, such as the paucity of data available for calibration and validation, and increase the contextual relevance and accuracy of models (Barreteau et al. 2016; Elsawah et al. 2015; Gilbert et al. 2018; Scherer et al. 2015; Schulze et al. 2017; Voinov et al. 2016; Voinov & Bousquet 2010).

The agent-based social simulation literature does not elaborate extensively on why involving stakeholders can improve computational modelling. A common justification for this belief, likely introduced into agent-based modelling via broader stakeholder engagement and knowledge elicitation practices[1], is based on presumptions of expertise: it is worth engaging with stakeholders because of the knowledge that can be leveraged from them. Their involvement in the modelling process is welcome because they contribute expert knowledge (Krueger et al. 2012; Morgan 2014; O’Hagan et al. 2006).

This article focuses on expertise and its instantiation in dynamics of knowledge transfer and exchange to argue that, while common, this justification for the inclusion of stakeholders in the modelling process is misleading, since it is disproportionately influenced by an account of expertise centred on subject-matter competence and superior performance. Overall, the goal is to show that richer and more diverse practices of stakeholder engagement could be implemented in agent-based social simulation by explicitly accounting for: (i) the multiple sources and contents of stakeholder expertise, (ii) the role that computational models play in the retrieval and enactment of expertise, and (iii) the impact of recognition and attribution of expertise on the modelling process.

Four clarifications are warranted at this point. First, ‘stakeholder engagement’ will be used broadly to describe any type of participation and involvement of stakeholders in the modelling process, recognising that alternative terms (which, some argue, are not interchangeable; e.g., Basco-Carrera et al. 2017) are used in the literature. Second, the text addresses how agent-based social simulation is improved by engaging with stakeholders. The discussion is thus limited in scope, since stakeholder engagement practices need neither use computer simulation nor rely strongly on it methodologically (Moallemi et al. 2021; Voinov et al. 2018)[2]. Third, some research has focused on developing tools and strategies to address issues of clarity and transparency when communicating and interacting with stakeholders (e.g., Bommel et al. 2014; Giabbanelli et al. 2017; Hassenforder et al. 2015; Laatabi et al. 2018; Le Page et al. 2012; Polhill & Salt 2016; Scherer et al. 2015). This research will not be dealt with in the paper, since tools and strategies are relatively agnostic with respect to conceptualisations of knowledge and expertise. Fourth, the text will not elaborate extensively on non-epistemic determinants of engagement dynamics, e.g., interests, power, and ideologies, although their influence will be highlighted where relevant.

The discussion advanced is organised along three major questions: (i) who is an expert?, (ii) how can experts tap into their expertise?, and (iii) how is expertise incorporated into the modelling process? These questions are used to develop a stepwise characterisation of expertise that goes beyond its standard interpretation as subject-matter competence and individual accomplishment, by bringing together research on social simulation, stakeholder engagement, knowledge elicitation, and expert performance. This stepwise approach also provides insights that accommodate different levels of familiarity with the involvement of stakeholders in the modelling process. The first question sheds light on how current stakeholder engagement practices bring to the foreground issues related to the source of expertise. The second question moves past current practices to inquire more broadly into the role of agent-based modelling in knowledge elicitation and exchange dynamics. Finally, the third question encourages reflection on how dynamics of recognition and attribution of expertise during the modelling process moderate the effectiveness of stakeholder engagement.

Who Is an Expert?

Although a univocal definition is lacking, expertise has traditionally been understood in terms, on the one hand, of an identifiable outcome, e.g., superior performance, and, on the other hand, of behaviours that demonstrate or instantiate a comparatively advantageous mastery (Ward et al. 2020). Stakeholders, thus, would be expected to improve a computer simulation because they command distinctive knowledge and skills that the research team deems important for the fulfilment of a variety of modelling features and functions, such as clarity, transparency, validation, legitimacy, or trustworthiness (Barreteau et al. 2016; Gilbert et al. 2018; Schulze et al. 2017; Voinov et al. 2016). Since expertise is not evenly distributed in the population, and different expertises are unlikely to prove equally effective for every modelling feature and function, expert selection becomes one of the most critical design decisions in stakeholder engagement practices.

A first sense of expertise: its source and content

The stakeholder engagement literature is ambiguous about who should be considered an expert, partly because a precise definition is missing. Some authors distinguish between ‘experts’ and ‘stakeholders’, because they link expertise to a distinctive set of scientific or technical skills and competence (Bijlsma et al. 2011; Chow & Sadler 2010; Krueger et al. 2012). Other authors, conversely, differentiate expertises based on the experts’ position in the system, e.g., ‘local’ vs ‘external’, or on the source of expertise, e.g., ‘technical or scientific’ vs ‘lay, traditional, or indigenous’ (Bohensky & Maru 2011; Chow & Sadler 2010; Fischer 2000; Voinov et al. 2016). Even though, as will be discussed below, these distinctions rest on different assumptions, two major types of expertise are identifiable overall in the stakeholder engagement literature. ‘Expert’ is used to denote, first, individuals with specialised training, certification, and experience and, second, individuals with informal subject-matter mastery over a topic of interest. For the sake of simplicity, these two types of expertise will be referred to from here onwards as formal and informal, respectively[3].

Although expertise has historically been associated more often with its formal variety, especially in contemporary techno-bureaucratic societies (Grundmann 2017; Mieg & Evetts 2018), previous stakeholder engagement practices have been instrumental in bringing informal expertise to the foreground. Informal expertise has often been acknowledged as an alternative source of information for phenomena in which there is a paucity of data. This paucity has been characterised in two ways: as a general lack of data or as a lack of contextually relevant data. When the concern is the latter, the value of informal expertise is usually defined either intrinsically, e.g., by acknowledging the qualitatively different nature of the knowledge linked to this expertise[4], or extrinsically, e.g., in relation to some methodological or technical limitations of formal expertise.

Since the distinction between formal and informal expertise is not standard in the agent-based social simulation literature, further work is needed to better characterise these expertises and their potential contribution to stakeholder engagement practices. Currently, there are no widely agreed-upon indicators of informal expertise, as evidenced by the separation between stakeholders and experts made by some authors. In turn, while formal expertise indicators are relatively standard, they are not entirely reliable. The social simulation literature does not forcefully question the use of formal experts. However, research on knowledge elicitation has shown, among other things, that common indicators of formal expertise, such as years of experience, number of publications, and academic qualifications, do not necessarily lead to superior performance (Burgman et al. 2011) and that formal experts similarly ranked by these indicators can often disagree (Morgan 2014; O’Hagan et al. 2006).

It is equally worth analysing the potential interplay between and within formal and informal expertise in stakeholder engagement practices, especially in instances where differences in expertise are recognised and acted upon by stakeholders during the modelling process. Expert interaction and collaboration, particularly in traditional interdisciplinary research, are regularly understood in terms of complementarity of expertise (de Ridder 2014; Holbrook 2013; Wagenknecht 2016). In stakeholder engagement practices, however, the interaction between participants need not be framed in terms of complementarity of expertise, and it may, voluntarily or involuntarily, deviate from it. The participation of informal experts, for example, could be deliberately framed, or could evolve, as a countermeasure, rather than a complement, to formal experts. In this case, the usefulness of an expertise would hinge on aspects beyond individual competence, and measures should be taken to prevent the relationship between experts from becoming contentious and unproductive.

Variations within informal expertise

The many success cases reported in the literature showcase the benefits of engaging with informal experts. The source of these experts’ credibility, however, is difficult to pin down, in part because the current conceptualisation of stakeholder engagement does not sufficiently differentiate between subtypes of informal expertise. As mentioned above, terms such as ‘local’, ‘lay’, ‘indigenous’, or ‘traditional’ are used when referring to informal knowledge and expertise (Bohensky & Maru 2011; Chow & Sadler 2010; Fischer 2000; Voinov et al. 2016). While the terms are often used interchangeably, they are not entirely equivalent. For instance, local or lay expertise may be attributed to non-formally certified individuals with high competence in knowledge and skills typically linked to a professional domain. In contrast, traditional and indigenous expertise are truly local, i.e., they belong to a particular social group, and their associated knowledge differs from specialised scientific knowledge in how it is developed (e.g., through everyday human experience), preserved (e.g., orally), and socially transmitted (e.g., through imitation) (Bohensky & Maru 2011; Fischer 2000). Compared to traditional expertise, in turn, indigenous expertise involves additional traits of self-identity, occasionally with strong historical roots (Magni 2017).

Further work on the characterisation of informal subtypes of expertise is warranted since, given the thematic diversity in agent-based social simulation, practitioners are likely to face radically different landscapes of informal expertise. First, depending on the topic (and the modelling goals), there might be multiple patterns of interaction between informal and formal expertise. Subtypes of informal expertise can differ in the extent to which they: (i) involve conflicting epistemic values and worldviews that make their integration with formal expertise challenging or questionable, (ii) require bringing to the foreground issues of epistemic power and domination, (iii) call for alternative engagement methods, and (iv) are considered worth including for reasons beyond the adequacy of the knowledge they provide, e.g., preservation or social justice (Bijlsma et al. 2011; Bohensky & Maru 2011; Chow & Sadler 2010; Fischer 2000; Renger et al. 2008; Voinov et al. 2016).

In addition, informal expertise subtypes are highly contextual. For instance, traditional and indigenous expertise are uncommon for decidedly novel phenomena. There is no indigenous expertise, particularly in the most restrictive sense of the term, in digital technologies. Certainly, it is possible to develop informal expertise in the subject. However, this expertise will not be related to longstanding and culturally unique alternative worldviews of digital technologies. Similarly, ‘local’ might have a more intricate interpretation in contemporary large-scale phenomena. What counts as ‘local’ in worldwide dynamics such as COVID-19? Various hierarchised and interconnected geographically ‘local’ levels might exist, depending on the focus. In turn, an expertise might be deemed ‘local’ for a variety of reasons. Expertise in Latin America could be deemed local because of the cultural similarities between the countries in the region; in Europe, instead, because of their formal political and economic integration.

Finally, not every subtype of informal expertise is developed and exerted in the same way. For example, match prediction, most notably in football, has been used to test the prediction performance of experts and laypeople. Previous research has produced conflicting results because the delimitation of expertise in this context is not straightforward (Butler et al. 2021; O’Hagan et al. 2006). Since no formal match-prediction expertise exists, research on the topic has relied on a heterogeneous set of experts, including, among others, journalists, coaches, and gamblers, who evidently possess different expertise. Although informal expertise can certainly be attributed to these groups, there are no standardised criteria for comparing the claims that individuals belonging to these groups (and the groups themselves) can make to ‘match prediction’ expertise.

The scope and reliability of expertise

The use of experts for match prediction faces challenges not only in commensurating alternative informal ‘match prediction’ expertises, but also in assessing their scope and reliability. Along with the question of whether one of these informal expertises is decidedly better, there is the question of what predictive accuracy the best experts could achieve. Some modelling goals that are currently common in stakeholder engagement, e.g., communication or decision-making, are only weakly connected, if at all, with expert performance. This connection, however, is stronger for goals such as prediction, for which increasing the predictive adequacy and accuracy of a simulation will likely be a major driver of stakeholder engagement.

Since no expertise can guarantee perfect prediction capabilities, assessing the (contextual) scope and reliability of an expertise is needed to moderate expectations in stakeholder engagement practices. While prediction is a common driver of stakeholder engagement in other disciplines (Burgman et al. 2011; O’Hagan et al. 2006; O’Hagan 2019), it could be questioned whether there is expertise with truly predictive capabilities for social phenomena and in the social sciences (Anzola & García-Díaz 2023; Morgan 2014). Certainly, there are experts in social science, and these experts constantly make predictions about social phenomena with varying degrees of success. Currently, however, it is not clear how the well-documented limitations for prediction in both agent-based social simulation (Anzola 2021b; Elsenbroich & Polhill 2023; Polhill et al. 2021) and social science (Byrne 1998; Martin et al. 2016; Salganik et al. 2020) should be accounted for when involving stakeholders in the modelling process.

When the modelling goals are strongly connected to performance, delimiting the scope and reliability of an expertise requires, on the one hand, characterising the types of performance that are possible, expectable, and acceptable with the aid of expert knowledge and, on the other hand, identifying mechanisms that make the link between knowledge input and simulation output transparent. In the case of prediction, for example, assessments of expert performance will differ depending on whether stakeholders are expected to make point predictions, anticipate trajectories of complex social phenomena, or provide knowledge that the research team separately turns into predictions (before or after the computational model is implemented). Similarly, when predictions fail, researchers should have means, at least in principle, to discern whether expert knowledge is the cause (Anzola & García-Díaz 2023). Ultimately, clarifying the scope and reliability of expertise in social simulation is necessary to prevent the misuse and misattribution of expertise.

In addition to modelling goals, the delimitation of the scope and reliability of an expertise should be informed by the context in which that expertise is acquired and exerted. Individual expertise can be radically shaped by fortuitous circumstances. For example, several formally trained and certified healthcare professionals acquired valuable informal expertise in epidemiology after being redeployed to new roles during the COVID-19 crisis. Researchers should be mindful of these circumstances, particularly when they lead to the development of additional expertises, first, because experts themselves might not be fully able to differentiate them and, second, because some design elements in the engagement practice could be manipulated to influence which expertise they tap into.

Institutional constraints should be equally acknowledged when assessing the scope and reliability of both formal and informal expertise. For example, research on policy advisory systems shows that, in expert policy advice (i.e., where scientists provide their academic expertise to policymakers) and public administration (i.e., where expert public servants provide their professional expertise to politicians), individuals tap into their expertise based on, on the one hand, how incentives, authority, and accountability are institutionally managed and, on the other hand, the role and status of experts in policy advising dynamics (Carpenter & Krause 2015; Christensen 2021; Craft & Howlett 2013; Jasanoff 2003). In stakeholder engagement practices that support policy- and decision-making, thus, expert public servants might not be able to leave their institutional role behind and act exclusively as individual experts.

Overall, then, although the current conceptualisation of stakeholder engagement in the agent-based social simulation literature has rightfully brought informal expertise to the foreground, our understanding of the determinants and enactment of this expertise is underdeveloped, as only a relatively narrow set of informal expertise subtypes and modelling goals is usually considered. Looking forward, practitioners interested in using stakeholder engagement might benefit from asking:

- What expertises, formal and informal, are available, and how do they differ (especially within informal expertise)?

- How can informal expertise be assessed?

- Under what conditions can stakeholders’ expertise improve the simulation process?

- Do stakeholders command more than one expertise?

- If so, what expertise are they expected to contribute?

How Can Experts Tap into Their Expertise?

The relevance and quality of the knowledge elicited from an expert, regardless of the type of expertise, are strongly influenced by the elicitation environment. Previous research indicates that experts often rely on tacit knowledge and context-dependent strategies and heuristics when tapping into their expertise (Gigerenzer 2008; Mosier et al. 2018; Ward et al. 2018). Given the increasing interest in stakeholder engagement in agent-based social simulation, and the potentially intricate landscape of expertise researchers might find, it may be worth discussing in more depth how the modelling process can be set up to help stakeholders successfully deploy their expertise.

A second sense of expertise: its retrieval and enactment

The stakeholder engagement literature in agent-based social simulation has not paid significant attention to the elicitation environment, in part because the topic has been relatively neglected by studies on expertise and expert performance. Historically, mastery and individual superior achievement have been analysed in ways that conflate the environments in which expertise is acquired and deployed. Stakeholder engagement, however, might require experts to retrieve knowledge in atypical deployment environments. Awareness of the context-bounded nature of expertise has recently encouraged several methodological developments and general recommendations in the contemporary knowledge elicitation literature. Many of them can be readily applied in any stakeholder engagement scenario in agent-based social simulation. Others, however, should be the object of critical reflection and discussion.

Arguably, the most useful recommendation is to differentiate between substantive and normative expertise. The former is a type of expertise associated with the command of specialised knowledge; the latter, with the ability to adequately retrieve and use that knowledge (Bolger 2018; O’Hagan et al. 2006). For example, in the climate sciences, where the separation between substantive and normative expertise has been more explicitly documented, substantive expertise refers to subject-matter knowledge about the phenomenon of interest: climate change, whereas normative expertise refers to the ability to express that knowledge through statistically standard and appropriate principles, methods, and procedures e.g., probability distributions for severe weather events. The distinction between these two types of expertise was previously overlooked in research on expertise and knowledge elicitation because probability distributions were most frequently elicited from formal experts with high competence in both climate phenomena and mathematics, so their normative expertise was never questioned. Empirical analyses of various contexts of elicitation, however, have shown that not every substantive expert is a normative expert and that neglecting normative expertise increases the risk of elicitation errors and biases, such as overconfidence (Lin & Bier 2008; O’Hagan et al. 2006; Speirs-Bridge et al. 2010).
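Overconfidence of the kind just mentioned is commonly diagnosed in the elicitation literature by asking experts for credible intervals on questions whose answers the facilitator already knows (so-called seed questions) and comparing the share of intervals that contain the realised value with the nominal coverage requested. The sketch below illustrates that comparison only; the `IntervalJudgement` structure, the function names, and the five judgements are hypothetical and made up for illustration, and the sketch is not a procedure taken from the studies cited above.

```python
from dataclasses import dataclass


@dataclass
class IntervalJudgement:
    """One expert's credible interval for a seed question (hypothetical structure)."""
    lower: float
    upper: float
    true_value: float  # realised value, known to the facilitator but not the expert


def hit_rate(judgements: list[IntervalJudgement]) -> float:
    """Share of intervals that contain the realised value."""
    hits = sum(j.lower <= j.true_value <= j.upper for j in judgements)
    return hits / len(judgements)


def overconfidence_gap(judgements: list[IntervalJudgement], nominal_coverage: float = 0.9) -> float:
    """Nominal coverage minus observed hit rate; positive values suggest intervals that are too narrow."""
    return nominal_coverage - hit_rate(judgements)


# Made-up answers from a single expert asked for 90% intervals on five seed questions.
expert = [
    IntervalJudgement(10, 20, 15),
    IntervalJudgement(0.2, 0.4, 0.5),
    IntervalJudgement(100, 150, 120),
    IntervalJudgement(3, 5, 7),
    IntervalJudgement(40, 60, 55),
]
print(f"hit rate: {hit_rate(expert):.2f}")                      # hit rate: 0.60
print(f"overconfidence gap: {overconfidence_gap(expert):.2f}")  # overconfidence gap: 0.30
```

Here three of the five intervals contain the realised value, so intervals labelled as 90% behave more like 60% intervals. A small number of seed questions gives only a rough signal, which is one reason why such checks are usually paired with training or aggregation rather than used on their own.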

The substantive-normative dichotomy neither substitutes for nor entirely overlaps with the formal-informal one. Both formal and informal expertise are better accommodated by the notion of substantive expertise, as they are usually accredited through mastery over a body of knowledge. It is not that some formal and informal experts lack command of normative expertise, but, rather, that subject-matter knowledge has more commonly been used as the defining trait of expertise (because of: (i) the historically higher status given to scientific knowledge, (ii) the relatively standard mechanisms to certify competence in scientific knowledge, and (iii) the select group of individuals who were able to claim command of this knowledge). In spite of the historical emphasis on substantive expertise, it is normative expertise that individuals tap into when retrieving knowledge and ‘putting it to work.’ Normative expertise, ultimately, is what makes expert knowledge actionable.

An additional noticeable difference is that, unlike formal and informal expertise, substantive and normative expertise are not alternatives that researchers can or must choose from in stakeholder engagement practices. Instead, they are complementary expertises that are equally needed for expert performance. Considerations about how each of them is accommodated by an engagement environment, thus, will naturally impinge on multiple methodological decisions. For example, in knowledge elicitation scenarios, it is common for experts to be selected on substantive criteria, leaving normative expertise to be accounted for methodologically. As a result, several tools and strategies have been devised to methodologically minimise (e.g., through preselection filters and training) or mitigate (e.g., through expert knowledge aggregation) potential problems with normative expertise (Aspinall & Cooke 2013; Bolger 2018; Garthwaite et al. 2005; O’Hagan et al. 2006).
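To make the idea of mitigation through aggregation more concrete, the sketch below implements a linear opinion pool, one standard aggregation rule in the elicitation literature, which takes a weighted average of the experts’ probability distributions. It assumes that each expert’s judgement has already been elicited as probabilities over the same set of discrete outcomes. Equal weights are the default here by assumption; performance-based weights, such as those derived from seed questions in Cooke’s classical model, are one alternative. The function name and the three judgements are hypothetical.

```python
def linear_opinion_pool(distributions, weights=None):
    """Aggregate discrete probability distributions by a weighted average.

    distributions: list of lists, one per expert, each over the same outcomes.
    weights: optional list of non-negative weights summing to 1 (equal by default).
    """
    n = len(distributions)
    if weights is None:
        weights = [1.0 / n] * n  # equal weighting: an assumption, not a recommendation
    if len(weights) != n or abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("need one weight per expert, summing to 1")
    k = len(distributions[0])
    pooled = [0.0] * k
    for w, dist in zip(weights, distributions):
        if len(dist) != k or abs(sum(dist) - 1.0) > 1e-9:
            raise ValueError("each distribution must cover the same outcomes and sum to 1")
        for i, p in enumerate(dist):
            pooled[i] += w * p
    return pooled


# Hypothetical judgements from three experts over the outcomes (low, medium, high).
experts = [
    [0.6, 0.3, 0.1],
    [0.2, 0.5, 0.3],
    [0.4, 0.4, 0.2],
]
print([round(p, 3) for p in linear_opinion_pool(experts)])  # [0.4, 0.4, 0.2]
```

Averaging can dampen the idiosyncratic errors of individual experts, but it does not correct a bias that all of them share. The choice of weights, moreover, is itself a decision about whose expertise counts, which connects aggregation to the questions of recognition and attribution raised in this article.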

The current conceptualisation of stakeholder engagement in agent-based social simulation could benefit from incorporating the distinction between substantive and normative expertise, first, because, as mentioned, not every substantive expert is also a normative expert and, second, because, in these scenarios, means and goals of elicitation are usually chosen and controlled by the research team, rather than the stakeholders. Practitioners, then, should deliberately assess stakeholders’ normative expertise, since, when properly accounted for, this expertise supports more natural dynamics of knowledge transfer and exchange. The issue is not about trying to reproduce ‘natural’ elicitation environments, but about explicitly mapping the connection between expertise, elicitation tools, and modelling goals.

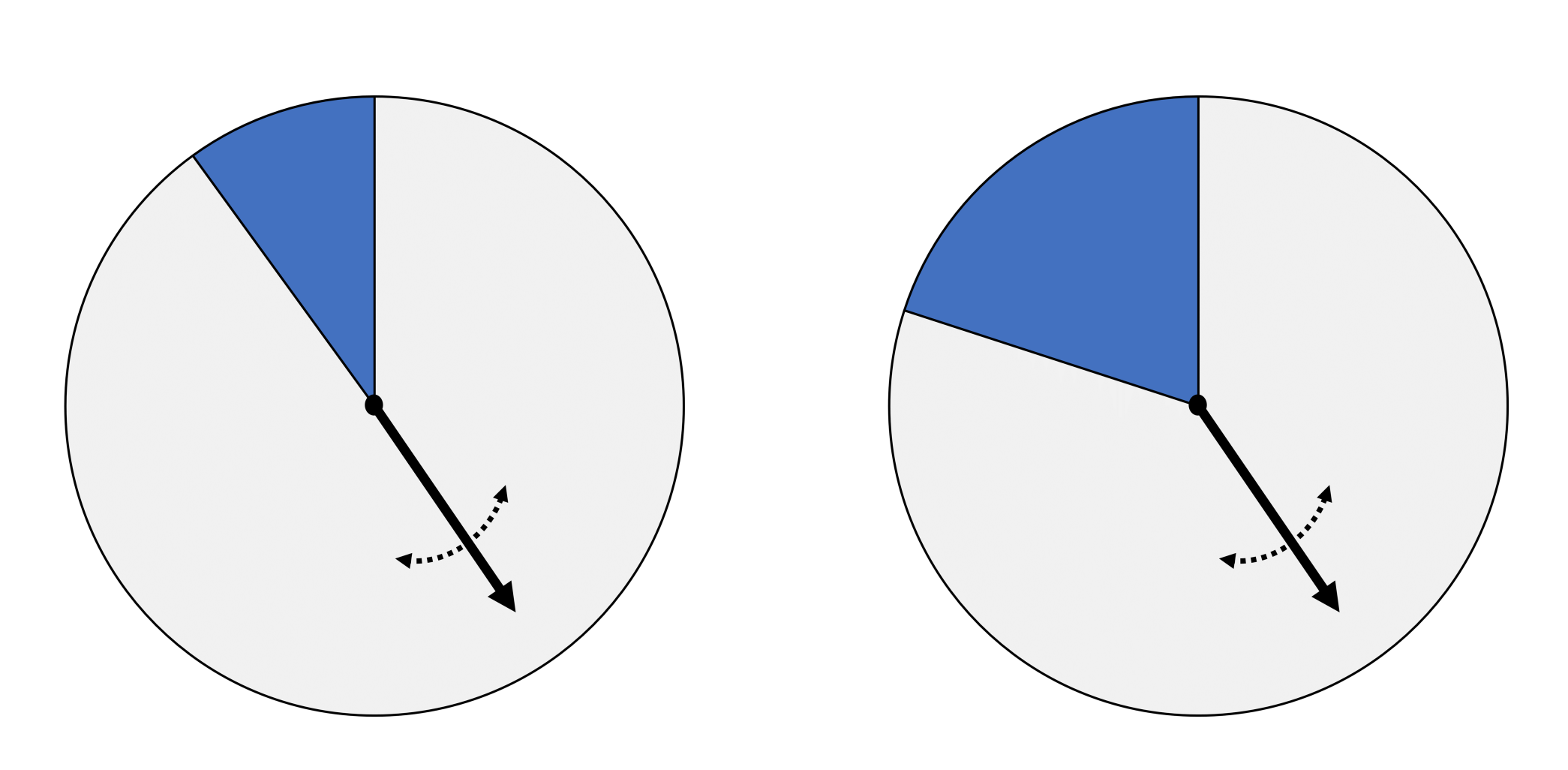

Normative challenges in the retrieval of expert knowledge may be easily overlooked and underestimated, especially when dealing with informal expertise. For instance, it has been shown that, while most people (lay and experts) are familiar with the use of probabilities to describe the likelihood of weather events, even basic statements such as “a 30% chance of rain tomorrow” can easily be interpreted differently (Gigerenzer et al. 2005). In a hypothetical stakeholder engagement scenario, as mentioned, this problem could be avoided by manipulating the stakeholder pool, e.g., filtering out individuals who misinterpret the statement, or by training stakeholders in the role of reference classes in everyday weather prediction. An alternative solution is to bypass this lack of normative expertise by using engagement structures or tools that match stakeholders’ type and level of competence. Dryden & Morgan (2020), for instance, report on a study in which individuals without prior knowledge of climate attribution were able to understand changes in probability distributions and explain these changes to others with the use of spinner boards (Figure 1). The replacement of the mathematical formalism with a visual and interactive representation of probability distributions, the authors argue, facilitated dynamics of knowledge transfer and exchange, despite the participants’ lack of normative expertise in statistics and probability.

Statistics may not feature prominently in stakeholder engagement practices involving agent-based models, as the design and manipulation of these models do not depend on an underlying mathematical formalism. Normative expertise warrants further explicit consideration, nonetheless. Since stakeholder expertise needs to be properly aligned with engagement methods and goals, key design decisions in engagement scenarios should deliberately focus on the management of normative expertise. In agent-based social simulation, this normative expertise is distinctively linked to the use of models as representational objects.

The role of computer simulation

In general, the stakeholder engagement literature argues that computational modelling is a useful methodological tool for activities that require communication, collective decision-making, and conflict resolution in situations with evident social and cognitive asymmetries (Barreteau et al. 2016; D’Aquino et al. 2002; Voinov et al. 2016, 2018). However, discussions about how exactly computer simulation is incorporated into, or produced as a result of, these activities are too high-level: they predominantly centre on general purposes and technical aspects rather than on practices. Models, for example, are believed to encourage collective understanding and the negotiation of meaning. For this reason, terms such as ‘boundary objects’ (Star & Griesemer 1989) have become popular to describe the role of models. Similarly, technical aspects are often highlighted, such as methodological complementarity with other tools, e.g., role-playing games, cognitive mapping, and UML diagrams (Barreteau et al. 2001; Bommel et al. 2014; Campo et al. 2010; Elsawah et al. 2015; Le Page & Bommel 2005), or the technical advantages provided by modelling platforms designed specifically for stakeholder engagement (Le Page et al. 2012; Taillandier et al. 2019).

This high-level approach portrays models as too passive. As artefacts, models accommodate knowledge and expertise in a variety of ways, and may significantly influence engagement practices through their design (i.e., what they are, in terms of both design principles and physical instantiation), function (i.e., what they are made for), and deployment (i.e., how they are used, in terms of both their direct manipulation and the inferences drawn from them) (Alvarado 2022; Knuuttila 2011). A model’s function, for instance, is regularly taken as an issue of modelling goals. The agent-based social simulation literature, following model-based science, has become increasingly pluralistic when it comes to modelling goals, acknowledging a variety of valuable endeavours for which models can be used (Davidsson & Verhagen 2017; Edmonds et al. 2019; Epstein 2008). Interestingly, there seems to be a ‘function gap’ between the goals that have traditionally been considered central to the practice of science, e.g., prediction and explanation, and other, alternative goals, including those typically mentioned in stakeholder engagement, e.g., communication or conflict resolution: discussions about central goals regularly neglect alternative goals, and vice versa.

This ‘function gap’ seems to be associated, on the one hand, with the extent to which the data encoding, processing, and generation features of computational models are emphasised and, on the other hand, with the perceived cognitive costs of employing these models. Strategies for model deployment are not described in a standard manner in the literature. When central goals are discussed, the deployment of models is usually circumscribed within the context of knowledge production: models are used to generate new knowledge. The stakeholder engagement literature, conversely, overemphasises how models, as external representations, reduce individuals’ cognitive load, i.e., their mental effort. As boundary objects, for example, models are believed to mediate or help coordinate between different worldviews (Star & Griesemer 1989; Voinov et al. 2016). Computational models can certainly play a mediating role in engagement practices, allowing individuals to offload some of the cognitive burden onto them[5]. These models, however, can also be embedded in more intricate knowledge transfer and exchange dynamics.

Computational models are distinct physical artefacts with embedded knowledge. First, their design incorporates technical knowledge that makes it possible, from the perspective of hardware and software, to implement and execute the model on a physical computer (Akera 2007; Winsberg 2010). Second, they embed substantive knowledge about the phenomenon of interest. In computational (social) science, this substantive knowledge is highly interdisciplinary and, more importantly, strongly linked to previous models (e.g., through code reuse or model templates; Janssen 2017; Knuuttila & Loettgers 2023) or entire research programmes (e.g., through causal mechanisms or model clusters; Ylikoski & Aydinonat 2014; Anzola 2019).

The manipulation of these models, in turn, instantiates distinct normative knowledge that is not entirely model-dependent. This knowledge can be predominantly technical (e.g., about coding or validation techniques, tools, and best practices; Janssen et al. 2008; Collins et al. 2024; David 2013) or support general methodological beliefs and assumptions (e.g., that agent-based models are instances of modelling, formal theory, experimentation, or a combination thereof; Anzola 2021a, 2021d). Since computational models are a unique type of model that can manipulate and produce novel data, additional substantive and normative knowledge is attached to this feature. In agent-based modelling, the simulation is meant to account for the dynamics of the phenomenon of interest (Gilbert & Troitzsch 2005), providing processual representations of social phenomena that are not possible without computational means, either because the processes involved are epistemically opaque and computationally demanding or because additional epistemic functions, e.g., a micro-to-macro emergent transition, are attached to the simulation (Anzola 2021a).

Because of these robust data encoding, processing, and generation capabilities, the role of computational models in engagement practices can easily go beyond the reduction of cognitive loads. These models can perform cognitive tasks that exceed human cognition by virtue of complex interdisciplinary knowledge (not limited to that of the stakeholders) artefactually embedded in or instantiated by them. While computer simulations can be used minimalistically in some contexts of stakeholder engagement, for example, as visualisation aids, they can also be incorporated into intricate distributed socio-technical systems in which practices and decisions cannot be made sense of without bringing the features and manipulation of these models to the foreground (Chandrasekharan & Nersessian 2015; van Basshuysen et al. 2021; van Egmond & Zeiss 2010). Decisions made during the COVID-19 pandemic, for example, were shaped by the models available to decision-makers. In this sense, these computational models played a ‘performative’ role during the pandemic, i.e., inferences and decisions that affected diffusion dynamics were made following the insights drawn from interacting with models, rather than with the real world (van Basshuysen et al. 2021; Winsberg et al. 2020).

Computational models, then, are complex cognitive artefacts that can be incorporated into a variety of knowledge transfer and exchange dynamics. The extent to which they can be improved with the involvement of stakeholders in the modelling process depends, first, on design elements of the engagement scenario, such as whether stakeholders are asked to intervene pre- or post-implementation or the level of control they are given over the modelling process. It also depends on the interplay between stakeholders’ expertise and the knowledge embedded in these models and instantiated during their manipulation. Not every computational model encodes, processes, and generates synthetic data in the same way, so the demands for substantive and normative expertise from stakeholders can easily vary.

Agent-based social simulation requires from stakeholders substantive and normative expertise that matches its distinctive methodological features, most notably, the explicit representation of agents, interactions, and environment and the unique commitment to micro-to-macro emergent and self-organising dynamics (Anzola 2021a; Gilbert & Troitzsch 2005). Because, as mentioned, these models do not depend on an underlying formalism, they can accommodate previous data and theory in multiple ways. This affects, among other things, the level of abstraction and idealisation in representation and the criteria used to evaluate the fulfilment of modelling goals (Anzola 2021c; Boero & Squazzoni 2005; Edmonds et al. 2019). For example, considerations of expertise and predictive performance cannot be detached from the intricate, sometimes contradictory, way in which agent-based social simulation has historically approached the predictive capabilities of agent-based models (Anzola & García-Díaz 2023).

Computational models and modelling practices

In addition to making explicit the connection between knowledge, expertise, and model features and purposes, further research on modelling practices is equally warranted, for they instantiate wider and more intricate dynamics of knowledge and expertise that partially determine the role and effectiveness of stakeholder engagement in computational modelling. First, these dynamics could provide separate or independent rationales for the use of computational models. For instance, in some cases, simulation might be incorporated into stakeholder engagement practices for reasons more closely connected to the expertise of the research team than to the problem being tackled.

In turn, modelling practices might reflect or follow entrenched knowledge dynamics. The stronger emphasis on performance in the elicitation of probability distributions, for example, has encouraged a significant amount of research on mechanisms, such as calibration and feedback, to counter problems with biases and heuristics (Aspinall & Cooke 2013; Burgman et al. 2011; O’Hagan 2019; Speirs-Bridge et al. 2010). Over time, key findings from this research have been systematised in elicitation protocols that seek to facilitate the identification and mitigation of methodological challenges faced in knowledge elicitation scenarios (Hemming et al. 2018; O’Hagan 2019; Speirs-Bridge et al. 2010).

This interest in systematisation is somewhat absent in research on stakeholder engagement. This might be due, first, to the fact that some typical engagement goals are not fully dependent on expert performance. Similarly, engagement activities are often long and unstructured, so more fine-grained guidelines and recommendations, such as protocols, may be harder to craft and follow. There could also be negative attitudes towards systematisation, e.g., the concern that it might be detrimental to participants’ motivation (Voinov & Bousquet 2010), and divergent beliefs and assumptions about how to better engage with stakeholders, e.g., games have become popular, in part, because of the belief that eliciting actions or behaviours is more effective than eliciting opinions (Barreteau et al. 2001; D’Aquino et al. 2003).

These alternative approaches to knowledge systematisation are likely to influence crucial decisions pertaining to, on the one hand, the structuring of stakeholder engagement practices and, on the other hand, the design and manipulation of computational models. However, we currently lack the means to fully assess their influence in the context of agent-based social simulation, as negative experiences or outcomes are rarely disclosed and the connection between design choices and outputs is not sufficiently documented and systematised in scientific reporting (Hassenforder et al. 2015; Prell et al. 2007; Voinov et al. 2018).

Modelling practices can be equally impacted by the wider expertise and knowledge dynamics in which stakeholders are involved. The retrieval and enactment of expertise in agent-based social simulation scenarios, for example, might be affected by stakeholders’ perceptions of and attitudes towards the use of digital technologies, especially when collective interaction, inferences, and decision-making are highly dependent on computational models. Individual and collective negative perceptions and attitudes can target any of the three dimensions of a model’s artefactual nature: function, design, and deployment.

In terms of function, more research is needed on, among other things, stakeholders’ attitudes towards technology-mediated social interaction and decision-making. Previous research has shown that higher levels of expertise are associated with reduced levels of trust in technology-supported decision-making (Burton et al. 2020; Logg et al. 2019). Distrust has also been associated with knowledge and perceptions of task-dependent factors of success (e.g., whether the decision needs subjective assessment to be effective; Castelo et al. 2019) and other types of subjective judgement, including aesthetic and moral judgements (e.g., whether technology-mediated decision-making is fair; Newman et al. 2020). It is worth exploring whether those findings apply to the context of stakeholder engagement in social simulation, particularly whether trust in the usefulness of computational models is lower in individuals with higher levels of substantive expertise (both formal and informal).

Regarding design, it is unclear whether some expertises prevent stakeholders from retrieving knowledge in ways that are compatible with the representational nature of agent-based models. Research on scientific representation has shown that the question about how models represent is not easy to answer, first, because, depending on the circumstances, several non-equivalent models of a phenomenon can prove useful and, second, because people interact with various non-scientific contexts of representation on a daily basis (Frigg & Nguyen 2020). It is not hard to find methodological aspects of agent-based simulation for which judgements on the adequacy of models can be strongly mediated by expertise. Some stakeholders, either because of their training or their preferred problem-solving heuristics, might not consider agent-based models useful because of their simplicity (a common criticism levelled against the method in the scientific literature). In turn, formal experts, especially those with competence in mathematics, might consider the lack of an underlying mathematical formalism a methodological downside (Lehtinen & Kuorikoski 2007).

Finally, perceptions of and attitudes towards the deployment of computational models could be influenced by how the interaction with stakeholders is moderated. Computer simulation, especially in the post-implementation phase, can limit the cognitive process of option generation (i.e., the identification of alternative courses of action individuals go through in problem-solving scenarios; Johnson & Raab 2003; Smaldino & Richerson 2012), since the engagement dynamic is constrained by the interaction with the computational model. If the deployment of the simulation is not adequately managed, stakeholders could become critical of it during the pre- to post-implementation transition. However, adequately managing the deployment is challenging because it requires, first, accounting for the effects of computational modelling, as a digital technology, on the cognitive processes that support knowledge retrieval (Mosier 2008) and, second, deliberately accommodating various types and levels of expertise. Individuals with lower levels (or some particular types) of expertise are likely, among other things, to need more time, support, or supervision to, on the one hand, grasp the intricacies of computational modelling and, on the other hand, interact with the model to perform some tasks (Zellner et al. 2022).

An explicit focus on the role of models and modelling practices in stakeholder engagement brings to the foreground the retrieval and operationalisation of expert knowledge. The difference between substantive and normative expertise can greatly inform our understanding of the potential contribution of diverse stakeholders to the modelling process. However, further research is needed to characterise the normative expertise associated with the development and manipulation of agent-based models and to clarify the assessment of expertise in scenarios where stakeholder participation is not driven by expectations of quantifiable superior performance. Considering the various modelling features, purposes, and practices that can be relevant when interacting with stakeholders, practitioners of agent-based social simulation might consider asking:

- What substantive and normative expertise is associated with the computational model?

- What normative expertise is required from the stakeholders during the engagement practice?

- Are there criteria to assess whether stakeholders adequately tap into their normative expertise and its effects on the modelling process?

- Are the design, function, and deployment of the model sensitive to wider knowledge and expertise dynamics?

How is Expertise Incorporated into the Modelling Process?

Because of their dissimilar approach to performance and knowledge systematisation, the knowledge elicitation and the stakeholder engagement literatures differ in the value they ascribe to interaction. The latter regularly focuses on unstructured knowledge dynamics, where interaction is frequent, often encouraged, and methodologically essential. In these scenarios, interactions with models might be highly intricate and robust, for, as mentioned before, models could be used by stakeholders as mediating or boundary objects.

In the knowledge elicitation literature, conversely, the usefulness of interaction is predominantly assessed from the perspective of accuracy, in terms, first, of whether having multiple experts will lead to more accurate knowledge and, second, of whether consensus in expert judgement is better achieved through mathematical (using statistical methods) or behavioural (using interaction) knowledge aggregation (Aspinall & Cooke 2013; O’Hagan et al. 2006). Given the inconclusive evidence for the superiority of group elicitation and its additional risks, e.g., group cognitive biases, the knowledge elicitation literature prioritises engagement scenarios in which experts are usually asked to retrieve knowledge individually or in highly controlled interaction dynamics, for instance, by giving them separate access to other experts’ opinions.
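The contrast between mathematical and behavioural aggregation can be made concrete with one simple and widely used form of mathematical aggregation, the linear opinion pool, in which experts’ elicited probabilities for the same set of outcomes are combined as a weighted average. The Python sketch below is illustrative only: the function name `linear_pool`, the three-bin discretisation, and the equal weights are hypothetical assumptions, and it does not reproduce any of the elicitation protocols cited above, some of which derive weights from experts’ performance rather than treating them equally.

```python
from typing import List, Sequence


def linear_pool(distributions: Sequence[Sequence[float]],
                weights: Sequence[float]) -> List[float]:
    """Combine expert probability distributions over the same discrete bins
    by a weighted average (a linear opinion pool)."""
    if len(distributions) != len(weights):
        raise ValueError("one weight per expert is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    norm = [w / total for w in weights]  # normalise weights so they sum to 1
    n_bins = len(distributions[0])
    pooled = [0.0] * n_bins
    for dist, w in zip(distributions, norm):
        if len(dist) != n_bins:
            raise ValueError("all distributions must share the same bins")
        if abs(sum(dist) - 1.0) > 1e-9:
            raise ValueError("each distribution must sum to 1")
        for i, p in enumerate(dist):
            pooled[i] += w * p  # each expert contributes in proportion to their weight
    return pooled


# Hypothetical example: three experts' probabilities that a quantity falls
# into low / medium / high bins, weighted equally.
experts = [
    [0.6, 0.3, 0.1],
    [0.2, 0.5, 0.3],
    [0.1, 0.4, 0.5],
]
print(linear_pool(experts, [1, 1, 1]))
```

Because the pooled result is itself a probability distribution computed without any exchange between experts, this kind of aggregation pairs naturally with the individual, tightly controlled elicitation that the knowledge elicitation literature tends to prefer.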

Group interaction and knowledge retrieval

Since various simulation topics and modelling goals might prioritise epistemic features of the knowledge stakeholders possess, e.g., whether it is true or accurate, further discussion on the connection between expertise, interaction, and knowledge retrieval is warranted. While relevant, the negative effects of interaction have not been extensively explored in either the stakeholder engagement or the knowledge elicitation literature, for both are predominantly focused on individual biases and heuristics. Their reasons differ, however. The stakeholder engagement literature focuses on individuals because it links biases to other non-epistemic aspects, such as goals, interests, and values. By contrast, the knowledge elicitation literature neglects group biases because behavioural aggregation is the least common type of elicitation structure.

As a result, our current understanding of the potential pitfalls of interactive knowledge dynamics is mostly limited to the acknowledgement of broad structural and functional aspects that minimise the epistemic risks to which these dynamics are exposed. For example, engagement practices with more structured and limited interactions, such as those where experts interact only through written feedback, will naturally be less prone to bias. Similarly, bias will be less of an issue in interactions that support modelling goals that do not heavily depend on knowledge adequacy, such as communication or conflict resolution.

More detailed insights into the epistemic risks of interaction in knowledge transfer and exchange dynamics are provided by research on team and collective decision-making. Previous research shows that interaction can exacerbate individual biases and, more interestingly, foster unique group biases. The likelihood of experiencing group biases, in particular, hinges on how aspects such as leadership, time allocation, group cohesion, or task specificity are managed in group activities (Bang & Frith 2017; Jones & Roelofsma 2000; Kerr & Tindale 2004). It also hinges on the complexity of the phenomenon for which solutions are sought. Collaborative work is more prone to biases, first, when the search and evaluation space for problems and solutions cannot be easily anticipated and, second, when the contribution of experience and expertise to problem-solving and decision-making is difficult to assess (Kao & Couzin 2014; Kavadias & Sommer 2009).

Although some of these findings are not entirely relevant for stakeholder engagement in agent-based social simulation, among other reasons, because there are differences between collective and collaborative work and between knowledge elicitation and decision-making, the evidence suggests that the negative effects of interaction, including group biases, may be minimised or countered through design choices regarding the structure of the engagement environment, the selection of participants, and the moderation of the interaction. Looking forward, it is worth studying in more detail how all these aspects materialise in the context of stakeholder engagement practices that rely on the use of agent-based models and how interaction for, with, and through computer simulation contributes to the fulfilment of diverse modelling goals.

The critical discussion of design choices should lead to the identification of contextually appropriate strategies to minimise risk. For example, because of the important role of accuracy and consensus, the knowledge elicitation literature warns about the higher susceptibility of behavioural aggregation to group biases such as ‘groupthink’: a suboptimal type of collective decision-making produced by poorly justified consensus (Bolger 2018; Krueger et al. 2012; Renger et al. 2008). The preferred design choice to tackle this risk in typical knowledge elicitation scenarios has been to minimise expert interaction. This might not be the most suitable solution for simulation-based stakeholder engagement, since agent-based modelling offers design and manipulation advantages that favour interaction. In addition, even if present, groupthink might not be as problematic if neither expert consensus nor judgement accuracy is essential to the fulfilment of the modelling goals.

While eliminating interaction might not be the best design choice, some modelling goals might be more easily achieved in engagement scenarios that are not currently popular. In the collective decision-making literature, for instance, there is a growing interest in the phenomenon known as ‘wisdom of the crowds’ (Surowiecki 2005): the possibility for large groups of untrained individuals to outperform a small group of experts. Agent-based social simulation can greatly benefit from exploring types of stakeholder interaction such as crowd wisdom, not only because of the potential increased accuracy, but also because crowd wisdom can be easily paired with crowdsourcing to ease problems with recruitment, a well-known limitation of current stakeholder engagement practices.
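The premise behind crowd wisdom can be illustrated with a standard statistical idealisation rather than with any empirical result. Assume, hypothetically, that each participant’s estimate equals the true value plus a bias b shared by the whole group plus independent noise with standard deviation s. The expected squared error of the group average over n participants is then b² + s²/n: averaging shrinks the noise term but leaves any shared bias untouched. The Python sketch below computes this quantity and checks it by simulation; the function names and parameter values are hypothetical and chosen only for illustration.

```python
import random


def expected_group_error(n: int, noise_sd: float, shared_bias: float = 0.0) -> float:
    """Expected squared error of the mean of n estimates, assuming each estimate
    is truth + shared_bias + independent Gaussian noise with sd noise_sd."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # Bias-variance decomposition: averaging divides the noise variance by n,
    # but the shared bias passes through unchanged.
    return shared_bias ** 2 + noise_sd ** 2 / n


def simulated_group_error(n: int, noise_sd: float, shared_bias: float = 0.0,
                          truth: float = 0.0, trials: int = 5000, seed: int = 1) -> float:
    """Monte Carlo estimate of the same quantity under the same assumptions."""
    rng = random.Random(seed)  # seeded for reproducibility
    total = 0.0
    for _ in range(trials):
        estimates = [truth + shared_bias + rng.gauss(0.0, noise_sd) for _ in range(n)]
        group_mean = sum(estimates) / n
        total += (group_mean - truth) ** 2
    return total / trials


# Hypothetical parameters: a large, noisy crowd versus a small, more precise panel.
crowd = expected_group_error(n=100, noise_sd=10.0)                          # 0 + 100/100
panel = expected_group_error(n=5, noise_sd=3.0)                             # 0 + 9/5
biased_crowd = expected_group_error(n=100, noise_sd=10.0, shared_bias=2.0)  # 4 + 100/100
print(crowd, panel, biased_crowd)
print(simulated_group_error(n=100, noise_sd=10.0))  # should be close to crowd
```

Under these assumptions, a large but noisy crowd (expected error 1.0) can outperform a small, more precise panel (1.8), yet a modest shared bias (5.0) erases that advantage. Real engagement settings may violate the independence assumption, for example when a common information source or intervention shifts all participants’ judgements in the same direction.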

These alternative interaction strategies come with their own downsides. Crowdsourcing is resource-efficient, but also prone to issues with stakeholder motivation and involvement. It is also not entirely compatible with, or may limit the effectiveness of, other common goals in engagement practices, such as training or decision-making (Voinov et al. 2016, 2018). Research on the comparative effectiveness of crowd wisdom, in turn, has produced conflicting results because: (i) expertise is difficult to define and quantify in some contexts (Butler et al. 2021), (ii) some phenomena might prove too complex for both laypeople and experts to confidently support their decisions (Kao & Couzin 2014), and (iii) human (deliberate) action and large-scale intervention might affect the effectiveness of both expert and lay judgement, e.g., early predictions about COVID-19 were affected by a variety of interventions, including individual protective behaviour (e.g., handwashing and mask wearing), vaccination, and policy measures (e.g., lockdowns) (Talic et al. 2021).

The cost-effectiveness of crowd wisdom, thus, hinges on an intricate relationship between the elicitation environment and the phenomenon of interest that is not fully determined by the way the stakeholder interaction is set up. However, even if large groups of laypeople cannot always outperform experts, the basic premise behind this stakeholder engagement alternative makes a comprehensive review of current interaction strategies welcome. Interaction structures will likely be more effective, less resource-intensive, and less prone to bias if factors such as the type and level of expertise are deliberately taken into account during the design phase.

A third sense of expertise: its recognition and attribution

The formal-informal and substantive-normative dichotomies, to an extent, work under the assumption that expertise requires mastery and superior performance. However, given the advantages of interaction structures such as crowd wisdom, it is worth asking whether engagement practices involving individuals not recognised as experts should be managed within the framework of expertise. There is no univocal answer to this question, partly because there are conflicting accounts of whether knowledge elicited from these participants counts as expert knowledge. Some authors use the term ‘expert’ in a noncommittal way, simply as a label for any individual from whom knowledge is elicited (O’Hagan et al. 2006). An alternative option would be, following Garthwaite et al. (2005), to say that these individuals contribute through their lack of expertise. Under this view, stakeholder engagement helps fulfil modelling goals through the interaction with, and between, experts and non-experts.

A third option, following Collins & Evans (2020), would be to say that these individuals contribute with different types of expertise. This option has not been discussed in the knowledge elicitation and the stakeholder engagement literatures, for it is not easy to reconcile with the traditional assumptions of expert advice/decision systems i.e., experts are sought because they possess expertise that is rare and should lead to superior performance. Yet, it might provide novel insights into how experts’ interactions with computational models, with each other, and with the research team affect the effectiveness of engagement practices and modelling processes.

Collins & Evans (2020) claim that expertise can be classified along three dimensions:

- individual accomplishment: related to individual mastery or superior competence.

- esotericity: related to the ease of access to the social group that controls an expertise (e.g., unlike professional knowledge, which requires controlled specialised training, language proficiency can be gained by everyday interaction in multiple ‘open’ settings, as it is a basic foundation of social life).

- exposure to tacit knowledge: related to how an individual accesses an expertise (e.g., the expertise in science developed by reading about it differs from that developed by practising it).

While the first dimension can be somewhat mapped onto the notion of substantive expertise (both formal and informal), especially in terms of mastery and superior performance, the second and third dimensions break down additional components of expertise that are not entirely accounted for by the dichotomies previously discussed.

Fully describing this sociological account of expertise goes beyond the scope of this text. However, for the present discussion, two implications are worth mentioning. First, experts with similar levels of substantive expertise might have dissimilar types of esoteric expertise and levels of exposure to the tacit knowledge associated with that expertise, which means they are unlikely to command the same normative expertise. This distinction cannot be reduced to differences within informal expertise. In the context of agent-based social simulation, it brings to the foreground issues of knowledge retrieval and evaluation. Stakeholder engagement practices can retrieve expert knowledge through observations, decisions, behaviours, actions, or opinions, in multiple contexts of interaction. Currently, it is not clear how practitioners consider these options to be connected with each other and, more generally, with participants’ knowledge and expertise. Engagement scenarios might be designed differently, for example, depending on whether it is believed that these options are similarly related to (the same kind of) knowledge or that they all can be used interchangeably, irrespective of the esotericity of the expertise, the level of exposure to tacit knowledge, and the individual accomplishment of the participants.

Similarly, it is not clear whether practitioners value these knowledge retrieval options equally and select among them prioritising the needs and expectations of particular modelling processes. Judgements on the adequacy of these options are likely linked to the technical and methodological expertise of the research team and the epistemic status of these options in the standard social science literature. For example, experiments and surveys, regularly used in mainstream social science for the elicitation of knowledge through actions/decisions and opinions, respectively, are supported by different methodological assumptions. Considerations about ceteris paribus conditions feature more prominently in the design and assessment of experiments; considerations about self-reporting, in surveys. These differences can have major effects on stakeholder engagement practices, for, as mentioned before, expertise can be more easily retrieved in scenarios that adequately capture or reproduce ecologically important heuristics and strategies.

The second implication of this account is that individuals without formally or informally recognised mastery over a substantive expertise can contribute with multiple ‘ubiquitous’ expertises: those at the lower end of the esoteric dimension. This acknowledgement simply extends a key assumption of empirical research to stakeholder engagement practices: individuals have expert knowledge of their own experience, which makes their personal accounts instrumental in achieving both central and alternative modelling goals (and perhaps bridging the gap between the two). It raises an interesting question, though, about how stakeholder engagement and empirical research differ from the perspective of knowledge and expertise, for instance, in terms of participant recruitment or sample-to-population inferences⁶.

Attributions of expertise

In stakeholder engagement practices, interaction makes the enactment of expertise not entirely dependent on individual knowledge and skills. Previous research shows that interaction dynamics and performance in collaborative work and collective decision-making are impacted by expertise attributions i.e., the ascription of expert status to an individual or group (Barton & Bunderson 2014; Thomas-Hunt et al. 2003). Expertise attributions initially moderate interaction through general social expectations. Historically, the social expectations most commonly associated with expertise pertain to the superiority of expert performance and the legitimised sources of expertise accreditation (certification, experience, publications, citations, etc. – those typical of formal expertise) (Burgman et al. 2011; Carr 2010; Mieg & Evetts 2018). These two traditional social expectations of expertise, as discussed above, have recently been severely questioned.

The weakening of traditional social expectations of expertise raises some design challenges, since research teams must make explicit a variety of assumptions that support expertise attributions in engagement scenarios where stakeholders with different expertise interact. Even though typical traits of formal expertise do not necessarily correlate with performance, they have the advantage of being relatively easy to identify and quantify. In engagement scenarios in which stakeholders with various types of expertise interact, attributions will be made based on various combinations of internal and external judgements i.e., directly related to the experts and their expertise or other discrimination criteria, respectively (Collins & Evans 2020).

Awareness of external judgements is particularly important, for they could diverge significantly from traditional social expectations and remain tacit during the engagement practice. They could also bring to the foreground non-epistemic issues, such as power, ideology, and values. Making expertise attributions explicit could help account, among other things, for the contentious view that some stakeholders have of formal expertise and its structure of accreditation. More importantly, considering the contemporary erosion of the authoritative position of scientific expertise (Collins & Evans 2020; Klein et al. 2020), attributions of expertise to the research team could affect stakeholders’ perceptions, attitudes, and participation. For example, it is not clear if, when simulations are ‘socially legitimised’ (Barreteau et al. 2016), the research team’s expertise is legitimised, as well.

Since participants’ input is usually funnelled into the modelling process by the research team (especially when additional expert knowledge, e.g., programming, is required), stakeholders might use internal and external judgements to attribute expertise, even for relatively simple methodological decisions, such as time allocation for input and feedback. These attributions of expertise will likely be linked to the research team’s ability not only to technically execute the methodological design, but also to manage participants as both experts (i.e., their knowledge) and individuals (i.e., with distinctive values, interests, beliefs, needs, etc.). Interestingly, the ability to adequately manage stakeholders seems to depend on a skill set provided by experience, rather than training and certification (Elsawah et al. 2023). Researchers with previous experience in stakeholder engagement practices, therefore, possess unique tacit informal expertise that is worth making explicit.

The relative novelty of stakeholder engagement in agent-based social simulation has encouraged most discussions on expertise to look outwards: to the stakeholders. While knowing who these stakeholders are and why and how they should be involved in the modelling process is warranted, the research team’s expertise and its expertise attributions should also be made explicit, for they are likely to affect both the engagement design and its outputs. Information on expertise attributions made by members of the research team is, currently, mostly tacit. It is not clear what internal and external judgements are used to assess the stakeholders’ expertise and how expertise attributions influence design choices. For instance, it could be questioned whether the decision to give participants control over the engagement process, a design choice that has proven highly influential in the output and success of stakeholder engagement practices (Barreteau et al. 2016; D’Aquino et al. 2003; Renger et al. 2008; Voinov et al. 2016), is somehow connected to the recognition of ubiquitous expertise as expertise proper and the value it is given compared to esoteric expertise.

Internal dynamics of expertise and expertise attributions within the research team are equally unknown. Given the high level of interdisciplinarity in agent-based social simulation, research teams are likely to have members with non-overlapping expertise, which might foster attributions that are not fully reliant on internal judgements (Anzola et al. 2022). Making these attributions explicit can help improve the team’s overall performance. For instance, to work effectively, the research team must develop a transactive memory system to encode, store, and retrieve relevant knowledge and heuristics associated with the multiple expertises in the team (Akkerman et al. 2007). In turn, individuals might defer to, or give more weight to, the input of those who, based on previous record and performance, are believed to be the highest-performing members (Bonner et al. 2002).

Thus, incorporating findings from broader research on the dynamics of expertise development, retrieval, enactment, and attribution could help practitioners critically expand the range and scope of design considerations in stakeholder engagement. Previous research on expertise and expert performance points to, first, the possibility of meeting a variety of modelling goals with engagement structures that are currently uncommon and, second, the need to make expertise attributions and expectations explicit, especially when dealing with stakeholders who do not fit the traditional label of ‘expert.’ In the future, when seeking to better align the engagement environment with the modelling goals, particularly for topics and purposes that depend more strongly on knowledge adequacy, practitioners are encouraged to ask:

- Are the potential epistemic effects of the interaction for, with, and through the computational model assessed during the design phase?

- Can variations in individual accomplishment, esotericity, and exposure to tacit knowledge influence expertise recognition and attribution?

- Do expertise attributions by the research team influence the level of decision-making and control granted to stakeholders during the engagement process?

- Is the expertise of the research team contested or legitimised during the engagement dynamic?

- Are expertise dynamics within the research team explicit and adequately reflected in the engagement design?

Conclusion

Involving stakeholders is increasingly recognised as a means to improve the representational adequacy and usefulness of agent-based modelling. However, the current conceptualisation of stakeholder engagement in agent-based social simulation can only partially accommodate the impact of different expertises on knowledge transfer and exchange dynamics. Relying on an expertise-centred approach, this text has therefore discussed relevant knowledge gaps that can motivate further research and subsequent efforts to make our knowledge of stakeholder engagement explicit.

The article addressed three questions regarding the selection of experts, the retrieval of expert knowledge, and the influence of interaction on the recognition and enactment of expertise. It was argued, initially, that further clarification of the benefits provided by formal and informal expertise is needed because, first, these expertises are not supported by equally recognisable knowledge and, second, the expertise landscape in agent-based social simulation is likely not uniform. Later, it was claimed that the function, design, and deployment of computational models in stakeholder engagement should be more robustly characterised, for the development and manipulation of computational models are influenced by the substantive and normative expertise embedded in them and instantiated in modelling practices. Finally, it was suggested that, given the methodological features of agent-based models, additional research is warranted on the effects of interaction and expertise attributions, as stakeholder engagement practices might be strongly mediated by contextual combinations of internal and external expertise attributions. Some questions seeking to encourage further reflection and inform the design of stakeholder engagement scenarios were provided for each dimension of expertise. These questions are listed together in Table 1.

| Dimension of expertise | Questions |
|---|---|
| Expertise source and content | What expertises, formal and informal, are available, and how do they differ (especially within informal expertise)? |
| | How can informal expertise be assessed? |
| | Under what conditions can stakeholders’ expertise improve the simulation process? |
| | Do stakeholders command more than one expertise? |
| | If so, what expertise are they expected to contribute? |
| Expertise retrieval and enactment | What substantive and normative expertise is associated with the computational model? |
| | What normative expertise is required from the stakeholders during the engagement practice? |
| | Are there criteria to assess whether stakeholders adequately tap into their normative expertise, and to assess its effects on the modelling process? |
| | Are the design, function, and deployment of the model sensitive to wider knowledge and expertise dynamics? |
| Expertise recognition and attribution | Are the potential epistemic effects of the interaction for, with, and through the computational model assessed during the design phase? |
| | Can variations in individual accomplishment, esotericity, and exposure to tacit knowledge influence expertise recognition and attribution? |
| | Do expertise attributions by the research team influence the level of decision-making and control granted to stakeholders during the engagement process? |
| | Is the expertise of the research team contested or legitimised during the engagement dynamic? |
| | Are expertise dynamics within the research team explicit and adequately reflected in the engagement design? |

Because knowledge transfer and exchange dynamics are not the exclusive competence of the literature on stakeholder engagement, the next step is to take stock of the available knowledge on expertise. This process entails: (i) identifying the tacit knowledge developed from previous practices of stakeholder engagement in agent-based social simulation that is worth making explicit, (ii) establishing links with broader research exploring issues of knowledge elicitation, transfer, and exchange, and (iii) bringing to the foreground neglected topics that warrant further research, such as the role of perceptions of and attitudes towards technology-assisted interaction and decision-making.

Following this first stage, the articulation of a body of knowledge that informs more diverse approaches to stakeholder engagement additionally requires establishing alternative goals and incentives for research (e.g., researching how stakeholder engagement is carried out) and reporting (e.g., publicly documenting the process, including possible failures, and not just the results of stakeholder engagement practices). Finally, knowledge synthesis methods and processes should be more widely agreed upon: stakeholder engagement is a highly complex and interdisciplinary topic, with an already vast literature. New knowledge syntheses that prioritise engagement practices in which agent-based modelling plays a central role can help new entrants and interested practitioners familiarise themselves with this modelling approach more effectively.

Synthesising previous knowledge will likely lead to the identification of additional knowledge gaps in our overall understanding of expertise. Given the increasing interest in decision-making, it might be worth studying, among others, the fluid nature of expertise in engagement scenarios in which stakeholders do not transversely rely on the same expertise. In turn, we currently lack understanding of how, when taking part in stakeholder engagement practices, members of the research team navigate several roles, e.g., as researcher, advisor, or decision-maker, that require multiple expertises with: (i) separate processes of accreditation and certification, (ii) alternative retrieval and enactment heuristics, and (iii) dissimilar attributions of expertise.

Similarly, since non-epistemic issues, e.g., power, ideology, interest, or values, are unavoidable in stakeholder engagement practices, their connection with knowledge dynamics through, among others, expertise attribution warrants further analysis. When several non-overlapping expertises converge in social simulation, individuals have asymmetrical authority over those expertises. Collaboration, then, requires trust in the knowledge and skills of the other experts involved (Anzola et al. 2022). Because individuals do not command the same expertise, this trust is supported by intertwined epistemic and non-epistemic components that will likely moderate the effectiveness of the interaction and the output of the engagement practice.

Notes

- There are noticeable differences in the extent to which this belief, and the overall practice of stakeholder engagement, have been adopted in agent-based computational social science. Most reported instances of stakeholder engagement come from research areas such as environmental resource management (Badham et al. 2019; Barreteau et al. 2016; Lippe et al. 2019; Schlüter et al. 2021; Schulze et al. 2017; Voinov et al. 2018), in which practitioners often rely on long-standing traditions of participatory modelling that extend well beyond research employing computational models (Moallemi et al. 2021; van Bruggen et al. 2019; Voinov et al. 2018).↩︎

- In turn, the discussion is centred exclusively on agent-based modelling, even though the method shares some similarities with other types of social simulation used in stakeholder engagement, e.g., system dynamics or discrete-event simulation. From the perspective of knowledge and expertise, the text shows, each simulation method may warrant a separate analysis.↩︎

- The label ‘formal’ is used in the text in connection with the source and enactment of expertise. Formal expertise has traditionally been certified, accredited, and exerted in practices closely connected to the formal education system. This characterisation of expertise as formal is independent of the relatively common understanding of computational models as formal systems or as instantiations of formal knowledge. In the latter, the notion of formality is linked, alternatively, to the language system.↩︎

- Issues of values, power, and worldviews are often mentioned as justification (Bohensky & Maru 2011; Campo et al. 2010; D’Aquino et al. 2003; Fischer 2000; Renger et al. 2008; Voinov et al. 2016). Given their non-epistemic nature, these issues are outside the scope of this text. They are, however, closely intertwined with epistemic considerations in the design of stakeholder engagement practices.↩︎

- A simulation reduces stakeholders’ mental work, among others, by giving them the option to negotiate their worldviews over an artefact, rather than their entire reality.↩︎

- In qualitative and quantitative research, sampling techniques are usually chosen following considerations of relevance and representativeness. Participant selection in stakeholder engagement is based primarily on the former because of the higher status given to expert knowledge. It could be questioned, for example, whether representativeness should be more explicitly accounted for when stakeholders’ expertise is primarily linked to knowledge and command of their own experience. However, this question is not easy to answer, among others, because it is partly mediated by modelling goals. Representativeness might not be as important for modelling goals that do not strongly depend on performance.↩︎

References

AKERA, A. (2007). Calculating a Natural World. Cambridge, MA: MIT Press.

AKKERMAN, S., Van den Bossche, P., Admiraal, W., Gijselaers, W., Segers, M., Simons, R.-J., & Kirschner, P. (2007). Reconsidering group cognition: From conceptual confusion to a boundary area between cognitive and socio-cultural perspectives? Educational Research Review, 2(1), 39–63. [doi:10.1016/j.edurev.2007.02.001]

ALVARADO, R. (2022). Computer simulations as scientific instruments. Foundations of Science, 27(3), 1183–1205. [doi:10.1007/s10699-021-09812-2]

ANZOLA, D. (2019). Knowledge transfer in agent-based computational social science. Studies in History and Philosophy of Science Part A, 77, 29–38. [doi:10.1016/j.shpsa.2018.05.001]

ANZOLA, D. (2021a). Capturing the representational and the experimental in the modelling of artificial societies. European Journal for Philosophy of Science, 11(3), 63. [doi:10.1007/s13194-021-00382-5]

ANZOLA, D. (2021b). Disagreement in discipline-building processes. Synthese, 198, 6201–6224. [doi:10.1007/s11229-019-02438-9]

ANZOLA, D. (2021c). Social epistemology and validation in agent-based social simulation. Philosophy & Technology, 34, 1333–1361. [doi:10.1007/s13347-021-00461-8]

ANZOLA, D. (2021d). The theory-practice gap in the evaluation of agent-based social simulations. Science in Context, 34(3), 393–410. [doi:10.1017/s0269889722000242]

ANZOLA, D., Barbrook-Johnson, P., & Gilbert, N. (2022). The ethics of agent-based social simulation. Journal of Artificial Societies and Social Simulation, 25(4), 1. [doi:10.18564/jasss.4907]

ANZOLA, D., & García-Díaz, C. (2023). What kind of prediction? Evaluating different facets of prediction in agent-based social simulation. International Journal of Social Research Methodology, 26(2), 171–191. [doi:10.1080/13645579.2022.2137919]

ASPINALL, W., & Cooke, R. (2013). Quantifying scientific uncertainty from expert judgement elicitation. In J. Rougier, S. Sparks, & L. Hill (Eds.), Risk and Uncertainty Assessment for Natural Hazards. Cambridge: Cambridge University Press. [doi:10.1017/cbo9781139047562.005]

BADHAM, J., Elsawah, S., Guillaume, J., Hamilton, S., Hunt, R., Jakeman, A., Pierce, S., Snow, V., Babbar-Sebens, M., Fu, B., Gober, P., Hill, M., Iwanaga, T., Loucks, D., Merritt, W., Peckham, S., Richmond, A., Zare, F., Ames, D., & Bammer, G. (2019). Effective modeling for integrated water resource management: A guide to contextual practices by phases and steps and future opportunities. Environmental Modelling & Software, 116, 40–56. [doi:10.1016/j.envsoft.2019.02.013]

BANG, D., & Frith, C. (2017). Making better decisions in groups. Royal Society Open Science, 4(8), 170193. [doi:10.1098/rsos.170193]

BARRETEAU, O., Bots, P., Daniell, K., Etienne, M., Perez, P., Barnaud, C., Bazile, D., Becu, N., Castella, J.-C., Daré, W., & Trebuil, G. (2016). Participatory approaches. In B. Edmonds & R. Meyer (Eds.), Simulating Social Complexity. Berlin Heidelberg: Springer. [doi:10.1007/978-3-319-66948-9_12]