Christian Hahn, Bettina Fley, Michael Florian, Daniela Spresny and Klaus Fischer (2007)

Social Reputation: a Mechanism for Flexible Self-Regulation of Multiagent Systems

Journal of Artificial Societies and Social Simulation

vol. 10, no. 1

<https://www.jasss.org/10/1/2.html>


Received: 20-Jan-2006 Accepted: 20-Nov-2006 Published: 31-Jan-2007

Abstract

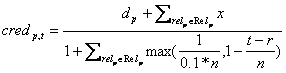

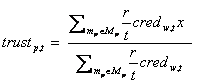

The credibility $cred_{p,t}$ of a provider p at time t is determined from evidence in which p was observed spreading true or false information about some target q. This evidence is recorded in the set $Rel_p$ of tuples $rel_p(x, r)$, each consisting of a time stamp r indicating the round in which the evidence was collected and a value x expressing whether the witness spread true or false information. The value x is computed once the agent has gained direct experience with the target q: if q behaved as p predicted, x is set to 1; otherwise it is set to 0. If an agent has no evidence about the witness p, it uses p's default value $d_p$, which depends on the witness's incentive to diffuse false information intentionally (journalist agents: 0.8; organisational colleagues: 0.7; competing providers: 0.6). Since organisational colleagues would not benefit from spreading false information, their default value is higher than that of any competing provider, who would benefit indirectly from a decreased trust value of the target. We have assigned the highest default credibility to journalists because they have no incentive at all to spread false information (they are not involved in the auction itself). The witness's reliability is determined according to Equation 1. If the credibility value of p lies below 0.3, p is considered a liar; if it lies above 0.55, p is considered to answer questions truthfully. Between these two thresholds, p's attitude towards lying is set to unknown.
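
As an illustration of the rules in the preceding paragraph, the following Python sketch encodes the default credibility values, the evidence value x, and the two classification thresholds. The witness-type labels and function names are hypothetical, and treating values of exactly 0.3 or 0.55 as "unknown" is our assumption, since the text only says "below" and "above".

```python
# Sketch only: witness-type labels and function names are hypothetical.

# Default credibility d_p by witness type, as given in the text.
DEFAULT_CREDIBILITY = {
    "journalist": 0.8,  # not involved in the auction itself
    "colleague": 0.7,   # member of the same organisation
    "provider": 0.6,    # competing provider
}

LIAR_THRESHOLD = 0.3       # credibility below this: liar
TRUTHFUL_THRESHOLD = 0.55  # credibility above this: truthful


def evidence_value(predicted: str, observed: str) -> int:
    """Value x of a tuple rel_p(x, r): 1 if q behaved as p predicted, else 0."""
    return 1 if predicted == observed else 0


def classify_witness(credibility: float) -> str:
    """Map a credibility value to p's attitude towards lying."""
    if credibility < LIAR_THRESHOLD:
        return "liar"
    if credibility > TRUTHFUL_THRESHOLD:
        return "truthful"
    # Values in [0.3, 0.55], boundaries included (an assumption).
    return "unknown"


# With no evidence about a witness, start from its default value.
print(classify_witness(DEFAULT_CREDIBILITY["provider"]))  # truthful
```

Under these thresholds, all three default values (0.6, 0.7, 0.8) initially classify a witness as truthful; only accumulated evidence can move it into the "unknown" or "liar" range.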

[Equation 1]

In Equation 1, n denotes the number of simulated rounds (n is set to 100 for all configurations presented in Section 5). The credibility values are weighted according to the time stamp r at which they were collected. Consequently, evidence collected long ago affects the overall credibility value less than evidence collected at t-1. This accounts for the fact that providers may change their attitude towards lying during the simulation.
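
Because Equation 1 itself is not reproduced here, the sketch below only illustrates the idea described in this paragraph, under an explicit assumption: each evidence value x is weighted linearly by r/n, and the weighted mean is taken as the credibility. This linear weighting is our choice, not necessarily the authors' formula, and the function name is hypothetical.

```python
from typing import Iterable, Tuple


def time_weighted_credibility(
    evidence: Iterable[Tuple[int, int]],
    n: int = 100,
    default: float = 0.6,
) -> float:
    """Weighted mean of evidence values x, each weighted by r / n.

    `evidence` holds (x, r) pairs: x is 1 (true information) or 0 (false),
    r is the round in which the evidence was collected. The linear weight
    r / n is an assumption standing in for Equation 1.
    """
    items = list(evidence)
    weights = [r / n for _, r in items]
    total = sum(weights)
    if total == 0:
        # No usable evidence: fall back to the default value d_p.
        return default
    return sum(w * x for w, (x, _) in zip(weights, items)) / total


# A true report in round 10 is outweighed by a lie in round 90.
print(round(time_weighted_credibility([(1, 10), (0, 90)]), 2))  # 0.1
```

Because the lie is recent, the resulting value of 0.1 falls below the 0.3 liar threshold, which matches the intent that recent evidence should dominate when a provider changes its attitude towards lying.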

[Equation 2]

Finally, the most reputable providers are offered a task. If an agent classifies a provider as a cheater, it stops assigning tasks to that provider altogether. We hypothesise that the composition of attributes described in Section 4.5 and the computation model discussed in this section prevent the negative effects of malicious behaviour and improve cooperation and coordination between agents by reducing the risk of selecting unfeasible strategies, including inappropriate organisation partners, caused by local knowledge and inappropriate beliefs.
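
The selection rule in the first two sentences above can be sketched as follows. The `ProviderView` record, its fields, and the parameter k (how many of the most reputable providers receive an offer) are hypothetical; how the reputation value itself is computed is not modelled here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ProviderView:
    """A customer's (hypothetical) view of one provider."""
    name: str
    reputation: float  # aggregated reputation value, higher is better
    is_cheater: bool   # whether this customer classifies the provider as a cheater


def select_providers(candidates: List[ProviderView], k: int = 1) -> List[ProviderView]:
    """Return the k most reputable providers, skipping those classified as cheaters."""
    eligible = [p for p in candidates if not p.is_cheater]
    return sorted(eligible, key=lambda p: p.reputation, reverse=True)[:k]


offers = select_providers([
    ProviderView("A", 0.9, True),  # high reputation, but classified as a cheater
    ProviderView("B", 0.7, False),
    ProviderView("C", 0.8, False),
])
print([p.name for p in offers])  # ['C']
```

Excluding cheaters before ranking, rather than merely lowering their score, mirrors the text's statement that an agent stops assigning tasks to a cheater altogether.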

Figure 1. Activity diagram describing the agent's internal process and the message exchange regarding reputation

Table 1: The configurations and their settings

| Performance criterion | Configuration | Round | Number of providers, customers | Cheaters (%) | Message limit (ML) | Self-organisation | Liars (%) | Figures |
|---|---|---|---|---|---|---|---|---|
| Reliability: Fraud handling | Configuration 1 | 0 | 90, 30 | 10 | 1 | No | 0 | 1, 2 |
| Reliability: Fraud handling | Configuration 2 | 0 | 90, 30 | 10 | 1/5/8/16 | No | 0 | 3, 4 |
| Reliability: Scaling of cheaters | Configuration 3 | 0 | 90, 30 | 10/20/30 | 5 | No | 0 | 5, 6, 7 |
| Reliability: Impact of lies | Configuration 4 | 0 | 90, 30 | 20 | 5 | No | 20 | 8, 9 |
| Scalability | Configuration 5 | 0, 35, 70 | (30, 30, 30), 30 | 20/20/20 | 5 | Yes | 0 | 10 |
| Stability | Configuration 6 | 0 | 90, 30 | 30 | 5 | No | 0 | 11 |
| Flexibility | Configuration 7 | 0 | 90, 30 | 20 | 5 | Yes | 0 | 12, 13 |

Figure 2. Reduction of fraud (Configuration 1)

Although none and image reduce fraud to a similar extent, image achieves much better results than none in diffusing information about cheaters within the market, especially among providers. For image, the number of providers that know less than 25% of the cheaters decreases much more and much faster (from 90 initially to 22 in round 100), in favour of the number of providers that know between 50% and 75% of the cheaters (from 0 to 56) and those that know more than 75% (from 0 to 4). For none, 61 providers still know only a few cheaters (less than 25%) in round 100. Using prestige, knowledge about deviant agents spreads even more effectively than with image: eventually, 74 providers know more than 75% of the cheaters. Figure 3 shows the spread of knowledge for prestige; these curves are rather typical of all configurations.
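
The knowledge distribution described in this paragraph groups providers by the share of cheaters they know about. A minimal sketch of that binning, assuming each provider's knowledge is a set of agent identifiers; the names are hypothetical, and the handling of shares of exactly 25%, 50% and 75% is our assumption, since the text does not specify it.

```python
from collections import Counter
from typing import Dict, Set

BINS = ("<25%", "25-50%", "50-75%", ">75%")


def knowledge_bin(known: Set[str], cheaters: Set[str]) -> str:
    """Bin one provider by the share of cheaters it knows about."""
    if not cheaters:
        raise ValueError("no cheaters in the market")
    share = len(known & cheaters) / len(cheaters)
    if share < 0.25:
        return "<25%"
    if share < 0.5:
        return "25-50%"
    if share <= 0.75:
        return "50-75%"
    return ">75%"


def knowledge_distribution(knowledge: Dict[str, Set[str]], cheaters: Set[str]) -> Dict[str, int]:
    """Count providers per bin; `knowledge` maps provider id -> known cheaters."""
    counts = Counter(knowledge_bin(k, cheaters) for k in knowledge.values())
    return {b: counts.get(b, 0) for b in BINS}


cheaters = {"c1", "c2", "c3", "c4"}
knowledge = {"p1": set(), "p2": {"c1"}, "p3": {"c1", "c2"}, "p4": cheaters}
print(knowledge_distribution(knowledge, cheaters))
# {'<25%': 1, '25-50%': 1, '50-75%': 1, '>75%': 1}
```

Applied to all 90 providers once per round, such counts yield the kind of curves reported for image, none and prestige.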

Figure 3. Number of providers knowing certain percentages of cheaters (Configuration 1, Prestige)

Figure 4. Variation of message limit (Configuration 2)

Figure 5. Requested reputation reports (Configuration 2)

Figure 6. Scaling of cheater rate (none) (Configuration 3)

Figure 7. Scaling of cheater rate (image) (Configuration 3)

Image, and especially prestige, become even more effective relative to none when the initial cheater rate is scaled up. Of particular importance is the fact that, for both reputation types, deviant behaviour is banished within the same period, independent of the initial fraud percentage. Figure 8 shows that at an initial cheater rate of 30%, the final difference in the number of tasks completed without fraud amounts to 3.5 tasks between prestige and none (and 2 tasks between prestige and image). At an initial rate of 20%, the difference between prestige and none is around 2.3 tasks; at a rate of 10%, it is only 1.3 tasks.

Figure 8. Improvement of task completion (Configuration 3, 30% cheaters)

Figure 9. Ratio of lies (Configuration 4)

Figure 10. Impact of lies on the fraud cases (Configuration 4)

Figure 11. Scaling of agent population (Configuration 5)

Figure 12. Decrease of cheaters' profits (Configuration 6)

Figure 13. Number of organisations

Figure 14, in combination with Figure 13, highlights the directly proportional relationship between the number of organisations and the number of fraud cases. Figure 14 shows, as trend lines, the average number of fraud cases in Configuration 3 (20% cheaters) minus the number of fraud cases in the configuration that allows self-organisation. For both prestige and image, this difference remains positive; that is, reputation combined with self-organisation reduces fraud further than our standard configuration does. These effects are based on the trustworthiness of organisations and their members. Consequently, when self-organisation is allowed, none is initially more effective (until round 45). In comparison to image, none also reduces fraud more effectively until round 37. Afterwards, the more comprehensive investment in information pays off for image.
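
The quantity plotted in Figure 14 is a per-round difference between two fraud-case series, summarised by a trend line. The sketch below assumes per-round average fraud counts as input and an ordinary least-squares linear trend; the text does not say which kind of trend line was used, and the function names are hypothetical.

```python
from typing import List, Sequence, Tuple


def fraud_surplus(without_self_org: Sequence[float], with_self_org: Sequence[float]) -> List[float]:
    """Per-round fraud cases without self-organisation minus those with it."""
    if len(without_self_org) != len(with_self_org):
        raise ValueError("both series must cover the same rounds")
    return [a - b for a, b in zip(without_self_org, with_self_org)]


def linear_trend(values: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least-squares line (slope, intercept) over rounds 0..len-1."""
    m = len(values)
    if m < 2:
        raise ValueError("need at least two rounds")
    mean_x = (m - 1) / 2
    mean_y = sum(values) / m
    sxx = sum((x - mean_x) ** 2 for x in range(m))
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


surplus = fraud_surplus([5, 4, 3, 2], [3, 3, 2, 2])
print(surplus)  # [2, 1, 1, 0]
slope, intercept = linear_trend(surplus)
print(round(slope, 2), round(intercept, 2))  # -0.6 1.9
```

A positive surplus, as reported for prestige and image, means fewer fraud cases when self-organisation is allowed.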

Figure 14. Surplus of fraud cases without self-organisation

Table 2: Selected results of the simulations

| Perturbation | Configuration | Main results |
|---|---|---|
| Reliability: Fraud handling | Configuration 1 | Spreading trustworthiness values helps to decrease the rate of fraud; prestige decreases fraud cases more rapidly and more effectively |
| Reliability: Fraud handling | Configuration 2 | Image: the higher the message limit (ML), the stronger the decrease of fraud cases; fraud cases for ML 8/16 are comparable to prestige (Configuration 1); further scaling of ML does not decrease fraud cases any further |
| Reliability: Scaling of cheaters | Configuration 3 | Prestige and image are capable of eliminating fraud completely, independent of the initial cheater rate |
| Reliability: Impact of lies | Configuration 4 | Image cannot reduce the rate of lies, whereas prestige is able to banish the spread of false information |
| Scalability | Configuration 5 | The more the number of providers rises, the more effectively reputation, and especially prestige, can handle entering cheaters; each time the cheater population increases, the number of fraud cases drops more rapidly and more deeply for all three reputation types |
| Stability | Configuration 6 | The more fraud cases can be reduced, the more the cheaters' average profit per round diminishes, and the more cheaters become bankrupt and leave the market |
| Flexibility | Configuration 7 | Prestige fosters the formation of organisations |

2 According to Scott (1995: 33), "institutions consist of cognitive, normative, and regulative structures and activities that provide stability and meaning to social behaviour." Institutions — in terms of "property rights, governance structures, conceptions of control, and rules of exchange — enable actors in markets to organize themselves, to compete and to cooperate, and to exchange" (Fligstein 1996: 658).

3 The notion of 'desirable conduct' refers to possible solutions to the problem of social order and may comprise social cooperation, altruism, reciprocity, or norm obedience (cf. Conte and Paolucci 2002: 1).

4 Conte and Paolucci (2002: 76f.) draw a clear distinction between the 'transmission of reputation (or gossip)' in terms of a dissemination of a cognitive representation and the 'contagion of reputation' (or 'prejudice') in the sense of the spreading of a property in cases of social proximity and common membership in a social group, network or organisation.

5 The notion of markets as 'social fields' (cf. Bourdieu 2005, Fligstein 2001) implies that markets are structured (1) by a distribution of desirable and scarce resources (capital) that define the competitive positions of agents and constrain their strategic options, (2) by institutional rules that guide and constrain the actions of agents, and (3) by practices of competing agents which engage in power struggles to control relevant market shares and improve their relational position within the market field.

6 The simulation software was implemented in Java and is available at: http://www.ags.uni-sb.de/~chahn/ReputationFramework.

AXELROD R (1984) The Evolution of Cooperation. New York: Basic Books.

BA S L and Pavlou P A (2002) Evidence of the effect of trust building technology in electronic markets: Price premiums and buyer behavior. MIS Quarterly 26 (3). pp. 243-268.

BHAVNANI R (2003) Adaptive Agents, Political Institutions and Civic Traditions in Modern Italy. Journal of Artificial Societies and Social Simulation 6 (4). https://www.jasss.org/6/4/1.html

BOURDIEU P (1980) The production of belief: contribution to an economy of symbolic goods. Media, Culture and Society 2. pp. 261-293.

BOURDIEU P (1992) Language and Symbolic Power. Oxford: Blackwell.

BOURDIEU P (1994) In Other Words. Essays Towards a Reflexive Sociology. Cambridge, Oxford: Polity Press.

BOURDIEU P (1998) The Forms of Capital. In Halsey A H, Lauder H, Brown P, Stuart Wells A (Eds.), Education: Culture, Economy, and Society. Oxford, New York: Oxford University Press. pp. 46-58.

BOURDIEU P (2000) Pascalian Meditations. Stanford/Ca.: Stanford University Press.

BOURDIEU P (2005) Principles of an Economic Anthropology. In Smelser N J, Swedberg R (Eds.), The Handbook of Economic Sociology. second edition, Princeton: Princeton University Press. pp. 75-89.

BOURDIEU P and Wacquant L J D (1992) An Invitation to Reflexive Sociology. Cambridge, Oxford: Polity Press.

CASTELFRANCHI C (2000) Engineering Social Order. In Omicini A, Tolksdorf R, Zambonelli F (Eds.), Engineering Societies in the Agents World. First International Workshop, ESAW 2000, Berlin, Germany, August 2000. Revised Papers. Lecture Notes in Artificial Intelligence LNAI 1972. Springer-Verlag: Berlin, Heidelberg, New York. pp. 1-18.

CASTELFRANCHI C and Conte R (1995) Cognitive and Social Action. London: UCL Press.

CASTELFRANCHI C, Conte R and Paolucci M (1998) Normative reputation and the costs of compliance. Journal of Artificial Societies and Social Simulation 1 (3). https://www.jasss.org/1/3/3.html

COLEMAN J (1988) Social Capital in the Creation of Human Capital. American Journal of Sociology, 94 (Supplement). pp. 95-120.

CONTE R and Gilbert N (1995) Introduction: Computer simulation for social theory. In Gilbert N and Conte R (Eds.), Artificial Societies: The Computer Simulation of Social Life. London: UCL Press. pp. 1-15.

CONTE R and Paolucci M (2002) Reputation in Artificial Societies: Social Beliefs for Social Order. Dordrecht: Kluwer Academic Publishers.

DASGUPTA P (1988) Trust as a Commodity. In Gambetta D (Ed.), Trust: Making and Breaking Cooperative Relations. Oxford, New York: Basil Blackwell. pp. 49-72.

DAVIS R and Smith R G (1983) Negotiation as a metaphor for distributed problem solving. Artificial Intelligence 20. pp. 63-109.

DELLAROCAS C (2003) The digitization of word of mouth: Promise and challenges of online feedback mechanisms. Management Science 49 (10). pp. 1407-1424.

ELSTER J (1989) The Cement of Society. A Study of Social Order. Cambridge: Cambridge University Press.

FISCHER K and Florian M (2005) Contribution of Socionics to the Scalability of Complex Social Systems: Introduction. In Fischer K, Florian M and Malsch T (Eds.), Socionics: Its Contributions to the Scalability of Complex Social Systems, Lecture Notes in Artificial Intelligence LNAI 3413. Berlin, Heidelberg, New York: Springer-Verlag.

FLIGSTEIN N (1996) Markets as politics: A political-cultural approach to market institutions. American Sociological Review 61. pp. 656-673.

FLIGSTEIN N (2001) The architecture of markets. An economic sociology of twenty-first-century capitalist societies. Princeton and Oxford: Princeton University Press.

FOMBRUN C J (1996) Reputation: Realizing Value from the Corporate Image. Boston, Mass.: Harvard Business School Press.

FOMBRUN C and Shanley M (1990) What's in a Name? Reputation-Building and Corporate Strategy. Academy of Management Journal 33. pp. 233-258.

GRANOVETTER M (1985) Economic Action and Social Structure: The Problem of Embeddedness. American Journal of Sociology 91. pp. 481-510.

HAHN C, Fley B, and Florian M (2006) Self-regulation through social institutions: A framework for the design of open agent-based electronic marketplaces. Computational & Mathematical Organization Theory 12 (2-3). pp. 181-204.

HALES D (2002) Group Reputation Supports Beneficent Norms. Journal of Artificial Societies and Social Simulation 5 (4). https://www.jasss.org/5/4/4.html

JENNINGS N R, Sycara K and Wooldridge M J (1998) A roadmap of agent research and development. Journal of Autonomous Agents and Multi-Agent Systems 1 (1). pp. 7-38.

KOLLOCK P (1994) The Emergence of Exchange Structures: An Experimental Study of Uncertainty, Commitment, and Trust. American Journal of Sociology 100 (2). pp. 313-345.

KREPS D M and Wilson R (1982) Reputation and Imperfect Information. Journal of Economic Theory 27. pp. 253-279.

LEPPERHOFF N (2002) SAM - Simulation of Computer-mediated Negotiations. Journal of Artificial Societies and Social Simulation 5 (4). https://www.jasss.org/5/4/2.html

MALSCH T (2001) Naming the Unnamable: Socionics or the Sociological Turn of/to Distributed Artificial Intelligence. Autonomous Agents and Multi-Agent Systems 4. pp. 155-186.

MERTON R K (1967) Social Theory and Social Structure. Revised and enlarged edition, New York: The Free Press.

PANZARASA P and Jennings N R (2001) The Organisation of Sociality: A Manifesto for a New Science of Multi-Agent Systems. Proceedings of the 10th European Workshop on Multi-Agent Systems (MAAMAW-01), Annecy, France. http://www.ecs.soton.ac.uk/~nrj/download-files/maamaw01.pdf

PARSONS T (1951) The Social System. Glencoe, Ill. [etc.]: The Free Press.

PAVLOU P A and Gefen D (2004) Building effective online marketplaces with institution-based trust. Information Systems Research 15 (1). pp. 37-59.

RAO H (1994) The Social Construction of Reputation: Certification Contests, Legitimation, and the Survival of Organizations in the American Automobile Industry, 1895-1912. Strategic Management Journal 15. pp. 29-44.

RAUB W and Weesie J (1990) Reputation and Efficiency in Social Interactions: An Example of Network Effects. American Journal of Sociology 96 (3). pp. 626-654.

RESNICK P, Zeckhauser R, Friedman E and Kuwabara K (2000) Reputation Systems. Communications of the ACM 43 (12). pp. 45-48.

RITZER G (1996) Sociological Theory. Fourth Edition. New York etc.: McGraw-Hill.

ROUCHIER J, O'Connor M and Bousquet F (2001) The creation of a reputation in an artificial society organised by a gift system. Journal of Artificial Societies and Social Simulation 4 (2). https://www.jasss.org/4/2/8.html

SAAM N J and Harrer A (1999) Simulating Norms, Social Inequality, and Functional Change in Artificial Societies. Journal of Artificial Societies and Social Simulation 2 (1). https://www.jasss.org/2/1/2.html

SABATER J (2003) Trust and reputation for agent societies. Monografies de l'Institut d'Investigació en Intel.ligència Artificial, Number 20. Spanish Scientific Research Council. Universitat Autònoma de Barcelona Bellaterra, Catalonia, Spain (PhD thesis) http://www.iiia.csic.es/~jsabater/Documents/Thesis.pdf

SABATER J and Sierra C (2002) Reputation and Social Network Analysis in Multi-Agent Systems. Proceedings of First International Joint Conference on Autonomous Agents and Multiagent Systems. pp. 475-482.

SAWYER R K (2003) Artificial societies: Multi agent systems and the micro-macro link in sociological theory. Sociological Methods and Research 31 (3). pp. 325-363.

SCHILLO M, Bürckert H J, Fischer K and Klusch M (2001) Towards a Definition of Robustness for Market-style open Multiagent Systems. Proceedings of the Fifth International Conference on Autonomous Agents (AA'01). pp. 75-76.

SCHILLO M, Fischer K, Fley B, Florian M, Hillebrandt F and Spresny D (2004) FORM — A Sociologically Founded Framework for Designing Self-Organization of Multiagent Systems. In Lindemann G, Moldt D, Paolucci M and Yu B (Eds.), Regulated Agent-Based Social Systems. First International Workshop, RASTA 2002. Bologna, Italy, July 2002. Revised Selected and Invited Papers. Lecture Notes in Artificial Intelligence LNAI 2934. Berlin, Heidelberg, New York: Springer-Verlag. pp. 156-175.

SCOTT W R (1995) Institutions and Organizations. Thousand Oaks, London, New Delhi: Sage Publications.

SHAPIRO S (1987) The Social Control of Impersonal Trust. The American Journal of Sociology 93 (3). pp. 623-658.

SHENKAR O and Yuchtman-Yaar E (1997) Reputation, image, prestige, and goodwill: An interdisciplinary approach to organizational standing. Human Relations 50 (11). pp. 1361-1381.

TADELIS S (1999) What's in a Name? Reputation as a Tradeable Asset. American Economic Review 89 (3). pp. 548-563.

WEIGELT K and Camerer C (1988) Reputation and Corporate Strategy: A Review of Recent Theory and Applications. Strategic Management Journal 9. pp. 443-454.

YOUNGER S (2004) Reciprocity, Normative Reputation, and the Development of Mutual Obligation in Gift-Giving Societies. Journal of Artificial Societies and Social Simulation 7 (1). https://www.jasss.org/7/1/5.html

YU B and Singh M (2002) Distributed Reputation Management for Electronic Commerce. Computational Intelligence 18 (4). pp. 535-549.


© Copyright Journal of Artificial Societies and Social Simulation, [2007]