Modelling Value Change: An Exploratory Approach


Delft University of Technology, Netherlands

Journal of Artificial Societies and Social Simulation 27 (1) 3

<https://www.jasss.org/27/1/3.html>

DOI: 10.18564/jasss.5199

Received: 01-Jul-2022 Accepted: 30-Jun-2023 Published: 31-Jan-2024

Abstract

Value and moral change have increasingly become topics of interest in the philosophical literature. Several theoretical accounts have been proposed. These are usually based on certain theoretical and conceptual assumptions. Their strengths and weaknesses are often difficult to determine and compare because they are based on limited empirical evidence. We propose using agent-based modeling to build simulation models that can help us explore accounts of value change theoretically. We can investigate whether a simulation model based on a specific account of value change can reproduce relevant phenomena. To illustrate this approach, we build a model based on the pragmatist account of value change proposed by Van De Poel and Kudina (2022). We show that this model can reproduce four relevant phenomena, namely 1) the inevitability and stability of values, 2) differences between societies in openness and resistance to change, 3) moral revolutions, and 4) lock-in. This makes the account promising, although more research is needed to see how well it can explain other relevant phenomena and to compare its strengths and weaknesses with those of other accounts. On a more methodological level, our contribution suggests that simulation models might be useful for theoretically exploring accounts of value change and making further progress in this area.

Introduction

Technological and societal developments can lead to changes in values. Under different headings (e.g., technomoral change, moral revolutions, moral progress, and value change), this phenomenon has increasingly drawn attention in the philosophical literature (Baker 2019; Buchanan et al. 2016; Danaher 2021; Kitcher 2021; Morris 2015; Swierstra 2013; Van De Poel & Kudina 2022; Van Der Burg 2003). In the philosophy of technology, scholarship has evolved from the initial view that technology determines society (e.g., Ellul 1964) to the idea that technologies are human constructs shaped by interests and values (e.g., Winner 1980) and, more recently, a co-evolutionary view on technology and values, where technologies and values develop in interaction and co-shape each other (Jasanoff 2016; Rip & Kemp 1998; Swierstra & Rip 2007; Van De Poel 2020).

The literature offers various accounts of value change (e.g., Baker 2019; Swierstra 2013; Van De Poel & Kudina 2022). Most are based on certain theoretical assumptions and few empirical examples. In general, the empirical evidence for these approaches is scant. We think further headway can be made by building formal simulation models of value change accounts. Building such models has several advantages. First, creating a simulation model forces the proponents of certain accounts to be more explicit and precise about the key assumptions and the presumed mechanisms of value change. Second, simulation models can be used for conceptual and theoretical exploration (Edmonds et al. 2019). Once we have built a simulation model based on a certain account, we can systematically explore whether the model can reproduce certain phenomena that we consider key for value change or, more broadly, for the interaction between society, technology, and values. The underlying idea is that if a model based on a certain theoretical account of value change can reproduce key phenomena, the underlying account is more convincing. In this way, we can explore and compare the theoretical strengths and weaknesses of accounts of value change in more detail. It should be noted that this approach does not address the empirical adequacy of a simulation model or of the underlying theoretical account of value change. This needs to be addressed in further research.

To further develop our approach, we create an explorative agent-based model that models value change based on ideas and assumptions from philosophical pragmatism, particularly Dewey (1939). More specifically, we build on a proposal by Van De Poel & Kudina (2022) in which values are seen as “evaluative devices” to gauge existing technologies and related moral problems. We assume that the incapacity to cope with new morally problematic situations may cause value change and foster innovation. Next, we use an exploratory approach combining agent-based modeling (Epstein & Axtell 1996) and scenario discovery (Bryant & Lempert 2010) to investigate whether and under what conditions we can reproduce relevant value phenomena as well as the underlying (expected) mechanisms resulting in the occurrence of these phenomena. The agent-based model simulates potential value change that might emerge in a specific society. Using the scenario discovery technique, we run the model multiple times and classify value change phenomena in different kinds of societies.

In particular, we zoom in on the conditions under which specific phenomena that are described in the philosophical and social science literature and that can be related to value change occur. This paper focuses on four phenomena: 1) the inevitability and stability of values, 2) differences in openness and resistance to value change between societies, 3) moral revolutions, and 4) lock-in. With this work, we contribute to a new field of application for agent-based modeling. While promising, the use of agent-based models and exploratory tools within the ethics of technology is still relatively scarce. We contribute to the discussion on conceptualizing values by doing justice to how they are understood in different scientific fields (e.g., behavioral science vs. ethics of technology). Additionally, we contribute to building formal models of value change. We aim to enrich the philosophical literature by formalizing the relationship between technology, society, and values.

Theory

Previous work

The literature contains several agent-based models that include values. Examples include Boshuijzen-van Burken et al. (2020), de Wildt et al. (2021), Gore et al. (2019), Harbers (2021), Kreulen et al. (2022), and Mercuur et al. (2019). In most cases, these models are built on the literature on value-sensitive design (e.g., Friedman & Hendry 2019), on Schwartz’s theory of values (e.g., Schwartz & Bilsky 1987; Schwartz 1992), or on a combination of both.1 In this literature, values are either very broadly understood as “what people consider important in life” (in the value-sensitive design literature) or as part of someone’s personality (in Schwartz’s theory or other psychological theories; e.g., Steg & De Groot 2012). Values have also been modeled in operations research, where they mainly function as (given) decision criteria in complex decision problems with multiple alternatives and various conflicting values (Brandao Cavalcanti et al. 2017; Curran et al. 2011; Keeney 1996; Keeney & von Winterfeldt 2007).

Our approach is different from extant literature in three respects. First, we are interested in values at the level of society. So rather than modeling values as characteristics of individuals or as (external) decision criteria, we model them as characteristics of societies. Second, as far as we know, we are the first to explicitly model a pragmatist account of values, in which values are considered functional for recognizing, addressing, and solving moral problems. We explain the pragmatist account in more detail below. Third, we are interested in value change at the societal level and in interaction with technology. Although existing models can address value change at the individual level, they ignore the societal level and/or the interaction with technology.

The theoretical basis of our model: pragmatist account of value change

As a starting point for our model, we use the pragmatist account of value change presented in Van De Poel & Kudina (2022). In this account, values are understood as tools for moral evaluation. They help people to recognize, analyze, and (possibly) resolve morally problematic situations. The idea is that values can change if and when they are no longer helpful (functional) in recognizing, analyzing, and resolving these situations. This means that value change may be triggered when new morally problematic situations arise that cannot, or not sufficiently, be resolved with existing values (or existing priorities among values). For example, the emergence of sustainability as a value in the 1980s may be viewed as a response to increasing environmental problems and to tensions between economic and environmental values that could not be resolved by existing values.

Van de Poel and Kudina’s pragmatist account of value change (2022) is broadly functionalist, i.e., it understands values as serving certain functions, primarily moral evaluation.2 However, it differs from other functionalist accounts of values in the social sciences and psychology. In those accounts, values are seen as serving a social function, such as providing a shared identity, contributing to social stability, or aligning intentions and actions, or a psychological function for individuals, such as identity formation or coping with certain universal human challenges. Here, we understand values primarily as serving a function in moral evaluation and problem-solving.

Van De Poel & Kudina (2022) distinguish between three dynamics of value change: (1) value dynamism, (2) value adaptation, and (3) value emergence. The first occurs if human agents confronted with a morally problematic situation reinterpret existing values to better deal with such a situation. This may lead to some change in how a value is understood or prioritized vis-a-vis other values, but the change does not carry over to the societal level and is, therefore, short-lived. The second case is similar to the first, but the value change carries over to the societal level and will last longer. In the third case, agents cannot resolve the morally problematic situation based on existing values. This may lead to a new value to deal with the problem, which may induce a societal change.

The value change account offered by Van De Poel & Kudina (2022) covers more than technology-induced value change, but the authors also apply it to technology, as we do in this paper. We model the interaction between values and technologies at the societal level. To this end, we assume that technologies are developed and employed in a society to fulfill needs that exist in that society. However, employing technologies may also have unintended effects that manifest as (new) morally problematic situations. In line with the pragmatist account of value change and the underlying ideas of the pragmatist philosopher John Dewey, we assume that such moral problems are initially latent.3

We assume that agents must have the relevant values to recognize a morally problematic situation and make a latent moral problem a perceived one. For example, agents need environmental values to identify environmental problems. However, if a morally problematic situation is not recognized or resolved, the problem may increase and become apparent so that the relevant value emerges (cf. Van De Poel & Kudina 2022). For example, societies may employ an energy technology, like coal, to meet energy needs. As an unintended by-product, this may create environmental problems. If these societies have no environmental values, agents may not immediately recognize the environmental issues as problematic. However, the problem may become undeniable, and an environmental value (like sustainability) may emerge.4

We assume that the values of a society influence the technologies the society employs. In other words, agents will use technologies that serve their needs and choose those that best align with their values. For example, a society that places importance on environmental values will strive to avoid using coal as an energy technology. Once another technology (for example, wind energy) that fulfills the same needs but is more in line with the values of that society becomes available, the society is likely to switch to it. This brings us to a final element of how we model the interaction between technologies and values. To a certain extent, societies tend to innovate, i.e., to look for (and find) new technologies that fulfill their needs. However, these technologies may differ in their (unintended) effects and, therefore, in the degree to which they respect certain values.

Phenomena of value (change)

Van De Poel and Kudina’s pragmatist account was developed to explain value change better. Although there are other accounts of value or moral change (Baker 2019; Nickel 2020; Swierstra et al. 2009), the authors claim that their account can also (or even better) explain aspects of value change highlighted by these other authors. In this paper, we have a somewhat different interest. We do not aim to compare the pragmatist account with other accounts. We are interested in investigating whether it can reproduce some related phenomena in the interaction between values, technology, and society.

We identify four phenomena related to values and value change. We build a simulation model and investigate whether the conceptualization of value change offered by Van De Poel & Kudina (2022) can reproduce these phenomena and, if so, under what conditions.

We focus on the following four phenomena:

- The inevitability and stability of values

- The difference between societies in openness and resistance to value change

- The occurrence of moral revolutions

- The occurrence of lock-in

This list resulted from an initially longer list of phenomena that seemed relevant to the interaction between technology, society, and values. We then reduced this list to those phenomena that would require roughly the same model conceptualization. Some phenomena, for example, would require distinguishing specific values (e.g., sustainability or privacy), which our chosen model conceptualization does not allow.5 We describe these phenomena in more detail below.

The inevitability and stability of values

It would seem a social fact that societies are characterized by values. It is difficult to imagine a society with no values. Humans are evaluative and normative beings who seem to hold certain values by their very nature. Moreover, values are relatively stable over time. They differ from attitudes and preferences, which are more situation-specific and transient (Rokeach 1973; Williams Jr. 1968). They also differ from norms, which are more specific than values and contain prescriptions for action often based on sanctions (Hitlin & Piliavin 2004). Values transcend specific situations and are relatively stable over time (Schwartz & Bilsky 1987).

While it may seem a truism that values are inevitable and rather stable elements of a society, it is not straightforward that a functionalist conceptualization of values that allows for value change can account for the inevitability and stability of values. In a functionalist account, it would seem conceivable – at least theoretically – that values disappear once the function they fulfill is no longer needed or required. In our case, we consider values to be functional for addressing and resolving moral problems. This suggests that they may disappear if all moral problems are solved.

This does not mean that a functionalist conception of values cannot account for the inevitability and stability of values. In such a conception, the absence of stable values is a theoretical possibility. However, one would expect that such a society (without stable values) would not be able to deal with moral problems adequately. In other words, if we simulated a society without stable values, we would expect it to be characterized by a high severity of moral problems, or at least by a severity of moral problems that is significantly higher than in societies with stable values.

Societies differ in openness and resistance to change

It seems likely that societies differ in their openness or resistance to change, and this characteristic is likely to affect, among other things, how such societies react to external events or shocks. Theoretically, this difference in what we will call “openness to change” may be understood along two dimensions that the literature sometimes treats as distinguishing traditional from modern societies: (1) the pace of technological change and innovation and (2) the degree to which a society is characterized by a division of labor and, consequently, the extent to which different value spheres co-exist in that society.

Traditional, and in particular pre-industrial, societies are often characterized by a slower pace of technological development and less emphasis on technological innovation. They are also typically more homogeneous. So-called modern societies are characterized by complex divisions of labor (Durkheim 1933), which may give rise to distinct social spheres in which different values are prioritized (Walzer 1983; Weber 1948). Therefore, it seems likely that such societies have a higher plurality of values and are more open to value change.

We recognize that distinguishing traditional from modern societies is difficult and potentially controversial. Any defining difference might meet objections and counterexamples. Moreover, the idea that there is any sharp distinction might be contentious. Still, we maintain that, theoretically, it is worthwhile to distinguish societies with different degrees of “openness to change.” And we believe it would be a virtue of a conceptualization of value change if it could explain why such a difference matters for how societies react to external perturbations. The question of which societies are more or less open to change, and whether this maps onto any distinction between ‘traditional’ and ‘modern’ societies, is empirical and beyond the scope of this paper.

Moral revolutions

While value changes may emerge gradually over time, sometimes they occur suddenly and in a relatively short period. An example is the sexual revolution, which led to a change in sexual morality (and hence a value change) in certain societies in a relatively short period (Diczfalusy 2000; Swierstra 2013; Van Der Burg 2003). Such phenomena have been described as moral revolutions (Appiah 2010; Baker 2019; Lowe 2019; Pleasants 2018). Baker (2019), for example, understood moral revolutions as akin to Kuhnian scientific revolutions (Kuhn 1962). According to him, moral dissenters articulate moral anomalies by pointing out that the old moral paradigm can no longer address current moral problems.

In a survey article on recent accounts of moral revolutions in moral philosophy, Klenk et al. (2022) suggest that there is agreement in the literature that “part of what makes revolutions distinctive is the radicality, depth or fundamentality of the moral changes involved.” There is no agreement, however, that moral revolutions need to happen suddenly. Appiah (2010 p. 172) describes a moral revolution as ‘a large change in a small time’, whereas authors like Baker (2019), Pleasants (2018), and Kitcher (2021) think they can stretch over longer periods, even several generations. Klenk et al. (2022 p. 6) state that “some of the apparent tension between these comments on the speed of revolutions may be resolved by acknowledging that a moral revolution can be slow in the making (the challenging of moral codes, the presentation of an alternative, the individual activism etc.) while the behavioral change can be sudden.”

To see whether our model can reproduce moral revolutions, we understand such revolutions as a significant or radical change in the importance of values in a relatively short period. Since time in our model is measured in abstract ticks rather than calendar time, we compare the period in which a value change occurs to the entire length of the model run. We do not assume a particular duration for the radical value change, but it needs to be short compared to the typical lifespan of societies.
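The operationalization above can be sketched as a simple check on how one value's importance evolves over a run. This is a minimal sketch, assuming the importance of a single value is recorded once per tick; the function name and both thresholds (`min_change` for what counts as a radical change and `max_window_fraction` for what counts as a short period relative to the run length) are hypothetical and not taken from the published model.

```python
from typing import Sequence


def detect_moral_revolution(
    importance: Sequence[float],
    min_change: float,
    max_window_fraction: float,
) -> bool:
    """Return True if the importance of a value changes by at least `min_change`
    within a window no longer than `max_window_fraction` of the run length.
    Both thresholds are hypothetical modeling choices."""
    n_ticks = len(importance)
    # Express the "short period" relative to the entire model run.
    window = max(1, int(max_window_fraction * n_ticks))
    for start in range(n_ticks):
        end = min(n_ticks, start + window + 1)
        for later in range(start + 1, end):
            if abs(importance[later] - importance[start]) >= min_change:
                return True
    return False


# A slow drift versus an abrupt jump in the importance of one value.
gradual = [i * 0.05 for i in range(100)]
sudden = [0.0] * 50 + [5.0] * 50
print(detect_moral_revolution(gradual, min_change=3.0, max_window_fraction=0.05))
print(detect_moral_revolution(sudden, min_change=3.0, max_window_fraction=0.05))
```

Under these assumptions the gradual series changes by the same overall amount but never within a short window, so only the abrupt series counts as a revolution.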

Lock-in

We are interested in seeing whether our simulation model can reproduce lock-in, which we understand as the continued use of a technology despite it being morally unacceptable. In the literature, technological lock-in is often understood as the phenomenon that a technology is dominant in use even though a better technology that roughly fulfills the same needs is available (Arthur 1989; David 1985). ‘Better’ can mean that the alternative technology is more effective or more efficient in fulfilling needs or does so with fewer harmful secondary consequences, like pollution and risks. In the literature, lock-in has been analyzed as the result of path dependencies in the development and adoption of technology (Arthur 1989; David 2007). Such path dependencies may, for example, be caused by increasing returns to adoption or by network externalities. A classic example is the QWERTY keyboard. Its layout was chosen to avoid typewriter jamming, but ergonomically, it was allegedly not the best layout for users in terms of efficiency and muscle strain (David 1985). However, it remains the dominant design in the computer age (where jamming is impossible), mainly because so many people have become used to it. It has become the standard in typing and training courses.

Bergen (2016) points out that path dependency and lock-in are not purely economic phenomena. Political, institutional, and infrastructural lock-in to a certain technology option or project has also been discussed in the literature (e.g., Frantzeskaki & Loorbach 2010; Walker 2000). Terms such as carbon lock-in (Unruh 2000) and moral lock-in (Bruijnis et al. 2015) have been used to refer to the continued use of a morally undesirable technology. Bergen (2016) suggests that lock-in may also occur without (better) technological alternatives. He points out that the absence of technological alternatives may itself result from path dependencies and micro-irreversibilities, for example, if a technology is preferred due to political (or ideological) factors, even if it has significant drawbacks.

It should be noted that our operationalization of lock-in in terms of the continued use of an unacceptable technology reflects an idea that is also found in the literature on lock-in, namely that lock-in is undesirable. For some authors, the undesirability lies in the fact that a better technology is available but not used (e.g., David 1985). For others, it lies in the fact that it becomes more difficult to develop alternative technologies (e.g., Bergen 2016). Still others understand lock-in simply as the continued use of an undesirable technology, as in carbon lock-in (e.g., Unruh 2000) or moral lock-in (Bruijnis et al. 2015).
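The operationalization of lock-in as the continued use of an unacceptable technology can be expressed as a check over per-tick records. This is a minimal sketch, assuming the model logs, for each tick, whether a technology is in use and whether it is deemed acceptable; the record type `TechnologyState`, the function `is_locked_in`, and the `min_ticks` threshold for "continued" use are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class TechnologyState:
    """Hypothetical per-tick record for one technology."""
    in_use: bool      # is the technology used to fulfill a need in this tick?
    acceptable: bool  # is it morally acceptable given society's current values?


def is_locked_in(history: Sequence[TechnologyState], min_ticks: int) -> bool:
    """Lock-in (hypothetical operationalization): the technology stays in use
    while unacceptable for at least `min_ticks` consecutive ticks."""
    streak = 0
    for state in history:
        if state.in_use and not state.acceptable:
            streak += 1
            if streak >= min_ticks:
                return True
        else:
            # Use stopped or the technology became acceptable again: reset.
            streak = 0
    return False


bad = TechnologyState(in_use=True, acceptable=False)
ok = TechnologyState(in_use=True, acceptable=True)
switched = TechnologyState(in_use=False, acceptable=False)
print(is_locked_in([ok] * 3 + [bad] * 10, min_ticks=5))       # stuck with it
print(is_locked_in([bad] * 2 + [switched] * 10, min_ticks=5))  # society switched
```

Requiring consecutive ticks separates a brief delay in switching away from a technology from genuine lock-in; the choice of `min_ticks` would itself be a parameter to explore.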

Model Description

This section describes the conceptualization and parameters of the model. The model can be found online.6 An ODD+D description (Müller et al. 2013) of the model is provided in the Appendix.

Simulation goal and requirements for model validation

The simulation experiment aims to evaluate whether the pragmatist account of value change proposed in Van De Poel & Kudina (2022) can successfully reproduce the four phenomena described in the previous section. To do so, the simulation experiment should comply with the following two requirements. First, it should follow the conceptualization of value change by Van De Poel & Kudina (2022). Table 1 shows how key elements of the pragmatist account of value change have been translated into the model. The model is based on four key assumptions: (1) values influence the selection of preferable technologies, (2) technologies may have unintended consequences and lead to (new) morally problematic situations, (3) values are necessary to recognize moral problems caused by technologies, and (4) society may respond to moral problems through value dynamism, value adaptation, and innovation.

Second, the simulation experiment should allow us to evaluate whether the conceptualization can reproduce the relevant phenomena. To do so, we need to pay attention to the metric (whether the phenomenon occurred in the model) and the mechanism (whether the process leading to the phenomena is plausible).

We use an agent-based model (ABM) because we expect that agent heterogeneity (i.e., technologies having different properties and needs having different impacts) will affect the occurrence of the emergent behavior (i.e., the phenomena of value change). Additionally, compared to other modeling paradigms such as system dynamics, ABM makes it easier to visualize explicitly the processes leading to value change (i.e., populations using technologies to address certain needs).

We identify the conditions under which value change phenomena occur using the scenario discovery approach (Bryant & Lempert 2010). Exploratory modeling and analysis (EMA) (Bankes 1993; Kwakkel & Pruyt 2013) is used to run the simulation model multiple times, each time with a different combination of input parameters (e.g., the degree of openness to change of societies and the propensity for value adaptation, value dynamism, and innovation). PRIM (Patient Rule Induction Method) (Friedman & Fisher 1999) is then used to trace which combinations of input parameters led to the occurrence of the phenomenon of interest.
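The scenario discovery workflow (sample many parameter combinations, run the model for each, then let PRIM find a box of parameter ranges where the phenomenon is concentrated) can be sketched as follows. This is a simplified, self-contained illustration of PRIM's greedy peeling step, not the EMA Workbench implementation used in the paper; it omits pasting and the coverage/density trade-off inspection that full PRIM implementations offer. The parameter names `openness` and `innovation` and the function `toy_phenomenon` are hypothetical stand-ins for real model runs.

```python
import random
from typing import Dict, List, Tuple

# A box maps each input parameter name to an inclusive (lower, upper) range.
Box = Dict[str, Tuple[float, float]]


def sample_experiments(ranges: Box, n: int, seed: int = 0) -> List[Dict[str, float]]:
    """Draw n parameter combinations uniformly at random. (EMA tools commonly use
    Latin hypercube sampling; plain random sampling keeps this sketch short.)"""
    rng = random.Random(seed)
    return [{k: rng.uniform(lo, hi) for k, (lo, hi) in ranges.items()} for _ in range(n)]


def in_box(x: Dict[str, float], box: Box) -> bool:
    return all(lo <= x[k] <= hi for k, (lo, hi) in box.items())


def prim_peel(xs: List[Dict[str, float]], ys: List[bool],
              alpha: float = 0.05, min_support: float = 0.1) -> Box:
    """Greedy PRIM peeling: repeatedly trim an alpha share of the cases from the
    lower or upper end of one parameter, keeping the trim that most increases
    the share of cases of interest inside the box. Stops when no trim helps or
    the box would hold fewer than min_support of all cases."""
    box: Box = {k: (min(x[k] for x in xs), max(x[k] for x in xs)) for k in xs[0]}
    while True:
        inside = [(x, y) for x, y in zip(xs, ys) if in_box(x, box)]
        current = sum(y for _, y in inside) / len(inside)
        best = None
        for k, (lo, hi) in box.items():
            values = sorted(x[k] for x, _ in inside)
            cut = max(1, int(alpha * len(values)))
            if len(values) <= 2 * cut:
                continue
            # Candidate trims: raise the lower bound or lower the upper bound.
            for bounds in ((values[cut], hi), (lo, values[-cut - 1])):
                trial = {**box, k: bounds}
                kept = [y for x, y in inside if in_box(x, trial)]
                if len(kept) < min_support * len(xs):
                    continue
                density = sum(kept) / len(kept)
                if best is None or density > best[0]:
                    best = (density, trial)
        if best is None or best[0] <= current:
            return box
        box = best[1]


def toy_phenomenon(x: Dict[str, float]) -> bool:
    # Hypothetical stand-in for one model run: the phenomenon "occurs" only
    # in societies that are both open to change and prone to innovate.
    return x["openness"] > 0.7 and x["innovation"] > 0.5


ranges = {"openness": (0.0, 1.0), "innovation": (0.0, 1.0)}
xs = sample_experiments(ranges, n=500, seed=42)
ys = [toy_phenomenon(x) for x in xs]
box = prim_peel(xs, ys)
print(box)
```

With this toy rule, the box that PRIM returns should have lower bounds near the hidden thresholds, which is the kind of "conditions under which the phenomenon occurs" statement scenario discovery aims for.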

Model conceptualization

Model elements

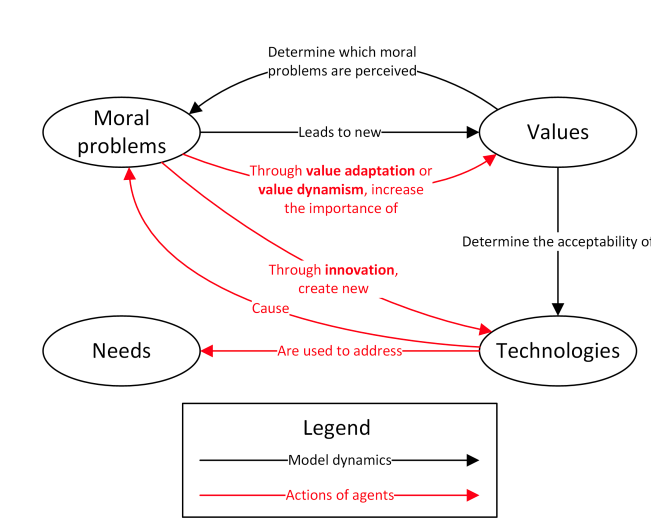

The conceptualization of the model follows the account of value change of Van De Poel & Kudina (2022) (See Table 1), as well as some other common assumptions in the relevant literature. For example, we do not only assume that technologies meet human needs (a commonsense assumption) but also that they may have unintended consequences that can lead to moral problems (e.g., Van De Poel & Royakkers 2011). We further assume that values affect the choice of technologies and can affect technological innovation, an assumption that is common in the literature on value-sensitive design (e.g., Friedman & Hendry 2019; van den Hoven et al. 2015), responsible innovation, and in Science and Technology Studies (e.g., Bijker & Law 1992). We use three options for reacting to moral problems (value dynamism, value adaptation, and innovation) based on Van De Poel & Kudina (2022), but with two deviations. First, we do not distinguish value emergence as a separate response because, in our model, it is an extreme case of value adaptation, where value importance changes from zero to a positive number (so we include it implicitly). Second, we distinguish innovation as a separate response. The literature recognizes that innovations can be developed to address moral problems (e.g., van den Hoven et al. 2015). For example, they may be developed to meet the sustainable development goals of the United Nations (e.g., European Commission 2012; Voegtlin et al. 2022). By adding innovation as a response, our model can simulate the mutual interaction between technology and values (society), an important phenomenon according to several authors (Rip & Kemp 1998; Swierstra 2013; Van De Poel 2020). We further assume that moral problems are not recognized if society lacks the relevant values (following the pragmatist account, see Table 1). However, they will be recognized if they become too big (a commonsense assumption). 
Finally, we assume that values that are not ‘used’ will disappear over time, an assumption we explained in the section on ‘the inevitability and stability of values’ above.

| Pragmatist account of value change | In model |
|---|---|
| Indeterminate situation | Latent moral problem |
| Values help to see what is morally salient | Values are needed to recognize (latent) moral problems |
| Values help to evaluate situations | Values determine the acceptability of technologies |
| Values provide clues/guidance for action | Values help to suggest innovations |
| Value dynamism | Temporary change in the importance of values |
| Value adaptation | Structural change in the importance of values |
| Value emergence | A new value appears in the model |

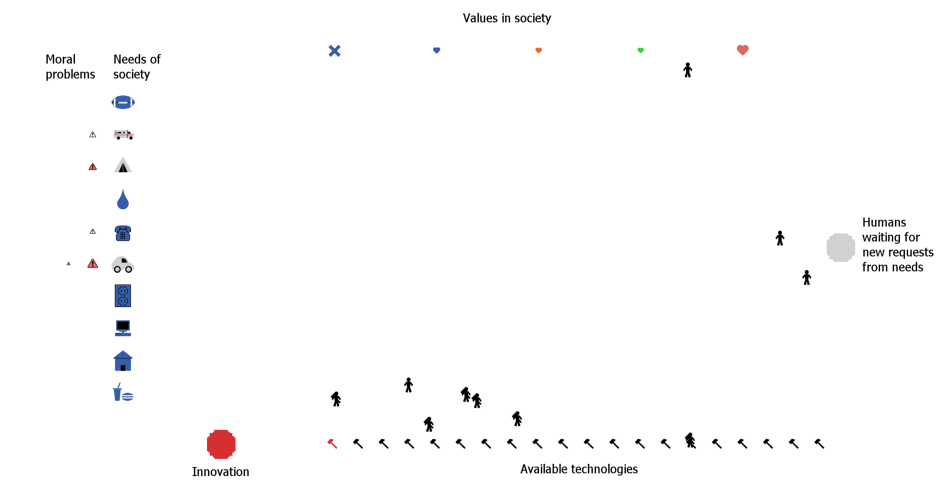

Our simulation model represents a society where agents fulfill societal needs by using technologies. The usage of these technologies can cause moral problems. If so, agents have three options to address these problems. First, they can adjust the values of society temporarily (value dynamism). As a result, the technology that has caused the problems becomes less desirable. Second, they can adjust society’s values, but permanently (value adaptation). The consequences are the same as for value dynamism, but the values that have been adjusted will not return to their initial settings over time. A third option is to create a new technology (innovation). If possible, the newly created technology will align with society’s current values (assuming agents aim for responsible innovation). Figure 1 visualizes the model, and Figure 2 conceptualizes it.

Example. Society is characterized by various needs. One example of such a need is energy. Various technologies, like coal power, nuclear power, and wind energy, may be used to fulfill that need. In the model, existing values determine which technologies are considered acceptable and are used. Suppose a society is characterized by the values of safety and reliability and has access to coal and nuclear power. In light of these values, coal power rather than nuclear power is deemed acceptable for generating energy. In turn, the use of this technology causes environmental problems. Since society does not yet have the value of sustainability, these environmental problems are initially not recognized and remain latent. However, above a certain threshold, they become perceived, and the value of sustainability emerges. The emergence of sustainability may have several consequences in the model. First, it may affect the acceptability of available technologies. If society has access to coal and nuclear power, the new value of sustainability might make coal power less acceptable and nuclear power more acceptable. This could change the technologies used to fulfill the energy need (and hence which moral problems are caused). Second, the value of sustainability may trigger new innovations and, for example, make wind energy available to society. If this technology (wind energy) becomes the dominant technology for fulfilling the energy need, it may in turn cause new moral problems. For example, wind turbines may negatively affect landscape aesthetics, or their noise may cause health problems for people. These problems may again lead to new value changes, which may trigger further technological changes.

Needs. The model comprises several needs. The role of agents in the model is to fulfill these needs using technologies.

Technologies. To fulfill a need, agents first need to choose a technology. Multiple technologies might exist that can fulfill the same need. In this case, the agent will choose one that is morally acceptable (not those in red in Figure 1) and that has frequently been used (the agent has learned to use the technology efficiently).
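The selection rule described above (prefer morally acceptable technologies, and among those the one used most often) can be sketched as follows, reusing the coal and wind example. This is a minimal sketch, not the model's code: the `Technology` fields, the weighted-impact `is_acceptable` rule, and its `threshold` of 1.0 are hypothetical assumptions about how values could determine acceptability.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Technology:
    name: str
    need: str
    # Hypothetical: how strongly using this technology feeds each moral problem,
    # keyed by the value that would recognize that problem.
    impacts: Dict[str, float] = field(default_factory=dict)
    times_used: int = 0  # proxy for how well agents have learned to use it


def is_acceptable(tech: Technology, value_importance: Dict[str, float],
                  threshold: float = 1.0) -> bool:
    """Hypothetical rule: a technology is unacceptable if its importance-weighted
    impact on any value exceeds `threshold`."""
    return all(value_importance.get(v, 0.0) * impact <= threshold
               for v, impact in tech.impacts.items())


def choose_technology(need: str, techs: List[Technology],
                      value_importance: Dict[str, float]) -> Optional[Technology]:
    candidates = [t for t in techs if t.need == need]
    acceptable = [t for t in candidates if is_acceptable(t, value_importance)]
    # Prefer acceptable technologies when any exist; otherwise fall back.
    pool = acceptable or candidates
    if not pool:
        return None
    # Among the pool, pick the most frequently used (best learned) technology.
    return max(pool, key=lambda t: t.times_used)


coal = Technology("coal", "energy", {"sustainability": 0.8}, times_used=50)
wind = Technology("wind", "energy", {"aesthetics": 0.3}, times_used=2)
print(choose_technology("energy", [coal, wind], {}).name)
print(choose_technology("energy", [coal, wind], {"sustainability": 2.0}).name)
```

Without a sustainability value, the familiar coal technology wins; once sustainability becomes important enough, coal turns unacceptable and the agent switches to wind, mirroring the narrative example.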

Moral problems. After choosing a technology, the agent turns to the need it aims to fulfill. After fulfilling the need, the usage of the technology may have caused moral problems. The severity of a moral problem can vary between zero and ten. Each time a technology is used, the severity of the moral problem may increase. This is determined by the characteristics of the technology and how well the agent has learned to use it (the more frequently the technology is used, the more efficient its usage). The severity of the moral problem is likely to trigger a reaction from agents. If it reaches the first threshold, the status of the problem changes from inexistent to unperceived (white warning sign in Figure 1). If it reaches a second threshold, the moral problem becomes perceived (colored warning sign in Figure 1).
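The severity update and the two thresholds can be illustrated with a short sketch. It assumes a hypothetical damping formula, `1 / (1 + 0.1 * times_used)`, to represent more efficient use of frequently used technologies; the actual update rule and threshold values of the model are not given here, so the names and numbers below are illustrative only.

```python
from enum import Enum


class ProblemStatus(Enum):
    INEXISTENT = "inexistent"
    UNPERCEIVED = "unperceived"  # latent problem (white warning sign in Figure 1)
    PERCEIVED = "perceived"      # perceived problem (colored warning sign in Figure 1)


MAX_SEVERITY = 10.0


def increase_severity(severity: float, base_impact: float, times_used: int) -> float:
    """Hypothetical update: each use adds the technology's base impact, damped as
    agents learn to use it more efficiently; the result is clipped to [0, 10]."""
    learning_factor = 1.0 / (1.0 + 0.1 * times_used)
    return min(MAX_SEVERITY, max(0.0, severity + base_impact * learning_factor))


def problem_status(severity: float, first_threshold: float,
                   second_threshold: float) -> ProblemStatus:
    """Map severity onto the three statuses using the two thresholds."""
    if severity >= second_threshold:
        return ProblemStatus.PERCEIVED
    if severity >= first_threshold:
        return ProblemStatus.UNPERCEIVED
    return ProblemStatus.INEXISTENT


severity = 0.0
for tick in range(5):
    severity = increase_severity(severity, base_impact=1.5, times_used=tick)
print(round(severity, 2), problem_status(severity, first_threshold=1.0, second_threshold=5.0))
```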

Values. Moral problems can become perceived if their severity is high and if society has the appropriate values to recognize them. Each value in the model has specific properties that allow a society to identify certain moral problems. Values in society determine whether technologies are deemed morally unacceptable (those in red in Figure 1). Agents will try to choose acceptable technologies if available. The importance of a value increases when the corresponding moral problems become more severe. As a result, technologies that were initially acceptable can become unacceptable over time.

Values may disappear if related moral problems have not occurred for some time. The variable ‘value memory of society’ affects how quickly values are forgotten.
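The value mechanisms of the two preceding paragraphs can be sketched as follows. The sketch assumes a linear importance update, an importance-weighted harm score compared against a fixed tolerance, and value memory expressed as a number of idle ticks. The names `Value`, `reinforce`, `acceptable`, and `forget` and all constants are hypothetical; the published model may use different rules.

```python
from dataclasses import dataclass

@dataclass
class Value:
    name: str
    importance: float
    idle_ticks: int = 0  # ticks since a related moral problem last occurred

def reinforce(value, severity, rate=0.1):
    """Raise importance in proportion to problem severity (assumed linear rule)."""
    value.importance += rate * severity
    value.idle_ticks = 0

def acceptable(harms, values, tolerance=5.0):
    """A technology stays acceptable while its importance-weighted harm is within tolerance.

    `harms` maps value names to the harm the technology does with respect to that value;
    harm to a value the society does not hold is simply not counted.
    """
    score = sum(v.importance * harms.get(v.name, 0.0) for v in values)
    return score <= tolerance

def forget(values, value_memory):
    """Advance one tick; values idle for longer than `value_memory` ticks disappear."""
    for v in values:
        v.idle_ticks += 1
    return [v for v in values if v.idle_ticks <= value_memory]
```

Because harm is only counted for values the society holds, a society without sustainability would not penalize coal's environmental harm at all in this sketch, mirroring the latent problems in the energy example.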

Value deliberation. If a moral problem is perceived, an agent can act on it in three ways: value dynamism, value adaptation, and innovation. This choice depends on society’s preferences towards each action (see Table 2).

If the agent chooses value dynamism, the importance of the value(s) corresponding to the moral problem increases temporarily. If the value does not yet exist, a new one is created. Increasing the importance of values can cause technologies that were first deemed acceptable to become morally unacceptable. After a certain number of ticks, the importance will return to its initial level. If the agent chooses value adaptation, the importance of values is also increased, but permanently. If the agent chooses innovation, a new technology that aligns with society’s values (i.e., morally acceptable) is likely to be created. The agent heads toward the ‘innovation area’. Whether the agent successfully creates these technologies depends on society’s openness to change and a certain degree of chance (random distribution).
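The three deliberation options can be illustrated with the sketch below. It assumes that the three propensities act as relative weights for a single random choice, that value dynamism adds a boost that expires after a fixed number of ticks, and that innovation succeeds with probability `openness_to_change / 100`. All names and rules are hypothetical simplifications of the behaviour described above.

```python
import random
from dataclasses import dataclass

ACTIONS = ("value_dynamism", "value_adaptation", "innovation")

@dataclass
class ValueState:
    importance: float
    temp_boost: float = 0.0
    boost_ticks_left: int = 0

    def effective(self):
        return self.importance + self.temp_boost

    def tick(self):
        """Count down a temporary (dynamism) boost and drop it when it expires."""
        if self.boost_ticks_left > 0:
            self.boost_ticks_left -= 1
            if self.boost_ticks_left == 0:
                self.temp_boost = 0.0

def deliberate(propensities, rng):
    """Pick an action with probability proportional to the society's propensities."""
    weights = [propensities[a] for a in ACTIONS]
    if sum(weights) == 0:
        return None
    return rng.choices(ACTIONS, weights=weights, k=1)[0]

def act(action, value, boost, openness_to_change, rng, duration=10):
    """Apply the chosen action; return True only if an innovation succeeds."""
    if action == "value_dynamism":
        value.temp_boost, value.boost_ticks_left = boost, duration  # temporary increase
    elif action == "value_adaptation":
        value.importance += boost                                   # permanent increase
    elif action == "innovation":
        return rng.random() < openness_to_change / 100
    return False
```

The contrast between the expiring `temp_boost` and the permanent `importance` update is the distinction between value dynamism and value adaptation that the lock-in results later turn on.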

Model specification

This section provides an overview of the input and output parameters for the model. Output parameters are used to trace phenomena of value change.

| Input parameter | Description | Range |
|---|---|---|
| Propensity_value_dynamism | Probability that agents choose value dynamism to act on moral problems | [0-1] |
| Propensity_value_adaptation | Probability that agents choose value adaptation to act on moral problems | [0-1] |
| Propensity_innovation | Probability that agents choose innovation to act on moral problems | [0-1] |
| Openness_to_change | Pace at which values are changed and probability of finding new technologies | [0-100] |
| Value_memory_of_society | Pace at which unaffected values are forgotten | [0-100] |
| max_need_change | Maximum increase or decrease of needs if these change over time | [0.5-4] |

| Output parameter | Variable name |
|---|---|
| Number of moral problems discovered throughout the simulation run through society’s values | ‘number_of_moral_problems_discovered_through_values’ |
| Number of moral problems discovered throughout the simulation run because they exceeded the severity threshold (i.e., they were severe) | ‘number_of_moral_problems_discovered_through_threshold’ |
| Sum of the severity of moral problems encountered throughout the simulation run | ‘total_severity_of_moral_problems’ |
| Average number of existing perceived moral problems throughout the simulation run | ‘average_number_of_perceived_moral_problems’ |
| Average number of existing unperceived moral problems throughout the simulation run | ‘average_number_of_unperceived_moral_problems’ |
| Number of moral problems that emerged throughout the simulation run | ‘number_of_moral_problems_emerged’ |
| Number of moral problems that existed when moral revolutions occurred | ‘count_moral_problems_when_moral_revolution’ |
| Number of values that existed when moral revolutions occurred | ‘count_values_when_moral_revolution’ |
| Average number of moral problems in the model throughout the simulation run | ‘average_number_of_moral_problems’ |
| Average number of values in the model throughout the simulation run | ‘average_number_of_values’ |
| Number of moral revolutions that occurred throughout one simulation run | ‘count_moral_revolutions’ |
| Number of lock-in situations that occurred throughout one simulation run | ‘count_lock_in_situations’ |

Model runs

An important criterion for a successful analysis is that the number of runs is large enough to perform PRIM experiments (see subsection ‘Simulation goal and requirements for model validation’). Using PRIM, we can identify the input parameters (and their ranges) that led to the occurrence of the phenomenon of interest.
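To make the PRIM step concrete, here is a simplified, peeling-only sketch in Python with NumPy. It is a hypothetical illustration, not the EMA Workbench implementation used in this study. It repeatedly trims a small fraction (`alpha`) of runs from the low or high end of one input parameter, choosing the trim that most increases the share of runs of interest inside the box, and stops when no trim helps or the box holds at most `min_support` of all runs. Standard PRIM additionally records the full peeling trajectory and includes a pasting step; both are omitted here.

```python
import numpy as np

def prim_peel(X, y, alpha=0.05, min_support=0.05):
    """Simplified PRIM peeling (no pasting, no trajectory).

    X: (n, d) array of input parameters, one row per simulation run.
    y: boolean array, True for runs of interest (e.g. worst cases).
    Returns per-parameter [low, high] bounds and the boolean mask of runs in the box.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    n, d = X.shape
    inside = np.ones(n, dtype=bool)
    bounds = [[X[:, j].min(), X[:, j].max()] for j in range(d)]

    while inside.sum() > min_support * n:
        best = None  # (share of runs of interest, column, side, new mask, cut value)
        for j in range(d):
            lo_cut, hi_cut = np.quantile(X[inside, j], [alpha, 1 - alpha])
            for side, keep, cut in (("low", X[:, j] >= lo_cut, lo_cut),
                                    ("high", X[:, j] <= hi_cut, hi_cut)):
                candidate = inside & keep
                # Skip peels that remove nothing (ties) or everything
                if candidate.sum() in (0, inside.sum()):
                    continue
                share = y[candidate].mean()
                if best is None or share > best[0]:
                    best = (share, j, side, candidate, cut)
        # Stop when no peel raises the share of runs of interest
        if best is None or best[0] <= y[inside].mean():
            break
        _, j, side, inside, cut = best
        bounds[j][0 if side == "low" else 1] = float(cut)
    return bounds, inside
```

For example, with two uniformly sampled inputs and runs of interest defined as those whose first input exceeds 0.7, the sketch should return a lower bound on the first input close to 0.7, while the second input stays roughly unrestricted.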

We use Saltelli’s sampling scheme (Saltelli 2002) to determine the number of model runs, as it offers good coverage of the input space for sensitivity analysis. We set the number of samples \(N\) to 512, both to ensure a sufficiently large number of runs and because this sampling scheme recommends a sample number that is a power of 2. The number of runs is given by \(N \times (2D + 2)\), where \(D\) is the number of input parameters. With the six input parameters listed above (\(D = 6\)), this gives \(512 \times 14\), that is, 7,168 runs.
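The run count follows directly from the formula. The helper below is a hypothetical convenience function, not part of any library; the six input names are taken from the input parameter table above. The `second_order=False` branch shows the smaller \(N \times (D + 2)\) design, which suffices when only first-order and total-order indices are needed.

```python
# Input parameters of the model, as listed in the input parameter table
INPUTS = [
    "Propensity_value_dynamism",
    "Propensity_value_adaptation",
    "Propensity_innovation",
    "Openness_to_change",
    "Value_memory_of_society",
    "max_need_change",
]

def saltelli_runs(n_samples: int, n_params: int, second_order: bool = True) -> int:
    """Number of model evaluations produced by Saltelli's sampling scheme."""
    if n_samples & (n_samples - 1):
        # The scheme recommends (but does not strictly require) a power of 2
        print(f"warning: N={n_samples} is not a power of 2")
    per_sample = 2 * n_params + 2 if second_order else n_params + 2
    return n_samples * per_sample

print(saltelli_runs(512, len(INPUTS)))  # 7168
```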

Model validation

We validate the model using the ‘evaludation’ protocol of Augusiak et al. (2014), taking into account the criteria for model validation established in the section ‘Simulation goal and requirements for model validation’. For the data evaluation step, we verified that the ranges of input parameters are sufficient to generate all phenomena of value change that could be observed in the model. For the conceptual model evaluation step, we verified with one of the authors of the pragmatist account of value change proposed by Van De Poel & Kudina (2022) that the model conceptualization was correct. For the implementation verification step, we conducted a series of tests to verify the absence of code errors and bugs in the model. The model output verification step was achieved by verifying that the model could generate phenomena of value change and that some of the mechanisms leading to value change were plausible. The use of EMA (see subsection ‘Simulation goal and requirements for model validation’) is beneficial for the model analysis step, as it makes the sensitivity of model outputs to the input parameters directly visible. The model output corroboration step is part of the Results section, as we aim to evaluate to what extent the model can reproduce phenomena of value change.

Results

Reproducing phenomena of value change

We test the ability of the model to reproduce phenomena of value change by formulating several hypotheses. Table 4 lists our hypotheses. These are derived from the earlier described phenomena of value change as follows:

- The first phenomenon is ‘the inevitability and stability of values.’ As indicated earlier, we expect societies without stable values to be less able to address moral problems (hypothesis 1).

- The second phenomenon is based on the distinction between traditional and modern societies. We define the former as having a lower rate of technological innovation and less propensity for value change. They have more difficulty reacting to external shocks because the pace at which they can innovate or change values in response to these shocks is lower (hypothesis 2). Modern societies are more likely to innovate and have a variety of value spheres, making value change more likely. These societies can innovate and solve problems due to external shocks, but these innovations will likely lead to new moral problems in the long run (hypothesis 3).

- The third phenomenon relates to moral revolutions. Authors like Baker (2019) have suggested that moral dissenters often trigger moral revolutions. Although we have not modeled ‘dissent’, it likely correlates with model variables such as the number and severity of moral problems (hypothesis 4). Similarly, external shocks may lead to unsolved moral problems and hence dissent (hypothesis 5). Moral revolutions are more likely to occur if the problem-solving capacity of a society is lower. Since we conceive values as tools to solve moral problems, this would seem to be the case if a society has few values. A moral revolution may be seen as an event that tries to correct this state of affairs (hypothesis 6).

- The fourth phenomenon is lock-in, which we conceptualize as the continued use of a morally unacceptable technology. Two factors seem to be at play in causing lock-in. The first is that value change may cause a technology that was previously deemed acceptable to suddenly become unacceptable. The second is that a low level of technological innovation makes it more difficult to develop acceptable technologies, leading to more unacceptable technologies and, most likely, more lock-in (hypothesis 7).

| Phenomenon | Hypothesis |
|---|---|
| Phenomenon 1: The inevitability and stability of values | Hypothesis 1: Societies cannot adequately address moral problems without stable values. |
| Phenomenon 2: Societies differ in openness and resistance to change | Hypothesis 2: Societies resistant to change are less resilient in dealing with external shocks. |
| | Hypothesis 3: Societies with high openness to change can solve more moral problems but also create more new moral problems. |
| Phenomenon 3: Moral revolutions | Hypothesis 4: Moral revolutions are more likely if a society is confronted with many moral problems and/or severe moral problems. |
| | Hypothesis 5: Moral revolutions are more likely in cases of external shocks. |
| | Hypothesis 6: Moral revolutions are more likely if a society has few values. |
| Phenomenon 4: Lock-in | Hypothesis 7: Lock-in is caused by a combination of value change and low innovation. |

Phenomenon 1: The inevitability and stability of values

In a functionalist account, the function of values is to help societies address and resolve moral problems.

To test hypothesis 1, we run several simulations for various value memories of a society. A society with a low value memory forgets values quickly (i.e., its values are unstable). This may result in less awareness of potential moral problems caused by technologies. We measure the average severity of moral problems observed in a society and the number of problems discovered in a society.

Figure 3 shows the results of the simulations. A longer value memory indeed helps to address moral problems. This is particularly apparent when the value memory is between 0 and 20. If it is lower than 20, moral problems are mainly discovered through the severity threshold (the problem is so severe that it becomes perceived in a society even if it lacks the values to perceive the problem directly). When the value memory is higher than 20, moral problems are often discovered thanks to the values that society already has. As a result, the total severity of moral problems is lower when the value memory is higher than 20. The results in Figure 3 largely confirm hypothesis 1 that societies cannot adequately address moral problems without stable values.

Phenomenon 2: Societies differ in openness and resistance to change

Societies may differ in degree of openness to change. For example, some societies might be more open to new technologies and new moral considerations in the face of social and technological change. Although this openness can be beneficial to adjust to new situations, technological and moral change can also lead to new moral problems.

To test hypothesis 2, we perform simulations in which the society has various degrees of openness and in which the needs of the society change in a succession of shocks. The variable ‘max_need_change’ determines the size of these shocks. We then measure the cumulative severity of moral problems observed in a society. Figure 4 shows the results of the simulation. The left panel shows a kernel density estimate (KDE) plot (see note 7) of the cumulative severity of moral problems observed in each simulation run; the average cumulative severity is around 600,000. We label simulation runs that end with a cumulative severity higher than 850,000 as worst cases and use PRIM to identify which ranges of the input parameters generated these runs. The right panel reports these results. It shows that the severity of moral problems was highest when need changes were large (max_need_change between 2.3 and 4) and openness to change was low (between 0 and 31). This means that a high severity of moral problems most likely occurs in societies that combine a low openness to change with large external need changes (i.e., large external shocks). This confirms hypothesis 2 that societies resistant to change are less resilient in dealing with external shocks.

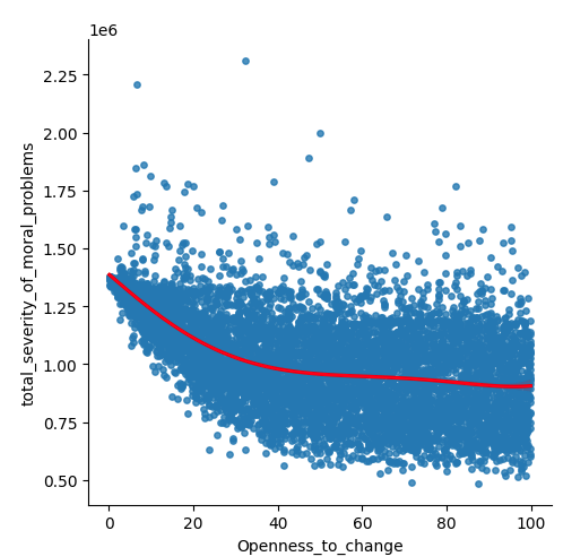

To test hypothesis 3, we run several simulations for various degrees of openness of a society. In each simulation run, we measure the number of moral problems in a society (both perceived and unperceived) and the number of new moral problems that emerged.

Figure 5 presents the simulation results. These seem to confirm hypothesis 3. The number of perceived moral problems that a society has to deal with is lower if the openness to change of a society is high. However, high openness to change also leads to more unperceived moral problems and more new moral problems.

Phenomenon 3: Moral revolutions

Technological change may induce moral revolutions. More specifically, moral revolutions can be triggered by multiple and severe moral problems, external shocks, and too few values (values being what could help solve moral problems).

To test hypothesis 4, we measure the number of moral problems a society faces before a moral revolution and compare it with the average number of moral problems a society faces over the simulation run. Figure 6 shows KDE plots of both measures. The average number of moral problems faced before moral revolutions was 7,242, compared with an average of 5,549 moral problems in society. We can therefore confirm hypothesis 4 that moral revolutions are more likely if a society is confronted with many and/or severe moral problems.

To test hypothesis 5, we perform simulations where the needs in a society change through a series of shocks. We measure the number of moral revolutions in each simulation run. Figure 8 confirms hypothesis 5, namely that moral revolutions occur more frequently when external shocks are stronger (also see Figure 7).

To test hypothesis 6, we compare the number of values in a society when moral revolutions occur to the average number of values in that society over the entire model run (see Figure 8). The figure indicates that the number of values tends to be lower before moral revolutions (often only two), confirming hypothesis 6.

Phenomenon 4: Lock-in

Lock-in may occur when no (better) technological alternatives are available, making it impossible for a society to react to technologies that have become morally unacceptable.

To test hypothesis 7, we perform simulation runs in which societies have different propensities towards innovation, value dynamism, and value adaptation and measure whether lock-ins have occurred. The outcomes of the simulations suggest that the hypothesis cannot be confirmed. Rather, we identify three combinations of propensities that lead to lock-in situations (see Table 5).

| Scenario | Lock-ins | Innovation | Value dynamism | Value adaptation |
|---|---|---|---|---|
| 1 | A few short ones | High | High | Low |
| 2 | Several larger ones | High | Low | High |
| 3 | Many short ones | Low | High | Low |

The mechanism of value dynamism explains the occurrence of the three scenarios. With value dynamism, society changes its values only temporarily, which gets it out of lock-in situations for only a short period. However, if society keeps using the same technologies, the same lock-in situation reappears as soon as the importance of values reverts to its initial state (scenario 3). In contrast, a high innovation rate can help avoid lock-in situations (scenario 1). In scenario 2, there is no mechanism to quickly escape lock-in situations due to the low propensity for value dynamism. Values may adapt, but it might take longer before society finds innovations that align with the new values. Although our simulation results do not confirm hypothesis 7, they do not reject it either. Moreover, our model can simulate the lock-in phenomenon, albeit under somewhat different conditions than expected.

Discussion

We first consider each of the four studied phenomena in detail and then draw more general conclusions about the strengths and weaknesses of the pragmatist account of value change. We also discuss the added value of agent-based modeling in developing theories of value change and propose possible directions for future research.

The inevitability and stability of values

In a pragmatist account of values, values can theoretically disappear from a society if the function they fulfill is no longer required. This suggests that, theoretically, a society without moral problems would also have no values. Yet, in the actual world, values seem to be inevitable but also rather stable, as also suggested by many (other) theories about values (e.g., Schwartz 1992). How, then, can we account for this phenomenon in a pragmatist account of value? We hypothesized that a society without stable values cannot adequately address moral problems if we assume values to be functional in recognizing, addressing, and solving moral problems. Our experiments mostly confirmed this hypothesis, suggesting that values in most societies are more or less ‘inevitable’ and rather stable (otherwise, societies may not persist). This may be one of the strengths of the model and the underlying conceptualization: we can reproduce the phenomenon of the inevitability and stability of values without assuming it in advance.

Of course, other value scholars may argue that there is an intrinsic, conceptual connection between values and human agents (or between values and societies). One can also build a simulation model based on such a conceptual assumption, but then the inevitability and stability of values are assumed in the model. In our case, we did not make this assumption and yet showed that a pragmatist functionalist account of values requires values to be present in a society, and to be rather stable, for that society to address moral problems.

Societies differ in openness and resistance to change

We wanted our model to reproduce differences between societies in terms of openness and resistance to change. In particular, we were interested to see whether these differences would lead to differences in how societies react to external shocks. In the experiments, we operationalized an external shock as a sudden change in needs. In reality, external shocks may indeed cause such sudden changes in needs. For example, a society hit by drought might suddenly have an increased need for food, or a society struck by an earthquake an increased need for housing.

Our experiments show that societies less open to change are, on average, confronted with a higher cumulative severity of moral problems when hit by bigger external shocks. Although more open societies can adapt more quickly, our experiments also suggest that they are not necessarily better able to avoid moral problems in the long run (see Figure 9). Although the figure suggests that the total severity of moral problems decreases as openness to change increases, the effect of openness seems limited, implying that societies open to change have their own difficulties in properly addressing moral problems. One possible explanation is that the higher pace of technological innovation, leading to the use of new technologies, may in turn cause new types of moral problems. Although new values may arise to address these problems, these societies may not always be able to successfully abate moral problems in the long run. This explanation is in line with our hypothesis 3.

This finding suggests that although ‘modern’ societies are more adaptive than ‘traditional’ ones when reacting to external shocks, they are more plagued by moral problems of their own making, particularly due to new technologies. Current human-induced climate change seems to be an example of the latter. Some have interpreted it as a sign that we are entering a new era, sometimes called the Anthropocene (e.g., Crutzen 2006), in which the circumstances in which we live, as well as the problems that confront us, are much more the result of human actions and technological developments than in the past (cf. Kolbert 2021).

Moral revolutions

Our experiments suggest that moral revolutions can occur in our model. They are characterized by relatively sudden and drastic changes in the importance of values. Our simulations also suggest that moral revolutions typically happen when a society faces various moral problems it cannot resolve. This is in line with the literature, which suggests that moral revolutions may occur when an existing paradigm can no longer cope with moral problems (e.g., Baker 2019). Similarly, we found that moral revolutions are more likely in societies confronted with external shocks or those with limited capacity to solve moral problems due to few values.

Lock-in

We also wanted our model to reproduce the phenomenon of lock-in, understood as the continued use of a morally unacceptable technology. Our results show that lock-in occurs in our model under some circumstances, while under others it occurs less or not at all. Although we were thus able to reproduce the lock-in phenomenon, the circumstances under which it occurred were somewhat different from the ones we formulated in hypothesis 7. Our model could not simulate lock-in cases where a better or more acceptable technology is available. This is probably because the current model assumes that society always prefers an acceptable technology to an unacceptable one. However, this assumption does not follow from the underlying pragmatist conception of values (and value change), so it can be adjusted without changing that conception.

The literature often understands lock-in as the consequence of path dependencies; in other words, lock-in occurs due to historical contingencies that affect the further development of a society. An agent-based model is, at least in principle, able to simulate such path dependencies, as the state of its variables at a given time depends on their previous states. In our experiments, we have only investigated more structural factors that make lock-in more likely to occur. Still, it would be interesting to investigate which events are more likely to lead to lock-in and which may result in lock-out.

Comparison with other models and theories of value and value change

Existing (agent-based) models typically model values as decision criteria and/or as individual characteristics (see the theory section). Such models can model (and potentially explain) how values affect the choice of technologies and how these technologies affect the realization of moral values (and the occurrence of moral problems). Typically, however, they do not model how the moral problems created by certain technologies affect values in society and how they may lead to value change. In contrast to existing models, we model the interaction between technology and values full circle, including how the technologies used affect values. This limitation of existing models partly stems from modeling choices that could easily be made differently, for example, by adding other or more mechanisms to existing models. However, there also seems to be an underlying conceptual reason why some existing models are limited in this respect. They are based on Schwartz’s theory of values, which assumes that there are only ten basic individual values, which are universal and timeless and thus cannot change (Schwartz 1992). Only the relative importance of these values can change. This limits the theoretical resources this theory offers for understanding value change. Schwartz’s theory does not seem to help us understand the interaction between values and technologies (see note 8).

If we compare our account and model of value change with other existing theories of value change or moral change, we can make the following observations. One influential idea is that of moral revolutions (Appiah 2010; Baker 2019; Pleasants 2018). Our model, based on a pragmatist notion of value, can account for moral revolutions. By contrast, it is difficult to see how Baker’s theory of moral revolutions can account for phenomena like lock-in or differences between societies in openness to change. Another important theory, developed by Swierstra (2013), concerns technomoral change. This is one of the few other accounts that conceptualizes how technology may affect morality, although its focus is on moral routines rather than values. However, it fails to explain how values affect the choice of technology. In that respect, our pragmatist account seems quite promising for modeling the full interaction between technology and values.

Strengths and weaknesses of the pragmatist conceptualization of values and value change

We selected four phenomena that a model based on a pragmatist conceptualization of values and value change should be able to reproduce. By and large, our model was indeed able to reproduce these phenomena. It could reproduce the inevitability and relative stability of values for societies with a certain minimal value memory. It also reproduced lock-ins and moral revolutions.

This suggests that the pragmatist account is promising for understanding value change and accounting for several related phenomena. Nevertheless, we mention some caveats and limitations. First, it is often difficult to tell whether the ability to reproduce a certain phenomenon in the model is due to the underlying pragmatist conceptualization of value change or to other assumptions and choices. Second, we focused on four phenomena, but there might be other relevant ones. Moreover, we did not compare our results with real-world data, so we cannot draw any conclusions about the empirical adequacy of our model or the underlying account of value change. Third, although we could reproduce the four phenomena, our model is still rather coarse-grained, so it was sometimes unable to reproduce the more precise dynamics that the literature associates with these phenomena (particularly in the case of moral revolutions and lock-in). Fourth, our model assumes rather homogeneous societies. It hardly differentiates between individual agents within a society, ignoring individual differences in values, and does not model interactions between societies with different values.

The added value of (agent-based) modeling

We see our model also as a proof-of-concept of how agent-based models – and more generally, simulation models – may be of added value in developing theories of value change. As we have shown, ABM can be used to explore a certain theory or conceptualization of value change, in this case the pragmatist account of value change proposed in Van De Poel & Kudina (2022). Our exploration here took the form of testing, through experiments, whether the developed model could reproduce four relevant phenomena.

However, it should be emphasized that we learned a lot from developing the model in terms of theoretical exploration. One of the authors of this paper has a stronger background in agent-based modeling, the other in the philosophy of technology, and developing the model required a dialogue between both viewpoints. On the one hand, it required us to become more precise about the underlying conceptualization of value change. On the other hand, it made us aware that sometimes modeling assumptions are less innocent than they may seem. Certainly, when it came to trying to simulate or reproduce the four phenomena of interest, it was clear that it was sometimes difficult to clearly distinguish between modeling assumptions coming from the underlying conceptualization of values and value change and additional assumptions that were needed to build the model. We generally tried to keep the model as simple as possible to stay close to the underlying conceptualization. In doing so, we had to reduce the number of phenomena of value change we initially had in mind by choosing those more closely related conceptually.

Future research

Future research could address several issues. First, it might be interesting to explore whether the model can reproduce other relevant phenomena concerning the interaction between values, technology, and societies. For example, can we reproduce the fact that different societies have different values (value pluralism)? In a pragmatist functionalist account of values, such pluralism may arise because societies are confronted with different moral problems (or have developed different technologies). It would be interesting to see whether our model can reproduce this.

Second, other conceptions of values or value change could be modeled. For example, scholars could model Schwartz’s value theory (Schwartz 1992) and see whether it can account for value change and the other phenomena studied in this paper. Future research could also model Baker’s (2019) account of moral revolutions and compare how well it accounts for the relevant phenomena compared to our model.

Third, it would be interesting to further develop and detail our model. For example, it would be useful to explore the phenomenon of lock-in in the light of the pragmatist account of value change. The current model is still too coarse-grained to simulate the dynamics of lock-in in more detail. Further research could distinguish agents with different roles in the model (e.g., technology developers and users of technology) or allow agents to have different values (which is currently not yet the case). This might be useful for exploring specific phenomena and making the model more realistic and empirically adequate.

Conclusions

We developed an agent-based model to explore the theoretical strengths and weaknesses of the pragmatist conception of value change proposed by Van De Poel & Kudina (2022). We systematically investigated whether this model could reproduce four phenomena that seem (theoretically) relevant for the interaction between technology, society, and values, namely (1) the inevitability and stability of values, (2) how different societies may react differently to external shocks, (3) moral revolutions, and (4) lock-in. By and large, we were able to reproduce these phenomena, although the exact dynamics associated with them were sometimes not completely reproducible, probably because our model is rather coarse-grained.

We draw two main conclusions. The first is that the underlying pragmatist conception of value change is theoretically promising, as it can account for several relevant phenomena. However, there are some significant limitations to this conclusion. First, we did not compare the account with others, which could also reproduce the relevant phenomena. Second, there may be other relevant phenomena than the four studied. Third, it is often difficult to tell whether the ability to reproduce a relevant phenomenon is due to the underlying account or to additional modeling choices. (This applies, mutatis mutandis, also to the inability to reproduce some of the more detailed dynamics of these phenomena.)

The second conclusion is that ABM – and more generally simulation models that allow ‘growing the system’ – are promising for exploring the theoretical strengths and weaknesses of accounts of value change. First, they offer the apparatus to systematically investigate whether certain phenomena can be reproduced (under certain variations of the main parameters). Second, building a simulation model forces us to become more precise about an account of value change and articulate certain assumptions based on the account. In that sense, building a model already has clear added value.

Funding

This publication is part of the project ValueChange that has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 Research and Innovation Programme under grant agreement no. 788321.

Notes

The only exception among the papers mentioned is de Wildt et al. (2021), who use the capability approach.

Van De Poel & Kudina (2022) distinguish five more specific functions of values in moral evaluation: (1) discovering what is morally salient in a situation; (2) normatively evaluating situations; (3) suggesting courses of action; (4) judging whether a problematic situation has been resolved; and (5) moral justification.

Dewey calls such situations indeterminate situations. See also Van De Poel & Kudina (2022).

Following Dewey (1939) and Van De Poel & Kudina (2022), we thus assume that what Dewey calls indeterminate situations may be recognized by agents because they are unsettling, even if agents do not yet have the relevant values to turn the indeterminate situation into a morally problematic one. More specifically, in the model we assume that a situation can be felt as unsettling only if the latent problem reaches a certain threshold.

Our initial list comprised seven phenomena: lock-in, innovativeness, value plurality, moral progress, moral revolutions, resistance to change, and resilience. We excluded value plurality and moral progress because they would require a different model conceptualization. The three phenomena that we initially called innovativeness, resistance to change, and resilience are now combined under the heading “The difference between societies in openness (and resistance) to value change.” The first phenomenon (‘inevitability and stability of values’) was added later, when we realized that our model could lead to the disappearance of values, which seemed undesirable.

https://www.comses.net/codebases/485e19db-7634-42b7-bf80-f5f0db39d46d/releases/1.1.0/

A KDE (kernel density estimate) plot visualizes the distribution of observations in a dataset. It is similar to a histogram, but smoothing is applied to improve visibility.

However, it might be argued that the Schwartz value theory is much better corroborated empirically than the pragmatist account of value that we used.
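The notes describe a KDE plot as a smoothed histogram. As a minimal sketch of what that smoothing does, assuming a Gaussian kernel and the normal-reference rule-of-thumb bandwidth h = 1.06·sd·n^(-1/5) (plotting libraries may choose the kernel and bandwidth differently, and `gaussian_kde` below is our own illustrative function, not any library's API), each observation contributes one Gaussian bump, and the bumps are summed and normalised so that the curve integrates to one:

```python
import math


def gaussian_kde(samples, bandwidth=None):
    """Return a density function estimated from `samples` with a Gaussian
    kernel. If `bandwidth` is None, the normal-reference rule of thumb
    h = 1.06 * sd * n ** (-1/5) is used (an assumption for illustration)."""
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two observations")
    if bandwidth is None:
        mean = sum(samples) / n
        sd = math.sqrt(sum((x - mean) ** 2 for x in samples) / (n - 1))
        bandwidth = 1.06 * sd * n ** (-1 / 5)
    if bandwidth <= 0:
        raise ValueError("bandwidth must be positive (are all samples equal?)")
    norm = 1.0 / (n * bandwidth * math.sqrt(2 * math.pi))

    def density(x):
        # One Gaussian bump per observation, centred on that observation.
        return norm * sum(
            math.exp(-0.5 * ((x - xi) / bandwidth) ** 2) for xi in samples
        )

    return density


if __name__ == "__main__":
    data = [0.1, 0.15, 0.2, 0.8, 0.85, 0.9]  # illustrative values only
    f = gaussian_kde(data)
    for x in (0.0, 0.15, 0.5, 0.85, 1.0):
        print(f"f({x:.2f}) = {f(x):.3f}")
```

A histogram of the same data would instead count observations in fixed bins; the KDE replaces the choice of bins with a choice of bandwidth, and a bandwidth that is too large can smooth away genuine multimodality.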

Appendix

The ODD+D model description can be found here: https://www.jasss.org/27/1/3/odd_d.pdf.

References

APPIAH, A. (2010). The Honor Code: How Moral Revolutions Happen. New York, NY: Norton.

ARTHUR, W. B. (1989). Competing technologies, increasing returns, and lock-in by historical events. The Economic Journal, 99(394), 116–131. [doi:10.2307/2234208]

AUGUSIAK, J., Van Den Brink, P. J., & Grimm, V. (2014). Merging validation and evaluation of ecological models to “Evaludation”: A review of terminology and a practical approach. Ecological Modelling, 280, 117–128. [doi:10.1016/j.ecolmodel.2013.11.009]

BAKER, R. (2019). The Structure of Moral Revolutions: Studies of Changes in the Morality of Abortion, Death, and the Bioethics Revolution, Basic Bioethics. Cambridge, MA: The MIT Press. [doi:10.7551/mitpress/11607.001.0001]

BANKES, S. (1993). Exploratory modeling for policy analysis. Operations Research, 41(3), 435–449. [doi:10.1287/opre.41.3.435]

BERGEN, J. P. (2016). Reversible experiments: Putting geological disposal to the test. Science and Engineering Ethics, 2(22), 3. [doi:10.1007/s11948-015-9697-2]

BIJKER, W., & Law, J. (1992). Shaping Technology/Building Society. Cambridge, MA: The MIT Press.

BOSHUIJZEN-VAN Burken, C., Gore, R., Dignum, F., Royakkers, L., Wozny, P., & LeRon Shults, F. (2020). Agent-based modelling of values: The case of value sensitive design for refugee logistics. Journal of Artificial Societies and Social Simulation, 23(4), 6. [doi:10.18564/jasss.4411]

BRANDAO Cavalcanti, L., Bergsten Mendes, A., & Tsugunobu Yoshida Yoshizaki, H. (2017). Application of multi-attribute value theory to improve cargo delivery planning in disaster aftermath. Mathematical Problems in Engineering, 2017.

BRUIJNIS, M. R. N., Blok, V., Stassen, E. N., & Gremmen, H. G. J. (2015). Moral “lock-in” in responsible innovation: The ethical and social aspects of killing day-old chicks and its alternatives. Journal of Agricultural and Environmental Ethics, 28(5), 939–960. [doi:10.1007/s10806-015-9566-7]

BRYANT, B. P., & Lempert, R. J. (2010). Thinking inside the box: A participatory, computer-assisted approach to scenario discovery. Technological Forecasting and Social Change, 77(1), 34–49. [doi:10.1016/j.techfore.2009.08.002]

BUCHANAN, K., Banks, N., Preston, I., & Russo, R. (2016). The British public’s perception of the UK smart metering initiative: Threats and opportunities. Energy Policy, 91, 87–97. [doi:10.1016/j.enpol.2016.01.003]

CRUTZEN, P. J. (2006). The Anthropocene. In E. Ehlers & T. Krafft (Eds.), Earth System Science in the Anthropocene (pp. 13–18). Berlin, Heidelberg: Springer.

CURRAN, R., Smulders, F., & van der Zwan, F. (2011). Evaluation of airport system of systems from a human stakeholder perspective using a value operations methodology (VOM) assessment framework. 11th AIAA Aviation Technology, Integration, and Operations (ATIO) Conference, Virginia Beach, VA. [doi:10.2514/6.2011-7052]

DANAHER, J. (2021). Axiological futurism: The systematic study of the future of values. Futures, 132, 102780. [doi:10.1016/j.futures.2021.102780]

DAVID, P. A. (1985). Clio and the economics of QWERTY. American Economic Review, 75(2), 332–337.

DAVID, P. A. (2007). Path dependence: A foundational concept for historical social science. Cliometrica, 1(2), 91–114. [doi:10.1007/s11698-006-0005-x]

DEWEY, J. (1939). Theory of Valuation. Chicago, IL: The University of Chicago Press.

DE Wildt, T. E., Boijmans, A. R., Chappin, E. J. L., & Herder, P. M. (2021). Ex ante assessment of the social acceptance of sustainable heating systems: An agent-based modelling approach. Energy Policy, 153, 112265. [doi:10.1016/j.enpol.2021.112265]

DICZFALUSY, E. (2000). The contraceptive revolution. Contraception, 61(1), 3–7.

DURKHEIM, E. M. (1933). The Division of Labor in Society. New York, NY: Macmillan.

EDMONDS, B., Polhill, G. J., & Hales, D. (2019). Predicting social systems - A challenge. Review of Artificial Societies and Social Simulation. Available at: https://rofasss.org/2019/11/04/predicting-social-systems-a-challenge/

ELLUL, J. (1964). The Technological Society. New York, NY: Vintage Books.

EPSTEIN, J. M., & Axtell, R. (1996). Growing Artificial Societies - Social Science From the Bottom Up. Washington, DC: The Brookings Institution.

EUROPEAN Commission. (2012). Responsible research and innovation. Available at: https://op.europa.eu/en/publication-detail/-/publication/bb29bbce-34b9-4da3-b67d-c9f717ce7c58/language-en

FRANTZESKAKI, N., & Loorbach, D. (2010). Towards governing infrasystem transitions: Reinforcing lock-in or facilitating change? Technological Forecasting and Social Change, 77(8), 1292–1301. [doi:10.1016/j.techfore.2010.05.004]

FRIEDMAN, B., & Hendry, D. (2019). Value Sensitive Design: Shaping Technology With Moral Imagination. Cambridge, MA: The MIT Press. [doi:10.7551/mitpress/7585.001.0001]

FRIEDMAN, J., & Fisher, N. (1999). Bump hunting in high-dimensional data. Statistics and Computing, 9, 123–162.

GORE, R., LeRon Shults, F., Wozny, P., Dignum, F. P. M., Boshuijzen-van Burken, C., & Royakkers, L. (2019). A value sensitive ABM of the refugee crisis in the Netherlands. Spring Simulation Conference (SpringSim), Tucson, AZ, USA. [doi:10.23919/springsim.2019.8732867]

HARBERS, M. (2021). Using agent-based simulations to address value tensions in design. Ethics and Information Technology, 23(1), 49–52. [doi:10.1007/s10676-018-9462-8]

HITLIN, S., & Piliavin, J. A. (2004). Values: Reviving a dormant concept. Annual Review of Sociology, 30, 359–393. [doi:10.1146/annurev.soc.30.012703.110640]

JASANOFF, S. (2016). The Ethics of Invention: Technology and the Human Future. New York, NY: W.W. Norton & Company.

KEENEY, R. L. (1996). Value-focused thinking: Identifying decision opportunities and creating alternatives. European Journal of Operational Research, 92(3), 537–549. [doi:10.1016/0377-2217(96)00004-5]

KEENEY, R. L., & von Winterfeldt, D. (2007). Practical value models. In W. Edwards, R. Miles, & D. von Winterfeldt (Eds.), Advances in Decision Analysis: From Foundations to Applications (pp. 232–252). Cambridge: Cambridge University Press. [doi:10.1017/cbo9780511611308.014]

KITCHER, P. (2021). Moral Progress. Oxford: Oxford University Press.

KLENK, M., O’Neill, E., Arora, C., Blunden, C., Eriksen, C., Hopster, J., & Frank, L. (2022). Recent work on moral revolutions. Analysis, 82(2), 354–366. [doi:10.1093/analys/anac017]

KOLBERT, E. (2021). Under a White Sky: The Nature of the Future. New York, NY: Crown.

KREULEN, K., de Bruin, B., Ghorbani, A., Mellema, R., Kammler, C., Dignum, V., & Dignum, F. (2022). How culture influences the management of a pandemic: A simulation of the COVID-19 crisis. Journal of Artificial Societies and Social Simulation, 25(3), 6.

KUHN, T. S. (1962). The Structure of Scientific Revolutions. Chicago, IL: University of Chicago Press.

KWAKKEL, J. H., & Pruyt, E. (2013). Exploratory modeling and analysis, an approach for model-based foresight under deep uncertainty. Technological Forecasting and Social Change, 80(3), 419–431. [doi:10.1016/j.techfore.2012.10.005]

LOWE, D. (2019). The study of moral revolutions as naturalized moral epistemology. Feminist Philosophy Quarterly, 5(2). [doi:10.5206/fpq/2019.2.7284]

MERCUUR, R., Dignum, V., & Jonker, C. M. (2019). The value of values and norms in social simulation. Journal of Artificial Societies and Social Simulation, 22(1), 9. [doi:10.18564/jasss.3929]

MORRIS, I. (2015). Foragers, Farmers, and Fossil Fuels: How Human Values Evolve. Princeton, NJ: Princeton University Press.

MÜLLER, B., Bohn, F., Dreßler, G., Groeneveld, J., Klassert, C., Martin, R., Schlüter, M., Schulze, J., Weise, H., & Schwarz, N. (2013). Describing human decisions in agent-based models - ODD + D, an extension of the ODD protocol. Environmental Modelling & Software, 48, 37–48.

NICKEL, P. J. (2020). Disruptive innovation and moral uncertainty. Nanoethics, 14(3), 259–269. [doi:10.1007/s11569-020-00375-3]

PLEASANTS, N. (2018). The structure of moral revolutions. Social Theory and Practice, 44(4), 567–592. [doi:10.5840/soctheorpract201891747]

RIP, A., & Kemp, R. (1998). Technological change. In S. Rayner & E. L. Malone (Eds.), Human Choice and Climate Change. Columbus, OH: Battelle Press.

ROKEACH, M. (1973). The Nature of Human Values. New York, NY: The Free Press.

SALTELLI, A. (2002). Making best use of model evaluations to compute sensitivity indices. Computer Physics Communications, 145(2), 280–297. [doi:10.1016/s0010-4655(02)00280-1]

SCHWARTZ, S. H. (1992). Universals in the content and structure of values: Theoretical advances and empirical tests in 20 countries. In M. P. Zanna (Ed.), Advances in Experimental Social Psychology (pp. 1–65). Amsterdam: Elsevier. [doi:10.1016/s0065-2601(08)60281-6]

SCHWARTZ, S. H., & Bilsky, W. (1987). Toward a universal psychological structure of human values. Journal of Personality and Social Psychology, 53(3), 550–562. [doi:10.1037/0022-3514.53.3.550]

STEG, L., & De Groot, J. I. M. (2012). Environmental values. In S. Clayton (Ed.), The Oxford Handbook of Environmental and Conservation Psychology (pp. 81–92). Oxford: Oxford University Press. [doi:10.1093/oxfordhb/9780199733026.013.0005]

SWIERSTRA, T. (2013). Nanotechnology and technomoral change. Etica E Politica, 15(1), 200–219.

SWIERSTRA, T., & Rip, A. (2007). Nano-ethics as NEST-ethics: Patterns of moral argumentation about new and emerging science and technology. NanoEthics, 1(1), 3–20. [doi:10.1007/s11569-007-0005-8]

SWIERSTRA, T., Stemerding, D., & Boenink, M. (2009). Exploring techno-moral change. The case of the obesity pill. In P. Sollie & M. Düwell (Eds.), Evaluating New Technologies (pp. 119–138). Berlin Heidelberg: Springer. [doi:10.1007/978-90-481-2229-5_9]

UNRUH, G. C. (2000). Understanding carbon lock-in. Energy Policy, 28(12), 817–830. [doi:10.1016/s0301-4215(00)00070-7]

VAN den Hoven, J., Vermaas, P. E., & Van de Poel, I. (2015). Handbook of Ethics, Values, and Technological Design. Berlin Heidelberg: Springer.

VAN De Poel, I. (2020). Three philosophical perspectives on the relation between technology and society, and how they affect the current debate about artificial intelligence. Human Affairs, 30(4), 499–511. [doi:10.1515/humaff-2020-0042]

VAN De Poel, I., & Kudina, O. (2022). Understanding technology-induced value change: A pragmatist proposal. Philosophy and Technology, 35(2), 1–24. [doi:10.1007/s13347-022-00520-8]

VAN De Poel, I., & Royakkers, L. M. M. (2011). Ethics, Technology and Engineering. Oxford: Wiley-Blackwell.

VAN Der Burg, W. (2003). Dynamic ethics. Journal of Value Inquiry, 37, 13–34.

VOEGTLIN, C., Scherer, A. G., Stahl, G. K., & Hawn, O. (2022). Grand societal challenges and responsible innovation. Journal of Management Studies, 59(1), 1. [doi:10.1111/joms.12785]

WALKER, W. (2000). Entrapment in large technology systems: Institutional commitment and power relations. Research Policy, 29(7), 833–846. [doi:10.1016/s0048-7333(00)00108-6]

WALZER, M. (1983). Spheres of Justice: A Defense of Pluralism and Equality. New York, NY: Basic Books.

WEBER, M. (1948). Religious Rejections of the World and Their Directions. Oxford: Oxford University Press.

WILLIAMS Jr., R. M. (1968). The concept of values. In D. S. Sills (Ed.), International Encyclopedia of the Social Sciences (pp. 283–287). New York, NY: Macmillan Free Press.

WINNER, L. (1980). Do artifacts have politics? Daedalus, 109(1), 121–136.