High-Frequency Financial Market Simulation and Flash Crash Scenarios Analysis: An Agent-Based Modelling Approach


aDepartment of Computing, Imperial College London, United Kingdom; bSimudyne Limited, London, United Kingdom; cHazelcast, Palo Alto, CA

Journal of Artificial Societies and Social Simulation 27 (2) 8

<https://www.jasss.org/27/2/8.html>

DOI: 10.18564/jasss.5403

Received: 01-Dec-2022 Accepted: 22-Mar-2024 Published: 31-Mar-2024

Abstract

This paper describes simulations and analysis of flash crash scenarios in an agent-based modelling framework. We design, implement, and assess a novel high-frequency agent-based financial market simulator that generates realistic millisecond-level financial price time series for the E-Mini S&P 500 futures market. Specifically, a microstructure model of a single security traded on a central limit order book is provided, where different types of traders follow different behavioural rules. The model is calibrated using the machine learning surrogate modelling approach. Statistical test and moment coverage ratio results show that the model has excellent capability of reproducing realistic stylised facts in financial markets. By introducing an institutional trader that mimics the real-world Sell Algorithm on May 6th, 2010, the proposed high-frequency agent-based financial market simulator is used to simulate the Flash Crash that took place on that day. We scrutinise the market dynamics during the simulated flash crash and show that the simulated dynamics are consistent with what happened in historical flash crash scenarios. With the help of Monte Carlo simulations, we discover functional relationships between the amplitude of the simulated 2010 Flash Crash and three conditions: the percentage of volume of the Sell Algorithm, the market maker inventory limit, and the trading frequency of fundamental traders. Similar analyses are carried out for mini flash crash events. An innovative "Spiking Trader" is introduced to the model, replicating real-world scenarios that could precipitate mini flash crash events. We analyse the market dynamics during the course of a typical simulated mini flash crash event and study the conditions affecting its characteristics. The proposed model can be used for testing resiliency and robustness of trading algorithms and providing advice for policymakers.

Introduction

With the advent of electronic financial markets for the exchange of securities, the electronic centralized limit order book has become the standard market mechanism for transaction matching and price discovery. This form of order book offers market participants a more liquid market system with a small bid-ask price spread, increased market depth and decreased transaction times.

Algorithmic trading is commonly defined as the use of computer algorithms to automatically make trading decisions, submit orders, and carry out post-submission order management. In the past decade algorithmic trading has grown rapidly across the world and has become the dominant way securities are traded in financial markets, currently generating more than half of the volume of U.S. equity markets. Faster trading has been enabled by constantly improving computer technology and its application by both traders and exchanges, by the evolution of market microstructure, and by the automation of price quotation and trade execution. The speed of order submission has now become a principal characteristic for distinguishing trading agents. Market participants known as high-frequency traders are capable of trading hundreds of times in a second, using fast algorithms and specialized network connections with exchanges. High-frequency traders are often orders of magnitude faster in order submission than other traders, and even than other trading algorithms.

The rise of algorithmic trading and high-frequency trading has had broad impacts on financial markets, especially on the price discovery process and market price stability. One conspicuous impact is the increasing frequency of "flash crashes" in major financial markets. Flash crashes are large and rapid changes in the price of an asset that do not coincide with changes in the asset's economic fundamental value. They have occurred in markets that are among the largest and most liquid in the world. One representative event is the 2010 Flash Crash, which happened in the U.S. stock market on May 6th, 2010. During this event, one market participant’s algorithm caused a sharp price drop in the E-mini S&P futures market. The crash soon spread to other futures markets and equity markets. The market price fell almost 6% in just several minutes, while the bulk of the losses was recovered nearly as quickly. The 2010 Flash Crash led to turbulent market conditions and caused a huge loss of market value. As for its cause, Kirilenko et al. (2017) show that the key events in the 2010 Flash Crash are clearly related to algorithmic trading.

The 2010 Flash Crash seems to be singular because no subsequent event has rivalled its depth, breadth, and speed of price movement. Nevertheless, flash crashes on a smaller scale happen frequently. These events are termed mini flash crashes (Johnson et al. 2012). According to Johnson et al. (2012), more than 18,000 mini flash crashes were identified in the U.S. equity market between 2006 and 2011. As scaled-down versions of the 2010 Flash Crash, mini flash crashes are abrupt and severe price changes that occur in an extremely short time period (Golub et al. 2012). Mini flash crashes are attracting great research interest because their frequent occurrence could destabilize the financial market and undermine investor confidence (Golub et al. 2012).

The flash crash episodes1, including large flash crash and mini flash crash events, are of significant concern to researchers, practitioners, and policymakers. Financial markets in which price changes are orderly and reflect proper changes in valuation factors are desirable. However, flash crash episodes could potentially disorganise such a desirable market and cause adverse consequences for financial stability if they were to impede investment by undermining investor confidence in the price at which securities could be transacted (Karvik et al. 2018). To prevent flash crash episodes from becoming more frequent and longer-lasting, it is important to understand how such episodes arise. In this paper we explore the dynamics during both large flash crashes and mini flash crashes. The major objectives are understanding what happens during these events and identifying conditions that would affect characteristics of these flash crash scenarios. The main methodology employed here is financial market simulation in agent-based models. The agent-based financial market simulation provides realistic synthetic financial market data and a testbed for exploring dynamics during flash crash episodes and conditions that influence the characteristics of flash crash episodes.

Financial market simulation based on agent-based models is a promising tool for understanding the dynamics of financial markets. With huge potential academic and industrial value, it has gained extensive research attention in recent years. An agent-based financial market simulation consists of a number of distinct agents that follow predetermined rules in a manner analogous to how real-world traders behave. Unlike traditional economic theories, agent-based financial markets make no equilibrium assumption, and traders are no longer assumed to behave rationally. The removal of these assumptions makes agent-based financial market simulation more realistic than traditional equilibrium-based economic and financial theories. These advantages make it possible to explore complex phenomena in modern financial markets, such as flash crash episodes, which is unachievable with traditional equilibrium-based theories.

Various agent-based simulators have been developed in the literature. However, there are still gaps in creating agent-based financial market simulators that are capable of generating realistic synthetic high-frequency market data. Specifically, most existing agent-based financial markets operate at a lower frequency, with daily or one-minute steps. To explore market dynamics that involve high-frequency trading, a higher simulation frequency at the sub-second level is needed. In addition, instead of using full exchange protocols, many simulators make assumptions about the price formation process and use mathematical formulae to approximate the matching engine. This significantly undermines the realism of the simulator. Last but not least, the proper calibration and validation of agent-based financial market simulation is still an open problem.

To sum up, there are two challenges that this paper aims to address:

- C1: To design and implement a high-frequency agent-based financial market simulator with full exchange protocols, and with a proper calibration and validation process to reproduce a realistic artificial financial market.

- C2: Under the proposed agent-based financial market simulator, investigate the market dynamics during flash crashes (including both large flash crashes and mini flash crashes) and explore the conditions that influence the characteristics of flash crashes.

Motivated by the above challenges, we developed a novel high-frequency financial market simulator to bridge the existing gaps. The simulator is then employed to explore the dynamics during flash crashes and the conditions that affect flash crashes. Broadly speaking, our contributions in this paper are threefold:

- It is known that sub-second level simulation is needed to replicate high-frequency dynamics in financial markets, yet it is rarely implemented in previous work. In this work, a high-frequency agent-based financial market simulator that comprises various types of traders is implemented, with each simulation step corresponding to 100 milliseconds. The 100-millisecond step interval is chosen because the average interval between two transaction messages from the exchange is around 100 milliseconds. A shorter step interval is realisable in our simulation but was not chosen because it would greatly increase the computational expense. In addition, our experimental results show that a step interval of 100 milliseconds is sufficient to capture high-frequency dynamics in financial markets. Full exchange protocols (limit order books) are implemented to simulate the order matching process, which behaves in the same way as the matching engine in real-world exchanges. In this way, we provide a microstructure model of a single security traded on a central limit order book in which market participants follow fixed behavioural rules. The model is calibrated using the machine learning surrogate modelling approach. As for model validation, statistical tests and moment coverage ratio results show that the simulation is capable of reproducing realistic stylised facts in financial markets.

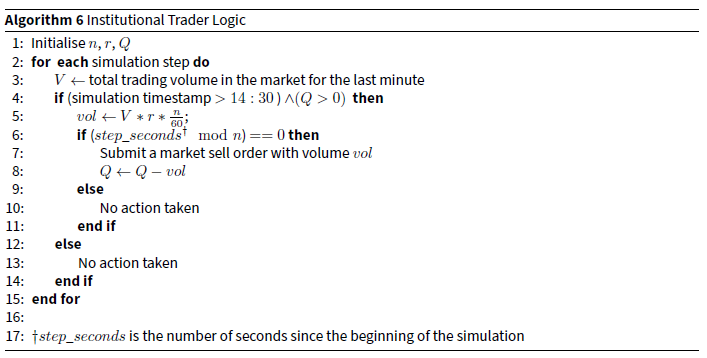

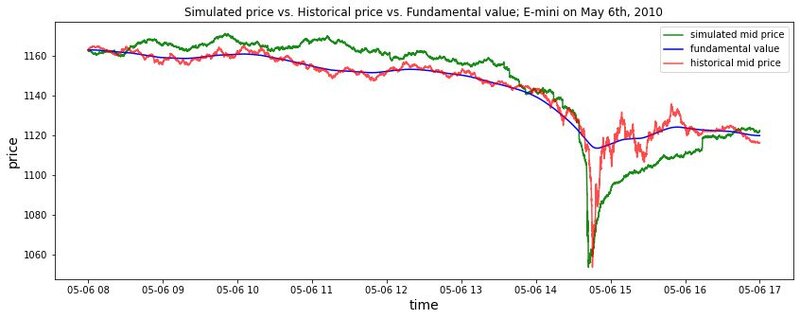

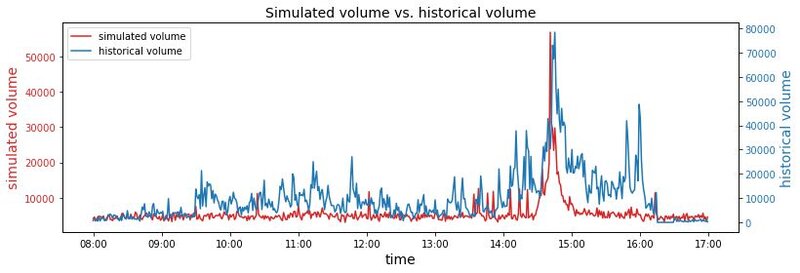

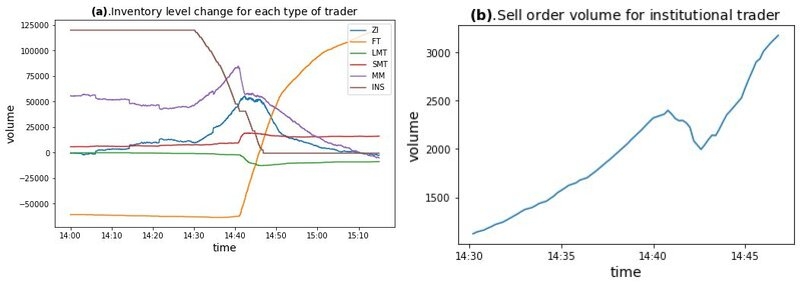

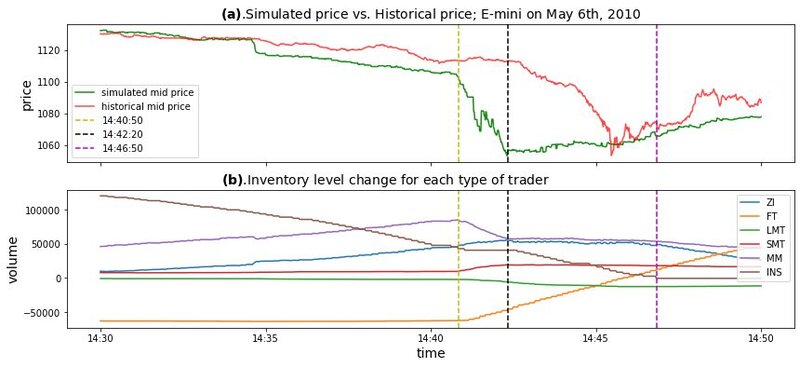

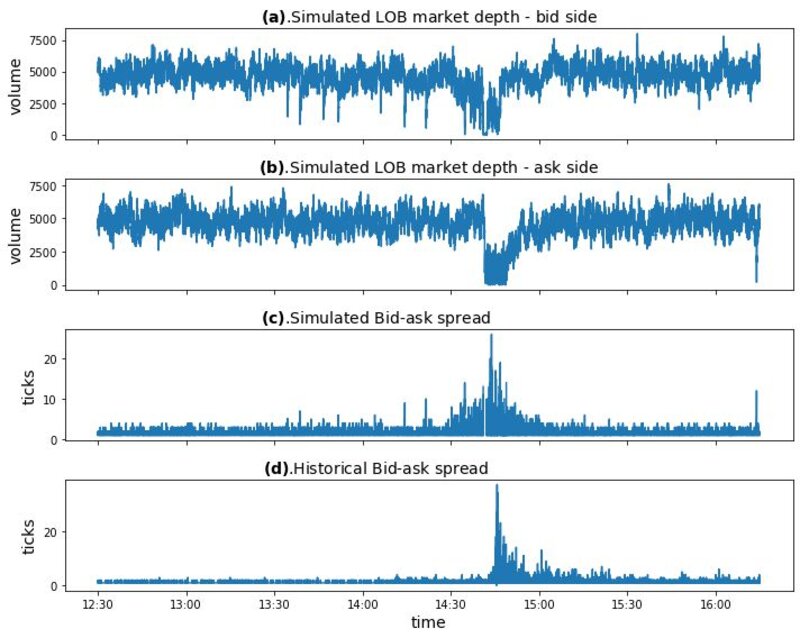

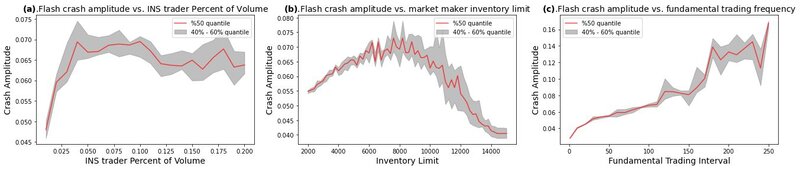

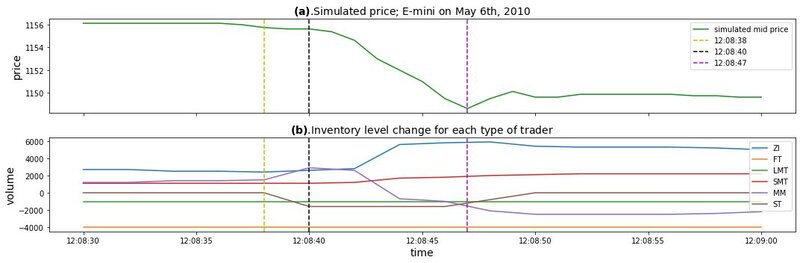

- Under the framework of the proposed high-frequency agent-based financial market simulator, the 2010 Flash Crash is realistically simulated by introducing an institutional trader that mimics the real-world Sell Algorithm2 on May 6th, 2010, which is generally believed to have precipitated the flash crash event. We investigate the market dynamics during the simulated flash crash and show that the simulated dynamics are consistent with what happened in historical flash crash scenarios. We then explore the conditions that could have influenced the characteristics of the 2010 Flash Crash. According to our Monte Carlo simulation, three conditions significantly affect the amplitude of the 2010 Flash Crash: the percentage of volume (POV) of the Sell Algorithm, the market maker inventory limit, and the trading frequency of fundamental traders. In particular, we found that the relationship between the amplitude of the simulated 2010 Flash Crash and the POV of the Sell Algorithm is not monotonic, and neither is the relationship between the amplitude and the market maker inventory limit. For the trading frequency of fundamental traders, the higher the frequency, the smaller the amplitude of the simulated 2010 Flash Crash.

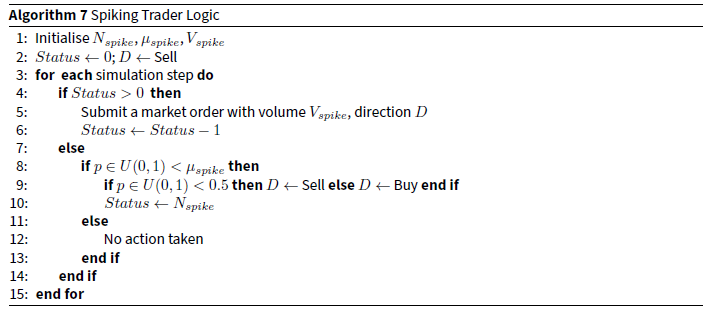

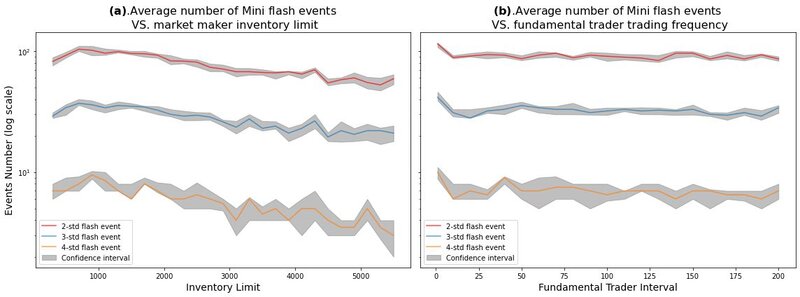

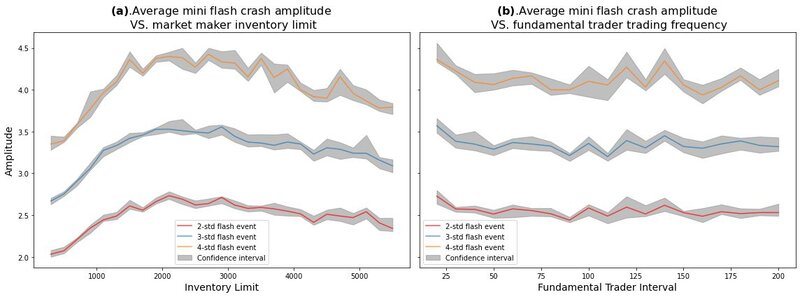

- Similar analysis is carried out for mini flash crash events. An innovative type of trader called "Spiking Trader" is introduced to the agent-based financial market simulator, mimicking real-world price shocks to precipitate more mini flash crash events. Market dynamics for a typical simulated mini flash crash event are analysed. We also explore the conditions that could influence the characteristics of mini flash crash events. Experimental results show that the market maker inventory limit significantly affects both the frequency and amplitude of mini flash crash events. However, the trading frequency of fundamental traders shows no obvious influence on mini flash crash events in our experiments.

The novelty of our approach lies in several aspects. Firstly, the proposed agent-based financial market simulator has a higher frequency than most other simulators in the literature. Our simulation step is at the milliseconds level, which allows for the investigation of high-frequency dynamics in the simulated financial market, while most simulation models in the literature adopt larger simulation steps of 1 second or 1 minute. Secondly, we explore the influence of different market configurations on the amplitude of the 2010 Flash Crash. To the best of our knowledge, there are few similar experiments in the existing literature. Thirdly, an innovative type of trader named "Spiking Trader" is proposed to precipitate more mini flash crash events. Fourthly, the experiments that explore the conditions that influence the frequency and amplitude of mini flash crash events are also a novelty of this work.

The remainder of the article is organized as follows. Section 2 presents general background on the agent-based financial market simulation and an overview of previous research about flash crash events. Section 3 shows the structure and details for the proposed agent-based model, while Section 4 presents the model calibration process and model validation results. Section 5 and Section 6 provide simulation and analysis for the 2010 Flash Crash scenario and mini flash crash scenarios, respectively, in the framework of the proposed agent-based financial market simulation. Section 7 concludes and gives directions for future work.

Background and Related Work

Agent-based financial market simulation

An agent-based model (ABM) is a computational simulation driven by the individual decisions of programmed agents (Todd et al. 2016). ABMs are often used in simulating financial markets. In agent-based simulated financial markets, an agent’s objective is to "digest the large amounts of time series information generated during a market simulation, and convert this into trading decisions" (LeBaron 2001). The model aggregates these trading decisions from all agents to build market snapshots (e.g. limit order book states) for each step and generate transactions. With the advantage of capturing the heterogeneity of agents and diversity of the underlying economic system, ABMs provide a promising alternative to traditional equilibrium-based economic models.

Gode & Sunder (1993) build an agent-based model with only zero-intelligence traders to simulate financial markets. Those zero-intelligence traders are not able to think strategically, or do any advanced learning, or statistical modelling of the financial market. Surprisingly, results show that zero-intelligence traders can trade very effectively in the simulated market. The prices tend to converge to the standard equilibrium price and market efficiency tends to reach a very high level. According to their experimental results, they argue that some stylised facts in financial markets may rely more on institutional design rather than actual agent behaviour. Agent-based models are also proposed to model the "Trend" and "Value" effects in financial markets. Chiarella designed an agent-based model composed of two types of traders: fundamentalists and chartists (Chiarella 1992). With only two types of traders, lots of dynamic regimes that are compatible with empirical evidence can be generated in the simulated artificial financial market. An extension of the Chiarella model is proposed in Majewski et al. (2020). The extended model adds a new type of trader called noise trader and allows the fundamental asset value to have a long term drift. The extended Chiarella model is capable of reproducing more realistic price dynamics. This extended Chiarella model in Majewski et al. (2020) forms the basis of the proposed agent-based financial market simulation in this paper. A more complex agent-based model for financial market simulation is proposed in McGroarty et al. (2019). Five different types of traders are present in the simulated market: market makers, liquidity consumers, momentum traders, mean reversion traders, and noise traders. Their model is capable of replicating most of the existing stylised facts of limit order books, such as autocorrelation of returns, volatility clustering, concave price impact, long memory in order flow, and the presence of extreme price events. 
Those stylised facts have been observed across different asset classes and exchanges in real financial markets. The successful replication of these stylised facts indicates the validity of their agent-based simulation model. It is shown that agent-based financial market simulation is capable of generating artificial financial markets with realistic macro behaviours.

The prevalence of electronic order books and automated trading has permanently changed the way the market works. It is virtually impossible to infer meaningful relationships between market participants using traditional mathematical methods because of the complexity of electronic financial markets. Instead, agent-based financial market simulation has been gradually gaining popularity in the market microstructure literature. An agent-based simulated financial market offers an experimental environment for examining market features and characteristics. It also provides plenty of artificial financial market data for analysis. Hayes et al. (2014) develop an agent-based model for use by researchers, which offers the capability of capturing the organization of exchanges, the heterogeneity of market participants, and the intricacies of the trading process. Agent-based models can also provide regulators with an experimental environment that helps to comprehend complex system outcomes. In other words, they allow for a clearer examination of the relationship between micro-level behaviour and macro outcomes. For example, Darley & Outkin (2007) test the regulatory changes that came with decimalization in the NASDAQ market using agent-based financial market simulation. The agent-based models of the NASDAQ market shed light on how these changes would impact market function.

To summarise, agent-based financial market simulation simplifies complex financial system simulation by including a set of individual agents, a topology and an environment. Different agent-based models in the literature focus on different practical problems in financial markets. In this paper, we focus on agent-based models applied to flash crash analysis. In the following section, we provide a literature review of flash crash episodes.

Flash crash episodes

During the 2010 Flash Crash, over a trillion dollars were wiped off the value of US equity markets in an event that has been largely attributed to the rapid rise of algorithmic trading and high-frequency trading (Kirilenko et al. 2017). The base indices in both the futures and securities market experienced a rapid price fall of more than 5% in just several minutes, after which the bulk of the price drop was recovered nearly as fast as it fell. The staff from CFTC and SEC present a thorough report on what happened during the 2010 Flash Crash event (SEC and CFTC 2010). They identified an automated execution algorithm that sold a large number of contracts as the main catalyst for the flash crash. The Sell Algorithm, which was activated on the E-mini S&P 500 futures market, kept pace with the market aiming at selling around 9% of the previous minute’s trading volume (SEC and CFTC 2010). Even though no negative impact was known previously, this process triggered a cascade of panic selling by market participants that employ high-speed automated trading systems. The consequent "hot-potato" effect, where those market participants rapidly acquired and then liquidated positions among themselves, resulted in rapid and extreme price decline.
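The volume-following rule described above can be illustrated with a minimal sketch. It assumes a pure percentage-of-volume (POV) rule in which each minute's sell quantity is a fixed fraction of the previous minute's traded volume, capped by the quantity still to be sold. The function names, the per-minute granularity of the loop, and the numbers in the usage note are hypothetical and are not taken from the SEC and CFTC report or from the model in this paper.

```python
from typing import Iterable, List


def pov_child_order(prev_minute_volume: int, remaining: int, pov: float = 0.09) -> int:
    """Quantity to sell in the next minute under a pure POV rule (illustrative)."""
    if prev_minute_volume < 0 or remaining < 0:
        raise ValueError("volume and remaining quantity must be non-negative")
    # Target a fixed fraction of the previous minute's volume; price and
    # elapsed time do not enter the decision at all.
    target = round(pov * prev_minute_volume)
    return min(target, remaining)


def run_pov_schedule(minute_volumes: Iterable[int], total: int, pov: float = 0.09) -> List[int]:
    """Apply the POV rule minute by minute until the parent order is filled."""
    remaining = total
    schedule: List[int] = []
    for volume in minute_volumes:
        if remaining == 0:
            break
        qty = pov_child_order(volume, remaining, pov)
        schedule.append(qty)
        remaining -= qty
    return schedule
```

For example, `run_pov_schedule([10000, 20000, 50000], total=3000)` sells more as market volume rises. Because the rule reacts only to volume, a burst of trading among other participants raises the algorithm's own selling rate, which is the feedback channel discussed in the text.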

Flash crash episodes have attracted attention after the 2010 Flash Crash event. Several months after the crash, the staff from regulatory authorities released a report that highlighted the important role of a large seller in initiating the flash crash event (SEC and CFTC 2010). It is reported that although high-frequency traders appear to have exacerbated the magnitude of the crash, they do not actually trigger the flash crash. Though high-frequency traders played a role in creating the so-called "hot-potato" effect, the flash crash would very likely have been avoided without the overly simplistic sell algorithm based on volume alone. There is also a lot of academic research on flash crash episodes. For example, Kirilenko et al. (2017) applied purely empirical approaches to understanding the causes of the 2010 Flash Crash. They use regression analysis on a unique dataset that is labelled with the identities of all market participants. It is demonstrated that in response to the activity of the Sell Algorithm, high-frequency traders caused the "hot-potato" effect that exacerbated the price drop. This is consistent with the SEC and CFTC (2010) report. Brewer et al. (2013) applied a simulation approach to studying consequences of different regulatory interventions that aim to stabilize the limit order book market after a flash crash event. They trigger a flash crash by simulating the submission of an extremely large order. In this way they study the conditions under which the influence of a flash crash could be substantial and the mechanisms that could mitigate the influence. Their simulation results indicate that three mechanisms could potentially mitigate the detrimental effects of flash crashes: introducing minimum testing times, switching to call auction market mechanism and shutting off trading for a period of time.

An important stream of flash crash research is simulating flash crashes in agent-based models, which usually involves investigating how high-frequency traders contribute to the emergence of flash crash events. Paddrik et al. (2012) develop an agent-based model of the E-mini S&P futures applied to flash crash analysis. A general flash crash in price is replicated in their model. However, they only reproduce a rough shape of the flash crash price behaviour, and detailed analyses of trader behaviours and market depth are absent. Karvik et al. (2018) developed an agent-based model to analyse the flash crash episodes in the sterling-dollar forex market. They emphasize the important role of high-frequency traders in the emergence of flash crash episodes. The proposed approach in this paper is partly inspired by their work. Jacob-Leal et al. (2016) develop an agent-based model to study how the interplay between low-frequency traders and high-frequency traders impacts asset price dynamics. Their experimental results indicate that high-frequency trading exacerbates market volatility and plays a fundamental role in the emergence of flash crashes. They also show that flash crashes are associated with two salient characteristics of high-frequency traders, namely their capability of generating high bid-ask spreads and synchronizing on the sell side of the limit order book. The model in the literature most similar to ours is the agent-based model proposed in Vuorenmaa & Wang (2014). They build an agent-based simulation framework that reproduces key characteristics of the 2010 Flash Crash. Consistent with SEC and CFTC (2010), they argue that high-frequency traders play an important role in creating a vicious feedback loop, which is triggered by a large institutional sell order. Their results imply that policy-makers should pay more attention to the number of high-frequency traders in the market, the inventory sizes of these traders, and the regulated tick size. 
Jacob-Leal & Napoletano (2019) test the effects of a set of regulatory policies with regard to high-frequency trading by developing an agent-based model that is able to generate flash crashes. It is shown that there is a trade-off between market stability and resilience when determining policies directed towards high-frequency trading. Their experimental results indicate that possible policies to prevent flash crash events include the imposition of minimum order resting times, circuit breakers, cancellation fees and transaction taxes.

The literature also offers other perspectives on flash crash episodes. Paulin et al. (2019) design and implement a hybrid microscopic and macroscopic agent-based approach to investigate the conditions that give rise to the "electronic contagion"3 of flash crash events. Their results demonstrate that the flash crash contagion between different assets is dependent on portfolio diversification, behaviours of algorithmic traders, and network topology. It is also stressed that regulatory interventions are important during the propagation of flash crash distress. Menkveld & Yueshen (2019) look at the flash crash event from the perspective of cross-arbitrage. They find that the breakdown of cross-arbitrage activities between related markets plays an important role in exacerbating the flash crash event. Kyle & Obižaeva (2020) analyse price impact during flash crash events in their market microstructure invariance model. It is shown that the actual price declines in flash crash events are larger than the predicted price impact. Madhavan (2012) argues that the flash crash episodes are linked directly to the current market structure, mostly the pattern of volume and market fragmentation. He further suggests that a lack of liquidity is the critical issue that requires the greatest policy attention to prevent future flash crash events. Similarly, Borkovec et al. (2010) explicitly attribute the flash crash in ETFs to an extreme deterioration in liquidity. Their results are consistent with the liquidity provision behaviour in financial markets. Paddrik et al. (2017) explore how the levels of information can be used to predict the occurrence of flash crash events. Their findings suggest that some stability indicators derived from limit order book information are capable of signalling a high likelihood of an imminent flash crash event. Golub et al. (2012) analyse mini flash crashes, which are the scaled-down versions of the 2010 Flash Crash. 
It is shown that mini flash crashes also have an adverse impact on market liquidity and are associated with the fleeting liquidity phenomenon.

The studies above provide various analyses of the occurrence of flash crash episodes. However, despite this extensive work, the exact causes of flash crash episodes are still not clear. In this paper, we investigate and analyse flash crash episodes through the lens of agent-based financial market simulation. In this sense, our work is similar to the work in Karvik et al. (2018), Paddrik et al. (2012) and Vuorenmaa & Wang (2014). Nevertheless, we offer a much more extensive and detailed analysis of the simulated flash crash event which, to the best of our knowledge, is the most fine-grained analysis in the current literature. Specifically, we realistically capture the 2010 Flash Crash event in our simulation, and divide the simulated flash crash event into several phases. For each phase, detailed analyses of traders’ behaviours and market dynamics are presented. By examining trader behaviours and market dynamics for each phase, we shed light on the cause of flash crash events. In addition, controlled experiments under different model settings and traders’ behaviours are carried out in the developed agent-based simulation framework, which provide insights into how to prevent detrimental flash crash events. Specifically, the proposed methodology enables us to discover three important conditions that significantly affect the amplitude of the 2010 Flash Crash: the percentage of volume (POV) of the Sell Algorithm, the market maker inventory limit, and the trading frequency of fundamental traders. The basis of our agent-based model is the extended Chiarella model in Majewski et al. (2020), which comprises fundamental traders, momentum traders, and noise traders. 
We further divide momentum traders into long-term momentum traders and short-term momentum traders, and introduce market makers to the model. The motivation for introducing these types of traders and their interactions will be presented in the next section. It is shown that the proposed model is capable of generating realistic artificial financial time series. Within the framework of the proposed realistic agent-based financial market simulation, special types of agents are introduced to trigger flash crash episodes in the simulated financial market. In this way, simulated flash crash episodes are scrutinized and analysed. Our focus in this paper is to provide a clear and thorough examination of what happens during the whole process of a flash crash, and what conditions could impact the characteristics of a flash crash. This is achieved by developing a realistic high-frequency agent-based financial market simulation model. Specific regulatory interventions and their influences are not our focus here and are left as future work.

Model Structure

This section presents the set-up and components of the proposed agent-based high-frequency financial market simulator.

Model set-up

The proposed model consists of various traders who submit limit orders or market orders to a central limit order book, which functions according to a continuous double auction mechanism. All the orders submitted by these traders in one simulation period, including limit orders and market orders, constitute the aggregated virtual "demand" and "supply" in that period. The aggregated virtual "demand" and "supply" depend on the trading strategies of various types of market participants. The market participants are assumed to be heterogeneous in their trading decisions. According to the Extended Chiarella model (Majewski et al. 2020), a popular set of traders in an artificial financial market includes momentum traders, fundamental traders, and noise traders. This composition of traders is capable of capturing the trend and value effects in financial markets. We further extend the model by dividing momentum traders into two groups: short-term momentum traders and long-term momentum traders. In this way, heterogeneity is introduced not only between groups of traders, but also inside a specific group of traders. In addition, in an artificial financial market with full exchange protocols, the market maker is an indispensable component to create realistic limit order book behaviours. To sum up, five types of traders are included in our model: fundamental traders, short-term momentum traders, long-term momentum traders, noise traders, and market makers. Each type of trader is associated with several parameters which control the trading behaviours. Trading heuristics, specific parameters and the parameter calibration process will be presented in subsequent sections.

Price formation process

As mentioned above, we use a continuous double auction mechanism to form the price in our model, a mechanism adopted by most popular electronic exchanges around the world. The key element in a continuous double auction is the limit order book, which is a record of all outstanding limit orders maintained by the exchange. The price dynamics in our proposed model are the same as the price dynamics in a real-world exchange: a limit order book is built to accept all limit orders and market orders. A trade occurs when a market order is entered, or when a limit order is entered whose price crosses the spread between the best bid and the best ask. Such a marketable order transacts against the best opposing orders using price-time priority, removing them from the order book until the incoming order is fully filled or no matching opposing orders remain; any unfilled quantity of a marketable limit order then rests in the book as the new best bid or offer. All other limit orders join the limit order book according to price-time priority. For a complete description of how a continuous double auction works, refer to Smith et al. (2003).

We denote the price of a stock at time \(t\) as \(P_t\). The simulated price in this work refers to the mid-price, which is the mean of the best bid price \(b^0_t\) and the best ask price \(a^0_t\) in the limit order book at time \(t\):

| \[P_t := 0.5 * b^0_t + 0.5 * a^0_t\] | \[(1)\] |
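The matching rule and the mid-price of Equation 1 can be illustrated with a minimal sketch. This is not the simulator used in the paper: the class name `LimitOrderBook`, the heap-based storage, and the choice to discard the unfilled remainder of a market order are all assumptions made for illustration, and order cancellation is omitted.

```python
import heapq
from itertools import count


class LimitOrderBook:
    """Toy continuous double auction with price-time priority (illustrative only)."""

    def __init__(self):
        self._bids = []      # heap of (-price, arrival_seq, [remaining_qty])
        self._asks = []      # heap of (price, arrival_seq, [remaining_qty])
        self._seq = count()  # arrival counter provides time priority

    def best_bid(self):
        return -self._bids[0][0] if self._bids else None

    def best_ask(self):
        return self._asks[0][0] if self._asks else None

    def mid_price(self):
        # Equation 1: mean of best bid and best ask (None if a side is empty).
        if not self._bids or not self._asks:
            return None
        return 0.5 * self.best_bid() + 0.5 * self.best_ask()

    def submit(self, side, qty, price=None):
        """Submit a limit order (price given) or a market order (price=None).

        Returns the list of (trade_price, trade_qty) fills.
        """
        trades = []
        opposing = self._asks if side == "buy" else self._bids
        while qty > 0 and opposing:
            key, _, resting = opposing[0]
            resting_price = key if side == "buy" else -key
            if price is not None:
                crosses = price >= resting_price if side == "buy" else price <= resting_price
                if not crosses:
                    break
            fill = min(qty, resting[0])
            trades.append((resting_price, fill))
            qty -= fill
            resting[0] -= fill
            if resting[0] == 0:
                heapq.heappop(opposing)
        if qty > 0 and price is not None:
            # The unfilled part of a limit order rests in the book.
            own = self._bids if side == "buy" else self._asks
            key = -price if side == "buy" else price
            heapq.heappush(own, (key, next(self._seq), [qty]))
        return trades


book = LimitOrderBook()
book.submit("buy", 100, price=99)
book.submit("sell", 100, price=101)
book.submit("sell", 100, price=101)
mid_before = book.mid_price()
fills = book.submit("buy", 150, price=101)  # marketable limit order
```

Prices are integers here (think of them as tick counts) to avoid floating-point comparisons; the earlier of the two resting sell orders at 101 is filled first, which is the time-priority part of the rule.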

Common trader behaviours

Traders in our simulation model have some behaviours in common, for example submitting and cancelling orders. We can assume that there is a certain type of agent called "Base Agent", and all traders in our model inherit the functionalities of this base agent. Specifically, all traders in the model have common behaviours as follows.

- Each trader has a parameter \(\theta\), which controls the probability of submitting a limit order. The value of \(\theta\) depends on the type of trader and also varies during the simulation, but the behaviour given a \(\theta\) value is common to all types of traders. That is, at each simulation step a random number \(p \sim U(0, 1)\) is drawn; if \(p < \theta\), the trader places a limit order, otherwise no action is taken.4 Note that the specific side of the order (buy or sell) depends on the trader type and market conditions. The value of \(\theta\) could be zero for some traders, which means that the corresponding traders do not submit limit orders.

- Each trader has a parameter \(\mu\). The function of \(\mu\) is identical to that of \(\theta\), except that \(\mu\) controls the probability of sending a market order. Similar to \(\theta\), the value of \(\mu\) differs across types of traders and varies over time. At each simulation step a random number \(p \sim U(0, 1)\) is drawn; if \(p < \mu\), the trader places a market order, otherwise no action is taken. The specific side of the market order also depends on the trader type and market conditions. Similar to \(\theta\), the value of \(\mu\) can also be zero for some types of traders.

- The side of every limit order and market order is determined by the corresponding trader type and market conditions. A market order is submitted directly to the exchange after the side is determined. A limit order additionally requires a price. Given the order side, a buy limit order will have a price lower than the market mid-price at the corresponding timestamp, while a sell limit order will have a price higher than the market mid-price. The distance between the limit order price and the market mid-price follows a distribution whose type is determined by the trader type. Specifically, the price distance for limit orders from market makers is sampled from a uniform distribution with parameters \(0\) and \(p^{MM}_{edge}\), and the price distance for limit orders from other types of traders is sampled from a common log-normal distribution with parameters \(\mu_{\ell}\) and \(\Sigma_{\ell}\). The parameters of these distributions are calibrated to historical price time series stylised facts. The price of a limit order is calculated after the price distance is sampled from the corresponding distribution.

- Each type of trader has a parameter \(\delta\). Regardless of the order price and side, each limit order has a cancellation probability of \(\delta\) at each simulation step. The value of \(\delta\) is dependent on the type of trader who places the order, and is calibrated using historical market data to create realistic limit order book behaviours. In the presented model, all traders share the same value for \(\delta\) except market makers, who have a higher value of \(\delta\). This reflects the fact that orders submitted by market makers tend to have a much higher cancellation / replacement rate.

- In the proposed model, short-term momentum traders, long-term momentum traders and noise traders all have a parameter called \(\rho\), which controls the ratio between the number of market orders and limit orders placed by the same trader. That is, for each trader of these three types, there is a fixed relationship between \(\theta\) and \(\mu\): \(\mu = \theta * \rho\). This relationship enables a realistic ratio between the number of market orders and limit orders in the simulated financial market and the value of \(\rho\) is selected according to historical orders data.

- The volume \(V\) for each order is 100, regardless of limit order or market order.
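The common behaviours listed above can be sketched as a small base class. This is an illustrative reading of the rules rather than the authors' implementation: the class and method names are hypothetical, the log-normal parameters are passed in as plain arguments, and tick rounding is left out.

```python
import random


def market_order_probability(theta, rho):
    """Fixed relationship mu = theta * rho used by momentum and noise traders."""
    return theta * rho


class BaseAgent:
    """Hypothetical base agent holding the shared parameters theta, mu and delta."""

    ORDER_VOLUME = 100  # every order has volume 100

    def __init__(self, theta, mu, delta, rng=None):
        self.theta = theta  # probability of submitting a limit order per step
        self.mu = mu        # probability of submitting a market order per step
        self.delta = delta  # per-step cancellation probability of a resting order
        self.rng = rng if rng is not None else random.Random()

    def wants_limit_order(self):
        # Draw p ~ U(0, 1) and submit a limit order if p < theta.
        return self.rng.random() < self.theta

    def wants_market_order(self):
        # Draw p ~ U(0, 1) and submit a market order if p < mu.
        return self.rng.random() < self.mu

    def should_cancel(self):
        # Applied independently to each resting limit order at each step.
        return self.rng.random() < self.delta

    def limit_price(self, side, mid, mu_l, sigma_l):
        # Log-normal distance from the mid-price: buys below, sells above.
        distance = self.rng.lognormvariate(mu_l, sigma_l)
        return mid - distance if side == "buy" else mid + distance


agent = BaseAgent(theta=0.3, mu=market_order_probability(0.3, 0.5), delta=0.05,
                  rng=random.Random(42))
buy_price = agent.limit_price("buy", mid=1150.0, mu_l=0.0, sigma_l=0.5)
```

Market makers would override `limit_price` with a uniform draw, and trader types whose \(\theta\) or \(\mu\) varies with market conditions would update those attributes before each step.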

Despite the common trader behaviours, each type of trader follows different trading heuristics and has different values for the associated parameters. For example, fundamental traders only submit market orders. Market makers submit limit orders in normal trading time. Only after the inventory limit is hit will market makers submit market orders to reduce their inventory risk. The remaining types of traders submit both limit orders and market orders during trading hours, aiming to maintain a fixed ratio between the number of limit orders and the number of market orders. The remainder of the section describes the trading behaviour heuristics for each type of trader in more detail. Descriptions for all the parameters involved in the proposed model are summarised in Appendix A.

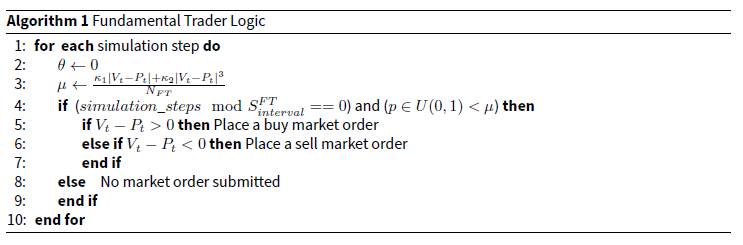

Fundamental Trader (FT)

Fundamental traders make their trading decisions based on the perceived fundamental value of the stock. The fundamental value is denoted as \(V_t\). A fundamentalist tends to buy a stock if the stock is under-priced (\(V_t - P_t > 0\)) and to sell it otherwise. Following the convention in Majewski et al. (2020), in this work we assume the aggregated demand of fundamental traders is a polynomial function of the level of mispricing. Specifically, the aggregated demand of fundamentalists is:

| \[ D_{FT} = \kappa_{1}(V_t - P_t) + \kappa_{2}(V_t - P_t)^3\] | \[(2)\] |
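As a small worked example of Equation 2, the function below evaluates the aggregated fundamental demand. The function name and the numerical values of \(\kappa_1\) and \(\kappa_2\) are hypothetical; how this demand is converted into a market order probability is not reproduced here.

```python
def fundamental_demand(v_t, p_t, kappa1, kappa2):
    """Equation 2: linear plus cubic response to the mispricing V_t - P_t."""
    mispricing = v_t - p_t
    return kappa1 * mispricing + kappa2 * mispricing ** 3


# Under-priced by 2 points: positive demand (buy pressure).
buy_pressure = fundamental_demand(v_t=1152, p_t=1150, kappa1=2, kappa2=1)
# Over-priced by 2 points: demand of the same size with the opposite sign.
sell_pressure = fundamental_demand(v_t=1148, p_t=1150, kappa1=2, kappa2=1)
```

The cubic term makes the response grow faster than linearly, so large deviations from the fundamental value attract disproportionately strong corrective demand.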

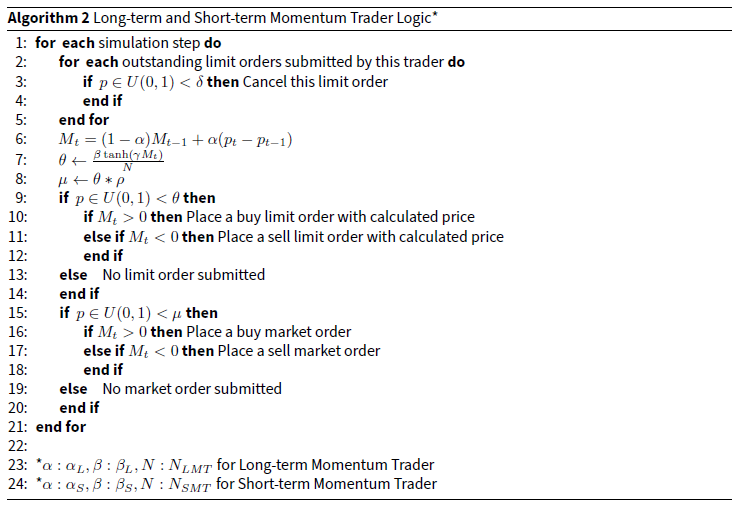

Momentum Trader (MT)

Momentum traders are also called "Chartists". This group of traders buys and sells financial assets in response to recent price trends. The aim is to take advantage of an upward or downward trend in the stock price until the trend starts to fade. Instead of looking at the fundamental value of the stock, momentum traders focus on recent price action and price movement. If the stock price has been recently rising, a long position is established; otherwise, momentum traders will enter a short position. In the proposed model, momentum traders can submit both limit orders and market orders. The ratio between the number of market orders and limit orders is fixed and is denoted as \(\rho\). The price of a limit order is determined as follows. A buy limit order will have a price lower than the market mid-price at the corresponding timestamp, while a sell limit order will have a price higher than the market mid-price. The distance between the limit order price and the market mid-price follows a log-normal distribution with parameters \(\mu_{\ell}\) and \(\Sigma_{\ell}\). The parameters of the log-normal distribution are calibrated to historical price time series stylised facts. The price of the limit order is then calculated from the sampled distance.

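There are many methods for estimating the momentum of stock prices. A common trend signal is the exponentially weighted moving average of past returns with decay rate \(\alpha\). This trend signal is denoted by \(M_t\):

| \[ M_{t} = (1 - \alpha)M_{t-1} + \alpha(P_t - P_{t-1})\] | \[(3)\] |

The demand of momentum traders is a function \(f(M_t)\) of the trend signal that satisfies two conditions:

- \(f(M_t)\) is increasing.

- \(f''(M_t) * M_t < 0\)

The form \(f(M_t) = \beta \tanh(\gamma M_t)\) used below, with \(\beta, \gamma > 0\), satisfies both conditions: it increases with the trend signal and saturates for strong trends.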

According to the value of \(\alpha\), we further divide the group of momentum traders into two sub-groups: long-term momentum traders (small \(\alpha\)) and short-term momentum traders (large \(\alpha\)). The two types of momentum traders also have different values for \(\beta\); however, they share the same \(\gamma\) value. The proposed model includes \(N_{LMT}\) long-term momentum traders and \(N_{SMT}\) short-term momentum traders.

Long-term Momentum Trader (LMT)

Long-term momentum traders are associated with a small value for \(\alpha\). According to Equation 3, a small \(\alpha\) corresponds to slow changes in the momentum signal. Consequently, the momentum signal is smooth and reflects the trend on a longer time scale. In our intra-day simulation, we choose an \(\alpha\) value of 0.01 for long-term momentum traders. This approximately corresponds to an intra-day hourly trend. The group of long-term momentum traders focuses on the relatively long-term price trend when calculating its demand function. For limit orders, the virtual aggregated demand from long-term momentum traders is \(\beta_L \tanh(\gamma M_t^L)\), while for market orders the virtual aggregated demand from long-term momentum traders is \(\rho \beta_L \tanh(\gamma M_t^L)\). As there are \(N_{LMT}\) long-term momentum traders, the \(\theta\) and \(\mu\) of each trader are the corresponding aggregated quantities divided by \(N_{LMT}\). Trading decisions are made according to the common order submission rules. A full description of long-term momentum traders’ logic is shown in Algorithm 2.

Short-term Momentum Trader (SMT)

Compared to long-term momentum traders, short-term momentum traders are associated with a much larger \(\alpha\). Typically, \(\alpha\) for short-term momentum traders is a value close to 1. Following Equation 3, the momentum signal is updated very quickly when \(\alpha\) is large. In this case, \(M_t\) represents the trend on an extremely short time scale, typically only several ticks in our intra-day simulation. The group of short-term momentum traders aims to exploit the price trend on a very short time scale, which mimics the behaviour of some real-world high-frequency traders. There are \(N_{SMT}\) short-term momentum traders in our agent-based financial market simulation. We assign a value of 0.9 to \(\alpha\) of short-term momentum traders.5 The trading heuristics for short-term momentum traders are exactly the same as those for long-term momentum traders, except that \(\alpha_L\) and \(\beta_L\) are replaced by \(\alpha_S\) and \(\beta_S\), respectively. A full description of short-term momentum traders’ logic is shown in Algorithm 2.
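To make the difference between the two momentum horizons concrete, the sketch below applies the update of Equation 3 with \(\alpha = 0.01\) and \(\alpha = 0.9\) to the same short price path and evaluates the \(\beta \tanh(\gamma M_t)\) demand. The function names, the initial value \(M_0 = 0\), the values of \(\beta\) and \(\gamma\), and the use of the absolute demand (capped at 1) as a per-trader probability are assumptions for illustration only.

```python
import math


def update_momentum(m_prev, price, prev_price, alpha):
    """Equation 3: exponentially weighted moving average of price changes."""
    return (1.0 - alpha) * m_prev + alpha * (price - prev_price)


def momentum_path(prices, alpha, m0=0.0):
    """Momentum signal after each price change, starting from m0 (assumed 0)."""
    m, path = m0, []
    for prev_price, price in zip(prices, prices[1:]):
        m = update_momentum(m, price, prev_price, alpha)
        path.append(m)
    return path


def momentum_demand(m_t, beta, gamma):
    """Aggregated limit order demand beta * tanh(gamma * M_t)."""
    return beta * math.tanh(gamma * m_t)


def per_trader_probability(aggregated_demand, n_traders):
    # Assumption: the sign of the demand gives the side, its size the probability.
    return min(1.0, abs(aggregated_demand) / n_traders)


prices = [100.0, 101.0, 102.0, 103.0, 100.0]    # steady rise, then a sharp drop
long_signal = momentum_path(prices, alpha=0.01)  # slow, smooth trend
short_signal = momentum_path(prices, alpha=0.9)  # reacts within a few ticks
short_demand = momentum_demand(short_signal[-1], beta=5.0, gamma=1.0)
```

After the final drop the short-term signal swings strongly negative, while the long-term signal stays close to zero, which is the behaviour the two values of \(\alpha\) are meant to separate.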

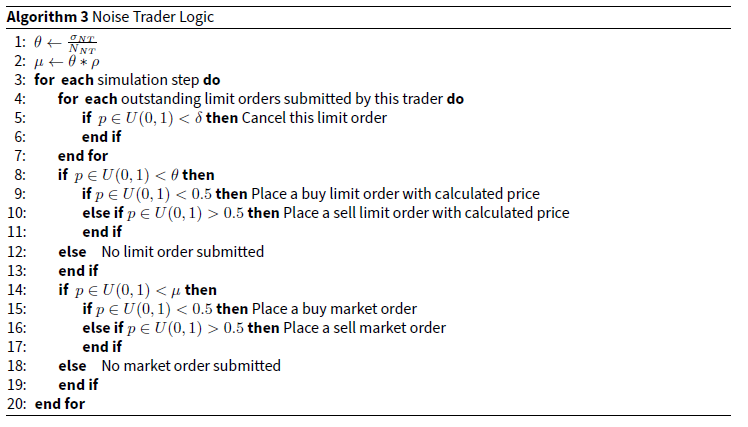

Noise Trader (NT)

Another group of market participants is noise traders. They are designed to capture market activities that are not reflected by trend following and value investing. Accordingly, the cumulative demand of noise traders can be described by a random walk, with buy and sell orders being equally likely at each step. Parameter \(\sigma_{NT}\) controls the aggregated demand level from noise traders. There are \(N_{NT}\) noise traders in the model, each having value \(\frac{\sigma_{NT}}{N_{NT}}\) for \(\theta\). Similar to momentum traders, noise traders are also associated with a parameter \(\rho\), which controls the ratio between the number of market orders and limit orders. Note that unlike other traders, whose \(\theta\) and \(\mu\) vary according to simulated market conditions, \(\theta\) and \(\mu\) for noise traders are determined prior to simulation and remain fixed during the simulation. When noise traders place limit orders, the price determination process is identical to the one used by momentum traders: both sample a distance from an empirically calibrated log-normal distribution. Algorithm 3 gives a description of noise traders’ logic in the simulated financial market.
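The fixed noise trader parameters and the coin-flip choice of side can be sketched as follows. The function names and the numerical values are hypothetical, and drawing the limit order and market order decisions independently within a step is an assumption; Algorithm 3 remains the authoritative description.

```python
import random


def noise_trader_params(sigma_nt, n_nt, rho):
    """Per-trader theta = sigma_NT / N_NT and mu = theta * rho, fixed before the run."""
    theta = sigma_nt / n_nt
    return theta, theta * rho


def noise_trader_step(theta, mu, rng):
    """Return the (order_type, side) actions of one noise trader for one step."""
    actions = []
    if rng.random() < theta:
        actions.append(("limit", rng.choice(["buy", "sell"])))
    if rng.random() < mu:
        actions.append(("market", rng.choice(["buy", "sell"])))
    return actions


theta_nt, mu_nt = noise_trader_params(sigma_nt=10.0, n_nt=100, rho=0.5)
rng = random.Random(7)
actions_over_day = [noise_trader_step(theta_nt, mu_nt, rng) for _ in range(1000)]
```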

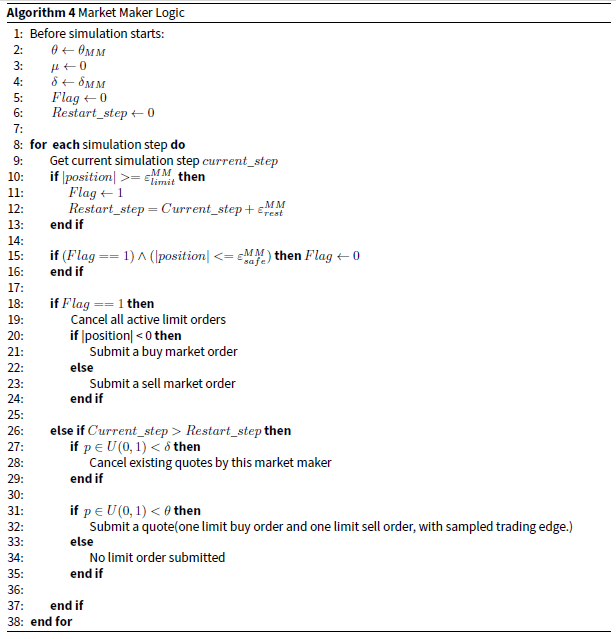

Market Maker (MM)

The market makers are another group of traders in the model. Market makers are introduced to create realistic limit order book dynamics, and their behaviour is more complex than that of the trader types described above. During normal trading time, market makers only submit quotes to the market. A quote includes one buy limit order and one sell limit order. The simplification that market makers only submit limit orders is motivated by Menkveld (2013), which finds that around 80% of market makers’ orders are passive. The price of the sell (buy) order is calculated by adding (subtracting) a distance to (from) the mid-price at the corresponding timestamp, where the distance is sampled from a uniform distribution. (The price is rounded to the closest multiple of the tick size before being submitted to the exchange.) In alignment with the market making behaviours during the 2010 Flash Crash event (SEC and CFTC 2010), market makers in the proposed model are associated with a position limit. Specifically, once the inventory of a market maker reaches the position limit, the market maker stops quoting and actively submits market orders to reduce the inventory level. This continues until the inventory falls to a certain safe level, which is also a parameter of the model. At this stage, the market maker suspends trading for a certain time period. This resembles real-world situations in which market makers suspend trading to check their own trading systems and observe market conditions after unusual events. After this time period, the market maker resumes the normal trading heuristics. Table 1 presents the order types that market makers submit under different trading conditions.

| Trading condition | Normal trading | Stressed trading (after inventory limit reached) | Trading suspension |
|---|---|---|---|
| Order type | Limit order | Market order | None |

Since market makers only submit limit orders during normal trading time, the \(\mu\) for each market maker is set to 0. As with the other trader types, \(\delta\) and \(\theta\) control the probability of order cancellation and limit order submission respectively, and their values are calibrated to historical price time series. One difference is that, for market makers, \(\theta\) controls the probability of submitting a quote (a buy and a sell limit order). In addition to \(\delta\), \(\theta\) and \(\mu\), market makers are associated with several extra parameters that correspond to the trading behaviours presented above. As mentioned, the price of the sell (buy) limit order is calculated by adding (subtracting) a distance to (from) the mid-price. This distance is the trading edge of market makers. The trading edge is sampled from a uniform distribution, which controls the spread of the quotes submitted by market makers. Specifically, the uniform distribution from which the price distance is sampled has a minimum value of 0 and a maximum value of \(p^{MM}_{edge}\). \(\varepsilon^{MM}_{limit}\) represents the position limit for each market maker, while \(\varepsilon^{MM}_{safe}\) denotes the safe position level. That is, market makers will actively reduce inventory once \(\varepsilon^{MM}_{limit}\) is reached, until the position is reduced to the \(\varepsilon^{MM}_{safe}\) level. \(\varepsilon^{MM}_{rest}\) represents the length of the trading suspension. Algorithm 4 presents a full description of market makers’ trading heuristics in the proposed model.
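The three trading conditions of Table 1 form a small state machine, sketched below together with the quote pricing rule. All names are hypothetical, inventory is compared in absolute value, the suspension is counted in simulation steps, and the two sides of a quote draw their edge independently; these are assumptions, and Algorithm 4 remains the reference.

```python
import random
from enum import Enum


class MMState(Enum):
    NORMAL = "normal"
    STRESSED = "stressed"
    SUSPENDED = "suspended"


class MarketMakerRegime:
    """Tracks which order type a market maker may submit at each step."""

    ORDER_TYPE = {MMState.NORMAL: "limit", MMState.STRESSED: "market",
                  MMState.SUSPENDED: None}

    def __init__(self, limit, safe, rest_steps):
        self.limit = limit            # position limit
        self.safe = safe              # safe position level
        self.rest_steps = rest_steps  # length of the trading suspension
        self.state = MMState.NORMAL
        self.rest_left = 0

    def update(self, inventory):
        """Advance one step given the signed inventory; return the order type."""
        size = abs(inventory)
        if self.state is MMState.NORMAL and size >= self.limit:
            self.state = MMState.STRESSED
        elif self.state is MMState.STRESSED and size <= self.safe:
            self.state = MMState.SUSPENDED
            self.rest_left = self.rest_steps
        elif self.state is MMState.SUSPENDED:
            self.rest_left -= 1
            if self.rest_left <= 0:
                self.state = MMState.NORMAL
        return self.ORDER_TYPE[self.state]


def quote_prices(mid, p_edge, tick, rng):
    """Bid and ask at a uniform edge from the mid, rounded to the tick grid."""
    bid = mid - rng.uniform(0.0, p_edge)
    ask = mid + rng.uniform(0.0, p_edge)
    return round(bid / tick) * tick, round(ask / tick) * tick


regime = MarketMakerRegime(limit=500, safe=100, rest_steps=2)
order_types = [regime.update(inv) for inv in (0, 500, 300, 100, 100, 100)]
bid, ask = quote_prices(mid=1150.0, p_edge=2.0, tick=0.25, rng=random.Random(3))
```

In the inventory path above the market maker quotes normally, switches to market orders when the limit of 500 is hit, keeps unwinding at 300, suspends for two steps once the safe level of 100 is reached, and then resumes quoting.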

Simulation dynamics

The above are the five types of agents included in the proposed model. A fully functioning limit order book was implemented, as is the case in most electronic financial markets. The simulation is run in pseudo-continuous time. Specifically, each simulation step represents 100 milliseconds of trading time. Each trading day is divided into \(T = 324,000\) steps, corresponding to 9 hours of trading (8:00 to 17:00) at 10 steps per second. The minimum time for execution of transactions is therefore 100 milliseconds, so the simulated financial market represents a high-frequency trading environment. Recall that the fundamental value \(V_t\), which is extracted from the historical price time series, is an exogenous signal that is input to the model.

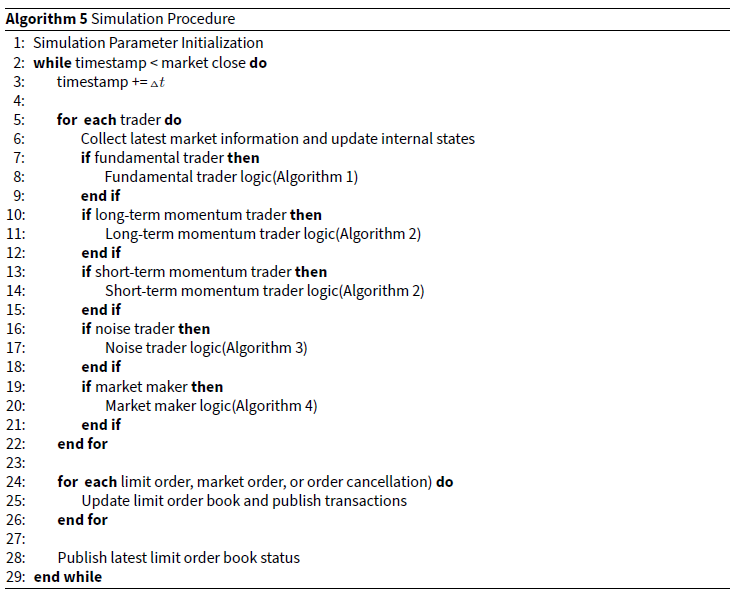

The whole simulation runs as follows. For each step, each trader collects and processes market information. Internal variables associated with each trader are calculated. According to agent type and values of internal variables, actions are taken by the traders. These actions include limit order submission, market order submission, and order cancellation. The programmed matching engine matches these orders and updates the state of the limit order book. Finally, transactions and limit order book status are published to all traders. The whole simulation procedure is shown in Algorithm 5.
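The step ordering described above, together with the 100-millisecond time grid, can be sketched as a bare loop. The `snapshot`, `process`, `observe` and `act` method names and the two stub classes are hypothetical placeholders that only make the skeleton runnable; they do not reflect the interfaces of the actual simulator.

```python
from datetime import datetime, timedelta

STEP_MS = 100                                   # one simulation step in milliseconds
STEPS_PER_DAY = 9 * 60 * 60 * 1000 // STEP_MS   # 9 trading hours


def step_to_clock(step, open_time=datetime(2010, 5, 6, 8, 0)):
    """Map a step index to wall-clock time, assuming an 8:00 open."""
    return open_time + timedelta(milliseconds=step * STEP_MS)


def run_simulation(agents, exchange, n_steps):
    for step in range(n_steps):
        snapshot = exchange.snapshot()   # published trades and book state
        orders = []
        for agent in agents:
            agent.observe(snapshot)      # update internal variables
            orders.extend(agent.act(step))
        exchange.process(orders)         # match orders and update the book


class CountingExchange:
    """Stub exchange that only counts the orders it receives."""

    def __init__(self):
        self.received = 0

    def snapshot(self):
        return {"orders_so_far": self.received}

    def process(self, orders):
        self.received += len(orders)


class EveryStepAgent:
    """Stub trader that submits one order per step."""

    def observe(self, snapshot):
        self.last_seen = snapshot

    def act(self, step):
        return [("limit", "buy", step)]


exchange = CountingExchange()
run_simulation([EveryStepAgent(), EveryStepAgent()], exchange, n_steps=10)
```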

We suggest that the proposed five types of traders reflect a sufficiently realistic and diverse market environment. According to O’Hara (1995), there are three major market-microstructure trader types: uninformed traders, informed traders and market makers. The noise traders in our model correspond to uninformed traders, while the market makers correspond directly to the market makers in the literature. The remaining three types of traders represent informed traders. Specifically, fundamental traders utilise exogenous information implied by the fundamental value, while the two types of momentum traders exploit endogenous technical indicator information. In addition, some informed traders perceive trading opportunities based only on an analysis of short-horizon returns, while others focus on market information revealed over long return horizons. This is reflected by the division of momentum traders into long-term and short-term momentum traders. Overall, a diverse population of informed and uninformed traders compete with each other in the proposed model, with market makers providing liquidity and ensuring realistic limit order book behaviours. Note that in our model, traders’ strategies are fixed and traders cannot switch their strategy according to market conditions. One may argue that in real-world financial markets, traders usually choose and switch trading strategies according to market conditions. However, our model still captures this type of behaviour, because different types of agents capture different types of real-world trading strategies: a real trader’s strategy is captured by one type of agent under one condition, and by another type of agent under a different condition, should the real trader pick another trading strategy under the new market condition. This is another strength of our approach: with the simplest model and the fewest assumptions, complex financial market situations can be analysed. In conclusion, the proposed model with five types of traders represents a complete range of micro-behaviours of real financial markets.

Fundamental value from Kalman smoother

The only remaining unknown variable is the fundamental value of the stock. The simulation can proceed only if the fundamental value is known and is exogenously input to the model. One difficulty is the non-observability of the fundamental value. According to the economic literature, the fundamental value of a stock equals the expected value of discounted dividends that the company will pay to the shareholders in the future. However, this methodology requires extremely strong assumptions about the future dynamics of the stock dividends. Furthermore, this approach can never reflect the intra-day change of fundamental value, while the consensus fundamental value can indeed vary during the trading day due to the continuous feed of events and news.

In this paper, we propose a new method that applies a Kalman Smoother (Ralaivola & d’Alche-Buc 2005) directly to the stock price time series to recover the hidden fundamental value. In accordance with Majewski et al. (2020), we assume the fundamental value \(V_t\) is a hidden variable of a linear dynamical system. The observations are the actual prices traded in real markets. The specific Kalman Smoothing algorithm used here can be found in Byron et al. (2004). The algorithm is applied to the price time series for each trading day to extract the corresponding fundamental value time series for that day.6
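The idea can be illustrated with the simplest linear dynamical system, a local-level model in which the hidden value follows a random walk and the observed price equals the hidden value plus noise. The sketch below runs a Kalman filter followed by a Rauch-Tung-Striebel backward pass. The random-walk state equation, the function name, and the fixed noise variances `q` and `r` are assumptions for illustration; the state-space specification and parameter estimation used in the paper are not reproduced here.

```python
import numpy as np


def kalman_smooth_local_level(prices, q, r):
    """Smoothed hidden level for V_t = V_{t-1} + w_t, P_t = V_t + e_t.

    q is the assumed variance of w_t and r the assumed variance of e_t.
    """
    y = np.asarray(prices, dtype=float)
    n = len(y)
    x_pred, p_pred = np.empty(n), np.empty(n)
    x_filt, p_filt = np.empty(n), np.empty(n)

    # Forward pass (Kalman filter), initialised at the first observation.
    x_prev, p_prev = y[0], r
    for t in range(n):
        x_pred[t] = x_prev
        p_pred[t] = p_prev + q
        gain = p_pred[t] / (p_pred[t] + r)
        x_filt[t] = x_pred[t] + gain * (y[t] - x_pred[t])
        p_filt[t] = (1.0 - gain) * p_pred[t]
        x_prev, p_prev = x_filt[t], p_filt[t]

    # Backward pass (Rauch-Tung-Striebel smoother).
    x_smooth = x_filt.copy()
    for t in range(n - 2, -1, -1):
        g = p_filt[t] / p_pred[t + 1]
        x_smooth[t] = x_filt[t] + g * (x_smooth[t + 1] - x_pred[t + 1])
    return x_smooth


rng = np.random.default_rng(1)
true_value = 1150.0 + np.cumsum(rng.normal(0.0, 0.1, 500))  # synthetic hidden value
observed = true_value + rng.normal(0.0, 1.0, 500)           # synthetic noisy prices
fundamental = kalman_smooth_local_level(observed, q=0.01, r=1.0)
```

Because the smoother uses both past and future observations of the day, it can only be applied after the fact, which is consistent with the fundamental value being an exogenous input extracted from historical prices.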

Model Calibration and Validation

In this section we present the methodology for calibrating the agent-based financial market. Calibration means finding an optimal set of model parameters to make the model generate the most realistic simulated financial market. Firstly, we describe the real data and the associated stylised facts in financial markets. Next we define the distance between historical and simulated stylised facts, which acts as the loss function in the calibration process. The parameter calibration workflow is presented, followed by detailed validation of the proposed high-frequency financial market simulator.

Calibration target: Data and stylised facts for realistic simulation

In the model calibration process, real financial market data are essential for setting up the calibration target. We collected high-frequency limit order book data for E-mini S&P 500 futures from May 3rd, 2010 to May 6th, 2010.7 The data were purchased from the official CME DataMine platform.8 We select the most liquid contract as the calibration target, which is the contract that expires in June 2010. Our dataset comprises high-frequency updates for 10 levels of the limit order book on both the buy side and the sell side.

Financial price time series data display some interesting statistical characteristics that are commonly called stylised facts. According to Sewell (2011), stylised facts refer to empirical findings that are so consistent (for example, across a wide range of financial instruments and different time periods) that they are accepted as truth. A stylised fact is a simplified presentation of an empirical finding in financial markets. A successful and realistic financial market simulation is capable of reproducing various stylised facts. These stylised facts include fat-tailed distribution of returns, autocorrelation of returns, and volatility clustering. The loss function used in the calibration process is constructed by measuring the distance between historical and simulated stylised facts.

Fat-tailed distribution of returns

The distributions of price returns have been found to be fat-tailed across all timescales. In other words, the return distributions exhibit positive excess kurtosis. Understanding positively kurtotic return distributions is important for risk management since large price movements are much more likely to occur than in commonly assumed normal distributions.

Following the convention in the literature, in this paper we investigate the stylised fact of fat-tailed returns by examining second-level intra-day price returns. Both the millisecond-level historical and simulated mid-price time series are resampled to second-level frequency, and we examine the mid-price return for each second. Specifically, the last price snapshot within a second is taken as the price for that second, and second-level price returns are calculated accordingly. Our experiments show that different time scales have no significant influence on the final results. The main metric used for evaluating the fat-tail characteristic is the Hill Estimator of the tail index (Hill 2010). A lower value of the Hill Estimator implies that the return distribution has a fatter tail. Table 2 reports the Hill Estimator values for all trading days in our dataset.9

| Date | 20100503 | 20100504 | 20100505 | 20100506 |
|---|---|---|---|---|
| Hill Estimator | 0.019 | 0.046 | 0.061 | 0.095 |
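For readers unfamiliar with the Hill Estimator, the sketch below implements its textbook form on absolute returns: the tail index is the reciprocal of the mean log-excess of the \(k\) largest observations over the \((k+1)\)-th largest. The function name and the 5% tail cut-off are assumptions, and the exact variant and cut-off behind the values in Table 2 are not stated in this section, so this code is not expected to reproduce those numbers.

```python
import numpy as np


def hill_tail_index(abs_returns, tail_fraction=0.05):
    """Textbook Hill estimate of the tail index from the largest observations."""
    x = np.asarray(abs_returns, dtype=float)
    x = np.sort(x[x > 0])[::-1]                 # positive values, descending
    k = max(1, int(tail_fraction * len(x)))     # number of tail observations
    if k >= len(x):
        raise ValueError("not enough positive observations for the chosen tail")
    mean_log_excess = np.mean(np.log(x[:k] / x[k]))
    if mean_log_excess == 0.0:
        return float("inf")                     # all tail values tied
    return float(1.0 / mean_log_excess)


rng = np.random.default_rng(0)
# Synthetic Pareto samples with known tail indices 1.5 (fatter) and 3.0 (thinner).
fat_sample = rng.pareto(1.5, size=100_000) + 1.0
thin_sample = rng.pareto(3.0, size=100_000) + 1.0
fat_index = hill_tail_index(fat_sample)
thin_index = hill_tail_index(thin_sample)
```

On the synthetic samples the estimate is lower for the fatter-tailed distribution, matching the interpretation that a lower tail index means a fatter tail.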

Autocorrelation of returns

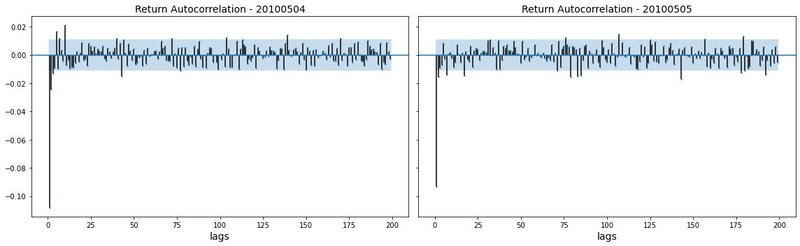

Autocorrelation is a mathematical representation of the degree of similarity between a time series and a lagged version of the same time series. It measures the relationship between a variable’s past values and its current value. Take first-order autocorrelation as an example. A positive first-order autocorrelation of returns indicates that a positive (negative) return in one period is prone to be followed by a positive (negative) return in the subsequent period. Conversely, if the first-order autocorrelation of returns is negative, a positive (negative) return will usually be followed by a negative (positive) return in the next period. It is observed that return series lack significant autocorrelation, except for weak, negative autocorrelation on very short timescales. McGroarty et al. (2019) show that the negative autocorrelation of returns is significantly stronger at a smaller time horizon and disappears at a longer time horizon. Examination of our data also reveals this stylised fact. Figure 1 shows the autocorrelation function of the second-level return time series for E-mini S&P 500 futures on two days. We can see that the autocorrelation is significantly negative for very small lags (1 and 2), and the negative autocorrelation disappears for larger lags. This reflects the "bid-ask bounce" phenomenon in market microstructure.

Volatility clustering

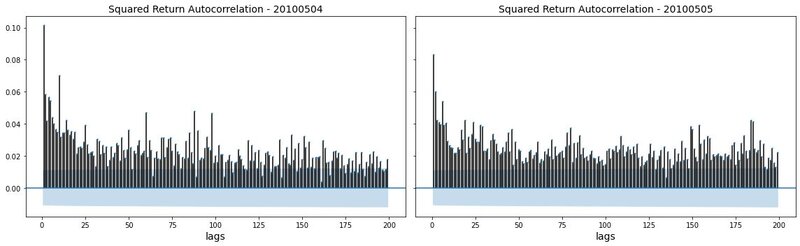

Financial price returns often exhibit the volatility clustering property: large changes in prices tend to be followed by large changes, while small changes in prices tend to be followed by small changes. This property results in the persistence of the amplitudes of price changes (Cont 2007). It is found that the volatility clustering property exists on timescales varying from minutes to days and weeks. Volatility clustering also refers to the long memory of squared price returns (McGroarty et al. 2019). Consequently, volatility clustering manifests itself as a slowly decaying pattern in the autocorrelation of squared returns. Specifically, for short lags, the autocorrelation function of squared returns is significantly positive, and the autocorrelation decays slowly as the lag increases. Figure 2 shows the autocorrelation patterns for squared second-level returns for E-mini S&P 500 futures on two days. The volatility clustering stylised fact is clearly present in our collected E-mini S&P 500 futures price dataset.

Stylised facts: Distance as loss function

The target for agent-based model calibration is to find an optimal set of model parameters to make the model generate a realistic simulated financial market. To solve this optimization problem, it is essential to have a metric that is able to quantify the "realism" of a simulated financial market. First of all, a realistic simulated financial market must exhibit similar characteristics to real financial markets, such as the fat-tailed return distribution and volatility level. In addition, realistic simulated financial data are also required to reproduce other stylised facts such as the autocorrelation patterns in returns and squared returns. Here we design a stylised facts distance to quantify the similarities between simulated and historical financial data. Four metrics are considered in the stylised facts distance: Hill Estimator of the tail index for absolute return distributions, volatility, autocorrelation of returns and autocorrelation of squared returns. For each metric, the differential quantity between simulated value and historical value is calculated. The stylised facts distance is then calculated as the weighted sum of the four differential quantities:

| \[ \begin{aligned} D &= w_1 * \Delta_{Hill} + w_2 * \Delta_{V} + w_3 * \Delta_{ACF^1} + w_4 * \Delta_{ACF^2} \\ \end{aligned}\] | \[(4)\] |

Detailed calculations of the four quantities in the stylised facts distance are presented below.

The Hill Estimator is widely used for inferring power-law behaviour in the tails of empirical distribution functions. Following Franke & Westerhoff (2012), we use the Hill Estimator of the tail index to estimate the degree of fat-tailedness in the distribution of absolute mid-price returns. The absolute return distribution is considered since there is no need to distinguish between extreme positive and negative returns. In our experiments, the Hill Estimators for simulated absolute returns and historical absolute returns are calculated separately. The absolute difference between the two Hill Estimators constitutes the first part of the stylised facts distance:

| \[ \begin{aligned} \Delta_{Hill} &= |Hill_s - Hill_h|\\ \end{aligned}\] | \[(5)\] |

The second part of the stylised facts distance is the absolute volatility difference between simulated returns and historical returns:

| \[ \begin{aligned} \Delta_{V} &= |V_s - V_h| \\ \end{aligned}\] | \[(6)\] |

The third part of the stylised facts distance is the difference between simulated and historical autocorrelations of returns. This part of the stylised facts distance measures the model’s ability to reproduce autocorrelation patterns commonly found in historical returns. Financial price return time series lack significant autocorrelation, except on short time scales, where significantly negative autocorrelations exist. This phenomenon is backed by our empirical data: for very small lags the autocorrelations are negative, while for larger lags the autocorrelations become insignificant. To measure the distance in autocorrelation patterns between simulated data and historical data, we compute the autocorrelation function of returns and calculate the average absolute difference between the autocorrelations of the simulated and historical return time series over various lags:

| \[ \begin{aligned} \Delta_{ACF^1} &= \frac{ \sum\limits_{l \: in \: lags} |ACF_s(l, r) - ACF_h(l, r)| } { |lags|} \\ \end{aligned}\] | \[(7)\] |

The last part of the stylised facts distance is the difference between simulated and historical autocorrelations of squared returns. The replication of autocorrelation patterns in squared returns indicates the model’s capability to reproduce the volatility clustering stylised fact. It is shown empirically that large price changes tend to be followed by other large price changes, known as the volatility clustering phenomenon. Consequently, though there are generally no significant patterns in autocorrelations of returns, the autocorrelations of squared returns are significantly positive, especially for small time lags. Also, as time lag increases, the autocorrelation of squared returns displays a slowly decaying pattern, as shown in Figure 2. Similar to the difference between autocorrelations of returns \(\Delta_{ACF^1}\), the difference between autocorrelations of squared returns is calculated as follows:

| \[ \begin{aligned} \Delta_{ACF^2} &= \frac{ \sum\limits_{l \: in \: lags} |ACF_s(l, r^2) - ACF_h(l, r^2)| } { |lags|} \\ \end{aligned}\] | \[(8)\] |
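Equations 7 and 8 share the same structure and differ only in whether returns or squared returns are used, so both can be computed by one helper. The sketch below uses a plain sample autocorrelation; the function names and the choice of lags 1 to 10 are assumptions, since the set of lags is not specified in this section.

```python
import numpy as np


def acf(x, lag):
    """Sample autocorrelation of x at a positive integer lag."""
    x = np.asarray(x, dtype=float)
    x = x - x.mean()
    return float(np.dot(x[:-lag], x[lag:]) / np.dot(x, x))


def acf_distance(sim_returns, hist_returns, lags, power=1):
    """Mean absolute ACF difference; power=1 gives Eq. 7, power=2 gives Eq. 8."""
    s = np.asarray(sim_returns, dtype=float) ** power
    h = np.asarray(hist_returns, dtype=float) ** power
    return float(np.mean([abs(acf(s, l) - acf(h, l)) for l in lags]))


rng = np.random.default_rng(0)
hist = rng.normal(0.0, 1.0, 5000)   # synthetic stand-in for historical returns
sim = rng.normal(0.0, 1.0, 5000)    # synthetic stand-in for simulated returns
lags = range(1, 11)                 # assumed lag set
delta_acf1 = acf_distance(sim, hist, lags, power=1)
delta_acf2 = acf_distance(sim, hist, lags, power=2)

# A strictly alternating series mimics an extreme bid-ask bounce.
bounce = np.tile([1.0, -1.0], 50)
bounce_acf1 = acf(bounce, 1)
```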

The above four parts, along with the corresponding weights, constitute the stylised facts distance in Equation 4. The next question is how to determine the associated weight for each part of the stylised facts distance. The basic guiding idea is that the higher the sampling variability of a given part in historical data, the larger the difference between simulated value and historical value that can still be deemed insignificant. A natural candidate for each weight is the inverse of the sampling variance for the corresponding part in the stylised facts distance:

| \[ \begin{aligned} w_i &= \frac{1}{\sigma^2_i} \\ \end{aligned}\] | \[(9)\] |

Following Franke & Westerhoff (2012), the sampling variance \(\sigma^2_i\) for each part in the stylised facts distance is estimated by applying the block bootstrap method on the historical return time series. The choice of weighting is inspired by the Method of Moments estimation, where the inverse of the covariance matrix is used. Interested readers are referred to Wooldridge (2001) and Fuhrer et al. (1995).
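The weighting in Equation 9 can be illustrated with a short sketch. It assumes a simple scheme in which the historical series is cut into non-overlapping blocks of fixed length that are drawn with replacement; the exact resampling details, the helper names, and the `moment` callable are assumptions made for illustration rather than the procedure used in the paper.

```python
import random
from statistics import variance


def block_bootstrap(series, block_size, rng):
    """Resample a series by concatenating randomly drawn non-overlapping blocks."""
    blocks = [
        series[i:i + block_size]
        for i in range(0, len(series) - block_size + 1, block_size)
    ]
    sample = []
    for _ in range(len(blocks)):
        sample.extend(rng.choice(blocks))  # draw blocks with replacement
    return sample


def bootstrap_weight(series, moment, block_size, n_samples, seed=0):
    """Return w = 1 / sigma^2, where sigma^2 is the bootstrap variance of `moment`."""
    rng = random.Random(seed)
    values = [
        moment(block_bootstrap(series, block_size, rng))
        for _ in range(n_samples)
    ]
    return 1.0 / variance(values)
```

Here `moment` is any function mapping a return series to a single number, for example one of the four parts of the stylised facts distance. A part with high sampling variability receives a small weight, in line with the guiding idea stated above.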

Note that the stylised facts distance is a function of the model parameters. In other words, a given set of model parameters yields simulated time series from which the corresponding stylised facts distance is calculated. Letting \(\boldsymbol{\theta}\) denote the vector of model parameters to be estimated, Equation 4 can be rewritten as:

| \[ \begin{aligned} D(\boldsymbol{\theta}) &= w_1 * \Delta_{Hill}(\boldsymbol{\theta}) + w_2 * \Delta_{V}(\boldsymbol{\theta}) + w_3 * \Delta_{ACF^1}(\boldsymbol{\theta}) + w_4 * \Delta_{ACF^2}(\boldsymbol{\theta}) \\ \end{aligned}\] | \[(10)\] |

The smaller \(D(\boldsymbol{\theta})\) is, the more realistic the simulation is. Thus \(D(\boldsymbol{\theta})\) serves as the loss function that the calibration method aims to minimize by finding an optimal set of model parameters. Letting \(\boldsymbol{\Theta}\) denote the admissible set for the model parameter vector \(\boldsymbol{\theta}\), the calibration target is to find the optimal model parameter vector \(\boldsymbol{\hat{\theta}}\) that minimizes the stylised facts distance:

| \[ \begin{aligned} \boldsymbol{\hat{\theta}} &= \arg \; \min_{\boldsymbol{\theta} \in \boldsymbol{\Theta}} \; D(\boldsymbol{\theta}) \\ \end{aligned}\] | \[(11)\] |

Calibration workflow and results

The model is calibrated by choosing values for the model parameters so that the dynamics of the simulated price time series match those observed in the empirical price time series. Specifically, the aim of the calibration process is to find the \(\boldsymbol{\hat{\theta}}\) that minimizes the stylised facts distance, as specified in Equation 11. As mentioned, the data used in the calibration are the price time series of the most liquid contract of E-mini S&P 500 futures, from May 3rd, 2010 to May 6th, 2010. The model parameters are calibrated separately for every trading day\(^{10}\). After extensive trial and error, seven model parameters are selected to be calibrated: \(\mu_{\ell}\), \(\sigma_{NT}\), \(\kappa_1\), \(\kappa_2\), \(\beta_L\), \(\beta_S\), \(\theta_{MM}\). The meanings of these seven parameters are presented in the previous section. In terms of the replication of stylised facts, our experiments show that the simulation results are less sensitive to the values of the other parameters, so it is reasonable to keep those parameters fixed. This choice significantly reduces the computational complexity of the calibration process. The fixed parameters, their descriptions, and their specific values are presented in Appendix A and Appendix B.

The focus of this paper is not on specific methods for calibration; instead, we pay more attention to the validation part. Here we briefly present the calibration process. The calibration workflow has two stages in our experiments. The main calibration technique in the first stage is the surrogate modelling approach proposed by Lamperti et al. (2018). Specifically, an XGBoost surrogate model is built to approximate the agent-based model simulation. The surrogate model is capable of intelligently guiding the exploration of the parameter space. Estimated parameter values are those that give rise to a smaller stylised facts distance, indicating that simulated moments match those observed empirically. After the first stage of the calibration, a set of optimal parameters is given by the surrogate model. Even though a global optimum is not guaranteed, our experiments show that the obtained parameter combination yields a small stylised facts distance and is capable of reproducing realistic price dynamics. It is likely that the globally optimal parameters are located close to the parameters generated by the first stage. Taking this into consideration, a numerical grid search over a feasible bounded set of parameters is carried out in the second stage. The feasible set of parameters is centered around the optimal parameters given by the surrogate modelling approach in stage one. The parameter combination that yields the smallest stylised facts distance is selected as the final calibrated model parameter combination. Calibrated model parameter values for each trading day, as well as the resulting stylised facts distance, are presented in Table 3.
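The second stage described above can be sketched as a grid search centred on the stage-one estimate. The sketch below is illustrative only: the surrogate stage is not reproduced, `loss` is a hypothetical stand-in for a routine that runs the simulator and returns \(D(\boldsymbol{\theta})\), and the grid resolution and half-widths are assumed values rather than the ones used in the paper.

```python
from itertools import product


def local_grid_search(loss, centre, half_width, points_per_dim, bounds):
    """Evaluate `loss` on a grid centred on `centre` and return the best point.

    centre, half_width and bounds hold one entry per parameter;
    points_per_dim must be at least 2 (an odd value includes the centre).
    """
    axes = []
    for c, h, (lo, hi) in zip(centre, half_width, bounds):
        step = 2 * h / (points_per_dim - 1)
        # Clip every grid value to the admissible range of the parameter.
        axes.append([min(max(c - h + k * step, lo), hi)
                     for k in range(points_per_dim)])
    best = min(product(*axes), key=loss)
    return best, loss(best)


# Toy usage with a quadratic loss standing in for D(theta).
toy_loss = lambda th: (th[0] - 0.5) ** 2 + (th[1] - 0.2) ** 2
theta_hat, d_hat = local_grid_search(
    toy_loss, centre=(0.4, 0.3), half_width=(0.1, 0.1),
    points_per_dim=5, bounds=[(0.0, 1.0), (0.0, 1.0)],
)
```

The number of loss evaluations grows as `points_per_dim` raised to the number of parameters, which is one reason to restrict the grid to a neighbourhood of the stage-one result instead of the whole admissible set.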

| Date | \(\mu_{\ell}\) | \(\sigma_{NT}\) | \(\kappa_1\) | \(\kappa_2\) | \(\beta_L\) | \(\beta_S\) | \(\theta_{MM}\) | \(D(\boldsymbol{\hat{\theta}})\) |
|---|---|---|---|---|---|---|---|---|
| 20100503 | 0.9093 | 0.7895 | 0.1632 | 0.0235 | 0.0924 | 0.0252 | 0.7805 | 0.2259 |
| 20100504 | 1.2717 | 0.6732 | 0.0933 | 0.3402 | 0.6964 | 0.681 | 0.8998 | 0.2269 |
| 20100505 | 1.7396 | 0.6176 | 0.2844 | 0.2098 | 0.5935 | 0.055 | 0.8291 | 0.2191 |
| 20100506 | 1.0098 | 0.0107 | 0.3697 | 0.1649 | 0.7494 | 0.9877 | 0.3265 | 0.1666 |

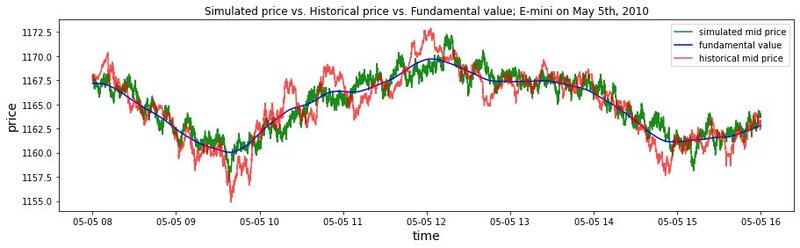

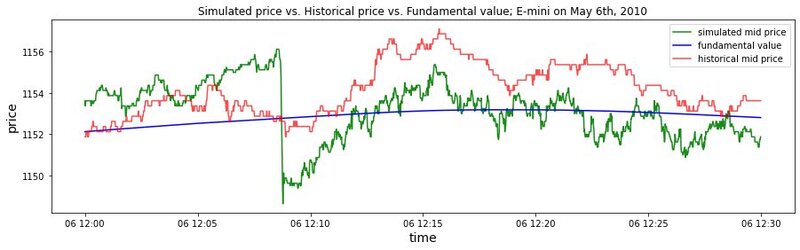

Figure 3 compares the empirical time series of mid-price on May 5th to the simulated mid-price time series. Visual inspection shows that the model produces price time series whose dynamics are very similar to those in empirical data. Nevertheless, a quantitative assessment is required to validate the proposed simulation model, which is presented in the subsequent section.

Model validation

Table 3 shows the stylised facts distance of the calibrated model. However, the value itself does not present an intuitive description of how well the simulated data fit empirical data. A cross-check on the validity of our model topology and calibration strategy is needed. Following Franke & Westerhoff (2012), two metrics are used for assessing the quality of the moment matching and the validity of the model simulation: the moment-specific p-value and the moment coverage ratio.

Statistical hypothesis testing: Moment-specific p-value

The stylised facts distance provides us with numerical values for the realism of the simulation. However, one more elementary question needs to be addressed: whether the data generated by model calibration and simulation would be rejected by the empirical data. The question is answered by calculating a moment-specific p-value as a statistical hypothesis test.

Recall that in previous sections, the weights are calculated by the block bootstrapping method proposed in Franke & Westerhoff (2012). While the inverse of the variance across the block-bootstrapped samples gives the weight for each part of the loss function (Equation 9), the large number of samples obtained in this procedure can also be evaluated with the loss function \(D\) itself. In this way, an entire frequency distribution of values of \(D\) is available, which can subsequently be contrasted with the simulated distribution of values of \(D\). The fundamental idea is that the set of bootstrapped samples of return time series is a proxy for the set of different return time series that the hypothetical real-world data generation process could have produced. Accordingly, if a simulated return time series yields a value of \(D\) within the range of the bootstrapped values of \(D\), this simulated return time series is difficult to distinguish from a real-world series.

Note that a Monte Carlo experiment is undertaken and the simulation is repeated many times to create a distribution of model-generated \(D\) values. In total, two distributions of \(D\) values are obtained: the bootstrapped distribution and the Monte Carlo simulated distribution. Let \(N\) denote the number of bootstrapped samples. For consistency, we also select \(N\) Monte Carlo samples to create the simulated distribution. Let \(D^b\) and \(D^m(\boldsymbol{\hat{\theta}})\) denote the bootstrapped sample and Monte Carlo sample, respectively\(^{11}\). The distributions of the following two sets of \(D\)-values are then compared:

| \[ \begin{aligned} Bootstrap &: \{D^b\}_{b=1}^{N} \\ Simulated &: \{D^m(\boldsymbol{\hat{\theta}})\}_{m=1}^{N} \end{aligned}\] | \[(12)\] |

The p-value is obtained as follows. A critical value of \(D\) is established from the bootstrapped distribution of values of \(D\). To allow for rare extreme events at a significance level of 5%, the critical value is defined to be the 95th percentile \(D_{0.95}\) of the bootstrapped \(D\). In this way, a simulated return time series is considered inconsistent with the real-world data, and is therefore rejected, if the corresponding \(D\)-value is larger than \(D_{0.95}\). Take the May 3rd trading day as an example. Here \(D_{0.95}=0.2114\) is obtained as the critical value of the bootstrapped distance function. As for the Monte Carlo simulation, the model-generated distribution of \(D\) extends noticeably further to the right. The detailed calculation shows that \(D_{0.95}\) corresponds to the 18.33% quantile of the simulated distribution of values of \(D\); that is, 18.33% of the simulated \(D\)-values do not exceed \(D_{0.95}\). Following the general moment matching convention in Franke & Westerhoff (2012), the model is said to have a p-value of 0.1833 with respect to the estimated parameter vector \(\boldsymbol{\hat{\theta}}\), the historical data for May 3rd, and the specific moments that we have chosen. According to conventional significance criteria, for this trading day the model is not rejected as being obviously inconsistent with the empirical data. Following Franke & Westerhoff (2012), a p-value of 0.1833 is in fact regarded as fairly good performance for an agent-based financial market simulator. The p-values of the calibrated model for all trading days are shown in Table 4.

| Date | 20100503 | 20100504 | 20100505 | 20100506 |
|---|---|---|---|---|
| p-value | 0.1833 | 0.4167 | 0.0833 | 0.6167 |

From Table 4 we can see that for all trading days in the collected dataset, the moment-specific p-values are greater than 0.05. Consequently, we cannot reject the null hypothesis which specifies that the simulated return time series belongs to the same distribution as the empirical return time series. This statistical hypothesis testing gives evidence that the calibrated model is capable of generating realistic financial price time series.
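The moment-specific p-value described above reduces to two steps: take the 95th percentile of the bootstrapped \(D\)-values as the critical value, then report the share of simulated \(D\)-values that do not exceed it. The sketch below illustrates this under stated assumptions; the nearest-rank percentile rule and the function names are illustrative choices, and the input values are toy numbers, not the data behind Table 4.

```python
import math


def percentile(values, q):
    """Empirical q-quantile (0 < q <= 1) using the nearest-rank rule."""
    ordered = sorted(values)
    rank = max(1, math.ceil(q * len(ordered)))
    return ordered[rank - 1]


def moment_p_value(bootstrap_d, simulated_d, level=0.95):
    """Return the critical value and the share of simulated D-values below it."""
    critical = percentile(bootstrap_d, level)
    p = sum(d <= critical for d in simulated_d) / len(simulated_d)
    return critical, p


# Toy inputs: 100 bootstrapped distances and 60 simulated distances.
boot = [float(i) for i in range(1, 101)]
sim = [50.0] * 11 + [200.0] * 49
critical, p = moment_p_value(boot, sim)
```

A model is rejected at the 5% level when `p` falls below 0.05, that is, when fewer than 5% of its simulated runs are at least as close to the data as the bootstrapped critical value.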

Moment coverage ratio

The previous evaluation of the model was based on the values of the stylised facts distance function \(D\). While statistical testing enables us to evaluate the validity of the model simulation, the quality of moment matching for each specific moment is still unknown. Another potential issue is that the stylised facts distance function \(D\) is the optimisation target during the calibration process, so an evaluation metric involving the distance \(D\) may be biased by overfitting. To address these problems, the "moment coverage ratio" (MCR) metric is adopted to assess the degree of moment matching for each specific moment. The moment coverage ratio was originally proposed in Franke & Westerhoff (2012). The basis for the moment coverage ratio calculation is the concept of a confidence interval of the empirical moments. Consistent with Franke & Westerhoff (2012), the 95% confidence interval of a moment is considered, which is defined to be the interval with boundaries \(\pm 1.96\) times the standard deviation around the empirical value of this moment. The next step is to determine the standard deviation for each empirical moment. Franke & Westerhoff (2012) apply the delta method to the autocorrelation coefficients to calculate the standard deviation. In this paper, we use a more direct way to obtain the empirical standard deviation, which is based on the block bootstrapping method. Recall that a large number of return time series are obtained by the block bootstrapping method. For each specific moment, a moment value can be calculated from every sampled return series. In total there are \(B\) values for each moment, where \(B\) is the number of bootstrapped samples. The standard deviation of those values is taken to be the standard deviation of the corresponding empirical moment.
One may feel uneasy about the bootstrapping of the autocorrelation functions at longer lags since the method alters the temporal order of the return series. However, the block size in our block bootstrapping method is 1800, which is significantly larger than the longest lag (90) in the autocorrelation functions. Consequently, the impact of bootstrapped block re-ordering on the autocorrelation functions is negligible.

With the standard deviation on hand, the corresponding confidence interval for each specific moment is immediately available. In this way an intuitive criterion for assessing a simulated return series is obtained: if all of its moments are contained in the confidence intervals, the simulated return series cannot be rejected as being incompatible with empirical data. Nonetheless, one single simulation is not sufficient to evaluate a model as a whole due to the sample variability. In addition, it is likely that for one simulated return series some moments are contained in the confidence interval while others are not. It goes without saying that considering multiple simulation runs of the model will provide a more exhaustive assessment of the model’s performance. Specifically, for each simulation run the confidence interval check is repeated. We count the number of Monte Carlo simulation runs in which the single moments are contained in the corresponding confidence intervals. The corresponding percentage numbers out of all Monte Carlo runs are defined as the moment coverage ratio.
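The counting procedure above can be written compactly. The following sketch assumes that the empirical moments, their bootstrapped standard deviations, and the moments of each Monte Carlo run are already available as dictionaries keyed by moment name; these data structures and the moment names in the example are hypothetical.

```python
def moment_coverage_ratio(empirical, std, simulated_runs, z=1.96):
    """Percentage of runs whose moment lies within empirical +/- z * std.

    empirical and std map moment names to values; simulated_runs is a list
    of dictionaries with the same keys, one dictionary per Monte Carlo run.
    """
    ratios = {}
    for name, value in empirical.items():
        lo, hi = value - z * std[name], value + z * std[name]
        hits = sum(lo <= run[name] <= hi for run in simulated_runs)
        ratios[name] = 100.0 * hits / len(simulated_runs)
    return ratios


# Toy example with two moments and four simulation runs.
empirical = {"hill": 3.0, "acf1_lag1": -0.10}
std = {"hill": 0.5, "acf1_lag1": 0.02}
runs = [
    {"hill": 3.2, "acf1_lag1": -0.10},
    {"hill": 2.5, "acf1_lag1": -0.20},
    {"hill": 4.5, "acf1_lag1": -0.05},
    {"hill": 3.9, "acf1_lag1": -0.12},
]
mcr = moment_coverage_ratio(empirical, std, runs)
```

In this toy example three of the four runs fall inside the interval for the first moment and two of the four for the second, so the ratios are 75% and 50%. Reporting the ratio per moment, instead of one pass or fail verdict per run, shows which stylised facts the model reproduces reliably and which it does not.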

Since the model is calibrated separately for each trading day, a Monte Carlo simulation is run for each trading day and the corresponding moment coverage ratios are calculated to evaluate the calibrated model. Table 5 presents the resulting moment coverage ratios. Except for the volatility moment on May 3rd and the 90-lag squared return autocorrelation moment on May 4th, all moment coverage ratios are higher than 50%. According to the analysis in Franke & Westerhoff (2012), a moment coverage ratio above 50% represents very good performance of the model. In addition, almost half of the moment coverage ratios are higher than 90%, which indicates that our calibrated model has an excellent ability to reproduce realistic stylised facts. Overall, with respect to the selected moments, the calibrated model matches the empirical moments and reproduces realistic stylised facts well.