Martin Meister, Kay Schröter, Diemo Urbig, Eric Lettkemann, Hans-Dieter Burkhard and Werner Rammert (2007)

Construction and Evaluation of Social Agents in Hybrid Settings: Approach and Experimental Results of the INKA Project

Journal of Artificial Societies and Social Simulation

vol. 10, no. 1

<https://www.jasss.org/10/1/4.html>


Received: 20-Jan-2006 Accepted: 18-Jul-2006 Published: 31-Jan-2007

Abstract



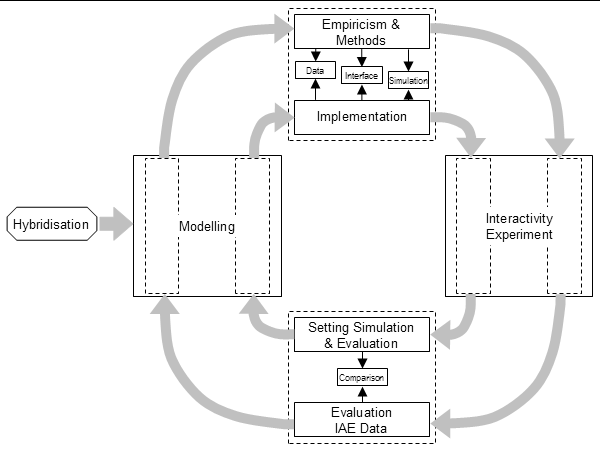

| Figure 1. The socionics development cycle structures the interdisciplinary work in our project |

Table 1: Profiles of the social types

| Social type | Willingness to compromise | Willingness to negotiate | Success affects relationship to partner | Preferred shift types | Preferred forms of capital | Irrelevant forms of capital |
|---|---|---|---|---|---|---|
| Family type | Low | Average | No | Early shift, night shift | Money | Reputation |
| Team type | High | High | Yes | All shift types | Reputation, relationships to colleagues | Money |
| Uncooperative type | Low | Low | No | All shift types | Knowledge acquisition | Positive relationships to colleagues |
| Self-confident type | Low | Average | No | All shift types | Knowledge acquisition | Positive relationships to colleagues |
| Agreement-oriented type | High | High | Yes | All shift types | -- | Money, reputation |
| Pleasure type | Average | Average | No | Late shift | Money | Reputation, knowledge acquisition |

Note: Columns 2-4 specify typical behavioural characteristics; columns 5-7 give typical preferences.
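The note to Table 5 in the Appendix describes how these qualitative profiles become numbers: a preferred form of capital gets an interest value of 80, an irrelevant one 20 and any other 50, while preferred shift types get a default leisure-time interest of 0 and all other shift types 70. The Python sketch below encodes that rule. It assumes that money, knowledge acquisition, relationships to colleagues and reputation correspond to economic, cultural, social and symbolic capital respectively (our reading, which reproduces the rows of Table 5); `PROFILES`, `capital_interests` and `default_lti` are hypothetical names.

```python
# Order of the capital forms c1..c4, as used in Table 5.
CAPITAL_FORMS = ("economic", "cultural", "social", "symbolic")
SHIFT_TYPES = ("early", "late", "night")

# Hypothetical encoding of Table 1:
# (preferred capital forms, irrelevant capital forms, preferred shift types).
# Assumed mapping: money -> economic, knowledge acquisition -> cultural,
# relationships to colleagues -> social, reputation -> symbolic.
PROFILES = {
    "family": ({"economic"}, {"symbolic"}, {"early", "night"}),
    "team": ({"symbolic", "social"}, {"economic"}, set(SHIFT_TYPES)),
    "uncooperative": ({"cultural"}, {"social"}, set(SHIFT_TYPES)),
    "self_confident": ({"cultural"}, {"social"}, set(SHIFT_TYPES)),
    "agreement_oriented": (set(), {"economic", "symbolic"}, set(SHIFT_TYPES)),
    "pleasure": ({"economic"}, {"symbolic", "cultural"}, {"late"}),
}


def capital_interests(preferred, irrelevant):
    """Preferred forms -> 80, irrelevant forms -> 20, all other forms -> 50."""
    return tuple(
        80 if form in preferred else 20 if form in irrelevant else 50
        for form in CAPITAL_FORMS
    )


def default_lti(preferred_shifts):
    """Preferred shift types -> default leisure-time interest 0, all others -> 70."""
    return {shift: 0 if shift in preferred_shifts else 70 for shift in SHIFT_TYPES}


if __name__ == "__main__":
    for name, (preferred, irrelevant, shifts) in PROFILES.items():
        print(name, capital_interests(preferred, irrelevant), default_lti(shifts))
```

Running it prints one line per social type; for example, the pleasure type yields capital interests (80, 20, 50, 20) and default leisure-time interests of 70, 0 and 70 for early, late and night shifts.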


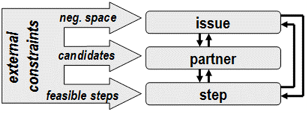

| Figure 2. The C-IPS approach to negotiating agents |

$$u_{\mathit{shift}}(s) = w_{\mathit{LTI}} \cdot \mathit{lti}(s) + w_{\mathit{CA}} \cdot \sum_{i=1}^{4} c_i \cdot \mathit{ca}_i(s) \tag{1}$$

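$$u_{\mathit{partner}}(p) = 0.5 \cdot \bigl(\mathit{exp}_{\mathit{personal}}(p) + \mathit{exp}_{\mathit{typified}}(p)\bigr) \cdot \sum_{(s,s') \in \mathit{AE}} \max\bigl(0, u_{\mathit{exchange}}(s,s')\bigr)^2 \tag{2}$$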

$$u_{\mathit{exchange}}(s,s') = u_{\mathit{shift}}(s) - u_{\mathit{shift}}(s') \tag{3}$$
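Equations (1) to (3) can be sketched in Python as follows, plugging in the Appendix values for the weights and capital interests (Table 5) and for the capital accumulated per shift type (Table 6). This assumes the tabulated values enter Equation (1) exactly as printed, with leisure-time interest on a 0 to 100 scale. The set AE is not spelled out here, so `u_partner` simply takes the utilities of whatever candidate exchanges with partner p the caller supplies; all function and variable names are hypothetical.

```python
# Capital accumulated by each shift type (Table 6): (economic, cultural, social, symbolic)
CAPITAL = {
    "early_weekday": (0, 40, 20, 20),
    "late_weekday": (10, 40, 10, 22),
    "night_weekday": (20, 20, 0, 16),
    "early_weekend": (50, 40, 13, 39),
    "late_weekend": (50, 20, 10, 30),
    "night_weekend": (100, 20, 0, 48),
}

# Capital interests c1..c4 per social type (Table 5)
CAPITAL_INTERESTS = {
    "family": (80, 50, 50, 20),
    "team": (20, 50, 80, 80),
    "uncooperative": (50, 80, 20, 50),
    "self_confident": (50, 80, 20, 50),
    "agreement_oriented": (20, 50, 50, 20),
    "pleasure": (80, 20, 50, 20),
}

W_LTI = -132.8  # weight of leisure-time interests, equal for all social types (Table 5)
W_CA = 1.0      # weight of capital accumulation, equal for all social types (Table 5)


def u_shift(shift, lti, social_type):
    """Equation (1): utility of working `shift` given leisure-time interest lti (0-100)."""
    c = CAPITAL_INTERESTS[social_type]
    ca = CAPITAL[shift]
    return W_LTI * lti + W_CA * sum(ci * cai for ci, cai in zip(c, ca))


def u_exchange(u_s, u_s_prime):
    """Equation (3): u_shift(s) - u_shift(s'), given the two shift utilities."""
    return u_s - u_s_prime


def u_partner(exp_personal, exp_typified, exchange_utilities):
    """Equation (2): mean experience with p times the sum of squared positive exchange utilities."""
    return 0.5 * (exp_personal + exp_typified) * sum(
        max(0.0, u) ** 2 for u in exchange_utilities
    )


if __name__ == "__main__":
    # Family-type agent: weekend night shift (preferred type, default LTI 0)
    # versus weekday late shift (non-preferred type, default LTI 70).
    u_s = u_shift("night_weekend", lti=0, social_type="family")
    u_s2 = u_shift("late_weekday", lti=70, social_type="family")
    gain = u_exchange(u_s, u_s2)
    # The exchange with negative utility contributes nothing to the partner score.
    print(u_s, u_s2, gain, u_partner(0.5, 0.5, [gain, -100.0]))
```

Squaring the positive exchange utilities in Equation (2) makes a partner who offers one very attractive exchange score higher than one who offers several marginal exchanges of the same total utility, while exchanges with negative utility are ignored altogether.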

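$$\mathit{exp}'_{x}(p) = (1 - \mathit{step}_{\mathit{exp}}) \cdot \mathit{exp}_{x}(p) + \mathit{step}_{\mathit{exp}}, \qquad x \in \{\mathit{personal}, \mathit{typified}\} \tag{4}$$

$$\mathit{exp}'_{x}(p) = (1 - \mathit{step}_{\mathit{exp}}) \cdot \mathit{exp}_{x}(p), \qquad x \in \{\mathit{personal}, \mathit{typified}\} \tag{5}$$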
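Equations (4) and (5) are exponential smoothing: each update moves an experience value a fraction step_exp of the way towards 1 or towards 0. The sketch below assumes that Equation (4) is applied after a successful negotiation with p and Equation (5) after an unsuccessful one, and, following the two-layered mechanism described in footnote 5, keeps one value per individual partner (personal) and one per social type (typified). The values step_exp = 0.05 and 0.5 for the initial experience come from Table 7; the function names are hypothetical.

```python
from collections import defaultdict

STEP_EXP = 0.05    # magnitude of experience change (Table 7)
INITIAL_EXP = 0.5  # initial experience value (Table 7)


def update_experience(exp, success, step=STEP_EXP):
    """Eq. (4) after a success, Eq. (5) otherwise; a value in [0, 1] stays in [0, 1]."""
    decayed = (1 - step) * exp
    return decayed + step if success else decayed


def record_outcome(personal, typified, partner, social_type, success):
    """Two-layer update (assumed): one value per partner, one per partner's social type."""
    personal[partner] = update_experience(personal[partner], success)
    typified[social_type] = update_experience(typified[social_type], success)


if __name__ == "__main__":
    personal = defaultdict(lambda: INITIAL_EXP)  # experience with individual partners
    typified = defaultdict(lambda: INITIAL_EXP)  # experience with social types
    # Two hypothetical team-type partners "a" and "b".
    for partner, outcome in [("a", True), ("b", True), ("a", False)]:
        record_outcome(personal, typified, partner, "team", outcome)
    print(dict(personal), dict(typified))
```

Because every negotiation with any team-type agent feeds the typified value, it changes more often than the personal value for a single partner, which is what lets an agent form expectations about partners it has never met.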

Concrete numerical values for all constants, thresholds, weights and functions mentioned in this section are given in the Appendix.


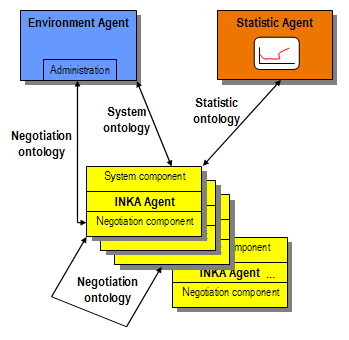

| Figure 3. The architecture of the INKA system |


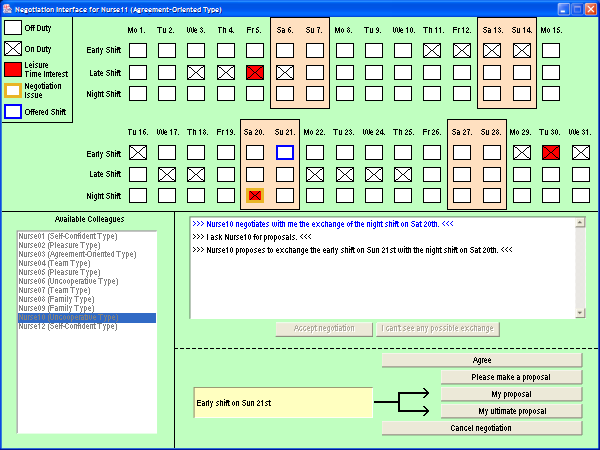

| Figure 4. The interface for manual control of an INKA agent |

A non-restrictive set of social types achieves better results with regard to shift-plan quality than a restrictive set of social types.

The distribution pattern of the social types, rather than the individual social types involved, is the essential factor for the quality of shift-plans.

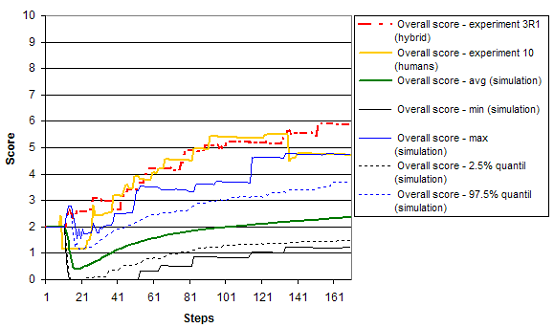

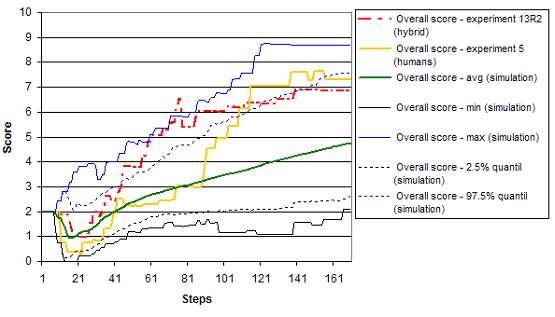

Shift-plan quality differs depending on the nature of the negotiation partners, that is, on whether they are exclusively agents, exclusively humans or a mixture of both.

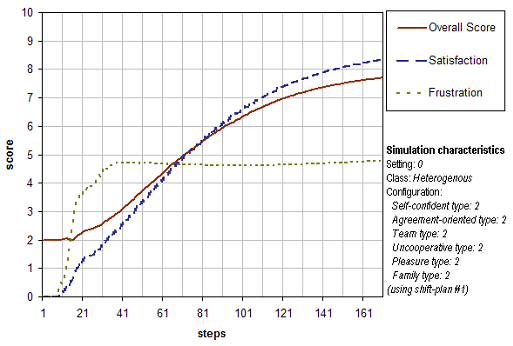

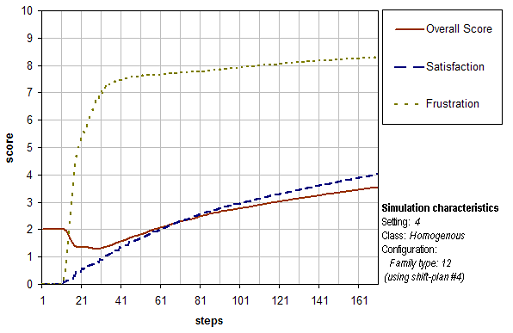

Collective satisfaction with the negotiated shift-plan (CS): Individual satisfaction is expressed as the percentage of its leisure-time interest that each individual can realise. The collective measure is the average of the individual satisfaction values, scaled to a range from 0 to 10. A higher collective satisfaction indicates a shift-plan of higher quality.

Collective frustration caused by negotiating the shift-plan (CF): The individual interest in efficient shift negotiations is defined in negative terms. Individual frustration is measured as the ratio of unsuccessful negotiations (that is, negotiations that were cancelled) to all negotiations in which the individual was involved. The collective value is the average of the individual values, again scaled to a range from 0 to 10.

$$\mathit{OS} = (1 - w) \cdot \mathit{CS} + w \cdot (10 - \mathit{CF}) \tag{6}$$

In the overall score OS, the weight w is set to 0.2, so satisfaction (weight 0.8) has a stronger influence than frustration (weight 0.2).
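A minimal Python sketch of the two collective measures and Equation (6), assuming individual satisfaction is given as a percentage (0 to 100) of realised leisure-time interest and individual frustration as counts of cancelled and total negotiations. How individuals who took part in no negotiation are treated is not stated; the sketch counts them as unfrustrated. Function names and sample numbers are hypothetical.

```python
from statistics import mean


def collective_satisfaction(realised_lti_pct):
    """CS: mean percentage of realised leisure-time interest, scaled from 0-100 to 0-10."""
    return mean(realised_lti_pct) / 10


def collective_frustration(cancelled, involved):
    """CF: mean per-individual ratio of cancelled to all negotiations, scaled to 0-10.

    Assumption: an individual involved in no negotiation has frustration 0.
    """
    ratios = [c / n if n else 0.0 for c, n in zip(cancelled, involved)]
    return 10 * mean(ratios)


def overall_score(cs, cf, w=0.2):
    """Equation (6), with the weight w = 0.2 used in the experiments as default."""
    return (1 - w) * cs + w * (10 - cf)


if __name__ == "__main__":
    # Three hypothetical individuals.
    cs = collective_satisfaction([80, 60, 70])
    cf = collective_frustration(cancelled=[1, 0, 2], involved=[4, 2, 4])
    print(cs, cf, overall_score(cs, cf))
```

Frustration enters Equation (6) as 10 − CF, so both terms grow with plan quality and the overall score stays on the same 0 to 10 scale as its inputs.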


| Figure 5. Simulation of a heterogeneous setting |


| Figure 6. Simulation of a homogeneous setting |

Table 3: Test of hypotheses 1 and 3

| Setting | Agents | Hybrid | Humans | All |
|---|---|---|---|---|
| Homogeneous setting | 5.22 | 7.20 | 8.53 | 5.97 |
| Group setting | 6.80 | 8.02 | 8.45 | 7.26 |
| Outsider setting | 6.87 | 8.19 | 8.29 | 7.29 |
| Heterogeneous setting | 7.54 | 8.27 | 8.20 | 7.81 |
| All settings | 6.59 | 7.94 | 8.37 | |

Table 4: Test of hypothesis 2

| Social type | Homogeneous setting | Group setting | Outsider setting | All |
|---|---|---|---|---|
| Self-confident type | - | 5.51 | 7.29 | 7.02 |
| Uncooperative type | - | 6.41 | 7.16 | 7.02 |
| Agreement-oriented type | - | 6.41 | 7.16 | 7.02 |
| Family type | 5.20 | 8.86 | 7.10 | 7.24 |
| Pleasure type | 6.65 | 8.19 | 7.79 | 7.73 |
| Team type | - | 8.86 | 7.50 | 7.94 |


| Figure 7. Overall score and statistical spread of homogeneous settings (family type) |


| Figure 8. Overall score and statistical spread of heterogeneous settings |

2 We do not aim to develop a better tool for roster construction; rather, the problem of negotiating the exchange of work shifts is taken as a real-life problem for investigating systems composed of artificial and human actors.

3 See http://www.pcs.usp.br/~mabs/ and http://boid.info/CoOrg06.

4 These are defined in particular by the restrictions of the formal role.

5 The implementation of experiences, and of how they are taken into account when selecting future interaction partners, is in fact a two-layered reinforcement mechanism: one layer is directed at the individual agent, while the second is directed at the more general social type. This approach is closely related to work on trust (see, for instance, Gambetta 1988). Because the specific partner selection method is not the focus of this article, we do not discuss it in detail here. Our two-layered approach to reinforcement learning and trust implies that similar agents (e.g., agents of the same social type) are more likely to be correlated with regard to trust. This view is supported by Ziegler and Lausen (2004).

6 Unlike multi-agent-based simulation tools such as SWARM (http://www.swarm.org), the JADE platform is suited not to large, reproducible simulations but to truly concurrent, distributed MAS.

7 ISO 9241-10: Ergonomic requirements for office work with visual display terminals (VDTs) – Part 10: Dialogue principles, International Organization for Standardization.

8 The INKA system can also be used for small simulations by running an artificial setting consisting of agents only. Such simulations were used to check for potential problems and pitfalls before the experiment with humans, and to fine-tune the experimental set-up.

9 The set-up described was evaluated in a pre-test on a smaller scale.

10 Exploratory simulations have shown that 500 runs per setting produce a good significance level for estimating means and variances of simulation results.

Table 5: Application of social types within the agents' decision processes

| Social type | Weight of leisure-time interests w_LTI | Default leisure-time interest: early shift | Default leisure-time interest: late shift | Default leisure-time interest: night shift | Weight of capital accumulation w_CA | Capital interest c1 (economic) | Capital interest c2 (cultural) | Capital interest c3 (social) | Capital interest c4 (symbolic) | Acceptance line: start below best (%) | Acceptance line: slope (%) | Cancel line: start below worst (%) | Cancel line: slope (%) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Family type | -132.8 | 0 | 70 | 0 | 1 | 80 | 50 | 50 | 20 | 0 | -5 | 30 | 10 |
| Team type | -132.8 | 0 | 0 | 0 | 1 | 20 | 50 | 80 | 80 | 25 | -20 | 30 | 5 |
| Uncooperative type | -132.8 | 0 | 0 | 0 | 1 | 50 | 80 | 20 | 50 | 0 | -5 | 5 | 20 |
| Self-confident type | -132.8 | 0 | 0 | 0 | 1 | 50 | 80 | 20 | 50 | 0 | -5 | 30 | 20 |
| Agreement-oriented type | -132.8 | 0 | 0 | 0 | 1 | 20 | 50 | 50 | 20 | 50 | -20 | 55 | 5 |
| Pleasure type | -132.8 | 70 | 0 | 70 | 1 | 80 | 20 | 50 | 20 | 25 | -10 | 30 | 10 |

Note: For the application of social types within the agents' decision processes, we had to transform the profiles of the social types given in Table 1 into concrete parameters and numerical values, as presented in this table. The preference, and hence the non-preference, of certain shift types is transformed into default leisure-time interests (0 and 70, respectively). Individual leisure-time interests override the default values. Note that default leisure-time interests will not trigger a negotiation, as they are not considered significant (see Table 7). However, they may change the utility of an exchange. The capital interests result from the preferred (set to 80) and irrelevant (set to 20) forms of capital in the social type profile; forms of capital not explicitly mentioned are set to 50. The weights are currently equal for all social types. They have been set so that a shift with maximum leisure-time interest (100) always results in a negative shift utility. We derive the parameters that define the strategic lines from the willingness to compromise (acceptance line) and from the willingness to negotiate (cancel line). All parameters are percentages of the difference between the utility of the best and the worst exchange. The greater the willingness to compromise, the lower the acceptance line starts and the steeper its decrease. The smaller the willingness to negotiate, the higher the cancel line starts and the steeper its increase.
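The note specifies the strategic lines only by a start offset and a slope, both given as percentages of the utility difference between the best and the worst exchange. Assuming the lines are straight and that the slope is applied once per negotiation round t (neither the shape nor the time unit is stated here), they can be sketched as follows. The function names and the round counter are hypothetical; the parameter values are copied from Table 5.

```python
def acceptance_line(best, worst, start_below_best_pct, slope_pct, t):
    """Utility level of the acceptance line after t rounds (assumed linear form)."""
    span = best - worst
    return best - start_below_best_pct / 100 * span + slope_pct / 100 * span * t


def cancel_line(best, worst, start_below_worst_pct, slope_pct, t):
    """Utility level of the cancel line after t rounds (assumed linear form)."""
    span = best - worst
    return worst - start_below_worst_pct / 100 * span + slope_pct / 100 * span * t


# Strategic-line parameters from Table 5:
# (acceptance start below best, acceptance slope, cancel start below worst, cancel slope), in %
LINES = {
    "family": (0, -5, 30, 10),
    "team": (25, -20, 30, 5),
    "uncooperative": (0, -5, 5, 20),
    "self_confident": (0, -5, 30, 20),
    "agreement_oriented": (50, -20, 55, 5),
    "pleasure": (25, -10, 30, 10),
}

if __name__ == "__main__":
    a_start, a_slope, c_start, c_slope = LINES["family"]
    best, worst = 100.0, 0.0  # hypothetical utilities of the best and worst exchange
    for t in range(0, 10, 3):
        print(t,
              acceptance_line(best, worst, a_start, a_slope, t),
              cancel_line(best, worst, c_start, c_slope, t))
```

Under these assumptions the family type's acceptance line starts at the best exchange and drops by 5% of the utility range per round, while its cancel line starts 30% of the range below the worst exchange and rises by 10% per round.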

Table 6: Typical capital accumulation of the different shift types

| Shift type | Economic capital | Cultural capital | Social capital | Symbolic capital |
|---|---|---|---|---|
| Early shift weekday | 0 | 40 | 20 | 20 |
| Late shift weekday | 10 | 40 | 10 | 22 |
| Night shift weekday | 20 | 20 | 0 | 16 |
| Early shift weekend | 50 | 40 | 13 | 39 |
| Late shift weekend | 50 | 20 | 10 | 30 |
| Night shift weekend | 100 | 20 | 0 | 48 |

Table 7: Global parameters

| Parameter | Value / range |
|---|---|
| Significance threshold for leisure-time interests (LTI) | 75 |
| Magnitude of experience change step_exp | 0.05 |
| Initial experience values | 0.5 |
| Time steps during which a given issue cannot be negotiated with a given partner after a negotiation | 30-50 |
| Time steps during which an issue cannot be negotiated if no partner can be found, or during which no issue can be negotiated if no issue can be found at all | 3-7 |
| Time steps to wait before the next negotiation starts | 3-7 |
| Time steps to wait for the partner's reaction before a timeout is sent | 15 |
| Number of consecutive identical proposals before the booster is activated | 2-4 |

ALMOG O (1998), The problem of social type: A review, Electronic Journal of Sociology, vol. 3, no. 4 http://www.sociology.org/content/vol003.004/almog.html (12.11.2003).

ARONSON E, WILSON TD, and BREWER MB (1998), Experimentation in Social Psychology, in Gilbert DT, Fiske ST, and Lindzey G (eds), The Handbook of Social Psychology, 4th edition, Boston, MA: McGraw-Hill, 99-142.

BARRETEAU O, BOUSQUET F, and ATTONATY J-M (2001), Role-Playing Games for Opening the Black Box of Multi-Agent Systems: Method and Lessons of its Application to Senegal River Valley Irrigated Systems, Journal of Artificial Societies and Social Simulation, vol. 4, no. 2 https://www.jasss.org/4/2/5.html.

BERG M, and TOUSSAINT P (2003), The mantra of modelling and the forgotten powers of paper: A sociotechnical view on the development of process-oriented ICT in health care, International Journal of Medical Informatics, vol. 66, no. 2-3, 223-234.

BOURDIEU P (1986), The (three) Forms of Capital, in Richardson JG (ed.), Handbook of Theory and Research in the Sociology of Education, New York and London: Greenwood Press, 241-58.

BOWKER GC, STAR SL, TURNER W, and GASSER L (eds) (1997), Social Science, Technical Systems, and Cooperative Work. Beyond the Great Divide, Hillsdale, NJ: Lawrence Erlbaum.

BRADSHAW JM, SIERHUIS M, ACQUISTI A, FELTOVICH P, HOFFMAN R, JEFFERS R, PRESCOTT D, SURI N, USZOK A, and VAN HOOF R (2003), Adjustable Autonomy and Human-Agent Teamwork in Practice: an Interim Report on Space Applications, in Hexmoor H, Castelfranchi C, and Falcone R (eds), Agent Autonomy, Kluwer, 243-80.

CAMPBELL DT (1988), Factors Relevant to the Validity of Experiments in Social Settings, in Overman ES (ed.), Methodology and Epistemology for Social Science. Selected Papers, Chicago and London: University of Chicago Press, 151-66.

CAMPBELL DT and RUSSO MJ (1999), Social Experimentation, London: Sage.

CAMPBELL DT and STANLEY JC (1963), Experimental and Quasi-Experimental Designs for Research, Chicago: Rand McNally.

CASTELFRANCHI C (2003), Formalising the informal? Dynamic social order, bottom-up social control, and spontaneous normative relations. Journal of Applied Logic, vol. 1, no. 1-2, 47-92.

COHEN MD, MARCH JG, and OLSEN JP (1972), A Garbage Can Model of Organizational Choice, Administrative Science Quarterly, vol. 17, no. 1, 1-25.

DAHRENDORF R (1973), Homo Sociologicus, London: Routledge and Kegan Paul.

ESSER H (1999), Soziologie – Spezielle Grundlagen. Band 1: Situationslogik und Handeln, Frankfurt a.M.: Campus.

FATIMA SS, WOOLDRIDGE M, and JENNINGS NR (2003), Optimal Agendas for Multi-Issue Negotiation, in Proceedings of the 2nd International Joint Conference on Autonomous Agents and Multi-Agent Systems (AAMAS'03), ACM, 129-36.

FERBER J (1999), Multi-Agent Systems. Introduction to Distributed Artificial Intelligence, Reading, MA: Addison Wesley.

FISCHER K, MÜLLER JP, and PISCHEL M (1998), A Pragmatic BDI architecture, in Huhns MN and Singh MP (eds), Readings in Agents, Morgan Kaufmann, 217-24.

GAMBETTA D (1988), Can we Trust Trust?, in Gambetta D (ed.), Trust: Making and Breaking Cooperative Relations, Oxford: Blackwell, 213-37.

GROSSI D, DIGNUM F, ROYAKKERS L, and DASTANI M (2005), Foundations of Organizational Structures in Multiagent Systems, in Proceedings of the 4th International Joint Conference on Autonomous Agents and Multi-Agent Systems (AAMAS'05), ACM, 690-698.

GUYOT P and DROGOUL A (2004), Designing Multi-Agent based Participatory Simulations, in Coelho H and Espinasse B (eds), 5th Workshop on Agent-Based Simulations, Lisbon: Publishing House, 32-37.

HUHNS MN and STEPHENS LM (1999), Multiagent Systems and Societies of Agents, in Weiss G (ed.), Multiagent Systems: A Modern Approach to Distributed Artificial Intelligence, MIT Press, 79-120.

JENNINGS NR, FARATIN P, LOMUSCIO AR, PARSONS S, WOOLDRIDGE M, and SIERRA C (2001), Automated Negotiations: Prospects, Methods and Challenges, Group Decision and Negotiation, vol. 10, no. 2, 199-215.

KORTENKAMP D and FREED M (eds) (2003), Human Interaction with Autonomous Systems in Complex Environments, AAAI Spring Symposium Technical Report SS-03-04, Palo Alto: AAAI Press.

KURBEL K and LOUTCHKO I (2002), Multi-Agent Negotiation under Time Constraints on an Agent-Based Marketplace for Personnel Acquisition, in Proceedings of the 3rd International Symposium on Multi-Agent Systems, Large Complex Systems, and E-Business (MALCEB 2002), 566-79.

MARCH JG and SIMON HA (1958), Organizations, New York: Wiley.

MEISTER M, URBIG D, GERSTL R, LETTKEMANN E, OSHERENKO A, and SCHRÖTER K (2002), Die Modellierung praktischer Rollen für Verhandlungssysteme in Organisationen. Wie die Komplexität von Multiagentensystemen durch Rollenkonzeptionen erhöht werden kann, Berlin: Technische Universität Berlin, Technology Studies TUTS-WP-6-2002, http://www.tu-berlin.de/~soziologie/Tuts/Wp/TUTS_WP_6_2002.pdf.

MEISTER M, URBIG D, SCHRÖTER K, and GERSTL R (2005), Agents Enacting Social Roles. Balancing Formal Structure and Practical Rationality in MAS design, in Fischer K and Florian M (eds), Socionics, Berlin: Springer, 104-131.

MEYER JW and ROWAN B (1977), Institutionalized Organizations: Formal Structure as Myth and Ceremony, American Journal of Sociology, vol. 83, 340-63.

NICKLES M, ROVATSOS M, SCHMITT M, BRAUER W, FISCHER F, MALSCH T, PAETOW K, and WEISS G (2007), The Empirical Semantics Approach to Communication Structure Learning and Usage: Individualistic vs. Systemic Views. Journal of Artificial Societies and Social Simulation, vol. 10, no. 1, https://www.jasss.org/10/1/5.html.

POPITZ H (1967), Der Begriff der sozialen Rolle als Element der soziologischen Theorie, Tübingen: Mohr (Paul Siebeck).

RAMANATH AM and GILBERT N (2004), The Design of Participatory Agent-Based Social Simulations, Journal of Artificial Societies and Social Simulation, vol. 7, no. 4, https://www.jasss.org/7/4/1.html.

RAMMERT W (1998), Giddens und die Gesellschaft der Heinzelmännchen. Zur Soziologie technischer Agenten und Systemen verteilter künstlicher Intelligenz, in Malsch T (ed.), Sozionik. Soziologische Ansichten über künstliche Sozialität, Berlin: Sigma, 91-128.

RAMMERT W (2003), Technik in Aktion: Verteiltes Handeln in soziotechnischen Konstellationen, in Christaller T and Wehner J (eds), Autonome Maschinen, Frankfurt a.M.: Campus, 289-315.

RAMMERT W and SCHULZ-SCHAEFFER I (eds) (2002), Können Maschinen handeln? Soziologische Beiträge zum Verhältnis von Mensch und Technik, Frankfurt a.M.: Campus.

RAO AS and GEORGEFF MP (1991), Modeling Rational Agents within a BDI Architecture, in Fikes R and Sandewall E (eds), Proceedings of Knowledge Representation and Reasoning (KRR-91), Morgan Kaufmann, 473-84.

SCHRÖTER K and URBIG D (2004), C-IPS: Specifying Decision Interdependencies in Negotiations, in Lindemann G et al. (eds), Proceedings of the Second German Conference on Multiagent System Technologies (MATES 2004), Springer, 114-25.

SIERHUIS M, VAN HOOF R, CLANCEY WJ, and SCOTT M (2001), From Work Practice Models and Simulation to Implementation of Human-Centered Agent Systems, in Proceedings of ASCW01, Workshop on Agent Supported Cooperative Work, Montreal, Canada, May 2001, http://www.agentisolutions.com/documentation/papers/Workshop_on_%20AgentSupportedCooperativeWork.pdf, accessed 02.09.2002.

SUCHMAN L (1987), Plans and Situated Actions. The Problem of Man-Machine Communication, Cambridge, UK: Cambridge University Press.

TURNER RH (1962), Role-Taking: Process versus Conformity, in Rose AM (ed.), Human Behavior and Social Process. An Interactionist Perspective, London: Routledge, 20-40.

URBIG D, MONETT DIAZ D, and SCHRÖTER K (2003), The C-IPS Architecture for Modelling Negotiating Social Agents, in Schillo M et al. (eds), Proceedings of the First German Conference on Multiagent System Technologies (MATES 2003), Springer, 217-28.

URBIG D and SCHRÖTER K (2005), Negotiating Agents: from full Autonomy to Degrees of Delegation, to appear in Proceedings of the 4th International Joint Conference on Autonomous Agents and Multi Agent Systems (AAMAS 2005), Utrecht, 25-29 July 2005.

ZIEGLER C-N and LAUSEN G (2004), Analyzing Correlation between Trust and User Similarity in Online Communities, in Proceedings of the 2nd International Conference on Trust Management, Volume 2995 of LNCS, 251-265.

ZIMMERMANN E (1972), Das Experiment in den Sozialwissenschaften, Stuttgart: Teubner.


© Copyright Journal of Artificial Societies and Social Simulation, [2007]