Abstract

- Psychological research on human creativity focuses

primarily on individual creative performance. Assessing creative

performance is, however, also a matter of expert evaluation. Few

psychological studies model this aspect explicitly as a human process,

let alone measure creativity longitudinally. An agent-based model was

built to explore the effects contextual factors such as evaluation and

temporality have on creativity. Mihaly Csikszentmihalyi's systems

perspective of creativity is used as the model's framework, and

stylized facts from the domain of creativity research in psychology

provide the model's contents. Theoretical experimentation with the

model indicated that evaluators and their selection criteria play a pivotal

role in constructing human creativity. This insight has major

implications for designing future creativity research in psychology.

- Keywords:

- Creativity, Social Psychology, Mihaly Csikszentmihalyi, Social Systems, Cultural Evolution, Information Theory

Introduction

- 1.1

- The phenomenon of creativity captures the attention of

countless experts in psychological science (Cropley

et al. 2010; Glover et

al. 2010; Kaufman

& Sternberg 2006, 2010;

Mumford 2011; Piirto 2004; Runco 1997, 2007; Runco

& Pritzker 2011; Sawyer

2006; Sternberg 1999b;

Sternberg et al. 2004;

Zhou & Shalley 2007).

Wehner, Csikszentmihalyi and Magyari-Beck (1991)

investigated approaches used in research on creativity to assess how

the construct is studied. Using their own taxonomy, they classified

scientific work according to its examined social level (individual,

group, organization or culture), aspect (trait, process or product) and

methodology (quantitative or qualitative; empirical or theoretical).

Particularly in the domain of psychology, their results indicated a

strong focus on individual traits. Here, traits

refer to any stable individual attributes, for instance personality

traits (Feist 1999; McCrae 1987; Ochse 1990; Schuler & Görlich 2006),

behavioral patterns (Stokes 1999,

2001, 2007) or cognitive styles (Dewett & Williams 2007;

Kirton 1976; Kwang et al. 2005; Mednick 1962). Kahl, Hermes da

Fonseca & Witte (2009)

conducted a near-replication of Wehner et al.'s (1991) work and produced very

similar results: Almost 20 years later, creativity researchers in the

domain of psychology still place the most emphasis on studying

individuals and their traits. This also holds for neighboring

disciplines such as education and social sciences.

Assessing creativity in psychological science

- 1.2

- There is more to creativity than just individuals and their

traits, however. This assumption also applies to studies conducted in

psychological science. The following brief outline of creativity

research in psychology elaborates on this, and it exposes a trace of a

social system inherent in creativity assessments.

- 1.3

- Psychological research on creativity commonly encompasses

the administration of non-algorithmic tasks to

study participants who work individually on them. A non-algorithmic

task is characterized by its "open-endedness", that is, there is no one

right solution and, in principle, endless solutions may be generated by

the experimental unit (individual, group, etc.). A classic example is

the alternative uses task, for instance, "name all

the uses for a brick" (Guilford

1967). Contemporary studies contain more realistic tasks with

regard to their commonly recruited student samples: "Name ways your

university/faculty/cafeteria can be improved" (Adarves-Yorno et al. 2006;

Nijstad et al. 2003; Paulus & Brown 2003; Rietzschel et al. 2006, 2010). Besides the verbal

examples mentioned here, figural non-algorithmic tasks are available (Ward et al. 1999).

- 1.4

- After participants generate solutions to administered

tasks, their work is evaluated by judges. As a method of scientific

control, these raters communicate neither with study participants nor

with each other. Nevertheless, Lonergan et al. (2004) argue "the evaluative

process itself may be a highly creative, or generative, undertaking"

(p. 231). Silvia (2008)

admits "…judges are a source of variability, so they are more

appropriately understood as a facet in the research design" (p. 141).

Generally, the evaluators involved in creativity studies are

categorized as being a) selves or others and b) experts or non-experts

(regarding the task at hand). Furthermore, their

specific task is to respond to participants' manifest solutions with a

predefined measuring instrument. Although diverse methods to measure

creativity exist (Amabile 1982,

1996; Kaufman et al. 2008; Kozbelt & Serafin 2009;

Lonergan et al. 2004;

Rietzschel et al. 2010;

Runco et al. 1994; Silvia 2008; Sternberg 1999a), most

converge in their use of the criteria novelty and appropriateness

to indicate the creativity of specific manifest behavior (Amabile & Mueller 2007;

Lubart 1999; Metzger 1986; Preiser 2006; Schuler & Görlich 2006;

Styhre 2006; Ward et al. 1999; Zysno & Bosse 2009).

Shalley & Zhou (2007,

p. 6) noted "novel ideas are those that are unique compared to other

ideas currently available" and "useful or appropriate ideas are those

that have the potential to add value in either short or long term".

Similarly, Lubart (1999,

p. 339) stated novel work is "distinct from previous work" and

"appropriate work satisfies the problem constraints, is useful or

fulfils a need".

- 1.5

- It should be noted that several synonyms for these two

qualities recur in the literature on creativity research. For instance,

novelty is interchanged with originality, newness, unexpectedness,

freshness and uniqueness (Couger et al.

1989; Sternberg 1999a).

Appropriateness is alternately described with words such as usefulness,

utility, value, adaptiveness and practicality (ibid). Neither term

seems to have been explicitly distinguished from its respective

synonyms yet. Instead, implicit scientific agreement appears to prevail

regarding the issue that both subsets of terms refer to the same latent

dimensions of creativity – most commonly labeled novelty and

appropriateness. In common research practice, both attributes are

conceived as independent of each other and aggregated with equal

weighting (Amabile &

Mueller 2007; Schuler

& Görlich 2006).

- 1.6

- Summarizing, a definition, or rather operationalization, of

creativity in psychological research is given under the following

conditions: a) The task at hand is heuristic rather than algorithmic;

b) any particular response to the task is considered creative if it is

judged to be both novel and appropriate; c) judges make their ratings

independently and without prior training, that is, they are prompted to

use their personal, subjective definition of what is novel and

appropriate; d) judges are designated experts, even if their

domain-specific knowledge is not identical. They need not have created

themselves, but require enough experience in the domain to have

developed a personal sense for what is novel and appropriate; e)

interrater reliability must be sufficiently high to establish

creativity has been validly assessed.

- 1.7

- This brief outline of creativity research in psychology

indicates there is another sample inherent in every study on

creativity: the evaluators. Admittedly, researchers have started to

acknowledge the variance evaluators may produce in measuring creativity

(Haller et al. 2011; Herman & Reiter-Palmon 2011;

Randel et al. 2011; Silvia 2011; Westmeyer 1998, 2009). Nevertheless, the two

samples – study participants and evaluators – fall short of

constituting a social system as they interact neither directly nor

indirectly: Participants' creativity is usually measured just once and

they do not receive feedback from evaluators. This implies evaluators'

impact on constructing what psychologists measure as creativity remains

obscure.

Defining an alternative view of creativity

- 1.8

- How would the contribution psychological science makes to

creativity research change if participants were assessed more than

once, and were affected by evaluators' judgments as well as by their

peers? The systems perspective of creativity developed by

Csikszentmihalyi (1999)

illustrates such aspects, postulating they play a key part in the

emergence of creativity as a social construct. His view is briefly

outlined in the following.

- 1.9

- According to Csikszentmihalyi's (1988, 1999) systems

perspective, an individual creator is surrounded by an environment

consisting of a field and a domain. Whereas the field represents a part

of society, and therefore human beings, the domain represents a part of

the individual's and the field's culture. It is a symbolic system

containing information such as ideas, physical objects, behaviors,

styles, values. Essentially, the dynamic between individual creator,

field and domain functions in the following manner: An individual

creates something novel, and the novelty must be accepted, or socially

validated, by the evaluating field in order to be included in the

domain, which serves as a frame of reference to both creators and

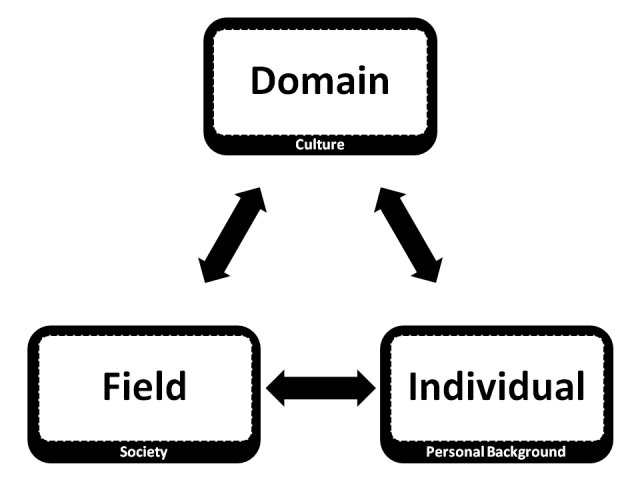

evaluators (Figure 1).

Creator(s) and their field do not necessarily have access to exactly

the same elements in the domain. Furthermore, the domain's contents

change as creators and their field make changes to it. Csikszentmihalyi

(1999) asserted

that creativity can only be determined by studying the interaction

between creators, their fields and corresponding domains.

Figure 1. Graphical replication of Csikszentmihalyi's (1999) systems perspective of creativity - 1.10

- The dynamics of individuals creating, a field evaluating

and their domain changing establish a way to imagine psychological

studies of creativity as a process. Moreover, the perspective resembles

the evolutionary mechanism of variation, selection and retention (VSR;

see Rigney 2001).

Described concisely, an artifact is produced by a creator in the

variation phase. The selection phase describes how an evaluator –

simply put – accepts or rejects the artifact, either allowing it to

persist or reducing its chance of continuance. In the retention phase,

selected artifacts are preserved in some information system (e.g.,

culture, domain) to be reused in later variation and selection phases

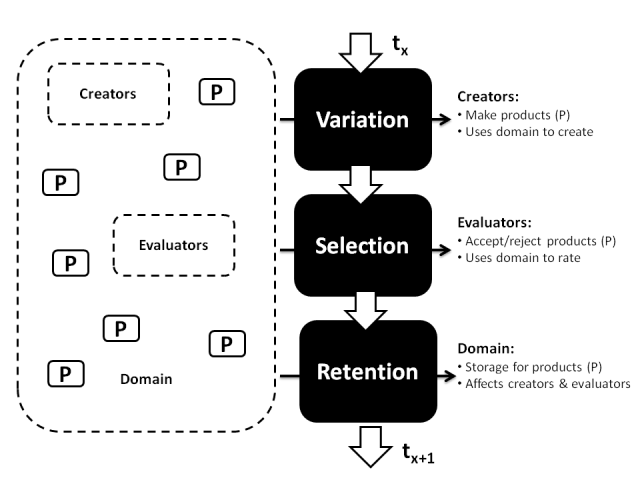

(see Figure 2 for a summary).

Even though verbal and figural descriptions coerce VSR models to a

linear form, these processes do not necessarily occur in this fashion

in practice (Baldus 2002; Csikszentmihalyi 1988;

Gabora & Kaufman 2010).

Figure 2. Subsystems (creators, evaluators and domain) and their processes (variation, selection and retention) - 1.11

- The unit of variation and selection is the single artifact

or its comprising parts[1].

Artifacts are inherited by creators and evaluators when they

acknowledge and retain them (Buss 2004).

A domain's contents are therefore perceived, processed, (re)produced,

assessed and disseminated. They accumulate and disappear only if they

are collectively overlooked, forgotten or deselected. The domain

changes relative to how creators and evaluators behave, and creators

and evaluators change depending on what information is available in the

domain they create (Dasgupta 2004;

Gabora & Kaufman 2010).

The domain is therefore a dynamic entity characterized by change and

growth, but it is not unrestricted. It coevolves with constraints set

by its environment (Bryson 2009),

namely in the systems perspective of creativity presented here,

creators

and evaluators. Moreover, evaluators are explicit restrictors because

their main purpose in the system is to filter variations, even if they

do so with differential rigor. Implicit restrictions occur, for

instance, by coincidence (e.g., which variations are discovered,

imitation errors; Gabora &

Kaufman 2010) and due to internal psychological mechanisms

(e.g., how information is processed, how behavior is exhibited; Buss 2004) regarding creators and

evaluators alike. It should be emphasized that creators and evaluators

actively participate in the generation of creativity. Even if they

cannot predict their domain's contents, they can change them by

performing their specific roles in the system, that is, by creating and

attributing meaning.

- 1.12

- Admittedly, caution needs to be taken when using biological

evolutionary terms to describe cultural evolution (Baldus 2002; Chattoe 1998; Gabora 2004; Kronfeldner 2007, 2010), the latter similar

to the systems perspective of creativity described here. However,

analogical thinking does facilitate comprehension among advocates of

diverse concepts of creativity (cf. Campbell

1960; Csikszentmihalyi

1988, 1999;

Ford & Kuenzi 2007;

Ochse 1990; Simonton 2003). Kronfeldner (2009) suggests using

evolutionary analogies to aid thinking and communication between

fields, and to leave the details to the specific fields. VSR theorizing

also lends itself as an intermediary language to translate conceptual

ideas of creativity into a more linear description for scientific

exploration. It facilitates the designation of moments in time to

manipulate

and others to measure.

Purpose of this research

- 1.13

- The purpose of this research is to explore what there is to learn about how creativity is studied in psychological science by using Csikszentmihalyi's (1999) systems perspective as a framework for an agent-based model. As described above, psychological studies on creativity commonly stop with one assessment of the variation process. By using Csikszentmihalyi's (1999) model as a framework, selection and retention processes as well as their potential effects on variation can be theoretically explored. The next section describes the simulation model in detail.

Simulation model

- 2.1

- The agent-based model presented here is called CRESY (CREativity

from a SYstems perspective) and was constructed with NetLogo

4.1 (Wilensky 1999).

This section contains an abbreviated version of its ODD protocol (Grimm et al. 2006, 2010) as a model description.

The entire simulation model (NetLogo file) and supplementary material

(full ODD protocol, model verification, experiment documentation, data

analyses, etc.) can be downloaded from the CoMSES Computational Model

Library (OpenABM website)[2].

Purpose

- 2.2

- CRESY simulates creativity as an emergent phenomenon

resulting from variation, selection and retention processes (Csikszentmihalyi 1988,

1999; Ford & Kuenzi 2007; Kahl 2009; Rigney 2001). In particular, it

demonstrates the effects creators and evaluators have on emerging

artifact domains. It was built based on stylized facts from the domain

of creativity research in psychology in order to reflect common

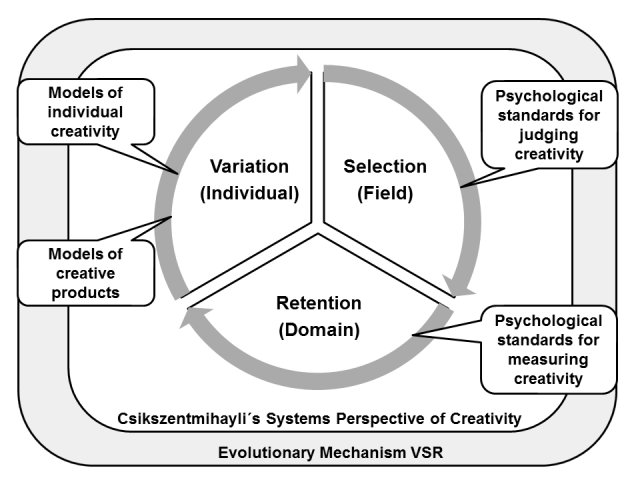

research practice there (Figure 3).

Figure 3. Basic concepts and models used in CRESY - 2.3

- An abstract model, CRESY was specifically designed for

theoretical exploration and hypotheses generation (Dörner 1994; Esser & Troitzsch 1991; Ostrom 1988; Troitzsch 2013; Ueckert 1983; Witte 1991). It constitutes a

form of research called theory-based exploration,

in which models, concepts, stylized facts and observations are used as

input to build a tentative model explored via computer simulation

particularly in order to generate new hypotheses and recommendations as

output (Bortz & Döring 2002,

Ch. 6; Kahl 2012, Ch. 4; Sugden 2000). In contrast to

explanatory research, the objective of exploratory research is to build

theory instead of testing it. In relating this method to the model's

target, Sternberg (2006)

noted creativity research is currently advancing not via its answers,

but via its questions.

Entities, state variables and scales

- 2.4

- CRESY encompasses the following entities: patchworks,

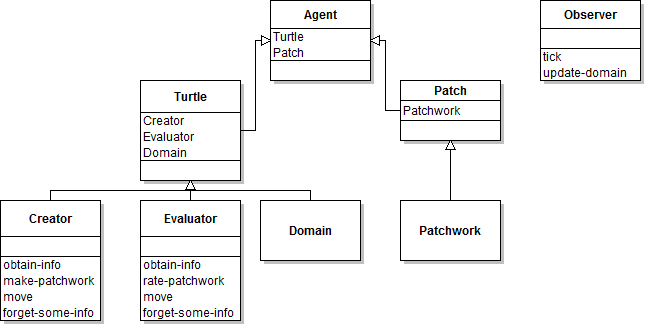

creators, evaluators and a domain (Figure 4).

The systems perspective of creativity outlined in the Introduction

was used as the model's framework, and auxiliary theory from the domain

of creativity research in psychology was used to formalize the entities

in a more concrete and face valid way. This renders the model

structurally more realistic and comparable to research practice in

psychological science (Gilbert 2008;

Meyer 2011).

Figure 4. Class diagram of CRESY (attributes are omitted for simplicity) Patchworks

- 2.5

- Patchworks abstractly represent artifacts creators produce

and evaluators rate, for instance, ideas (Paulus

& Brown 2003), paintings (DiPaola

& Gabora 2009), movements (Leijnen

& Gabora 2010), behavior strategies (Edmonds 1999) or other

cultural artifacts (Picascia

& Paolucci 2010). Technically, patchworks are

represented by stationary agents ("patches") in NetLogo and they appear

as RGB colors. RGB colors were chosen as artifacts because they

represent an operationalization of stylized facts about creative

products prevalent in creativity research in psychological science: a)

They represent manifest behavior; b) the three individual RGB values

represent disparate elements combined to form something new (Casti 2010; Sawyer 2006; Schuler & Görlich 2006;

Sternberg & Lubart

1999; Ward et al. 1999).

The production of patchworks becomes visible in NetLogo's View when

creators combine three independently selected values and "paint" them

on a nearby patch. Moreover, patchworks are the means by which creators

and evaluators communicate. The two agentsets do not interact directly;

they gain knowledge of the domain (i.e., other creators' and

evaluators' behavior) by processing patchworks locally. This feature

is comparable to situations in which creators do not know each other

personally, but know each other's work (Dennis

& Williams 2003; Müller

2009; Runco et al. 1994;

van den Besselaar &

Leydesdorff 2009), and it is very common in creativity

research (Kozbelt & Serafin

2009). It is also directly comparable to the way products are

rated by judges in creativity research. Raters usually do not have

contact with study participants. They judge the artifacts independently

and anonymously (Bechtoldt et al.

2010; Kaufman et al. 2008;

Lonergan et al. 2004;

Rietzschel et al. 2006;

Silvia 2008). See

Table 1 for patchwork state

variables and scales.

Table 1: Patchwork state variables and scales

| Name | Brief description | Value |
| --- | --- | --- |
| pcolor | List of RGB values (24 bit). | [r g b] |
| plabel | domSize category for pcolor. | Integer, [0, domSize-1] |
| pDom | List of RGB values reduced to domain variable domSize (6, 9 or 12 bit). | [r g b] |
| pR, pG, pB | Patch's current respective red, green and blue values. | Integer, [0,255] |
| madeBy | Who made patchwork? Environment (means a patch's color has not changed yet by any creator. It is still the color it randomly received when the world was initiated) or creator type. | {Env, Cx, C1, C2, C3} |
| whosNext | Holds who value of creator or evaluator potentially to next move on patch. Belongs to movement and evaluation procedures. | Integer, [1, number-creators] OR [1, number-evaluators] |
| justMade | Indicates whether patchwork was made during current step. | Boolean |
| toDo | Indicates whether evaluator still needs to judge patchwork. | Boolean |
| hue | Indicates whether patchwork's RGB color is warm or cool. | {warm,cool} |
| currentEvals | List of evaluators current scores for given patchwork. | List with {0,1} |
| cScore | Patchwork's cumulative creativity score. If a patchwork has never been rated before, its cScore is set to -1 by default and excluded from output variable analyses. | Integer, [0,1] |
| PcScoreList | Current domain evaluation list of cScores. | List of length domSize values of [0,1] |

Creators

- 2.6

- Creators are represented by mobile agents ("turtles") in

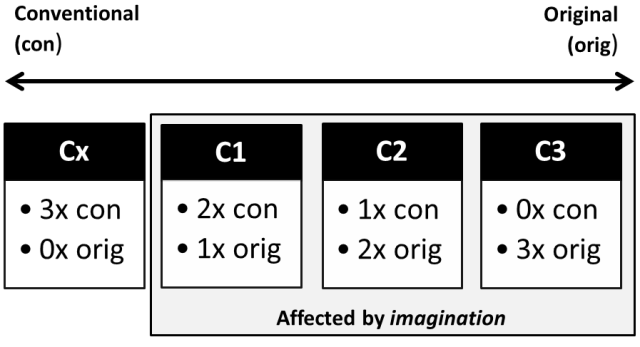

NetLogo. There are four different types (Cx,

C1, C2, C3), and they

differ in the way they make patchworks (i.e., solve a heuristic task).

Accordingly, creators vary in the way they combine three digits to form

an RGB color (see Submodels). Depending on their

variable imagination they can invent elements they

have never encountered before (genuine novelty). The ideas incorporated

in creators' design are based on stylized facts from creativity

research in psychological science (Abuhamdeh

& Csikszentmihalyi 2004; Brower

2003; Campbell 1960;

Cziko 1998; Dewett & Williams 2007;

Gruber 1988; Kirton 1976; Kwang et al. 2005; Mednick 1962; Mumford 2011; Richards 1977; Sawyer 2006; Simonton 1998, 2002, 2003, 2004; Smith

2008; Sternberg

& Lubart 1999; Stokes

1999, 2007; Ward et al. 1999; Welling 2007; for an overview

see Kahl 2012, Ch. 7): a)

Individuals differ in their creative behavior; b) their differences are

often conceptualized as dichotomous extreme types (e.g., creative vs.

not creative, adaptors vs. innovators); c) dichotomous types are

nevertheless connected by a theoretical continuum, making more subtle

individual differences detectable. See Table 2

for creator state variables and scales.

Table 2: Creator state variables and scales

| Name | Brief description | Value |
| --- | --- | --- |
| label | Describes (static) creator type. | {Cx,C1,C2,C3} |
| cR, cG, cB | Creator's 3 memory lists for red, green & blue values. Each a list of 256 nested lists; item 0 = color value ($c_j$), item 1 = absolute frequency ($f_j$) of value $c_j$. | [[0 $f_1$] [1 $f_2$], …, [$c_j$ $f_j$], …, [255 $f_{256}$]] |
| imagination | How likely creators C1, C2 & C3 will generate an r, g or b value not in their memory. Same value for all three creator types. | [0.00, 0.25] |
| info-rate | How many neighbors (4 or 8) a creator obtains info from per time step. Same for all creators. | {n4,n8} |
| movement | How creators move in the world. | {straightFd1, ahead3, allButBehind7, any8} |

Evaluators

- 2.7

- Evaluators are mobile agents ("turtles") in NetLogo and

their task is to judge the patchworks creators make. They do so based

on the criteria novelty and appropriateness, although their stringency

in using these criteria varies. Novelty is a

quality attributed to a patchwork when an evaluator perceives it to be

statistically infrequent compared with other patchworks it has in its

memory. Appropriateness is a quality attributed to

a patchwork when an evaluator considers its hue to agree with the hues

of its neighbors (see Submodels).

- 2.8

- Whereas creators can make any one of 256³

RGB colors, evaluators perceive them with a lesser degree of

differentiation (this is based on evaluators'

state variable domSize; Table 3).

Their recognition of patchworks, therefore, is not as fine-tuned as

that of creators. In evolutionary terms, they only perceive a

patchwork's phenotype, whereas creators are aware of patchworks'

genotypes (Chattoe 1998).

The ideas incorporated in evaluators' design reflect stylized facts

from research on how to rate the creativity of human behavior (Adarves-Yorno et al. 2006;

Amabile 1982, 1996; Haller

et al. 2011; Herman

& Reiter-Palmon 2011; Kaufman

et al. 2008; Kozbelt

& Serafin 2009; Randel

et al. 2011; Rietzschel

et al. 2010; Runco et al.

1994; Silvia 2008,

2011; for an overview

see Kahl 2012, Ch. 9): a)

Evaluations are based on the criteria novelty and appropriateness; b)

evaluators' stringency in using these criteria may differ; c) a

concrete definition of creativity is given via evaluators' role in the

creative process, that is, by their individual attribution of

differential value to artifacts; d) evaluators make their judgments

independently of each other. See Table 3

for evaluator state variables and scales.
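The coarser perception described above can be illustrated with a minimal Python sketch. The text does not state how CRESY reduces a 24-bit color to the domSize resolution, so two things here are assumptions made purely for illustration: that each channel keeps only its most significant bits (2, 3 or 4 bits for a domSize of 64, 512 or 4096), and that the integer category is formed by packing the three reduced channels. The function names are hypothetical.

```python
def bits_per_channel(dom_size):
    """Bits kept per color channel for domSize 64, 512 or 4096 (6, 9 or 12 bit)."""
    return {64: 2, 512: 3, 4096: 4}[dom_size]


def perceive(rgb, dom_size):
    """Reduce a 24-bit [r, g, b] color to the evaluator's coarser view.

    Assumption: only the most significant bits of each channel are kept.
    """
    k = bits_per_channel(dom_size)
    return [value >> (8 - k) for value in rgb]


def category(rgb, dom_size):
    """Map a color to an integer in [0, dom_size - 1] (hypothetical encoding)."""
    k = bits_per_channel(dom_size)
    r, g, b = perceive(rgb, dom_size)
    return (r << (2 * k)) | (g << k) | b
```

Under these assumptions, two colors that a creator treats as distinct, such as [200, 10, 10] and [201, 12, 9], fall into the same category for an evaluator with a domSize of 64, which is the genotype versus phenotype distinction drawn in the text.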

Table 3: Evaluator state variables and scales

| Name | Brief description | Value |
| --- | --- | --- |
| label | Describes (static) evaluator type. | {En,Ea,Ena} |
| eMem | Evaluator's memory list for patchworks seen. List of domSize nested lists; item 0 = domSize value ($c_j$), item 1 = absolute frequency ($f_j$) of value $c_j$. | [[0 $f_1$] [1 $f_2$], …, [$c_j$ $f_j$], …, [n $f_n$]] |
| domSize | Size of the patchwork space evaluators can perceive, e.g., the number of RGB colors (patchworks) they can discriminate (6, 9 or 12 bit). This variable is set by the observer globally for all evaluators. | {64, 512, 4096} |
| novelty-stringency | Percentage. | Integer, [0,1] |
| appropriateness-stringency | Percentage. | Integer, [0,1] |
| info-rate | How many neighbors (4 or 8) an evaluator obtains info from per time step. Same for all. | {n4, n8} |
| movement | How evaluators move in the world. | {straightFd1, ahead3, allButBehind7, any8} |

Domain

- 2.9

- According to the systems perspective of creativity, the

domain is an evolving system of information (Csikszentmihalyi 1999).

In CRESY, information refers to patchworks as well as information about

them such as their RGB values and creativity ratings. Ultimately, the

domain is characterized in CRESY by output variables (Table 5). However, the patchwork

information required to characterize the domain with these output

variables is recorded in NetLogo by one invisible agent ("turtle"), for

simplicity called a domain. See Table 4

for these domain state variables and scales.

Table 4: Domain state variables and scales

| Name | Brief description | Value |
| --- | --- | --- |
| evalList | List of domSize nested lists containing all evaluations ever made for particular patchwork. Note 0 = not creative, 1 = creative. | {0,1} |
| cScoreList | List of length domSize containing current creativity scores for patchworks. A patchwork's cScore is set to -1 by default if it has never been evaluated before. Default values are not processed. | Integer, [0,1] |
| justRated | List of number-creators nested lists containing the creativity evaluations evaluator made in current step. | Integer, [0,1] |
| justRatedTable | List of length number-creators*2 containing summed and squared creativity scores from justRated. | = 0 |

Process overview and scheduling

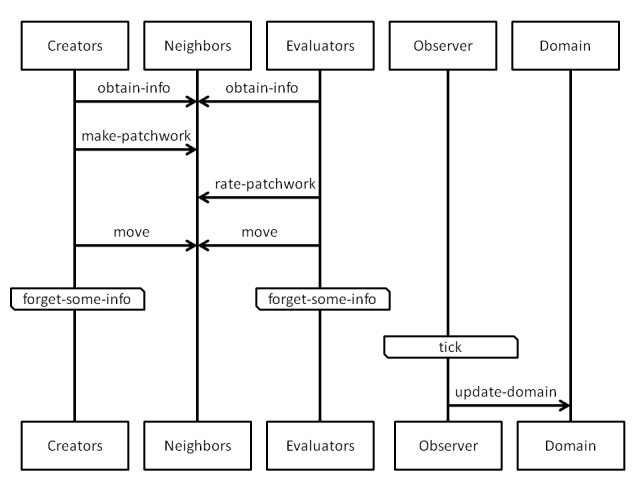

- 2.10

- CRESY consists of seven consecutive processes occurring in

one simulation step: obtain-info, make-patchwork,

rate-patchwork, move, forget-some-info,

tick, update-domain. Their

scheduling is linear, i.e., they run in exactly the order in which they

are listed in the sequence diagram in Figure 5.

The agentsets running the commands in each process do so serially, but

in a random order on the basis of NetLogo's ask

command. State variables are updated asynchronously, and time is

modeled discretely.
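The scheduling just described can be sketched in a few lines of Python. This is an illustration rather than CRESY's NetLogo code: the process names are taken from the text, whereas `run_step`, `agents` and `handlers` are hypothetical stand-ins, and the sketch ignores which agentset (creators, evaluators or the observer) actually runs each process.

```python
import random

# Process order for one simulation step, as listed in the text.
PROCESSES = [
    "obtain-info", "make-patchwork", "rate-patchwork", "move",
    "forget-some-info", "tick", "update-domain",
]


def run_step(agents, handlers, rng):
    """Run one step: processes in fixed order, agents serially in random order.

    `handlers` maps a process name to a function taking one agent
    (hypothetical interface). Processes without a handler are skipped.
    """
    log = []
    for name in PROCESSES:
        handler = handlers.get(name)
        if handler is None:
            continue
        order = list(agents)
        rng.shuffle(order)      # new random order for every process
        for agent in order:     # serial: later agents see earlier agents' updates
            handler(agent)
            log.append((name, agent))
    return log
```

Because agents act one after another and state changes take effect immediately, an agent that acts later within a process already sees the changes made by agents that acted earlier, which is what asynchronous updating means here.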

Figure 5. Sequence diagram of CRESY ("neighbors" are neighboring patches in NetLogo)

Submodels

- 2.11

- This section describes all processes in CRESY.

obtain-info

- 2.12

- In this submodel, all creators and evaluators gather

information about patchworks. They view four or eight neighboring

patches according to their state variable info-rate

(Tables 2–3).

make-patchwork

- 2.13

- Every creator produces a patchwork (RGB color) and "paints"

it on whichever of the eight neighboring patches has the lowest current

creativity evaluation. This form of retention ensures that more favored

patchworks remain in the domain longer ("survival of the fittest

enough"). make-patchwork works differently for each

creator type, but the underlying idea is the same for all: Creators

combine three digits from 0-255 to produce a color. The way a creator

selects these digits depends on its state variables label

and imagination, and is described in the following.

Conventional vs. original strategies

- 2.14

- A creator's label (Cx,

C1, C2, C3)

indicates its "creative type" and therefore how it retrieves individual

digits from its memory lists. Cx is highly likely

to choose digits it has most often obtained before. In everyday terms, Cx's

behavior is conventional, unoriginal or reliable, because it

(re)produces what it "knows best". When C3 makes a

patchwork, it does so in an original or unreliable fashion[3]: All three digits it

selects are highly likely to be ones it has rarely encountered before. C1

and C2 are hybrids of Cx and C3.

C1 selects one of the three digits in the highly

original way C3 does, and the other two in the

conventional way Cx does. C2

creates exactly in the opposite manner: It selects two digits in the

highly original way C3 does, and one in the

conventional way Cx does. See Figure 6 for a visual summary.
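The mapping from creator type to selection strategies can be written down compactly. The following Python sketch records only what the text states, namely how many of the three digits each type selects in the original way. Which color channel receives which strategy is not specified in the text, so drawing the channels at random is an assumption, and `assign_strategies` is a hypothetical name.

```python
import random

# Number of digits (out of three) chosen with the original strategy, per type.
ORIGINAL_DIGITS = {"Cx": 0, "C1": 1, "C2": 2, "C3": 3}


def assign_strategies(label, rng):
    """Return a selection strategy for each of the R, G and B channels.

    Assumption: the channels using the original strategy are drawn at random.
    """
    channels = ["R", "G", "B"]
    original = set(rng.sample(channels, ORIGINAL_DIGITS[label]))
    return {ch: ("original" if ch in original else "conventional")
            for ch in channels}
```

For example, `assign_strategies("C2", random.Random(1))` yields a mapping in which exactly two channels are marked as original and one as conventional.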

Figure 6. Creator types and digit selection strategies

Imagination

- 2.15

- Furthermore, the original selection strategies used by C1,

C2 and C3 are affected by

the state variable imagination. This is a parameter

describing how likely it is these creators will select a digit they

have never encountered before during the process obtain-info

(Figure 5), that is, how likely

a creator type will create a genuinely novel digit. Novelty in this

sense refers to personal and not global novelty[4], although the latter

form can be a consequence of the former depending on the types of

patchworks already in the world.

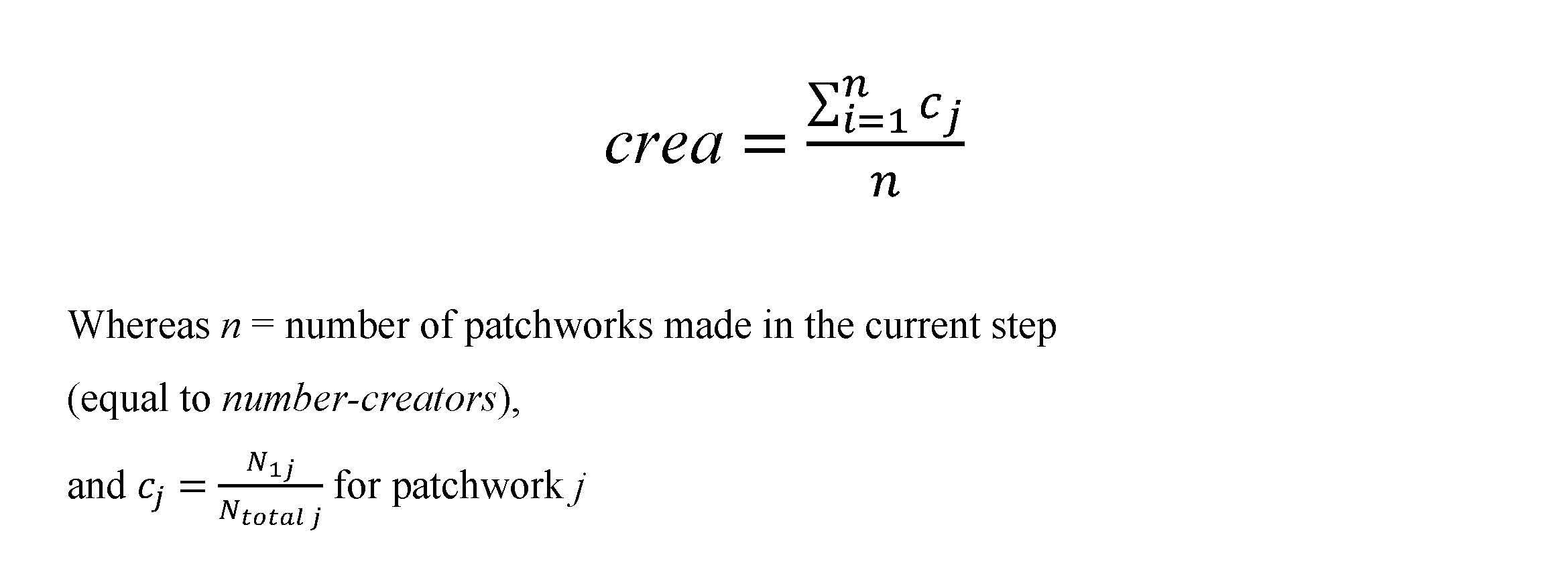

Color value selection

- 2.16

- Each color value selected by a creator to form a patchwork

is formally chosen in the following way: Each memory list (cR,

cG and cB) a creator has,

and therefore color dimension, is treated independently when a

patchwork is made. The first step in selecting a digit (0-255) from a

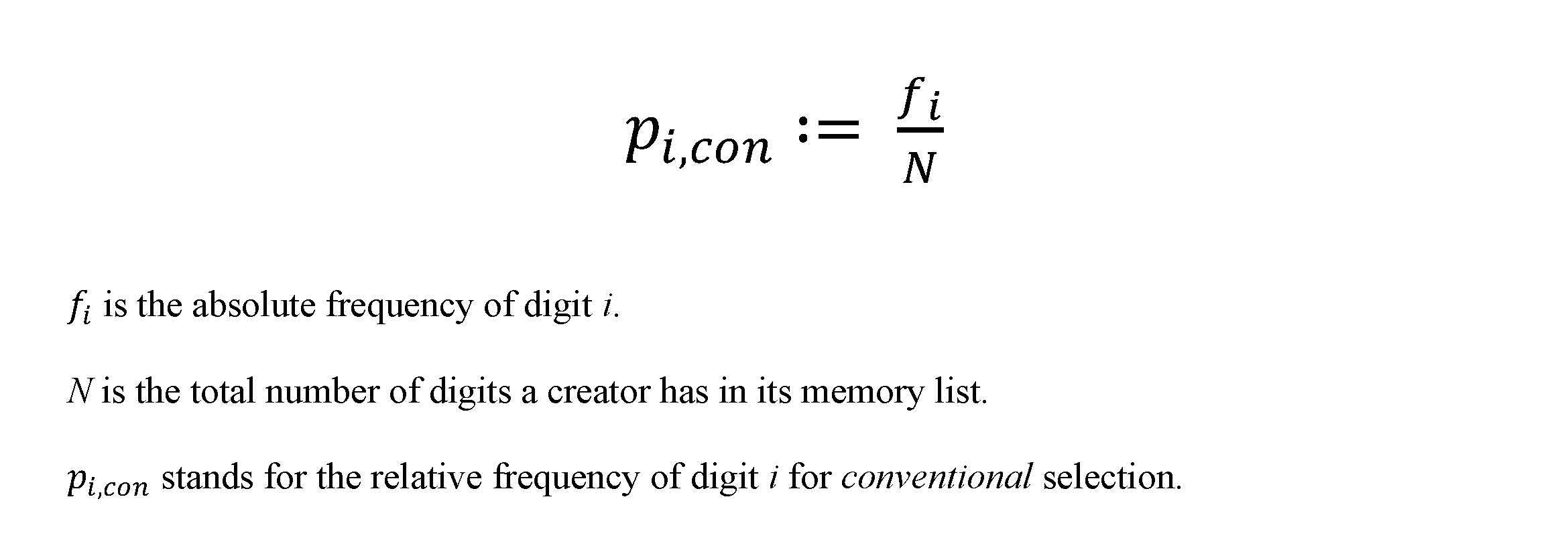

color dimension (red, blue or green) is to calculate the relative

frequencies of all digits in one dimension (memory list). Here is an

example for one color dimension. The relative

frequency for each digit (0-255) of this color dimension is calculated

as stated in Equation 1 (Figure 7).

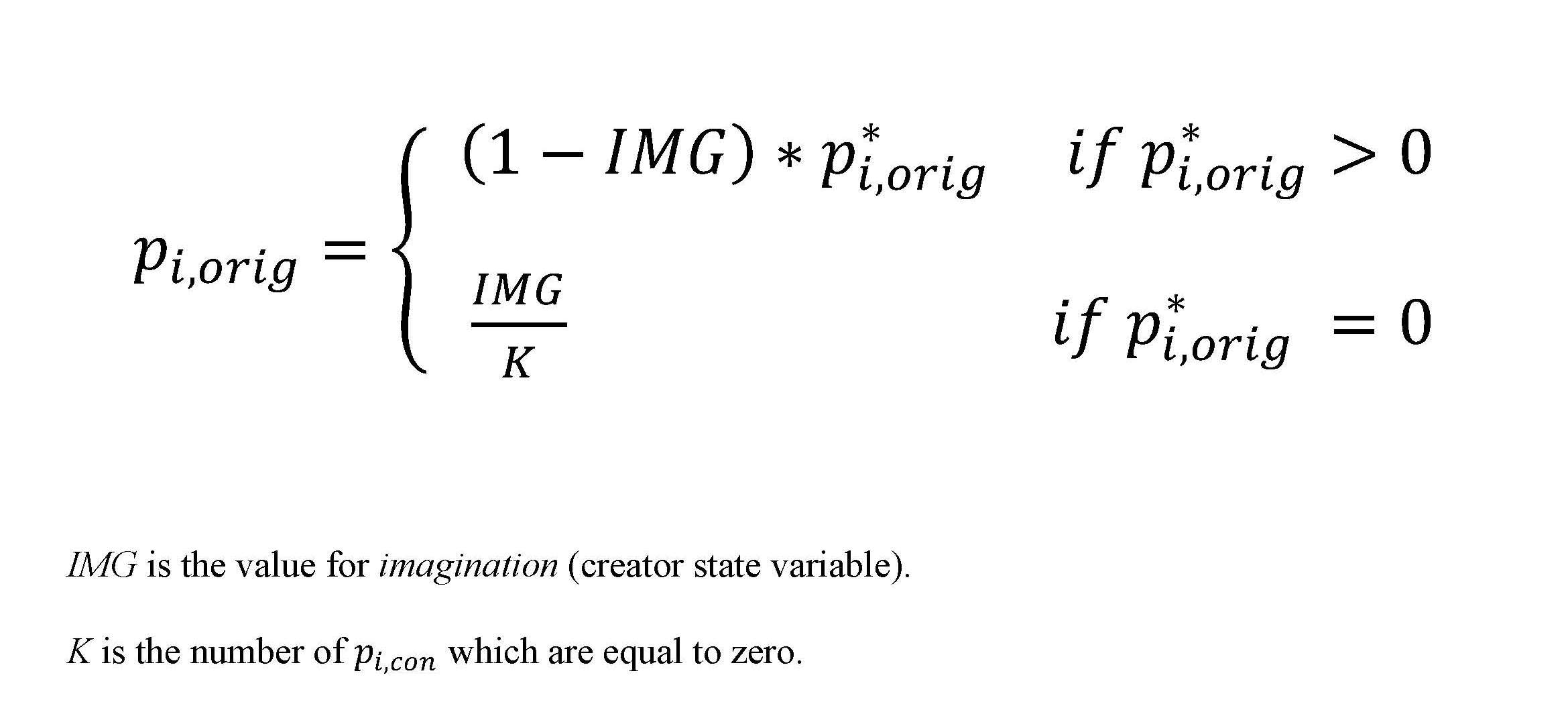

Figure 7. Equation 1 describes the relative frequency for each digit (0-255) of one color dimension (for one specific creator)

- 2.17

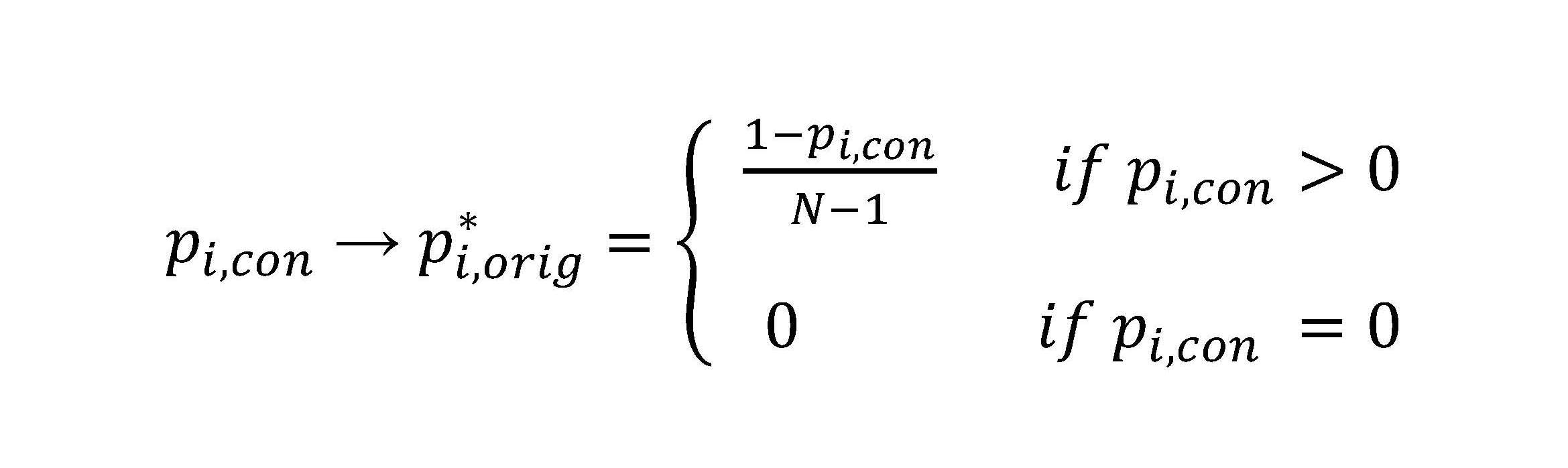

- Relative frequencies calculated as described in Equation 1

(Figure 7) are the basis for

selecting a digit with the conventional selection strategy. In

implementing the original selection strategy, these frequencies are

transformed twice. In the first transformation, they are inverted and

normalized as shown in Equation 2 (Figure 8).

Figure 8. Equation 2 describes how the relative frequencies for all digits (0-255) of one color dimension (for one specific creator) are transformed when the original selection strategy is used

- 2.18

- Equation 2 (Figure 8)

qualitatively means that a creator using the original ($p^{*}_{i,\mathrm{orig}}$)

strategy will most likely select those values it has seldom seen before

compared with those it has. However, this transformation does not

ensure that a creator will produce a color value it has never seen

before. This is what the second transformation does. It incorporates

the state variable imagination (IMG)

as described in Equation 3 (Figure 9).

Figure 9. Equation 3 describes how the variable imagination is incorporated in the original selection strategy

- 2.19

- IMG (imagination, Equation 3 in Figure 9) is the probability for a creator

to choose a digit it has never seen before. This probability is

assigned with equal weight to the digits which have zero frequencies in

a creator's respective memory list. Before a digit is chosen for a

patchwork, the frequencies of all digits of the respective color

dimension (memory list) are either calculated as relative frequencies

(Equation 1 in Figure 7;

conventional selection strategy) or calculated according to Equation 2

in Figure 8 (original selection

strategy). In both cases, digit selection is finally realized by

cumulative distribution sampling (Figure 10).

The entire process of calculating frequencies or probabilities and

cumulative distribution sampling (Figures 7–10) occurs three times every time a

creator makes a patchwork, namely once for each color dimension R, G

and B.
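The selection of one digit from one memory list can be sketched in Python as follows. Only two parts follow the text directly: the relative frequencies of Equation 1 and the cumulative distribution sampling. Equations 2 and 3 are available only as figures, so `original_probs` is an assumed stand-in: it weights seen digits by the inverse of their frequency, scales them to a total of 1 - IMG, and spreads IMG equally over the digits with zero frequency. The uniform fallback for an empty memory is likewise an assumption, and all function names are hypothetical.

```python
import random
from itertools import accumulate


def conventional_probs(freqs):
    """Equation 1 as described: relative frequency of each digit."""
    total = sum(freqs)
    if total == 0:
        # Assumption: uniform choice while the memory is still empty.
        return [1.0 / len(freqs)] * len(freqs)
    return [f / total for f in freqs]


def original_probs(freqs, img):
    """Assumed stand-in for Equations 2 and 3 (exact forms not given in text)."""
    seen = [i for i, f in enumerate(freqs) if f > 0]
    unseen = [i for i, f in enumerate(freqs) if f == 0]
    seen_mass = (1.0 - img) if unseen else 1.0
    if not seen:
        seen_mass = 0.0
    unseen_mass = 1.0 - seen_mass
    probs = [0.0] * len(freqs)
    if seen:
        inverse = {i: 1.0 / freqs[i] for i in seen}   # rarer digits weigh more
        norm = sum(inverse.values())
        for i in seen:
            probs[i] = seen_mass * inverse[i] / norm
    for i in unseen:
        probs[i] = unseen_mass / len(unseen)          # equal weight, as in the text
    return probs


def sample_digit(probs, rng):
    """Cumulative distribution sampling: first digit whose cumulative
    probability exceeds a uniform random number."""
    u = rng.random()
    for digit, cumulative in enumerate(accumulate(probs)):
        if u < cumulative:
            return digit
    # Guard against floating-point rounding in the final cumulative value.
    return max(i for i, p in enumerate(probs) if p > 0)
```

A creator would run this procedure three times per patchwork, once for each of its memory lists cR, cG and cB, using `conventional_probs` or `original_probs` depending on the strategy assigned to that dimension.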

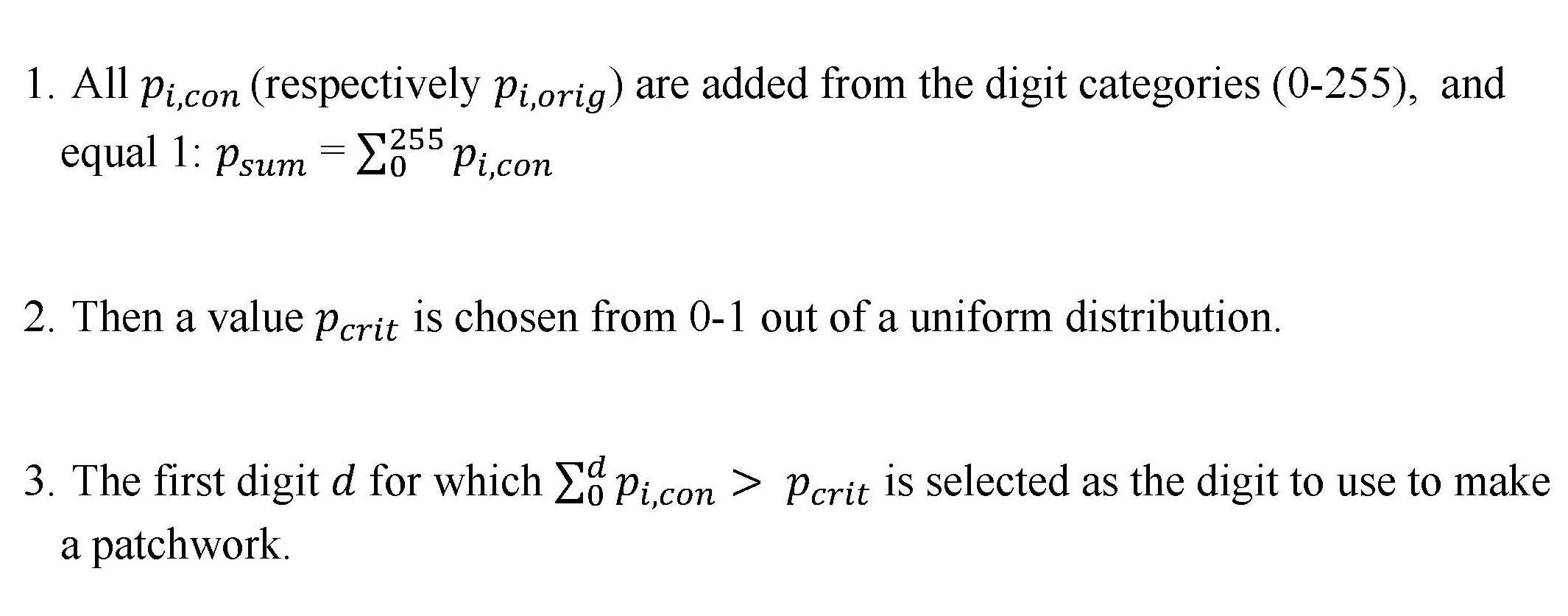

Figure 10. Cumulative distribution sampling in the submodel make-patchwork

rate-patchwork

- 2.20

- Evaluators rate patchworks according to perceived novelty

and/or appropriateness. They can be one of three types: En

(judges only novelty), Ea (judges only

appropriateness) or Ena (judges both criteria). An

evaluator's judgment results in one dichotomous creativity score (cScore)

for a particular patchwork: 0 = not

creative, 1 = creative.

Evaluators judging patchworks based on their perceived novelty do so

according to the variable threshold novelty-stringency,

and those judging appropriateness are influenced by the variable

threshold appropriateness-stringency. Both

thresholds are set in NetLogo globally. The pseudo code in Figure 11 describes how the different

evaluator types make their judgments. Evaluator type Ena

must judge a patchwork's novelty as given (1) and its appropriateness

as given (1) in order to rate the entire patchwork as creative (1).
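As a rough illustration of the rule just described, the following Python sketch combines the two binary judgments into a cScore for each evaluator type. The function name and signature are hypothetical and are not taken from CRESY's source code.

```python
def c_score(evaluator_type, novel=0, appropriate=0):
    """Return the dichotomous creativity score (0 = not creative, 1 = creative).

    evaluator_type: "En" (novelty only), "Ea" (appropriateness only)
                    or "Ena" (both criteria must be met).
    novel, appropriate: the evaluator's binary judgments (0 or 1).
    """
    if evaluator_type == "En":
        return int(novel == 1)
    if evaluator_type == "Ea":
        return int(appropriate == 1)
    if evaluator_type == "Ena":
        return int(novel == 1 and appropriate == 1)
    raise ValueError(f"unknown evaluator type: {evaluator_type}")
```

For example, a patchwork judged novel but not appropriate is creative for an En evaluator and not creative for Ea and Ena evaluators.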

Figure 11. Pseudo code for submodel rate-patchwork (Grey text denotes comments, black text commands, blue text variables and their values)

Assessing novelty

- 2.21

- The variable novelty-stringency ranges

from 0-1 and represents a threshold according to which an evaluator

decides whether a patchwork under current evaluation is novel or not. The

patchwork's (p) relative frequency (rfpe)

is calculated from an evaluator's (e) memory, and it

represents the absolute number of times evaluator e

has seen patchwork p normalized by the total number

of patchworks it has seen before. If rfpe

is less than or equal to novelty-stringency, the

patchwork is considered novel (n = 1),

otherwise it is not (n = 0;

Figure 11). Novelty assessment

depends on an evaluator's memory; two evaluators may disagree about the

same patchwork's novelty depending on what they have seen before.
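The novelty rule can be sketched in Python as below. The names are hypothetical, the evaluator's memory is represented as a `Counter` of patchwork colors (an illustrative choice), and treating an empty memory as a relative frequency of zero is an assumption.

```python
from collections import Counter

def is_novel(patchwork, memory, novelty_stringency):
    """Return n = 1 if the patchwork's relative frequency in the evaluator's
    memory is less than or equal to novelty-stringency, otherwise n = 0."""
    total = sum(memory.values())
    # Assumption: an evaluator with an empty memory has seen nothing before.
    rf = memory[patchwork] / total if total else 0.0
    return 1 if rf <= novelty_stringency else 0

# Two evaluators with different memories judge the same patchwork.
red = (255, 0, 0)
blue = (0, 0, 255)
evaluator_a = Counter({red: 3, blue: 1})   # has seen red often
evaluator_b = Counter({red: 1, blue: 7})   # has seen red rarely
```

With a novelty-stringency of 0.25, evaluator_a rates the red patchwork as not novel (relative frequency 0.75), whereas evaluator_b rates it as novel (relative frequency 0.125).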

Assessing appropriateness

- 2.22

- All patchworks have a variable called hue,

which indicates whether their color is warm or cool[5]. A patchwork is

considered appropriate when its hue agrees with the hues of its

neighbors. An evaluator about to judge a patchwork's appropriateness

first assesses the latter's neighbors. To simulate the variability with

which humans perceive their environment, an evaluator only views the

hues of six randomly selected neighbors[6],

and calculates, among them, the relative frequency of the hue the patchwork under

evaluation has. The relative frequency is then compared with the

variable appropriateness-stringency, which ranges

from 0-1 and represents a threshold according to which an evaluator

decides whether a patchwork under current evaluation is appropriate or

not. If the hue's relative frequency >= appropriateness-stringency,

the patchwork is considered appropriate (a = 1),

otherwise not (a = 0; Figure 11).
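A minimal Python sketch of this judgment follows. The function names are hypothetical; the hue classification follows the 135°-314° range given in note 5 (values between 314° and 315° are treated as cool here, which is an assumption), and returning 0 when no neighbors can be viewed is also an assumption.

```python
import colorsys
import random

def hue_category(r, g, b):
    """Classify an RGB color (0-255 per channel) as 'cool' or 'warm'."""
    h, _, _ = colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)
    degrees = h * 360
    return "cool" if 135 <= degrees < 315 else "warm"

def is_appropriate(hue, neighbor_hues, appropriateness_stringency,
                   sample_size=6, rng=random):
    """Return a = 1 if the share of viewed neighbors with the same hue is
    greater than or equal to appropriateness-stringency, otherwise a = 0."""
    viewed = rng.sample(neighbor_hues, min(sample_size, len(neighbor_hues)))
    if not viewed:
        return 0  # assumption: nothing to compare with
    rf = viewed.count(hue) / len(viewed)
    return 1 if rf >= appropriateness_stringency else 0
```

Because only six of the neighbors are drawn at random, two evaluators looking at the same patchwork can reach different appropriateness judgments whenever the neighborhood contains both hues.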

move

- 2.23

- Each creator and evaluator has a heading, a built-in

variable indicating the direction they are facing. Their movement

occurs relative to this parameter. Only one creator per patch is

allowed. If all patches assessed in this process are full, creators

remain on the patches they are currently standing on. Different

movement strategies are available for creators and evaluators (Tables 2–3),

and are set globally.

forget-some-info

- 2.24

- A total of three pieces of information are deleted from

creators' memory lists every time step. In each list, one color

dimension value with a frequency larger than zero is randomly chosen,

and then one is subtracted from this value's frequency counter. Color

values are randomly selected per creator memory list, not per creator

or total group of creators. Therefore, the loss of information due to

forgetting is not systematic. In this submodel, creators' state

variables cR, cG, and cB

(Table 2) are updated.

Analogous to the way creators forget, evaluators also forget three

randomly selected pieces of information which are deleted from their

memory eMem (Table 3),

which is updated in this procedure.
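For creators, the forgetting step can be sketched in Python as follows. The names are hypothetical, the memory is modelled as one frequency list per color dimension, and drawing uniformly among the values with a frequency larger than zero is an assumption (the description does not say whether the draw is weighted by frequency).

```python
import random

def forget_one(freqs, rng=random):
    """Subtract one from the frequency counter of one randomly chosen
    value whose frequency is larger than zero (in place)."""
    seen = [value for value, f in enumerate(freqs) if f > 0]
    if seen:
        freqs[rng.choice(seen)] -= 1

def forget_some_info(creator_memory, rng=random):
    """Delete one piece of information from each of the three memory lists,
    i.e. three pieces of information per creator and time step."""
    for dim in ("cR", "cG", "cB"):
        forget_one(creator_memory[dim], rng)
```

Each list is handled independently, so the values forgotten in one color dimension are unrelated to those forgotten in the other two.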

tick

- 2.25

- In this process, NetLogo's built-in time counter advances.

Time is modelled in

discrete steps.

update-domain

- 2.26

- During this procedure all output variables and the interim

variables used for their calculation are updated. Additionally,

patchworks' cScores (see Table 1)

are updated to reflect the evaluation status of the domain.

Independent variables

- 2.27

- The independent variables are based on each entity in

CRESY: Creators, evaluators and the domain (Figure 2

and Table 5).

Creators (cRatio)

- 2.28

- The ratio of creator types (Cx:C1:C2:C3) is varied to

reflect different levels of a sample's potential to produce diversity

(Table 5).

Evaluators (evalStrategy)

- 2.29

- novelty-stringency (n) and appropriateness-stringency

(a) are varied to reflect different evaluation strategies.

Five strategies were designated in CRESY: nov

(only based on perceived novelty), app

(only based on perceived appropriateness), cla

(classic, i.e., based on both novelty and

appropriateness), equ (novelty

and appropriateness do not matter to evaluators), mix

(based on novelty only, appropriateness only or on both

in the classic sense. This mixture of strategies is equally distributed

among evaluators; Table 5).

Domain (predefine)

- 2.30

- CRESY's interface sliders predefine-r, predefine-g

and predefine-b can be set to any number from 1-256

to predefine how diverse (colorful) the world is at the start of a

simulation run (Table 5).

Dependent variables


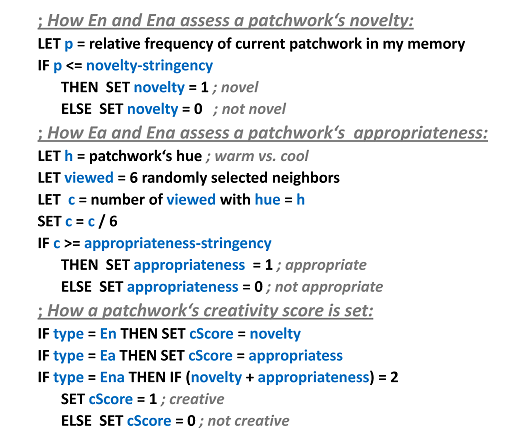

Creativity (crea)

- 2.31

- After rating a patchwork's novelty and/or appropriateness,

evaluators ultimately judge its creativity by giving it a binary score (cScore;

Table 1) of 0 (not creative)

or 1 (creative). Each evaluator rates every patchwork made in the

current step. So number-creators*number-evaluators

creativity scores result every step, because each creator makes only

one patchwork and each evaluator rates every one. Subsequently, a total

creativity assessment for all patchworks made in the current step is

calculated and expressed in the variable crea. For

each patchwork j currently evaluated, its number of

corresponding creative evaluations (1s; N1j)

is set in relation to its total number of evaluations (Ntotal j,

equal to number-evaluators).

This ratio is the single patchwork's current creativity score (cj).

These interim creativity scores are then averaged to obtain the

patchworks' mean creativity in the current step (Equation 4 in Figure 12). crea is

therefore a percentage indicating how creative evaluators find

creators' current work on average.
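The calculation described in this paragraph amounts to averaging, over all patchworks of the current step, the share of evaluators who rated each patchwork as creative. A minimal Python sketch, with a hypothetical function name and data layout:

```python
def crea(ratings):
    """Mean creativity of the current step's patchworks.

    ratings[j] holds the binary cScores (0 or 1) that all evaluators
    gave to patchwork j in the current step.
    """
    # c_j: number of creative ratings divided by the number of ratings
    scores = [sum(item) / len(item) for item in ratings]
    return sum(scores) / len(scores)

# Two patchworks rated by four evaluators each.
example = [[1, 1, 0, 0],   # c_1 = 0.5
           [1, 1, 1, 1]]   # c_2 = 1.0
```

In this example `crea(example)` equals 0.75, meaning that evaluators find the current patchworks creative in 75% of their judgments on average.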

Figure 12. Equation 4 describes how the variable crea (creativity) is calculated

Reliability (rel)

- 2.32

- The interrater reliability of patchwork ratings is measured

with Fleiss' kappa for binary data (Fleiss

1971; Bortz et al. 2008,

454–458), and ranges from 0-1. Calculating the reliability of

independent judgments is an essential part of creativity assessment in

psychology (Amabile 1982,

1996; Kaufman et al. 2008; Silvia 2008). The measure

indicates evaluators' level of agreement regarding patchworks' creative

value, and it must be sufficiently high in order to ascertain

creativity has been measured at all.
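For orientation, Fleiss' kappa for binary ratings can be computed as in the Python sketch below. It follows the standard definition of the coefficient and is not taken from CRESY's code; the function name, the data layout and the handling of the undefined case (all ratings identical) are assumptions.

```python
def fleiss_kappa_binary(ratings):
    """Fleiss' kappa for binary ratings.

    ratings[j] holds the 0/1 ratings of all raters for item j;
    every item must be rated by the same number of raters (at least two).
    """
    n_items = len(ratings)
    n_raters = len(ratings[0])
    pairs = n_raters * (n_raters - 1)
    agreement = 0.0
    ones_total = 0
    for item in ratings:
        ones = sum(item)
        zeros = n_raters - ones
        ones_total += ones
        # share of agreeing rater pairs for this item
        agreement += (ones * (ones - 1) + zeros * (zeros - 1)) / pairs
    p_observed = agreement / n_items
    p_one = ones_total / (n_items * n_raters)
    p_expected = p_one ** 2 + (1 - p_one) ** 2
    if p_expected == 1.0:
        # Every rating is identical: kappa is undefined (0 / 0).
        return float("nan")
    return (p_observed - p_expected) / (1 - p_expected)
```

Note that the coefficient is undefined when all evaluators give the same rating to every patchwork; how such steps are treated in the reported analysis is not specified here.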

- 2.33

- Strictly, high interrater reliability only indicates judges

agree on something, that is, the ratings share more variance than by

chance. However, in creativity research in psychology, high interrater

reliability is assumed to reflect that the construct of creativity has

been assessed in a face valid way as long as judges are considered to

be legitimate (e.g., all possess relevant expertise in the

artifact domain). As Kaufman et al. (2008)

note, the "judges'

expertise provides a measure of face validity – it makes sense that

experts in a domain could accurately assess performance in that domain"

(p. 175).

Table 5: Independent and dependent variables

| Entity | Variable name | Scale |
| --- | --- | --- |
| Independent variables | | |
| Domain (retention) | predefine (p) | High: 256; Low: 1 |
| Creators (variation) | cRatio (c) | High: (1:0:0:3); Low: (3:0:0:1) |
| Evaluators (selection) | evalStrategy (e) | nov: n = .25; a = .00 |
| | | app: n = 1.0; a = .75 |
| | | cla: n = .25; a = .75 |
| | | equ: n = .50; a = .50 |
| | | mix: n = .25; a = .75 |
| Dependent variables | | |
| Current patchworks | crea (creativity) | 0-1 |
| Evaluators' ratings (of current patchworks) | rel (reliability) | 0-1 |

Experimentation and results


Research questions

- 3.1

- To exemplify theory-based exploration with CRESY, the

experiment reported here was conducted to answer the following

questions: a) How do three independent variables representing creators (variation),

evaluators (selection) and the domain (retention)

affect the dependent variable creativity? b) Which hypotheses about

creativity from a systems perspective, modelled as the interplay

between variation, selection and retention processes, can be generated

from the simulated data?

Experimental design

- 3.2

- The independent and dependent variables summarized in Table

5 were used in this

experiment. Fully crossing the independent variables yields a 2 × 2

× 5 design. The resulting 20 experimental conditions are summarized in

Table 6.
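The 20 conditions can be enumerated programmatically, as in the following Python sketch. The variable names are illustrative; the factor levels and threshold values are those listed in Table 6, and the numbering assumes that predefine varies slowest and evalStrategy fastest, as in that table.

```python
from itertools import product

PREDEFINE = (1, 256)                      # domain (retention)
C_RATIO = ("3:0:0:1", "1:0:0:3")          # creators (variation)
EVAL_STRATEGY = {                         # evaluators (selection)
    # strategy: (novelty-stringency, appropriateness-stringency)
    "nov": (0.25, 0.00),
    "app": (1.00, 0.75),
    "cla": (0.25, 0.75),
    "equ": (0.50, 0.50),
    "mix": (0.25, 0.75),
}

conditions = [
    {
        "condition": number,
        "predefine": p,
        "cRatio": c,
        "evalStrategy": e,
        "novelty_stringency": EVAL_STRATEGY[e][0],
        "appropriateness_stringency": EVAL_STRATEGY[e][1],
    }
    for number, (p, c, e) in enumerate(
        product(PREDEFINE, C_RATIO, EVAL_STRATEGY), start=1
    )
]
```

Crossing 2 × 2 × 5 levels yields 20 entries; condition 13, for instance, combines predefine = 256, cRatio = 3:0:0:1 and the classic strategy.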

- 3.3

- The following variables were held constant: In each run,

four creators and 15 evaluators were used in a 21×21 torus (density).

The info-rate was set to n8,

meaning creators and evaluators collected information about patchworks

from eight neighboring patches every step. Both agent types moved in

the world based on the any8 strategy, meaning they

could move to any of the eight neighboring patches as long as they were

empty. Creators' imagination was set to 0.05, and

evaluators' state variable domSize was set to 512. novelty-stringency

was set to 0.25 and appropriateness-stringency to

0.75 when evaluators used the classic strategy

(Table 5). These values are

an operationalization of the scientific convention that creative work

must be sufficiently novel and appropriate. A total of 30 runs with

6000 steps each were collected per experimental condition[7].

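Table 6: Experimental design

| Experimental condition | Domain: predefine-r/-g/-b (p) | Creators: cRatio (c) | Evaluators: evalStrategy (e) | novelty-stringency | appropriateness-stringency |
| --- | --- | --- | --- | --- | --- |
| 01 | 1 | 3:0:0:1 | nov | .25 | .00 |
| 02 | 1 | 3:0:0:1 | app | 1.0 | .75 |
| 03 | 1 | 3:0:0:1 | cla | .25 | .75 |
| 04 | 1 | 3:0:0:1 | equ | .50 | .50 |
| 05 | 1 | 3:0:0:1 | mix | .25 | .75 |
| 06 | 1 | 1:0:0:3 | nov | .25 | .00 |
| 07 | 1 | 1:0:0:3 | app | 1.0 | .75 |
| 08 | 1 | 1:0:0:3 | cla | .25 | .75 |
| 09 | 1 | 1:0:0:3 | equ | .50 | .50 |
| 10 | 1 | 1:0:0:3 | mix | .25 | .75 |
| 11 | 256 | 3:0:0:1 | nov | .25 | .00 |
| 12 | 256 | 3:0:0:1 | app | 1.0 | .75 |
| 13 | 256 | 3:0:0:1 | cla | .25 | .75 |
| 14 | 256 | 3:0:0:1 | equ | .50 | .50 |
| 15 | 256 | 3:0:0:1 | mix | .25 | .75 |
| 16 | 256 | 1:0:0:3 | nov | .25 | .00 |
| 17 | 256 | 1:0:0:3 | app | 1.0 | .75 |
| 18 | 256 | 1:0:0:3 | cla | .25 | .75 |
| 19 | 256 | 1:0:0:3 | equ | .50 | .50 |
| 20 | 256 | 1:0:0:3 | mix | .25 | .75 |

Results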

- 3.4

- The experiment was analyzed with R (Version 2.13.0). The

data were analyzed in the following steps: a) reliability calculation,

b) graphical diagnostics, c) dependent variable aggregation, d)

analyses of variances.

Reliability

- 3.5

- Almost perfect reliability was reached in nearly every

condition (M = .94-.99). The only exceptions are

conditions 5, 10, 15 and 20 in which the mean reliability is moderate (M

= .45-.67; Landis & Koch

1977). These conditions share a common factor: All have evalStrategy

set to mix, meaning evaluators are not using the

same strategy to judge patchworks. predefine and cRatio

are completely varied among these four conditions (see Table 6). This result is expected, as

evaluators' judgments are based on different thresholds. The fact that

evaluators agree so well in all other conditions indicates face and

construct validity regarding the dependent variable creativity (crea;

Amabile 1982; Kaufman et al. 2008)[8].

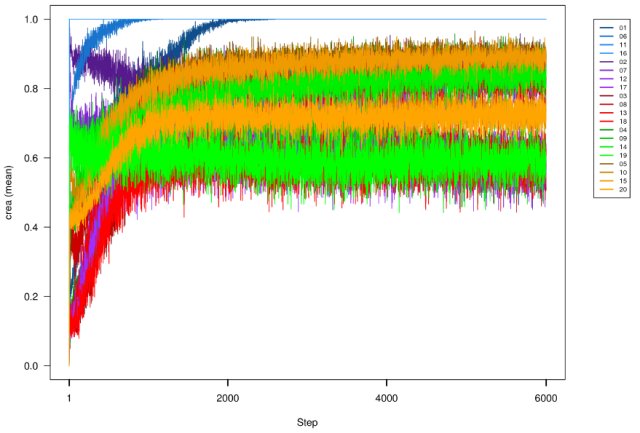

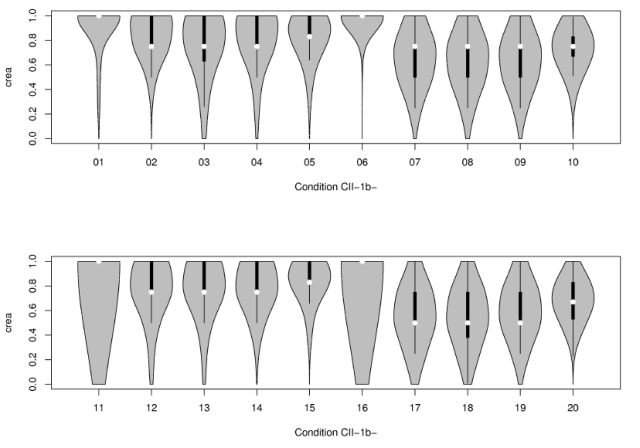

Graphical diagnostics

- 3.6

- Line and violin plots (Hintze

& Nelson 1998) were made to visually assess patchwork

creativity (crea). Line plots depict the average

run, violin plots the entire data per experimental condition. The line

plots in Figure 13 indicate

the following: Experimental conditions 01, 06, 11 and 16 exhibit

average creativity levels of mcrea

= 1.00. All other experimental conditions exhibit average creativity

levels varying from approximately mcrea

= 0.5 – 0.8. The violin plots in Figure 14

show this difference more explicitly[9]:

Conditions 01, 06, 11 and 16 have the highest medians for creativity

(roughly mdcrea = 1.00) as

well as minute interquartile ranges. All other conditions exhibit

medians varying from approximately mdcrea

= 0.4 – 0.8, as well as comparably larger interquartile ranges.

Conditions 01, 06, 11 and 16 have a common independent variable value (evalStrategy

= nov; Table 6).

The difference between these four conditions and all others means

patchwork creativity is, on average, rated the highest in experimental

conditions in which only patchwork novelty is assessed (01, 06, 11,

16).

Figure 13. Average run per experimental condition for crea (patchwork creativity)

Figure 14. Violin plots showing crea (patchwork creativity) for each experimental condition

Dependent variable aggregation

- 3.7

- The dependent variable crea (patchwork

creativity) was measured in every step (6000) of every run (30) in each

experimental condition (20). Two measures, the mode and the coefficient

of variation (CV), were calculated per run to describe these time

series. The former is an indicator of a run's average location, the

latter an indicator of a run's variability. This aggregation allows the

comparison of experimental conditions based on centrality and stability

of the dependent variable creativity (crea) in

subsequent analyses of variances.
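The per-run aggregation can be sketched in Python as follows. The function name is hypothetical, and two details are assumptions rather than facts from the analysis: the CV is computed with the sample standard deviation and expressed in percent, and ties for the mode are resolved in favor of the value encountered first.

```python
import statistics

def summarize_run(crea_series):
    """Aggregate one run's crea time series into (mode, CV in percent)."""
    mode = statistics.mode(crea_series)       # most frequent crea value
    mean = statistics.fmean(crea_series)
    if mean == 0:
        return mode, float("nan")             # CV is undefined for a zero mean
    cv = statistics.stdev(crea_series) / mean * 100
    return mode, cv
```

A run in which crea is constant has a CV of zero, while a run that fluctuates around the same typical value keeps its mode but obtains a larger CV.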

Analyses of variances

- 3.8

- The modes and CVs calculated for crea

were separately tested in three-way independent analyses of variances

with the factors cRatio (c, variation), evalStrategy

(e, selection) and predefine (p,

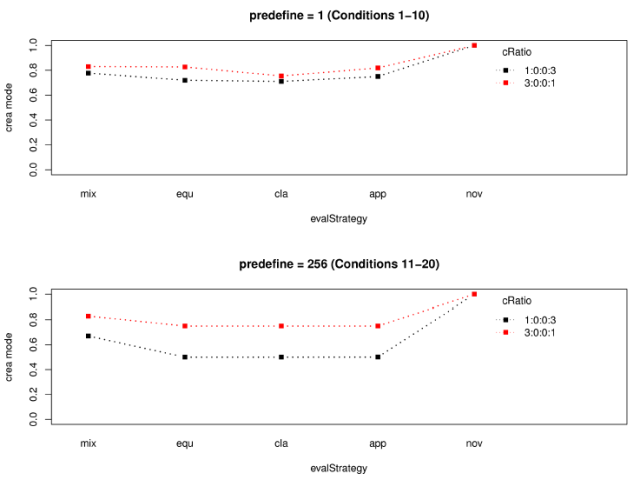

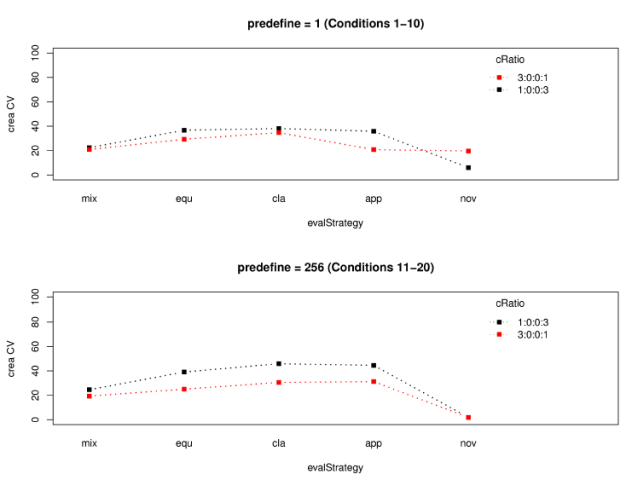

retention). The results are summarized in Tables 7–8. Figures 15–16 display the interaction plots.

The effect size generalized omega squared (Olejnik

& Algina 2003) was additionally calculated for each

effect. The following was done to create a frame of reference to select

effects for interpretation: Firstly, all violin and interaction plots

were compared, and based on inspection certain effects were preselected

for interpretation. Secondly, all effect sizes were ranked (Tables 7–8).

Thirdly, the effects preselected based on the plots were compared with

the effect size ranks. The preselected effects indicated that only

effects with a value ≥ 0.82 for generalized omega squared would be

considered meaningful enough to interpret as making a notable

difference. These effects happen to be those ranked first in the

ANOVAs. The only exception is the second-order interaction p

× c × e in the ANOVA for crea modes,

although the effect sizes for the individual factors are comparably

higher (Table 7). This

procedure permits an overview of the kind of effect sizes reachable

given the type of simulation data at hand, something which externally

set thresholds do not account for. It also constitutes an internal,

pragmatic threshold to detect differences considered meaningful or

large enough to render interpretation for theory-based exploration

worthwhile.
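As a plausibility check of the effect sizes, the following Python sketch recomputes them from the F values. It rests on two assumptions: that all three factors count as manipulated factors, in which case generalized omega squared coincides with partial omega squared, and that the total number of observations is N = 600 (20 conditions × 30 runs). The function name is hypothetical, and truncating negative estimates at zero is an illustrative convention.

```python
def omega_squared_from_f(f_value, df_effect, n_total):
    """Partial omega squared computed from an F statistic:
    df_effect * (F - 1) / (df_effect * (F - 1) + N)."""
    numerator = df_effect * (f_value - 1.0)
    estimate = numerator / (numerator + n_total)
    return max(0.0, estimate)  # negative estimates are reported as zero

N_TOTAL = 20 * 30  # conditions x runs

# F values and degrees of freedom as reported for crea modes (Table 7)
table_7 = {
    "predefine (p)": (683.81, 1),
    "cRatio (c)": (1089.25, 1),
    "evalStrategy (e)": (1101.27, 4),
    "p x c x e": (25.33, 4),
}
effect_sizes = {
    source: round(omega_squared_from_f(f, df, N_TOTAL), 2)
    for source, (f, df) in table_7.items()
}
```

Under these assumptions the sketch yields 0.53, 0.64, 0.88 and 0.14 for the four effects, matching the values listed in Table 7.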

Table 7: Three-way independent analysis of variance for crea modes

| Source | df | F | p | Generalized omega² |
| --- | --- | --- | --- | --- |
| predefine (p) | 1 | 683.81 | < .0001 | 0.53 (3)² |
| cRatio (c) | 1 | 1089.25 | < .0001 | 0.64 (2) |
| evalStrategy (e) | 4 | 1101.27 | < .0001 | 0.88 (1) |
| p × c | 1 | 317.04 | < .0001 | 0.35 (4) |
| p × e | 4 | 69.57 | < .0001 | 0.31 (6) |
| c × e | 4 | 78.87 | < .0001 | 0.34 (5) |
| p × c × e | 4 | 25.33 | < .0001 | 0.14 (7) |
| Residuals | 580 | (0.00192)¹ | | |

¹ The value in parentheses is the mean square error.

² The numbers in parentheses are the ranks of the effect sizes.

Figure 15. First-order interaction plots for crea modes

Table 8: Three-way independent analysis of variance for crea CVs

| Source | df | F | p | Generalized omega² |
| --- | --- | --- | --- | --- |
| predefine (p) | 1 | 0.70 | 0.79 | 0.00 (7)² |
| cRatio (c) | 1 | 591.60 | < .0001 | 0.50 (4) |
| evalStrategy (e) | 4 | 1826.33 | < .0001 | 0.92 (1) |
| p × c | 1 | 182.87 | < .0001 | 0.23 (5) |
| p × e | 4 | 168.76 | < .0001 | 0.53 (3) |
| c × e | 4 | 211.86 | < .0001 | 0.56 (2) |
| p × c × e | 4 | 30.43 | < .0001 | 0.16 (6) |
| Residuals | 580 | (9.6)¹ | | |

¹ The value in parentheses is the mean square error.

² The numbers in parentheses are the ranks of the effect sizes.

Figure 16. First-order interaction plots for crea CVs (coefficients of variation)

Creativity (crea) modes

- 3.9

- The following effects are considered meaningful based on

the interaction plots (Figure 15)

and the effect sizes (Table 7).

Main effect e (generalized

omega squared = 0.88): The common level of creativity (crea

mode) is notably higher in conditions in which only patchwork

novelty (e = "nov") is judged. It is equal to one in

those conditions. Average creativity does not notably differ between

conditions with the other evaluation strategies. This coincides with

the information depicted in Figures 13–14. Second-order interaction p

× c × e

(generalized omega squared = 0.14); main effect c

(generalized omega squared = 0.64); main effect p

(generalized omega squared = 0.53): Average creativity is notably lower

in conditions in which diversity is most expected (c

= 1:0:0:3 and p = 256). Otherwise, it is worth

remarking that a) the two sample types do not noticeably differ and b)

patchworks made by the least original sample type (c

= 3:0:0:1) are generally considered slightly more creative. Apart from

the interaction between p = 256 and c

= 1:0:0:3, the different types of domains do not notably influence

creativity levels.

Creativity (crea) coefficients of variation (CVs)

- 3.10

- The following effect is considered meaningful based on the interaction plots (Figure 16) and the effect sizes (Table 8). Main effect e (generalized omega squared = 0.92): The instability of creativity judgments (crea CVs) is noticeably lower in conditions in which only patchwork novelty (e = "nov") is evaluated. Otherwise, the instability is similar across the remaining conditions. This coincides with the information depicted in the violin plots in Figure 14.

Discussion


Discussion of the results

- 4.1

- High reliability levels indicate creativity was validly

measured in terms of face and construct validity (Amabile 1982; Kaufman et al. 2008). The

evaluators have the greatest effect on average creativity levels.

Neither creators, evaluators nor the domain substantially affect the

stability of these levels over time.

Theoretical implications


The field and the domain render models of individual creativity incomplete

- 4.2

- The experimental results qualitatively indicate contextual

factors systematically affect creativity. Specifically, the following

circumstances apply when the field evaluates appropriateness: In

homogeneous domains, that is, those with little artifact diversity,

creators cannot be discriminated based on the creativity of their

artifacts. In heterogeneous domains, "conventional" creators surpass

"original" creators regarding the creativity of their artifacts. The

former implies a sufficient amount of informational stimulation or

availability is necessary for individual creativity to be

discriminable. The latter implies that a system designed to facilitate

the most change, that is, consisting of maximum domain heterogeneity

and highly "original" creators, does not lead to maximum creativity

levels when appropriateness is evaluated. In this case, "conventional"

creators fare better by adhering to norms evaluators develop.

- 4.3

- Both cases elaborated above demonstrate the fragility of

models of individual creativity. As illustrated in the Introduction,

creativity research in the domain of psychology is characterized by a

strong focus on stable attributes or behavior exhibited by individual

creators to detect and explain differences in creativity (Dewett & Williams 2007;

Feist 1999; Kahl et al. 2009; Kirton 1976; Kwang et al. 2005; McCrae 1987; Wehner et al. 1991; Zaidel 2014). The creators in

CRESY were designed to reflect this stylized fact. However, these

stable differences do not cohere with respective differences in

creativity when the field and the domain are explicitly modeled and

varied. To understand and explain the process of creativity, therefore,

contextual factors are necessary. Although his ideas have not been

tested empirically, Csikszentmihalyi (1999)

recognized the significance of the field and the

domain in constructing creators. He specifically

addressed the availability of "cultural capital" (Bourdieu 1986), and the ways

significant others support an individual's creativity. This is not an

argument for ignoring creators' agency in contributing to the creative

production process, as he does describe their role in detail, but

rather an appeal for acknowledging the relevance of social factors in

cultivating individual creativity and the need for their assessment.

Moreover, creativity is a condicio sine qua non for innovation (cp. Ahrweiler 2013; Viale 2013). Information (ideas,

concepts, prototypes, etc.) produced in creative processes is a

precursor for innovations (cp. Schulte-Römer

2013). Understanding how the information pool evolves via

socially distributed selection and retention processes is significant

for providing or varying the informational basis of innovations (cp. Barrère 2013).

- 4.4

- Videos 1-2 illustrate how the domain, as one contextual

factor, affects artifact creativity (crea) and

creator discriminability (hX.Y) over time. The

latter measure is known as the mutual information of two discrete

variables (Attneave 1959;

Bischof 1995), and

ranges from 0 – 1. It refers to how well a creator type can be

"predicted" or "guessed" by looking at a randomly selected color. Such

an interpretation is important for discriminating the creator types

from each other, therewith assessing their validity as different and

stable behavioral types. Both Videos 1-2 show one run of 1000 steps.

They have exactly the same settings as conditions 3 and 8 (Video 1; low

domain diversity) and 13 and 18 (Video 2; high domain diversity) in the

experiment reported above (Table 6).

They only differ in the number of creators. Each video shows 6 creators

(3 Cx and 3 C3). Note in both

cases and as time passes, it becomes harder to discriminate creator

types from each other based on the colors they have produced. Their

stable differences do not suffice to make a difference in terms of

creative products.

Video 1. One run of 1000 steps plotting artifact creativity (crea) and creator discriminability (hX.Y). Except for domain diversity (predefine = 1), all settings are the same as in Video 2.

Video 2. One run of 1000 steps plotting artifact creativity (crea) and creator discriminability (hX.Y). Except for domain diversity (predefine = 256), all settings are the same as in Video 1.

The criteria evaluators use affect creativity the most

- 4.5

- The experimental results qualitatively support the

assumption that the field (evaluators) plays a decisive role in

generating artifact creativity (Csikszentmihalyi

1988, 1999).

However, acknowledging their existence in the process does not suffice

to explain how creativity emerges. Their behavior can be differentiated

according to the kind and number of criteria they use. The highest

levels of creativity coincide with evaluators' use of one criterion,

namely novelty. It is based on the relation between

artifacts previously seen and the artifact to be currently judged.

Notably lower creativity levels coincide with evaluators' sole use of

the criterion appropriateness. It is based on the

relation between task restrictions and how well the current artifact

meets them. Moreover, using both criteria to evaluate artifacts

coincides with creativity levels similar to or lower than those

measured when only artifact appropriateness is

judged. Due to the algorithms' design, it is "easier" for an artifact

to be considered novel than appropriate as there are fewer constraints

to attaining that value (Figure 11).

Regardless of whether or not novelty and appropriateness are judged in

this algorithmic way in practice, the implication here is that some

indicators of creativity may be harder to fulfill than others, and this

impacts what passes as creative.

- 4.6

- To a certain degree, the trade-off between novelty and

appropriateness resembles the notion of exploration and exploitation of

knowledge in organizations (March 1991;

see also Page 2011). What

the novelty criterion rewards is the discovery of and experimentation

with new knowledge. In contrast, the appropriateness criterion rewards

the "refinement and extension" (March

1991, p. 85) of existing knowledge. Achieving a balance

between both novelty and appropriateness is something evaluators can

guide by being aware of the quality of information they want the domain

to encompass, the criteria they are using, and how they weight the

latter (Keller & Weibler 2014;

March 1991). The

creator types reflect this duality, too: The "original" creators

explore the knowledge space, while the "conventional" creators exploit

it (cp. Gupta et al. 2006;

Kunz 2011). Which

behavioral strategy is more adaptive (in terms of creativity scores for

patchworks) depends on the evaluation criteria used to select their

work. In more fluid, real-world settings, these criteria are

constructed and therewith malleable (cp. Dexter

& Kozbelt 2013; Salah

& Salah 2013).

Practical implications

- 4.7

- How do these results inform the practice of creativity

research? Firstly, psychological studies on creativity commonly stop

with one assessment of variation. By explicitly personifying and

repeating the selection mechanism, the impact the field has on

creativity can be demonstrated. The obscureness of the selection

process as described in the Introduction would

therewith be reduced, and the influence evaluators have on creators and

their artifacts exposed (Haller et

al. 2011; Herman

& Reiter-Palmon 2011; Lonergan

et al. 2004; Randel et

al. 2011; Silvia 2008,

2011; Westmeyer 1998, 2009).

- 4.8

- Secondly, the results recreate ideas stated in

Csikszentmihalyi's (1999) qualitative model. A (recognized) variation

depends on the properties of its environment just as much or even more

so than on its creator (Ahrweiler

2010; Cropley

& Cropley 2010; Westmeyer

1998, 2009).

According to Csikszentmihalyi (1999),

evaluators are the ones "who have the right to add memes to a domain"

(p. 324), and "if one wishes to increase…creativity, it may be more

advantageous to work at the level of the fields than at the level of

individuals" (p. 327). CRESY formalizes these ideas and exhibits a way

to conceive psychological studies as a complex system (Holland 2014; Mitchell 2009), or rather, a

creative system (Page 2011).

This implies acknowledging the agency (e.g., intelligence,

intentionality, bias, foresight) evaluators have in establishing a

domain, that is, their selective behavior functions as a balance

between exploiting and exploring the domain.

- 4.9

- Thirdly, by demonstrating the impact of social validation

(selection), the model raises practical questions about the validity of study

design and creativity assessment:

- Who constitutes the field? (How vast is their experience with the domain? How do they recognize, for instance, appropriateness?)

- How is the field composed? (How reliable should the field sample be? Is their variation in judgments welcome or should it be reduced?)

- Which and how many criteria are used for creativity assessment?

- How are they operationalized in terms of their scope / frame of reference?

- How are they aggregated?

- How often does evaluation take place?

- Do creators receive feedback on their work?

Limitations

- 4.10

- The scientific scope of this model is limited to a very

specific target: creativity research within psychological science. It

is based only on stylized facts from that domain. Furthermore, the

model was built with the sole purpose of conducting theoretical

experiments in order to reflect how creativity is and could be studied

in psychology. Specifically, CRESY focuses on one particular form of

variation, namely combinatorial creativity, the most widely defined

kind in creativity research (e.g., Amabile

1996; Sawyer 2006;

Sternberg 1999b; Sternberg et al. 2004; Zhou & Shalley 2007).

- 4.11

- The interaction incorporated in CRESY is restricted to indirect social influence. On the one hand, this is consistent with the model's target, creativity research in psychology, and its purpose, to reflect how science is conducted there. As described in the Introduction, study participants commonly work alone on creative tasks and their work is subsequently rated by judges evaluating independently of each other. This by no means implies creators and evaluators do not influence one another, given both parties can perceive each other's work and ratings (which is the case in CRESY). The "indirectedness" of this social influence can be viewed in many other instances of real life beyond creativity research: graffiti (Müller 2009), online brainstorming (e.g., http://www.bonspin.de/), Amazon reviews (Chattoe-Brown 2010), fashion trends (e.g., http://www.signature9.com/style-99). There are creators and evaluators virtually everywhere anonymously viewing, consuming, acknowledging and therefore being influenced by artifacts of other creators they do not necessarily know personally. On the other hand, modeling social influence in an impersonal way limits this model's applicability, as it does not address phenomena inherent in interpersonal relationships (e.g., leadership-follower dynamics, sympathy, reciprocity or ingroup-outgroup bias), which may affect creativity.

Conclusion


- 5.1

- Stylized facts from the domain of creativity research in psychological science were incorporated in CRESY, an agent-based model built to describe and test a systems perspective of creativity. Systematic experimentation with the model indicated creativity is affected by contextual factors such as evaluation strategies and informational diversity, results which can be used to stimulate and refine research practice in the target domain. The current model could nevertheless benefit from assessing its applicability to other targets (external validity) and extending its architecture to include more social and informational complexity. Model extensions could include processes depicting direct social influence, creators and evaluators incorporated into one agent, or multiple artifact domains. Assessing the model's external validity could be initiated by compiling its similarities and differences to other domains, for instance peer review (Squazzoni & Gandelli 2013; Squazzoni & Takács 2011), information retrieval (Picascia & Paolucci 2010; Priem et al. 2012), art criticism (Alexander 2010; Alexander & Bowler 2014), personnel assessments (Nicholls 1972) as well as pop culture reception (Chattoe-Brown 2010), and testing whether results obtained with CRESY hold in such areas.

Acknowledgements


The authors would like to thank three anonymous reviewers for their helpful comments. Our gratitude also goes to the following people for their valuable feedback while developing CRESY: Erich H. Witte, Klaus G. Troitzsch, Nigel Gilbert, Iris Lorscheid, Nanda Wijermans, the SIMSOC@WORK group, Florian Felix Meyer, Mario Paolucci, Bruce Edmonds.

Notes


1A journal article, for instance, represents a single creative artifact.

Yet perhaps just individual attributes such as its method, sample or

results are retained by those acknowledging it.

2http://www.openabm.org/model/2554/version/4/view (Note the model is called CRESY-II online. There is an earlier version of the model, which does not contain evaluators, also available: http://www.openabm.org/model/2552/version/3/view).

3The term unreliable actually refers to how an observer interprets C3's behavior. Technically, C3 functions reliably based on a stable behavioral rule, that is, C3 will always select with high probability digits it has seldom encountered before. However, its behavioral outcomes (patchworks) are so diverse that it appears to behave "unreliably", because an observer cannot predict its next patchwork as easily as one made by Cx.

4Creativity researchers discriminate and refer to these situations as "little-c" (personal, everyday) and "Big-C" (global, historical, eminent) creativity (Boden 1999; Beghetto & Kaufman 2007).

5Hues can be depicted with a color circle of 360°. The RGB values of a patchwork are translated into their corresponding degrees on the circle. Values from 135°-314° are categorized as cool, all others as warm.
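The categorization in note 5 can be illustrated with a minimal Python sketch. It assumes that hue is derived from RGB with the standard HSV conversion and that the cool range includes every hue from 135° up to, but not including, 315°. Neither detail is stated in the note, and the function names `hue_degrees` and `temperature` are hypothetical, not identifiers from CRESY.

```python
import colorsys


def hue_degrees(r, g, b):
    """Return the hue of an RGB color (channels 0-255) in degrees on a 360° circle.

    Assumption: standard HSV hue; the model's own conversion may differ.
    """
    h, _s, _v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0


def temperature(r, g, b):
    """Classify a color as 'cool' (hue 135°-314°) or 'warm' (all other hues).

    Assumption: the upper bound is treated as 'below 315°' so that
    fractional hues such as 314.5° still count as cool.
    """
    return "cool" if 135.0 <= hue_degrees(r, g, b) < 315.0 else "warm"


if __name__ == "__main__":
    for name, rgb in [("red", (255, 0, 0)), ("green", (0, 255, 0)),
                      ("cyan", (0, 255, 255)), ("blue", (0, 0, 255))]:
        print(name, round(hue_degrees(*rgb)), temperature(*rgb))
```

Greys have no defined hue. `colorsys` reports a hue of 0 for them, so this sketch would label them warm; how the model treats such cases is not specified in the note.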

6Without this stochasticity, appropriateness judgments would always be the same, as all evaluators would view the same eight neighbors and come to the same judgment according to the algorithm described here (see also Chattoe 1998).

7Parameterization for all variables held constant in this experiment (density, info-rate, movement, imagination, domSize) as well as for the number of runs and steps chosen was carried out in a series of experiments specifically designed to test the robustness of these parameters. The experiments, their data analyses and discussions are fully available online in this model's documentation (http://www.openabm.org/model/2554/version/4/view).

8An intriguing notion creativity researchers usually sidestep is whether evaluators really have to agree, as demanded via adequately high interrater reliabilities. High reliability only indicates raters agree, but as long as legitimate evaluators are the ones agreeing, creativity researchers consider it to be an appropriate indicator of face validity (Amabile 1982; Kaufman et al. 2008). The absence of high interrater reliability means, according to the discipline, creativity was not measured. One alternative explanation could be that evaluators are being creative themselves. Presently, there is a lack of work reflecting this idea and its consequences for creativity research. Evaluators' potential to stimulate change, as for example remarked by Amabile (1996) and Csikszentmihalyi (1999), could encourage scholars to focus on rater dissent and its meaning in future research.

9Violin plots are vertical boxplots with rotated kernel density plots at both left and right sides. The white dot represents the median, and the thick black line the interquartile range (IQR). The thin black lines extend to the lowest datum still within 1.5 IQR of the lower quartile and to the highest datum still within 1.5 IQR of the upper quartile.
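The summary statistics described in note 9 can be computed as in the following Python sketch. It is an illustration only: the quartile convention (`method="inclusive"`) is an assumption, since plotting software differs on this point, and the function name `violin_summary` is hypothetical.

```python
import statistics


def violin_summary(data):
    """Return the median, quartiles and whisker ends used in a violin plot.

    Whiskers extend to the most extreme data points that still lie within
    1.5 IQR of the lower and upper quartile, respectively.
    """
    xs = sorted(data)
    # Assumption: inclusive quartile method; other conventions shift q1/q3 slightly.
    q1, median, q3 = statistics.quantiles(xs, n=4, method="inclusive")
    iqr = q3 - q1
    whisker_low = min(x for x in xs if x >= q1 - 1.5 * iqr)
    whisker_high = max(x for x in xs if x <= q3 + 1.5 * iqr)
    return {"median": median, "q1": q1, "q3": q3,
            "whisker_low": whisker_low, "whisker_high": whisker_high}


if __name__ == "__main__":
    # The value 100 lies beyond the upper fence, so the upper whisker stops at 9.
    print(violin_summary([1, 2, 3, 4, 5, 6, 7, 8, 9, 100]))
```

The kernel density outline of the violin is not covered here; the sketch only reproduces the boxplot elements named in the note.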

References

- ABUHAMDEH, S. & Csikszentmihalyi, M. (2004). The artistic personality: A systems perspective. In R. Sternberg, E. Grigorenko, & J. Singer (Eds.), Creativity. From potential to realization. Washington, D.C.: American Psychological Association. [doi:10.1037/10692-003]

ADARVES-YORNO, I., Postmes, T., & Haslam, S. (2006). Social identity and the recognition of creativity in groups. British Journal of Social Psychology, 45, 479–497. [doi:10.1348/014466605X50184]

AHRWEILER, P. (2010, Jan.). Quality of research between preventing and making a difference. Paper presented at the Quality Commons, Paris, France. <http://cress.soc.surrey.ac.uk/web/QualityCommons/qc_booklet_a4-full.pdf> Archived at: <http://www.webcitation.org/6G4biFRgM>

AHRWEILER, P. (2013). Special issue on "Cultural and cognitive dimensions of innovation" edited by Petra Ahrweiler and Riccardo Viale. Mind & Society, 12(1), 5–10. [doi:10.1007/s11299-013-0128-2]

ALEXANDER, V. D. (2010, Jan.). Quality and art worlds. Paper presented at the Quality Commons, Paris, France. <http://cress.soc.surrey.ac.uk/web/QualityCommons/qc_booklet_a4-full.pdf> Archived at: <http://www.webcitation.org/6G4biFRgM>

ALEXANDER, V. D. & Bowler, A. E. (2014). Art at the crossroads: The arts in society and the sociology of art. Poetics, 43, 1–19. [doi:10.1016/j.poetic.2014.02.003]

AMABILE, T. (1982). Social psychology of creativity: A consensual assessment technique. Journal of Personality and Social Psychology, 43(5), 997–1013. [doi:10.1037/0022-3514.43.5.997]

AMABILE, T. (1996). Creativity in context. Boulder, CO: Westview Press.

AMABILE, T. & Mueller, J. (2007). Psychometric approaches to the study of human creativity. In J. Zhou & C. Shalley (Eds.), Handbook of organizational creativity (pp. 33–64). New York: Lawrence Erlbaum Associates.

ATTNEAVE, F. (1959). Applications of information theory to psychology. New York: Henry Holt and Company.

BALDUS, B. (2002). Darwin und die Soziologie [Darwin and sociology]. Zeitschrift für Soziologie [Journal of Sociology], 31(4), 316–331.

BARRÈRE, C. (2013). Heritage as a basis for creativity in creative industries: the case of taste industries. Mind & Society, 12(1), 167–176. [doi:10.1007/s11299-013-0122-8]

BECHTOLDT, M., De Dreu, C., & Choi, H. (2010). Motivated information processing, social tuning, and group creativity. Journal of Personality and Social Psychology, 99, 622–637. [doi:10.1037/a0019386]

BEGHETTO, R. & Kaufman, J. (2007). Toward a broader concept of creativity: A case for "mini-c" creativity. Psychology of Aesthetics, Creativity, and the Arts, 1(2), 73–79. [doi:10.1037/1931-3896.1.2.73]

BISCHOF, N. (1995). Struktur und Bedeutung [Structure and meaning]. Bern, Switzerland: Verlag Hans Huber.

BODEN, M. (1999). Computer models of creativity. In R. Sternberg (Ed.), Handbook of creativity (pp. 351–372). Cambridge, UK: Cambridge University Press.

BORTZ, J. & Döring, N. (2002). Forschungsmethoden und Evaluation [Research methods and evaluation]. Berlin, Germany: Springer. [doi:10.1007/978-3-662-07299-8]

BORTZ, J., Lienert, G., & Boehnke, K. (2008). Verteilungsfreie Methoden in der Biostatistik [Non-parametric methods in biostatistics]. Heidelberg, Germany: Springer Medizin Verlag.

BOURDIEU, P. (1986). The forms of capital. In J. G. Richardson (Ed.), Handbook of theory and research for the sociology of education (pp. 241–258). New York: Greenwood Press.

BROWER, R. (2003). Constructive repetition, time, and the evolving systems approach. Creativity Research Journal, 15(1), 61–72. [doi:10.1207/S15326934CRJ1501_7]

BRYSON, J. (2009). Representations underlying social learning and cultural evolution. Interaction Studies, 10(1), 77–100. [doi:10.1075/is.10.1.06bry]

BUSS, D. (2004). Evolutionäre Psychologie [Evolutionary psychology. The new science of the mind]. Munich, Germany: Pearson Studium.

CAMPBELL, D. (1960). Blind variation and selective retention in creative thought as in other knowledge processes. Psychological Review, 67(6), 380–400. [doi:10.1037/h0040373]

CASTI, J. (2010, Jan.). Artistic forms, complexity and quality. Paper presented at the Quality Commons, Paris, France. <http://cress.soc.surrey.ac.uk/web/QualityCommons/qc_booklet_a4-full.pdf> Archived at: <http://www.webcitation.org/6G4biFRgM>

CHATTOE, E. (1998). Just how (un)realistic are evolutionary algorithms as representations of social processes? Journal of Artificial Societies and Social Simulation, 1(3), 2.

CHATTOE-BROWN, E. (2010, Jan.). What is quality? Paper presented at the Quality Commons, Paris, France. <http://cress.soc.surrey.ac.uk/web/QualityCommons/qc_booklet_a4-full.pdf> Archived at: <http://www.webcitation.org/6G4biFRgM>

COUGER, D., Higgins, L., & McIntyre, S. (1989). Differentiating creativity, innovation, entrepreneurship, intrapreneurship, copyright and patenting for I.S. products / processes. Proceedings of the Twenty-Third Annual Hawaii International Conference on Systems Sciences, 4, 370–379.

CROPLEY, D. & Cropley, A. (2010). Functional creativity: "Products" and the generation of effective novelty. In J. Kaufman & R. Sternberg (Eds.), The Cambridge handbook of creativity (pp. 301–320). New York: Cambridge University Press. [doi:10.1017/CBO9780511763205.019]

CROPLEY, D., Cropley, A., Kaufman, J., & Runco, M. (Eds.). (2010). The dark side of creativity. New York: Cambridge University Press. [doi:10.1017/CBO9780511761225]

CSIKSZENTMIHALYI, M. (1988). Society, culture, and person: A systems view of creativity. In R. Sternberg (Ed.), The nature of creativity. Contemporary psychological perspectives (pp. 325–339). Cambridge, UK: Cambridge University Press.

CSIKSZENTMIHALYI, M. (1999). Implications of a systems perspective for the study of creativity. In R. Sternberg (Ed.), Handbook of creativity (pp. 313–338). Cambridge, UK: Cambridge University Press.

CZIKO, G. (1998). From blind to creative: In defense of Donald Campbell's selectionist theory of human creativity. Journal of Creative Behavior, 32, 192–209. [doi:10.1002/j.2162-6057.1998.tb00815.x]

DASGUPTA, S. (2004). Is creativity a Darwinian process? Creativity Research Journal, 16(4), 403–413. [doi:10.1080/10400410409534551]

DENNIS, A. & Williams, M. (2003). Electronic brainstorming. In P. Paulus & B. Nijstad (Eds.), Group creativity (pp. 160–178). New York: Oxford University Press. [doi:10.1093/acprof:oso/9780195147308.003.0008]

DEWETT, T. & Williams, S. (2007). Innovators and imitators in novelty-intensive markets: A research agenda. Creativity and Innovation Management, 16(1), 80–92. [doi:10.1111/j.1467-8691.2007.00421.x]

DEXTER, S. & Kozbelt, A. (2013). Free and open source software (FOSS) as a model domain for answering big questions about creativity. Mind & Society, 12(1), 113–123. [doi:10.1007/s11299-013-0125-5]

DIPAOLA, S. & Gabora, L. (2009). Incorporating characteristics of human creativity into an evolutionary art algorithm. Genetic Programming and Evolvable Machines, 10(2), 97–110. [doi:10.1007/s10710-008-9074-x]

DÖRNER, D. (1994). Heuristik der Theorienbildung [Heuristics of building theories]. In T. Herrmann & W. Tack (Eds.), Enzyklopädie der Psychologie. Themenbereich B: Methodologie und Methoden. Serie I: Forschungsmethoden der Psychologie. Band I: Methodologische Grundlagen der Psychologie [Encyclopedia of psychology. Subject: Methodology and methods. Series I: Research methods in psychology. Volume I: Methodological basics in psychology] (pp. 343–388). Göttingen, Germany: Hogrefe.

EDMONDS, B. (1999). Gossip, sexual recombination and the El Farol Bar: Modelling the emergence of heterogeneity. Journal of Artificial Societies and Social Simulation, 2(3), 2.

ESSER, H. & Troitzsch, K. (1991). Einleitung: Probleme der Modellierung sozialer Prozesse [Introduction: Problems with modelling social processes]. In H. Esser & K. Troitzsch (Eds.), Modellierung sozialer Prozesse. Neuere Ansätze und Überlegungen zur soziologischen Theoriebildung. Ausgewählte Beiträge zu Tagungen der Arbeitsgruppe Modellierung sozialer Prozesse der Deutschen Gesellschaft für Soziologie [Modelling social processes. New approaches to building sociological theory. Selected contributions from conferences of the working group "Modeling Social Processes" of the German Society of Sociology] (pp. 13–25). Bonn, Germany: Eigenverlag Informationszentrum.