Rosaria Conte and Mario Paolucci (2004)

Responsibility for Societies of Agents

Journal of Artificial Societies and Social Simulation

vol. 7, no. 4

<https://www.jasss.org/7/4/3.html>

To cite articles published in the Journal of Artificial Societies and Social Simulation, reference the above information and include paragraph numbers if necessary

Received: 12-Dec-2003 Accepted: 31-May-2004 Published: 31-Oct-2004

Abstract


|

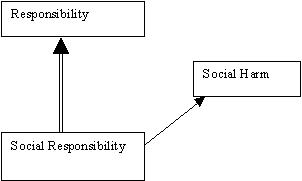

| Figure 1. Responsibility generalises (double line) social responsibility and is defined upon (full line) social harm |

However, this definition does not take into account the motivation of x. If s is an actual event, there are two possibilities: either x did not exert its power, or its power was not sufficient to prevent s. Only in the former case can x be blamed and held accountable for s. Let us discuss this aspect of responsibility.
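The case distinction above can be illustrated with a minimal sketch. It assumes, for illustration only, that an episode is described by two boolean facts (whether the harm s occurred and whether x exerted its power); the names `Episode`, `Verdict`, and `assess` are hypothetical and do not come from the paper.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    NO_HARM = "s did not occur"
    BLAMEWORTHY = "x did not exert its power"
    NOT_BLAMEWORTHY = "x's power was insufficient"


@dataclass(frozen=True)
class Episode:
    # Hypothetical encoding: both fields are assumptions made for this sketch.
    harm_occurred: bool   # s is an actual event
    power_exerted: bool   # x actually used its power to try to prevent s


def assess(episode: Episode) -> Verdict:
    """Apply the two-case distinction to a single episode."""
    if not episode.harm_occurred:
        return Verdict.NO_HARM
    if not episode.power_exerted:
        # Former case: x could have acted but did not, so x can be blamed.
        return Verdict.BLAMEWORTHY
    # Latter case: x acted, but its power did not suffice to prevent s.
    return Verdict.NOT_BLAMEWORTHY
```

For example, `assess(Episode(harm_occurred=True, power_exerted=False))` yields `Verdict.BLAMEWORTHY`, whereas the same harm with `power_exerted=True` yields `Verdict.NOT_BLAMEWORTHY`. The sketch deliberately leaves out motivation and degrees of power, which a fuller treatment would need to represent.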

|

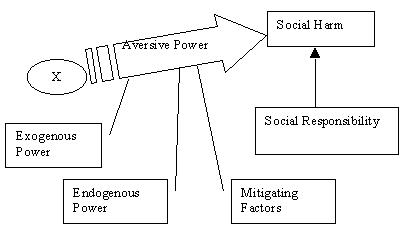

| Figure 2. Factors involved in the evaluation of the aversive power of agent x on a social harm that entails social responsibility |

|

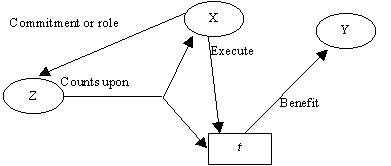

| Figure 3. A graphical representation of task-based responsibility |

|

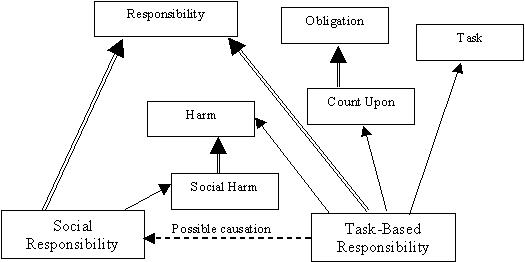

| Figure 4. The two faces of Responsibility. The dependence (single line) of Social and Task-Based Responsibility is shown graphically, together with generalisations (double line) of cognitive constructs employed |

2 But there are others. A socially relevant harm is one that the social group to which both the victim and the harmer belong is interested in avoiding, since it endangers some global goal or interest of the group. This point requires some clarification. A group may have an interest that (a) its members be in the proper condition to exercise their social role or function, and therefore that this condition be (b) maintained or (c) restored at the lowest cost for the whole group; consequently, the group is interested (d) in avoiding that the costs of restoring its members' capacity be sustained by the whole group, and (e) in having those costs distributed over a subset of the group. Social groups are interested in charging a given number of agents with the costs of repairing social harms. Hence, they assess which agents bear effective responsibility for given social harms. At the same time, they are also interested in avoiding injuries and in reducing the social (whether global or distributed) costs of repair. Consequently, they are interested in discouraging socially impairing behaviours. Hence, they demand that responsible agents exert their power to avoid potential harms.

3 Indeed, she would be held responsible for a decision which did not take into account the global interest of her patient.

4 In Gardner (2003), responsibility is indeed defined as the ability to respond, i.e., to give a justification of given choices; however, this view is not satisfactory: first, because it makes responsibility collapse into accountability; second, because it is still a decision-based notion.

5 However, comparing harms is not always easy. Suppose a physician refuses to practise infibulation on a young patient of Islamic religion by virtue of his commitment to defend the physical integrity and the health of his patients. Can he be held responsible for the severe injuries that a non-institutional infibulation may cause to the child? The answer is not an easy one.

6 Some (e.g., Mellema 1988) consider this dilutionist view of shared responsibility as somewhat unsatisfactory. In contrast with it, an anti-dilutionist view is proposed. As regards shared responsibility, the author seems to argue that all bear responsibility. As regards collective responsibility (Mellema 2001) it may be the case that some members of the collective do not bear responsibility, while the whole entity does.

7 This example indicates a possible solution to a classical problem in deontic logic: what is a collective obligation? More precisely, how can one predict which agents the obligation impinges upon? The two identified solutions (cf. Carmo and Pacheco 2000) are complementary: the obligation actually impinges either upon at least one agent in the collective, or upon all of them. Both solutions have drawbacks (for a convincing critique, see again Carmo and Pacheco 2000). A possible way out is to distinguish conceptually between an obligatory goal and the consequent obligatory action: a collective obligation is one that all members of the collective ought to want to be realized, although only a subset of agents is sufficient to execute it.

8 Sometimes, X's members may be interdependent in avoiding errors: to see this, consider Kaminka and Tambe's work on monitoring teamwork execution (1998). As is reasonably suggested in that work, avoidance of errors in teamwork execution is facilitated by decentralized control, not only because the higher the number of agents who exercise control, the lower the chances of errors, but also because the team members' viewpoints (and consequently their capacity to predict errors) are different and complementary.

CARMO, J. and Pacheco, O. (2000) Deontic and action logics for collective agency and roles, in: Proc. of the Fifth International Workshop on Deontic Logic in Computer Science (Deon'00), R. Demolombe and R. Hilpinen (eds.), ONERA-DGA, 93-124

CARMO, J. and Pacheco, O. (2001) Deontic and Action Logics for Organized Collective Agency Modeled through Institutionalized Agents and Roles. Fundamenta Informaticae, Vol. 48 (2,3):129-163.

CASTELFRANCHI, C. and R. Falcone (1997) From Task Delegation to Role Delegation. AI*IA 1997: 278-289.

COHEN, P.R. and Levesque, H.J. (1990) Intention is Choice with Commitment, Artificial Intelligence 42, 231-261.

CONTE R., Miceli M. and Castelfranchi C. (1991) Limits and Levels of Cooperation: Disentangling various types of Prosocial Interaction, in Demazeau Y. and Muller J. (eds.), Decentralized A. I. 2, Elsevier, 147-157

EHRENBERG, K. (1999). Social Structure and Responsibility, Loyola Poverty Law Journal, 1-26.

FEINBERG, J., (1970), Doing and Deserving: Essays in the Theory of Responsibility. Princeton: Princeton University Press

FLEURBAEY, M. (1995) Equality and responsibility, European Economic Review, vol. 39, 3-4:683-689

FRENCH, J.R.P. and B. Raven (1959) 'Bases of Social Power' Studies in Social Power. Ed. Dorwin Cartwright. University of Michigan, Ann Arbor.

GARDNER, J. (2003) The Mark of Responsibility. Oxford Journal of Legal Studies 23(2), 157-171.

HART, H. L. A. and Honoré, T. (1985) Causation in the Law. Second Edition. Oxford Univ. Press.

HEIDEGGER, M. (1977) Being and Time; trans. by Stambaugh, Joan; State University of New York Press.

HEIDER F. (1958) The psychology of interpersonal relations. New York: Wiley.

HILD, M. and Voorhoeve, A. (2001) Roemer on Equality of Opportunity, Working paper of the California Institute of Technology, Division of the Humanities and Social Sciences, n. 1128, http://www.hss.caltech.edu/SSPapers/wp1128.pdf

JENNINGS, N. R. and Mamdani, E. H. (1992). Using Joint Responsibility to Coordinate Collaborative Problem Solving in Dynamic Environments, Proc of 10th National Conf. on Artificial Intelligence (AAAI-92), San Jose, USA 1992, 269-275.

JENNINGS, N.R. (1992) On being responsible. In Decentralized A.I. 3, Proc. MAAMAW-91, 93-102, Amsterdam, The Netherlands 1992. Elsevier Science Publishers, http://citeseer.ist.psu.edu/jennings92being.html

JONES, A.I.J. and Sergot, M.J. (1996) A formal characterisation of institutional power, Journal of the IGPL, 4(3): 429-445.

KAMINKA, G., and Tambe, M. (1998) Social comparison for failure detection and recovery. In Intelligent Agents IV: Agents, Theories, Architectures and Languages (ATAL), Springer Verlag.

LACEY, N. (2001) Responsibility and Modernity, http://www.law.nyu.edu/faculty/workshop/fall2001/lacey.pdf

LENK, Hans (1997) Einführung in die angewandte Ethik. Stuttgart Berlin Köln: Kohlhammer.

LANE, M. (2004) Autonomy as a Central Human Right and Its Implications for the Moral Responsibility of Corporations, in T. Campbell and S. Miller (eds.) Human Rights and the Moral Responsibilities of Corporate and Public Sector Organisations. Series: Issues in Business Ethics, Vol. 20, Springer

MAMDANI, E.H. and Pitt, J. (2000) Responsible Agent Behavior: A Distributed Computing Perspective, IEEE Internet Computing, 4 (Sept./Oct.):27-31.

MELLEMA G. (1988) Individuals, Groups, and Shared Moral Responsibility. Bern: Peter Lang

MELLEMA, G.F. (2001), Collective Responsibility, Barnes & Noble.

MITCHAM, C. and R. von Schomberg (2000) The Ethic of Engineers: From Occupational Responsibility to Public Co-responsibility. In P. Kroes and A. Meijers (eds.) The empirical turn in the philosophy of technology, Research in philosophy and technology, vol. 20, JAI Press, Amsterdam.

MOORE, M. (1998) Placing Blame, Oxford: Oxford University Press.

NORMAN, T. J. and Reed, C. A. (2001) Delegation and responsibility. In C. Castelfranchi and Y. Lespérance, editors, Proceedings of the Seventh International Workshop on Agent Theories, Architectures, and Languages, Lecture Notes in Artificial Intelligence. Springer-Verlag, pp. 136-149.

NORMAN, T.J. and Reed, C. (2002) Group Delegation and Responsibility, C. Castelfranchi and W.L. Johnson (eds.) Proceedings of the First International Conference on Autonomous Agents and Multi Agent Systems (AAMAS), Bologna, July 15-19, ACM Press.

OREN, T.I. (2000) Responsibility, Ethics, and Simulation, Transactions of the Society for Modeling and Simulation International, Vol. 17, No. 4, pp. 165-170.

SANTOS, F., A. J. I. Jones, and J. Carmo (1997) Responsibility for action in organisations: A formal model. In G. Holmstrom-Hintikka and R. Tuomela, editors, Contemporary action theory: Social action, volume 2, pp. 333-350. Kluwer.

SARTRE, J.P. (1984) Existentialism and Human Emotions, Lyle Stuart.

SCANLON, T. M. (1998) What We Owe to Each Other. Cambridge, MA: Harvard University Press

SEEGER, M.W. (2001), Ethics and Communication in Organizational Contexts: Moving From the Fringe to the Center, American Communication Journal, Vol. 5(1) http://www.acjournal.org/holdings/vol5/iss1/special/seeger.htm.

SHAVER, K. G., and Drown, D. (1986). On causality, responsibility, and self-blame: A theoretical note. Journal of Personality and Social Psychology, 4, 697-702.

SILVER, S. (2002) Collective Responsibility and the Ownership of Actions, Public Affairs Quarterly 16 (3) 287-304.

STRAWSON, P.F. (1974) Freedom and Resentment. Proceedings of the British Academy 48; Reprinted in Freedom and Resentment and Other Essays. Oxford 1974, pp. 1-25. References are to the reprinted version.

ZSOLNAI, L. (forthcoming) Ethical Decision Making: Responsibility and Choice in Business and Public Policy, Ashland: Purdue University Press.


© Copyright Journal of Artificial Societies and Social Simulation, [2004]