Methods That Support the Validation of Agent-Based Models: An Overview and Discussion

,

and

aOld Dominion University, United States; bThe MITRE Corporation, United States; cVirginia Modeling, Analysis, and Simulation Center at Old Dominion University, United States

Journal of Artificial

Societies and Social Simulation 27 (1) 11

<https://www.jasss.org/27/1/11.html>

DOI: 10.18564/jasss.5258

Received: 06-Jun-2022 Accepted: 10-Oct-2023 Published: 31-Jan-2024

Abstract

Validation is the process of determining if a model adequately represents the system under study for the model’s intended purpose. Validation is a critical component in building the credibility of a simulation model with its end-users. Effectively conducting validation can be a daunting task for both novice and experienced simulation developers. Further compounding the difficult task of conducting validation is that there is no universally accepted approach for assessing a simulation. These challenges are particularly relevant to the paradigm of Agent-Based Modeling and Simulation (ABMS) because of the complexity found in these models’ mechanisms and in the real-world situations they attempt to represent. To aid both the novice and expert in conducting a validation process for an agent-based simulation, this article reviews nine methods that are useful for this process, including foundational topics of docking, empirical validation, sampling, and visualization, as well as advanced topics of bootstrapping, causal analysis, inverse generative social science, and role-playing. Each method is reviewed with respect to its benefits and limitations as a validation-supporting method for ABMS. Suggestions that may support a validation plan for an agent-based simulations, are also provided. This article is an introductory guide for understanding and conducting ABMS validation for developers of all experience levels.Introduction

Validation is a critical component for building the credibility that a simulation model adequately meets its intended purpose (Law 2015; Naylor & Finger 1967). Credibility is the quality of inspiring belief in the correctness of something. When users and stakeholders can trust that the simulation was diligently and adequately constructed so it addresses the model’s purpose, then credibility grows. Credibility is a ternary relationship between the simulation, the end user, and the systems under study. The relationship is ternary because (i) the stakeholder uses their understanding of the system under study to see if the simulation adequately fits that understanding, (ii) this understanding is, hopefully, informed by the actual system under study, and (iii) the simulation developer uses, hopefully, the system under study to inform the development of the simulation. With this in mind, validation is defined as the process of determining if a model adequately represents the system under study for the model’s intended purpose (Erdemir et al. 2020; Grimm et al. 2020; Sargent & Balci 2017); this definition could be simplified further as building the right model (Balci 1998). What some of the validation-supporting methods are and how they could be practically implemented is the focus of this article. This focus is intended to provide readers with guidance and insight to help them select the validation-supporting methods for use in their validation plan.

Within agent-based modeling, the selection of validation-supporting methods is not a straightforward task because there is no one-size-fits-all approach to validation, nor are there any consistent validation standards that are applicable and/or acceptable across the whole agent-based modeling community (Collins et al. 2015). There might be some fields that use Agent-based Modeling and Simulation (ABMS) that have universally agreed upon validation approach, though the authors where unable to find any. This, coupled with the ever-expanding plethora of new verification and validation-supporting methods being developed (e.g., Collins et al. 2022a; Borgonovo et al. 2022) means that it has become more difficult for a simulation novice to navigate the simulation validation literature. This article aims to help navigate the ever-expanding range of validation-supporting methods suggested for ABMS through a detailed discussion of nine methods and practical guidance for implementation. This article has been written in a discipline-agnostic manner, though it is impossible to mitigate all biases.

Agent-based modeling is conducted in various disciplines, each with its own validation and communication requirements. This can result in differing, sometimes contradictory, advice on how to approach validation. Though this article does not resolve those differences, it is hoped to provide a starting point for a simulation development novice in understanding the validation-supporting methods and approaches available in ABMS.

This article first discusses simulation validation in more detail to give a reader a better understanding of its origin and a few of the controversies. A selected variety of validation-supporting methods are then described. This description is followed by a general discussion on how to select validation-supporting methods. The article concludes with curated recommendations for simulation developers.

Background

In this section, some background is provided, and a little history of validation in the context of modeling and simulation (M&S) in general, then, specifically, agent-based modeling and simulation (ABMS). The focus of this article is validation-supporting methods that can be applied to M&S, specifically ABMS; as such, other forms for validation are not considered. For example, psychologists tend to consider four types of validation in their experiments- internal, external, construction, and statistical (Jhangiani et al. 2015) – which are not discussed in this article.

ABMS is one of several paradigms available in the M&S domain (Law 2015). The M&S community has a sixty-year history (Collins et al. 2022a), which was originally focused on Discrete Event Simulation (DES). Most validation-supporting methods developed by this community were developed for DES (Banks 1998), and some have argued that ABMS is just a subset of DES (Law 2015), so DES validation processes apply to ABMS. However, as the ABMS community diverges from the DES community, so do its applicable validation approaches. This article focuses on validation processes that have been advocated for ABMS.

Note that validation is sometimes used in conjunction with the word verification. Verification is the process of determining if a model is consistent with its specification (Petty 2010). This article focuses on validation, though verification is mentioned at certain points.

Validation of modeling and simulation

The use of multiple definitions is common in the simulation community; for example, Ören (2011) found 400 different definitions of the word “simulation.” In this article, a model is defined as a representation of a system for some intended purpose and a simulation as the dynamic implementation of that model. Inconsistencies in the definitions of terminology can contribute to misinterpretation of outcomes and can result in communication confusion (Barnes III & Konia 2018; David 2006; Glasow & Pace 1999; Roache 1998); however, this article is not intended to be impeded by the semantics of definitions and will thus use the simple definition of validation given in the introduction.

Our definition of validation is, by far, not the only definition of validation that exists. Other definitions include words like “accurate” (Law 2015; Sargent 2013; Schlesinger et al. 1979), “simuland” (Petty 2010), “testability” (Carley 2017), or even “real world” (Department of Defense 2009). These definitions have not been included here to avoid confusion, and the authors will leave the discussion of comparing definitions to a future paper.

The discussion of validation in a simulation context has more than fifty years of history (Sargent & Balci 2017), starting with the first major paper on the topic by Naylor & Finger (1967). This was followed by Fishman & Kiviat (1968), whose work was on a statistical validation approach for discrete-event simulation (DES), which was the emerging simulation paradigm at that time (Collins et al. 2022b). DES is a simulation paradigm that views a dynamic system as a sequence of discrete events, which is a convenient way to simulate as it is analogous to how, pre-parallelization, programs are executed on a computer (i.e., the use of the stack). Until the turn of the century, validation-supporting methods relating to DES dominated the simulation community, with Osman Balci and Robert Sargent being the lead academics in Verification and Validation (V&V) at that time (Sargent & Balci 2017). In a seminal piece of work, Balci identified 75 verification and validation (V&V) techniques (Balci 1998), and that number continues to grow, with new ones being created annually (e.g., Collins et al. 2022a; Borgonovo et al. 2022).

The simulation community has dramatically expanded over the last 30 years, mainly due to advances in personal computers (Collins et al. 2022b; Lynch et al. 2020). As a result, many in the simulation community have never heard of DES, let alone its validation. For example, the Augusiak et al. (2014) review of validation-supporting methods for ecological simulations does not even mention Osman Balci’s work. As such, it is not surprising to expect some development of ABMS validation to occur without this background in DES knowledge. The authors of this article all come from a traditional M&S / system engineering background, which means they are experienced in DES literature, and, as a result, this article might contain biases toward that knowledge base.

Validation process for agent-based modeling and simulation

ABMS is a form of M&S that focuses on the agent paradigm; that is, agents and their behavior form the foundations of the model (North & Macal 2007). Unsurprisingly, there is no universally agreed-upon definition of ABMS across all its communities (Macal 2016). The intent of agent-based modeling is to gain insight into the emergent macro-level behaviors that are observed from interacting heterogeneous agents without the use of aggregation modeling (Epstein 2007; Miller & Page 2007). It is these agents and behaviors that tend to be the focus of validation exercises relating to ABMS.

Some view ABMS as just a subset of DES (Law 2015) because it is implemented on a computer using discrete events, and, as such, they believe that all DES validation-supporting methods are appropriate. This is not a view shared by all, including the authors, and, as such, different validation-supporting methods have been proposed. This article discusses nine of these approaches (data analytics, docking, empirical validation, sampling, visualization, bootstrapping, causal analysis, inverse generative social science, and role-playing).

The aforementioned techniques are not an exhaustive list, with other methods having been suggested. For example, Heath et al. (2012) advocated the use of unified modeling language (UML) type approaches; Bianchi et al. (2007) applied ex-post experiments as their validation-supporting method; and Carley (2017) talks about testability. Some even advocate for using multiple methods (Klügl 2008; McCourt et al. 2012; Niazi 2011), which is discussed below.

The different validation-supporting methods have been developed by researchers from a variety of academic disciplines. The multidisciplinary nature of ABMS raises the question of whether the different suggested validation-supporting methods are unique or entirely distinct from each other. Different disciplines have different expectations, terminology, theoretical frameworks, and ontologies, which can make it challenging to translate from one academic discipline to another. More importantly, all disciplines have their own biases, and the discipline’s community may not even realize or may trivialize these biases (perhaps due to their epistemic bubbles, Magnani & Bertolotti (2011)). These multidisciplinary differences result in different expectations for a validation process. As such, it is important to consider the intended audience (and their discipline) when choosing validation-supporting methods (this point will be expanded upon in the Discussion section).

These differences in the disciplines even result in different viewpoints on the simulation validation processes. Different definitions of validation for ABMS are being defined for different disciplines: engineering (North & Macal 2007), social sciences (Ormerod & Rosewell 2009), ecology (Railsback & Grimm 2019), and computer science (Wilensky & Rand 2015). As such, the above definition of validation for use with ABMS is retained for simplicity’s sake. This definition is hoped to be discipline-agnostic enough to be useful to the reader.

Given the issue of multidisciplinary interpretation of validation, there is no universal standard method for the ABMS validation process. Given the subjective nature of validation’s definition and its purpose, it is not surprising that there is not one universal validation approach, and the authors would recommend skepticism towards anyone who claims they have developed one. Part of this article’s purpose is to expose the reader to different validation-supporting methods and techniques that might be appropriate for their simulation projects. As Gilbert (2020) stated, “the theory and practice of validation is more complicated and more controversial than one might at first expect”. This article is hoped to provide some guidance in handling these subjective aspects of validation.

Validation-Supporting Methods

A selection of nine validation-supporting methods is presented in this section to provide an understanding of the variety available. Other validation-supporting methods exist, for example, face validation (Balci 1998) and the others mentioned above. The selection of methods was deliberately made from various academic disciplines that use ABMS in an attempt to be inclusive of the whole enterprise that is ABMS. In all nine methods discussed, the focus is placed on a particular instance of the method, e.g., Latin Hyper-cube Sampling, as an example of sampling.

It is an intimidating task for a simulation developer to select a validation-supporting method that is appropriate for their simulation; thus, the nine methods described in this section were chosen to provide an insight into a variety of different approaches and, to further this insight, discussion on the benefits and limitations of each validation-supporting method is provided. Further guidance is provided in the Discussion section.

The methods are split into foundational (data analytics, docking, empirical, sampling, and visualization) and advanced (bootstrapping, causal analysis, iGSS, and role-playing). “Foundational” is defined as those methods that the authors would expect every agent-based modeling expert to know. A detailed description of each of the five foundational methods is given before a more general discussion is given on the four more advanced methods.

Data analytics

Data Analytics is the holistic study of empirical data, including data mining, data management, statistics, and machine learning (Leathrum et al. 2020). This definition is not universal, and other terms are used interchangeably with data analytics, i.e., data science, data analysis, etc. The key feature of data analytics is that it considers the holistic management and use of data, whereas statistical analysis focuses only on the statistical technique or test. Data analytics is important for any simulation development project because handling data, both input and output, can require intense effort (Skoogh & Johansson 2007); thus, deciding which data handling methods are used is worthy of pre-consideration as opposed to ad-hoc decisions.

Data analytic methods provide a means to clean and organize the input and output data for a simulation. This supports the validation process of a simulation because it helps provide transparency toward data collection, management, and application (Lynch et al. 2021). Data analytics also provides a mechanism for input modeling and data modeling: it helps derive the statistical distributions used in the input modeling as well as provides relational structures of data for the data modeling. Input modeling connects data to the probabilistic mechanisms within the simulation. This is important because input data contributes to deriving system structure, input parameters, and modeling assumptions. Unstructured messy data can induce biased structures and unequal variance estimates in differing regions of the sample space.

Given the ever-increasing volumes of available data, it is essential to follow a data management protocol and for the effort to implement a formal data management program. Formal data management programs should preserve and secure original data sets, intermediate structured and cleaned data sets, and output data. This is not to imply that all data must be saved. Simulation development is usually an iterative process: running the simulation, analyzing the output data, changing the simulation, running it again, and so on. Data developed during these “development runs” can often be discarded. When simulation development has ended, then it is time to begin carefully dealing with input and output data used for, and created by, these “runs of record.”

Once a data management protocol has been developed, data wrangling can begin. Data wrangling is data cleaning, which includes gathering, selecting, and transforming data. Data wrangling the simulation input data both reduces the need for complexity and the number of errors made during development (Kavak et al. 2018). This can be further supported by data mining, which is the use of computational algorithms to illuminate meaning, relationships, and patterns. While data mining can highlight subtle patterns in the data and complex relationships among input and output data, many insights can often be generated via simpler methods such as descriptive and sampling statistics. Your choice of data analytics methods should be driven by the questions being asked and should be no more complex than necessary.

Leathrum et al. (2020) describe how data analytics fits into the M&S development process, which Lynch et al. (2021) take and specifically shows how data analytics fits into the validation process. Data analytics formalizes data usage and representation, shows the organization of the data, and builds credibility.

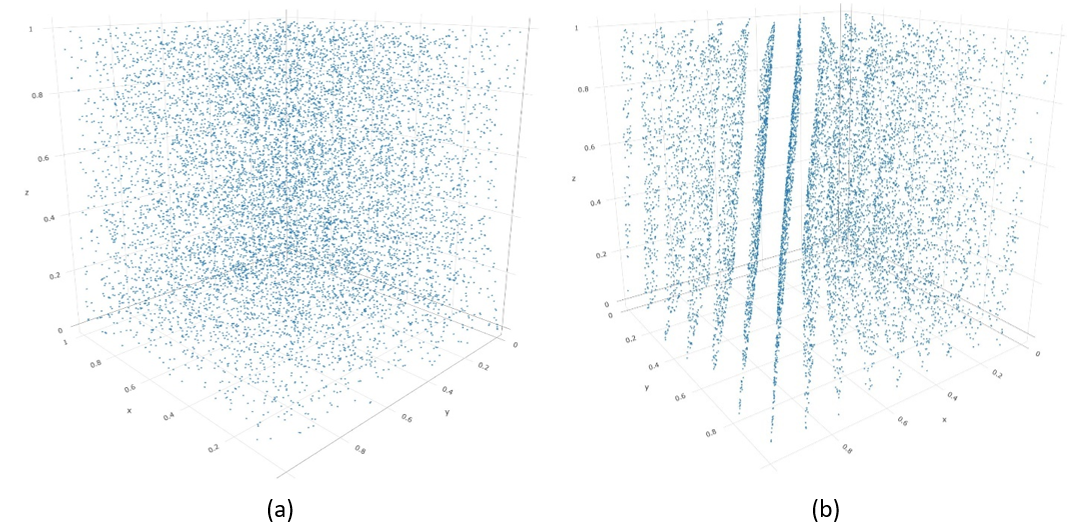

To understand how data analytics could be used in validation, a simplified example from the study of pseudo-random number generators (RNG) is used. The reason for picking this example is that its goal is simple to understand: generate sufficiently random numbers from a seed that is reproducible. There are a variety of tests that can be conducted on a set of data to determine its “randomness,” e.g., run-up test, spectral tests, etc. (Law 2015). Through data exploration, a popular RNG by IBM, known as RANDU, was discovered to have a flaw in that the randomly generated numbers fall into planes, as shown below. In fact, all linear congruential generators (LCG), a form of RNG, suffer from this problem to some degree (Marsaglia 1968).

The point here is that the invalidation of RANDU was discovered through the exploration of its generated data. Data analytics provides an approach to conducting this exploration. Simply put, it provides a means to explore the data collected or generated by the simulation to help discover any anomalies.

Benefits and limitations of data analytics

There are many benefits to data analytics as a method to support validation. Obviously, it helps provide a means for the developer to manage and understand their datasets; this provides a deeper understanding of the simuland (i.e., the real-world system under study), which, is hoped, results in a better simulation being built. Looking at the actual data and its resultant logical implications might help remove some of the developers’ unfounded biases that they have about the system. Since data analytics can be used on not only the simulation input data but also its output data and any data used in its validation process, it provides credibility between the validation process and the simulation’s stakeholders by demonstrating that the data was handled correctly and managed.

A prominent disadvantage of data analytics is that it requires the simulation developers to additionally understand how to conduct data analytics and properly interpret and convey the outcomes (Lynch et al. 2021). This is a non-trivial skill that can take much time for simulation developers to adopt into their simulation development skillsets. There is also no universal way to conduct data analytics, and if the customer has a preferred but inappropriate way (for example, the current fashion to always use machine learning), then if this is not followed, the data analytics might actually reduce the credibility of the simulation with that customer. Finally, properly managing, wrangling, and mining data takes time and resources.

Docking

Docking is a method for comparing a simulation to a referent (usually a pre-existing simulation). Docking can be especially useful for a new practitioner as it helps to focus one’s thinking on (a) the assumptions that underlie the simulation and how they differ from existing work (helping to highlight scientific contribution), (b) provides a framework to express how your simulation compares to the referent (identically, statistically indistinguishable, or with analogous dynamics). In order to use this technique, there must be a referent to compare; if your simulation is the first one to focus on a non-existent system, then this will not be a viable option for you. Also, it is assumed that the referent has been previously deemed valid.

Docking is an approach that compares the outputs of two independently developed simulations of a system of interest. The idea is that if the models use the same theory, then their resultant simulation should produce similar outputs. Since any comparison depends on the models and their purpose, docking is a general term for any method that compares the output of the two models to see if they are acceptably similar. The concept of docking was introduced by ABMS academic leaders Robert Axtell, Robert Axelrod, and Joshua Epstein (1996), and has successfully been applied numerous times (Arifin et al. 2010; Edmonds & Hales 2003; Will 2009).

Docking is a form of model-to-model comparison (Arifin et al., 2010) and is also known as Alignment (Axtell et al. 1996; Rouchier et al. 2008) or Replication within the social simulation community (Edmonds & Hales 2003; Will 2009). Within the discrete event simulation (DES) / military community, it is known as comparison testing or back-to-back testing (Balci 1998) from the software engineering realm (Sommerville, 1996). Within software engineering, docking is considered a verification technique because it involves checking to see if the modeler has implemented the conceptual model correctly (Petty 2010). Arifin et al. (2010) argue that it is also a validation-supporting method because if the conceptual model has faulty assumptions, then docking has the potential to highlight them. Since there is no clear docking method, it is difficult to say what the functions of docking are in general.

Whatever the purpose, docking compares the output of two different executable models. In their original paper, Axtell et al. (1996) identify three possible positive outcomes from docking: identity, distributional, and relational. An identity outcome is when the outputs of the two models are indistinguishable. A distributional outcome is when the results of the two models are statistically indistinguishable. A relational outcome is when the results of the two models show that similar changes in inputs cause similar relational changes in outputs. An identity outcome implies the other two, but distributional does not imply relational unless the inputs to the model were considered in the associated statistical test.

The metrics used in docking depend on the simulation being evaluated; however, a common trend is to generate a distribution of a particular system or output variable and compare them. A suggestion for demonstrating the outcomes of the model selection/comparison/recovery process between tested models is to utilize and present results using confusion matrices (Wilson & Collins 2019).

Strengths and weaknesses of docking

Comparing a model output to the simuland can be problematic because the simuland’s output will be affected by the ‘noise’ of the extraneous variables that have been removed in the abstraction process of constructing a model. Since docking typically compares a model of the system with another model of the system, based on your current understanding of the simuland, it is a fair comparison. The comparison process itself can reveal insights into the simuland and its underlying problem – see Collins et al. (2015) for an example from pedestrian evacuations.

There are several issues with docking: groupthink, incorrect error allocation, and boundaries of docking, which are discussed in turn. The most significant aspect is “groupthink” (Janis 1971; Orwell 1949); that is, if two models are based on the same false theory, the fact they produce similar results does not make those models any less false, but it might, inadvertently, improve confidence in those underlying theories. Another issue is that of error allocation (“blaming” the wrong model for docking failure); if the results from the two models are different, it might be assumed that the new model is incorrect and the base model is not; however, it could easily be the other way round. The final issue is defining what constitutes docking; arguably, all validation-supporting methods are model comparison methods (Petty 2010), even if that comparison is to conceptual models. Thus, it could be argued that docking should be limited to only output data comparison.

Empirical/Data-driven

An empirical validation-supporting method is a process of fitting model outcomes with real data from the modeled system or with expected values if the true values are not known. Commonly, these methods are called empirical validation (Klügl 2008). Note that our definition is slightly different from the empirical validation definition found in economics (Moss 2008; Windrum et al. 2007), which focuses on data generation, but the authors will not discuss these differences here.

Empirical validation-supporting methods rely on statistical methods and machine learning to draw conclusions based on the data and with the support of the number of samples included in the data set. Statistical methods are instrumental as they enable the data from the simulation to directly answer specific scientific questions (Kass et al. 2016). Conclusions are commonly supported with p-values, confidence intervals, and other summary statistics to provide clear evidence supporting rejecting hypotheses. In the presence of large amounts of data, empirical models can be generated and compared on specific features of the data in order to identify which model is able to most effectively represent the desired aspects of the real system. For instance, in a comparison of five machine learning and statistical modeling techniques, Kavak (2019) identifies that a Random Forest-based learning model built using social media data is the most effective model for modeling human mobility compared to the alternative models.

Empirical validation-supporting methods are helpful as they often result in statistically supported, quantitative findings that are reproducible. Reproducibility is critical as it allows other researchers to assess the quality of the findings (Peng 2011). These techniques are particularly useful for new practitioners as they can help in directing the proper formation of a question and have standard templates for constructing interpretations. For instance, hypothesis testing requires the explicit creation of a null and alternative hypothesis and results in an outcome that either provides evidence in support of rejecting the null hypothesis or fails to provide evidence in support of rejecting the null hypothesis.

Empirical validation-supporting methods are useful for any circumstances where real data exists for comparison, and the simulation provides data that can reasonably be contextualized as a comparative match to that data. The level of granularity can range from a single expected outcome value to a set of outcome values, from dynamic social network interconnectivities to assessing location sequences taken by thousands of agents.

A simple example of an empirical validation-supporting method would be to compare the output distribution of the simulation to its equivalent empirical distribution from the real-world, using a technique such as a goodness-of-fit measure to determine if they are statistically similar. This assumes that an empirical distribution can be generated based on the real-world. Many events only occur once in the real-world, i.e., Russia’s decision to commit to a full-scale invasion of Ukraine. However, if the simulated scenario is based on a more generic system, then multiple real-world data points are likely to exist, i.e., consumer purchasing decisions in a supermarket.

Data-driven validation-supporting methods rely on the empirical grounding of an ABMS and the real data used to represent a system, agents, or agents’ behaviors at the level of an individual (Kavak 2019). Empirical data can be utilized from a wide range of sources, such as social media for behavior and location data, as well as to identify networking characteristics and authoritative sources for providing demographic and spatial data. Real-world data to use for the validation process can be obtained through Twitter to provide, for instance, identification of tourist visit sentiment (Padilla et al. 2018b) through survey data to identify distributions of characteristics and constraints on decision-making (Robinson et al. 2007), national demographical statistics and spatial data to compare against known historical values (Diallo et al. 2021; Fehr et al. 2021), and observational field studies to inform behaviors (Langevin et al. 2015), among others. Care should be taken to ensure that the appropriate tests are selected when evaluating models built on qualitative versus quantitative data.

Benefits and limitations of empirical methods

A primary approach for assessing the fitness-for-purpose of empirical and data-driven models is to evaluate the fit of the simulation outcomes compared to the real system. Nassar & Frank (2016) identify three advantages of quantitative model fitting: comparison and ranking of competing models based on their fits to empirical data; assessment of differences based on model parameter estimates; and comparison of latent model variables. Many statistical tests provide indicators that help to select the best fit based on a set of criteria, and these criteria can be reported alongside the test result to clearly communicate why the finding was interpreted in the way that it is being presented. All these approaches assume that the appropriate real-world data can be collected for comparison.

Potential weaknesses of empirical validation-supporting methods depend on the type of data collected and the collection methodology; for example, the use of survey data can be representative of a snapshot in time and not correctly capture temporal variabilities, or field data may produce insufficient sample sizes to be generalized to the larger population (Robinson et al. 2007). Statistical tests operate under a set of assumptions, such as a normality assumption, that the data must adhere to in order for the application of the test to be correct, and for the findings to be useful. It is often the burden of the tester to understand what the assumptions are and to take responsibility for checking that the assumptions are not violated. The question of a model’s fit with respect to the empirical data should be viewed within the context of the problem and not merely viewed as a balancing act between overfitting and underfitting (Navarro 2019). Additionally, ethical considerations are involved in determining which groups of people are being represented by the data, such as the majority group members only, and taking care to properly convey interpretations with respect to only the populations that the simulation represents.

Adequately situating between overfitting and underfitting can be daunting for new practitioners. Tests that result in the identification of an error lead to a modification of the simulation to remove the error. As a result, the applied test will not be reproducible using the next, and potentially many subsequent, iterations of the simulation; therefore, to facilitate reproducibility, it is important that the data utilized for each test be stored and that the code used for testing reflect the version of the simulation that produced the erroneous finding. This can add much additional time and data storage requirements to the validation process (Windrum et al. 2007).

Sampling

Exploring the simulation space and running the simulation generates samples of outcome data. Sampling is the process of systematically exploring the simulation space based on a predefined specification of conditions that can be handled using a design of experiments that are defined in advance of testing. Sampling techniques include random sampling, quasi-random sampling, factorial sampling, Latin hypercube sampling, and sphere-packing (Lee et al. 2015; Lin & Tang 2015), to name a few options. The focus of the discussion is on the use of sensitivity analysis as a foundational method for gaining an understanding of the dependencies of a simulation on its input parameters - based on the data collected from the sampling process.

Sensitivity analysis is the study of understanding the impacts of uncertainty in a model’s inputs on the outputs of that model (Archer et al. 1997; Morris & Moore 2015; Saltelli et al. 2021). In simpler language, if the inputs into the model are accurate but not precise, will the conclusion drawn from the outputs also be wrong? How sensitive a model’s outputs are to its inputs depends on the model: if the model’s purpose is to calculate the outcome of a precise mathematical problem, then precise inputs are required; if the simulation model’s purpose is to provide a rough estimate of the evacuation time of a city, say, then there might be some flexibility in the number of evacuees considered (e.g., you would expect similar estimates if the simulation used one million evacuees or 1.01 million evacuees). Sampling is determining which input variables will be used in your sensitivity analysis along with selecting particular values of those variables for the simulation runs, how those input values will be combined, and the number of values that will be considered for each chosen variable. Minimum sample sizes should be determined to support the reliability of analyses based on the data sampled (Lee et al. 2015). Data sizes should be determined in advance to make sure that sufficient data is collected and to prevent the unnecessary collection of too much data.

The reason that imprecise inputs might be used in a simulation is due to the difficulties in collecting data, e.g., using sample estimates of population characteristics. Sensitivity analysis helps determine whether the imprecision of inputs significantly affects the output through systemically changing the values of model input over some range of interest (Shannon 1975). That is, are the input values near a tipping point of the system. To conduct sensitivity analysis, one must consider which systemic sampling approach of the input values will be used. The biologists Marino et al. (2008) advocated Latin Hypercube Sampling (LHS) as an appropriate sampling method for agent-based modeling. Through this approach, the input variables can be assessed to determine whether they are adequately precise to provide confidence in the simulation’s output and the resultant conclusions made from the analysis of these outputs. An overview of sensitivity analysis in ABMS can be found in Borgonovo et al. (2022), who also present a method for dealing with non-parametric parameters in a sensitivity analysis.

When there is reason to be concerned about the precision of any of the input variables, then sensitivity analysis should be considered. This is especially true if it is not clear how the values of those variables will affect the outcomes and resultant conclusions that are drawn from the simulation. Since simulation is commonly used to model complex systems where the input/output relationship is not well understood, the authors advocate that all simulation studies should do some level of sensitivity analysis and sampling.

Benefits and limitations of sampling

One of the strengths of simulation is the ability to investigate “what if” scenarios. Sampling different input variable values and seeing how they affect the simulation’s output provides insight into the system that would be difficult, if not impossible, to achieve when collecting data from a real-world scenario. It could provide insight into whether an input variable really matters, e.g., if a wide variety of values are sampled for a particular input variable, and the resultant output does not change, then this is an indicator that that input does not matter for the underlying problem under consideration for the simulation purpose. This might result in removing the input from future development iterations of the simulation under the law of parsimony (Rodriguez-Fernández 1999). The findings from sensitivity analysis might provide benefits beyond the validation exercise by providing insights into the system, e.g., Collins et al. (2013).

A simple approach to sampling input variables is the One-Factor-At-A-Time (OFAT) approach. OFAT means varying one input variable at a time and observing its impact on the simulation’s output. There are several problems with this approach (e.g., a large number of simulation runs are needed), but the most prominent problem for agent-based modelers is that it ignores variable interdependency. Since ABMS is used to model complex adaptive systems (CAS) (Miller & Page 2007), it is safe to assume that there will be a high level of variable interdependency. Marino et al. (2008) used Latin Hypercube Sampling (LHS) to overcome this problem because the approach varies all inputs simultaneously. LHS also provides similar results as if the complete multi-dimensional combinations had been considered (McKay et al. 1979). Thus, it provides an approach to sampling for sensitivity analysis without requiring an excessively large number of runs to be executed. Since the effects of input variables on the output variable are interdependent, it is not appropriate to use normal correlation analysis; thus, Marino et al. (2008) also discuss the use of the Partial Correlation Coefficient (PCC) with LHS. While LHS is an efficient way to sample a simulation’s input parameter space, even relatively simple simulations can have a vast input parameter space, making the execution of LHS difficult. Oh et al. (2009) have introduced nearly orthogonal LHS as a way to sample vast parameter spaces efficiently. Finally, in order to efficiently highlight the sensitivity of combinations of changes in input parameters, one could undertake an Active Nonlinear Test (Miller 1998) to heuristically search for combinations of inputs that cause large changes in output. These studies are typically undertaken with a genetic algorithm and can be a more efficient way to test sensitivity than brute force methods.

Visualization

Visualizations connect model users to simulation runs by providing relatable and intuitive representations of simulation events, behaviors, and statistical indicators. This connection can provide information on the current state of the simulation by providing details on behaviors, attributes, characteristics, network relationships, and environmental statistics at the system, population, and individual levels. These visualizations can be shown during a simulation run to provide insights throughout its progression, or they can be shown at the conclusion of a simulation run to serve as an executive summary of the events that took place. Visualization is a foundational aspect of agent-based modeling as it provides representations of agents situated within their environments, thus providing the opportunity to glimpse the geospatial connections in relation to the agents. This creates a correspondence between the simulation and the represented real system that aids in maintaining the context of the simulated system in relation to the interactions, geospatial information, and other actions occurring within the simulation to facilitate insightful feedback. Animation can further extend this connection by telling stories about agents, network topologies, or the environment over time.

Visual indicators can provide easily interpretable representations that can be used to support the ‘fitness for purpose’ of individual simulation outcomes, behaviors, boundary constraints, and many other features. This serves as a great starting point for evaluating the simulation’s fitness for purpose, but the authors recommend that these techniques be augmented with additional techniques to provide further quantitative support in favor of a decision. Visual techniques are generally accessible and interpretable across a broad audience base. These techniques have shallow learning curves, do not rely on knowledge of formal mathematics or statistics, can provide insight during simulation execution, and have been found to be more commonly applied in practice (Andersson & Runeson 2002; Padilla et al. 2018a).

Visualization helps to enhance the experience of the runtime representation of the model by graphically representing parameter levels, interdependencies, aggregate and individual-level value distributions, and relationships among model components (Wenzel et al. 2003). Using representative graphics to more closely match the components modeled from the real system can be useful in assessing the structural layout of the simulation. For example, as an initial check, different visual representations for agents can be used to check that both the simulated components initialize to their correct locations and that interactions are occurring between the expected combinations of agents.

Numerous visualization options exist to convey information and facilitate insight across the spectrum of layers involved in the validation process. This spectrum includes showcasing characteristics of the environment, the agents at both the individual and population levels, the agent-environment connections, as well as the network topology configurations linking the agents for interactions and communications. Visual techniques have high value in facilitating insights as they can be tailored based on the intended user audience, message, and model context. Additionally, these techniques allow for the qualitative comparison of behavioral patterns between models (Lee et al. 2015; Wilson & Collins 2019).

Visualization is a starting point for gaining initial insight into the operation of a simulation that is generally simple to apply and interpret during runtime. Animation and graphics serve as intuitive approaches for observing and comparing simulated behaviors (Sargent & Balci 2017; Wilson & Collins 2019) and help to increase the simulation user’s confidence by viewing the simulation’s execution (Hurrion 1978; Palme 1977). Watching the execution of the model can help in identifying discrepancies between the simulation and its specification (Balci 1998), but this can be a very attention-demanding process (Crossan et al. 2000; Henneman 1952; McCormick 1957). This is accomplished in part by the ability to place the simulation into context by collocating different types of data for the purpose of facilitating exploration and generating explanations into the inner workings of the simulation (Kirby & Silva 2008; Vernon-Bido et al. 2015).

Animation and operational graphics provide dynamic representations of model components’ behaviors throughout execution. These techniques are effective at identifying problems within the model logic by conveying the model’s operational behaviors graphically over time (Balci 1998; Melamed & Morris 1985). Performance indicators are utilized to ensure that the performance measures and the model behave correctly. Animation can include visually depicting how levels and rates change over time, highlighting paths followed, indicating periods of waiting, and showcasing resource availability (Kleijnen & van Groenendaal 1992). Additionally, animation allows for the graphical representation of internal and external behaviors, helps modelers visually identify errors in the implementation (Balci 1998; Xiang et al. 2005), and assists in communicating stochastic outcomes to decision-makers (Bell 1989). Operational graphics provide visual insights into dynamic behaviors such as queue sizes and the percentage of busy resources over time (Sargent 2013). Operational outcomes can be examined for correlations, statistical measures, and linear relationships if an appropriate amount of data is available (Sargent 1996, 2005). For reproducibility, and assessing statistical validity when applicable, the raw data and any intermediary data manipulation, such as histogram binning, behind each visualization should be stored (Sandve et al. 2013).

Visualization techniques can be separated into four categories based on the level of insights that they provide within ABMS, namely: (1) characteristics of the environment or the agents, (2) agent behaviors, (3) agent-environment interactions, and (4) network topologies. An initial category of visualizing ABMS information comes in the form of characteristics of the environment and the agent. Agent and environment characteristics exist at both the individual and global levels, may be desirable to display throughout a run, and include items such as geolocation, current resource levels, historic resource levels, traits values (i.e., age, weight, etc.), and interaction histories. Common visual aids for presenting information within a simulation include numerical indicators, scatter plots, line plots, histograms, box plots (Sargent 1996), bar charts, data maps, time-series plots (Forrester 1961; Tufte & Graves-Morris 1983), and polar diagrams (Smith et al. 2007). Spatial plots are a basic plot type that displays agents based on their x-y coordinates and is a default representation feature provided by agent-based modeling platforms. Additionally, visual representations include icon-based displays, dense pixel displays, and stacked displays (Keim 2002). Local and global geographic placements can be represented using cartogram layouts for global shape functions or pixel maps for local placement (Panse et al. 2006). Care should be taken in altering the level of granularity (i.e., zooming) within the environment so information is not lost as an effect of the type of geographical representation used.

Agent behaviors are another primary category of ABMS visualization. For platforms that allow for the creation of decision sequences in the form of state charts, state chart tracing is an effective visual technique. State chart tracing is the act of highlighting the active state, the most recently executed transition, and the upcoming transition (Borshchev 2013). Representing agents’ histories can be an effective route towards assessing ‘fitness for purpose’ of those behaviors (Axelrod 1997; Diallo et al. 2018). Narratives can be generated that convey key events, decisions, or interactions based on the history of an agent’s progression over time.

Another key component that can yield insight into the ‘fitness for purpose’ of the ABMS is the agent-environment interactions within the simulation. This category is comprised of the elements of the simulation that have some dependency between an agent and the environment. Agent-environment interactions can include behaviors or interactions that are based on:

- Resources available within the environmental location, such as the Sugarscape model (Epstein & Axtell 1996) or public health models that explore calorie availability within a person’s situated environment (Diallo et al. 2021),

- Shared physical locations between agents, such as predator-prey models or ethnocentrism models (Jansson 2013), or

- Structural constraints of the environment, such as impassable terrain (Tolk 2012).

Examinations of the behaviors occurring between the agents and environment, i.e., the agent-environment considerations, are explorable through the use of pattern-based visualization methods, such as cluster heat maps (St-Aubin et al. 2018; Wilkinson & Friendly 2009), and location tracking (Kim et al. 2019; Züfle et al. 2021). Cluster heat maps can yield insights into concentrations of agents based on the intersection of selected agent characteristics and the agents’ associated geolocations at specific points in time (Diallo et al. 2021; Lynch et al. 2019). Basic plots can be utilized to demonstrate the differing agent cooperation strategies for interacting with their ingroup members only, outgroup members only, all members, or no other members. For instance, Jansson (2013) utilizes plots to display that the spatial assumptions of the model play a critical role when exploring differences between ingroups and outgroups for ethnocentrism models.

ABMS also includes the ability for networks to exist among the agents. This can be represented with all agents connected in a single network but with a configuration of varied links and link weightings connecting the agents. Agents can also be split into two networks of either ingroup or outgroup members (Jansson 2013; Shults et al. 2018b). Alternatively, networks can be derived from real social network data (Kavak 2019). Networks can vary in connectivity from zero to many connections, ring connections, mesh connections, tree layouts, and many other options. Visual methods should help in communicating the characteristics of the network topology, its nodes and links, and its functional, causal, and temporal components (Marai et al. 2019). Network visualizations should provide information on the individual agents, as nodes within the network, their interconnections, and global structure. Network connections are instantiated based on real social media data or created dynamically based on group memberships and associations made throughout a simulation, such as found ingroup ritual formation models (Shults et al. 2018a; Shults et al. 2018b). Small-world networks prescribe connectives to a circular lattice structure with agents populating the circle and connected to their nearest neighbors (Watts & Strogatz 1998). The type of network topology used should be tested to ensure that results occur as expected and to determine if the results are sensitive to changes in the network topology.

Benefits and limitations of visualization

Visualizations for conveying insight have been shown to result in more confident decision-making, increase efficiency by decreasing simulation analysis time, and correlate with correct solutions (Bell & O’Keefe 1994). Additionally, a recent survey found visual inspection to be the most commonly employed approach for ABMSs, with over 38% of respondents supporting its use (Padilla et al. 2018a). Visual feedback is well suited for conveying spatial information, relationships, time-ordered data, and history (Gaver 1989; Henneman 1952). Plotting residual values as part of assessing a model’s fit and visualizing patterns within replications can contribute to effective statistical practice (Kass et al. 2016). Pairing visual feedback with other forms of sensory feedback can enhance the validation process by providing attention-grabbing features that aid in directing the user towards the location and timeframe of validation points of interest (Gaver 1989) as well as reinforcing training and learning objectives (Crossan et al. 2000).

Weaknesses of visual techniques can be categorized from two perspectives: the user interpreting the feedback, and the artifacts comprising the visualization. Challenges pertaining to the user include that the feedback is attention-demanding, leading to fatigue and that they can face scalability limitations depending on the analytical scope. Additionally, the repetition involved with the manual inspection of visualizations can be error-prone (Ahrens et al. 2010). Challenges from the perspective of the visualization include that too much information can hurt the ability to interpret the results (Rougier et al. 2014), complexities and misrepresentations of magnitudes can easily impact proper readability (Kirby & Silva 2008; Vernon-Bido et al. 2015), and visualizations that rely on subjective interpretation can lead to differing interpretations from users (Rougier et al. 2014). It is important to make sure that visualizations and the data used to create the visualizations are free from bias and are not inadvertently causing misleading inferences based on their construction, such as the cutting off portion of the y-axis to zoom in on the area of interest (Huff 1954). To facilitate reproducibility the raw data and code utilized to generate visual artifacts should be included alongside the visualizations (Angus & Hassani-Mahmooei 2015).

Bootstrapping

A simulation model can be used to produce a statistically accurate empirical distribution of output events for a system, whereas history might only provide a single event. This disconnect makes it difficult to compare reality to a simulation’s output since you are comparing a distribution with a single point. To overcome this issue, it is common to construct confidence intervals using the simulation’s output and see if the historical data point falls within the confidence interval. However, such an approach is problematic because it assumes the historical data point represents a “typical” output of the system. If the historical data point is, itself, a statistic, it might be influenced by outliers or anomalous data points. Assuming the historical data point is a statistic, Champagne & Hill (2009), operational researchers, advocated for a statistical bootstrapping approach as an empirical validation-supporting method for ABMS. It had previously been advocated for use in the validation processes of simulation in general by Cheng (2006).

Bootstrapping is a process in which sample data is itself sampled (with replacement), called resampling, to generate a new version of the statistic of interest (usually the mean). Bootstrapping has been shown to reveal information about the population data, which cannot be found by using statistics alone (Cheng 2006). Since the sample is resampled multiple times, empirical distributions can be generated for comparison with the simulation’s output distribution, although Champagne & Hill (2009) focused on confidence intervals.

Champagne & Hill (2009) used bootstrapping to generate a confidence interval of the mean number of monthly sightings (and kills) of Nazi U-boats in the Bay of Biscay during World War II. This was compared to the confidence intervals generated by an ABMS of the U-boat sighting scenario. Due to the non-gaussian distribution being found, the authors further conducted non-parametric sign tests, which compared bootstrapped means with simulation means. Using this approach, they concluded that the simulation was sufficiently accurate for its purpose.

Benefits and limitations of bootstrapping

The strength of bootstrapping is that it allows an empirical validation approach even when there is limited real-world data. This could be especially important in fields that have very few data points to compare to their simulation, e.g., prehistorians or archaeologists. Another strength is that the process of bootstrapping provides new information about the real-system, which can be useful in any validation exercise or even in further development of the simulation.

The first weakness of bootstrapping is that it is not widely accepted in the scientific community, which might decrease the trust in its outcomes by the stakeholders (thus missing the point of the validation exercise). The second weakness of bootstrapping is that it can produce multiple datasets that are used to make multiple hypotheses, which are then incorrectly incorporated into a single hypothesis. In conducting multiple statistical hypothesis tests, there is a problem when making conclusions that relate to all the tests; this is known as the multiplicity problem (Miller 1981). For example, if a standard error rate, \(\alpha\), of 5% for a Type I error is desired; then when conducting a group of multiple statistical comparisons, the overall chance of Type I error, \(\overline{\alpha}\), called the family-wise error rate, can be calculated using the following formula for the error rate: \(\overline{\alpha} = (1 - \alpha)^{n}\). The implication of using the family-wise error rate, which is appropriate for a large group of statistical hypothesis tests, is that the individual error rate dramatically decreases for a large group of tests. This dramatic decrease is due to the need to compensate for the increased chance that one (or more) of the considered data sets observes a randomly occurring outlier result. As such, it is imperative to limit the number of hypotheses considered in the analysis. Champagne and Hill did not consider pairwise family statistics.

Causal analysis (including traces)

Causal analysis techniques explore the chains of events producing simulation behaviors to help differentiate between acceptable behaviors arising from explainable simulation conditions versus unacceptable behaviors resulting from errors in code (verification) or model design (validation). As a category, causal analysis techniques are beneficial because they provide (i) reproducible outcomes, (ii) objective evaluations based on clear mappings to expected outcomes or defined specifications, (iii) traceability between specifications and outcomes, and (iv) vary in level of difficulty so that they are accessible for novices to advanced users.

These techniques are useful when trying to trace, describe, and explore the occurrences and sources of simulation behaviors, with the occurrence being the observable representation of a behavior and the source being the explainable cause of that behavior. Causal analysis aids in building support for the components of the simulation that are consistently or reliably contributing to the behavior. This helps to build support and confidence in understanding how a behavior is arising. It also provides transparency in showing that the behavior is generated through the correct means using an appropriate set of contributors. Techniques that support causal analysis include statistical debugging (Diallo et al. 2016a; Gore et al. 2015, 2017), traces and execution monitoring (Whitner & Balci 1989; Xiang et al. 2005), model logging (North & Macal 2007), and state transition analysis (Borshchev 2013). Each of these techniques provides a variety of insights to support the building up of ABMS credibility with the simulation’s stakeholders. However, statistical debugging should be the focus as it provides reproducible, replicable, and transparent measures for evaluation.

Gore & Reynolds (2010), computer scientists, created a method to hypothesize the cause of emergent behaviors in ABMS. They argue that unexpected behavior in a simulation needs explanation; this includes emergent behavior, as the behavior may be reached because of implementation or model design errors. Hypotheses pertaining to simulation behaviors are developed using a semi-automated method utilizing causal program slicing (CPS). This approach assumes that errors can be quantified and mapped to their source locations. If unexpected parts affect the variable, then the hypothesis is rejected, the model deemed invalid, and the developer can explore those parts of the code that do (or do not) affect the emergent behavior variable. The advantage of this approach is that it gives insight into the simulation model’s inner workings; the downside is the assumption that the emergent behavior, and its explanation, can be quantified.

The statistical debugging process is facilitated using (i) a data set (i.e., an agent’s log file, simulation output data, test cases, etc.) along with (ii) standalone and pairwise variable combinations with static and dynamic partitions (Diallo et al. 2016a; Gore et al. 2015). The data set needs to be in a row-column representation with variables or factors on each column, and samples or observations on each row. Variables are the items being evaluated within the simulation and can capture a variety of viewpoints from the ABMS, including: individual agent characteristics, such as an agent’s weight or health status; environment characteristics, such as terrain type within a cell; agent population characteristics, such as average happiness; agent-environment characteristics, such as the concentration of agents in a certain state at a specific geolocation; network characteristics, such as the number of links to a specific node; communication characteristics, such as the volume of messages between agents at a specific time; and any other items that are of interest for evaluating the simulation behavior. Each observation can be considered as the value of the specific variable at each time step, thereby reflecting the time-dependent status of the variable at each collected time step. Ultimately, each of the variables in the output set is compared against a single outcome of interest to build support for their consistent contribution, or lack of contribution, to the occurrence of that outcome.

For evaluation, variables reflect the variable names within the data set, and baseline predicate values are dynamically informed by capturing their mean values and standard deviations within the dataset as well as a static value at zero. Static predicates explore the outcome space for absolute values based on stationary values, such as \(Agent \; Age > 0\), i.e., reflecting that age should always be positive. Elastic predicates are based on the central tendency and variance of the variable, which is dynamically captured at runtime. In this case, using mean values based on the observations included within the data set along with their corresponding standard deviation values. This allows for an exploration based on where a bulk of the data is expected to fall under the assumption of a normal distribution. The final piece in the evaluation is that each observation, or row, is categorized based on the behavior being explored; therefore, the observation is considered as failing (remember that the original goal is to specifically trace the contributors to suspect behaviors) if the behavior of interest is present alongside its occurrence. Only the counts of the occurrences of the failing cases and the total number of observations are considered for the empirical evaluation of the results.

Interpretation is provided through the combination of correlation, coverage, and suspiciousness metrics for each predicate (Gore et al. 2017; Shults et al. 2018a). The calculation of each metric is dependent upon each predicate’s associated sample size and the sample size of the behavior of interest. The behavior of interest that is being explored is represented as a quantitative value or value range of some variable interpreted as a pass/fail outcome. For instance, assume the behavior being explored is an agent population’s average weight; the behavior is causing concern as the average weight is consistently surpassing the expected weight values given the historical data. Therefore, the average weight (also included as a column in the output file) is selected as the behavior of interest, and a range is specified as the feasible region for the evaluation, such as \(Population \; Weight > 600\). Each predicate will then be evaluated with respect to its number of co-occurrences alongside \(Population \; Weight > 600\) being true (considered a failing observation case) or false (considered a passing observation case as this would reflect expected simulation behavior).

The correlation for a predicate statement, represented as \(correlation_{s}\), represents the total occurrences that the predicate was present alongside the behavior of interest, i.e., a failed observation, over the total number of occurrences where the predicate was true, as shown in Equation 1.

| \[ correlation_{s} = \frac{\# \; of \; failing \; observations \; including \; s}{\# \; of \; observations \; including \; s}\] | \[(1)\] |

The coverage for a predicate statement, represented as \(coverage_{s}\), represents the total occurrences of the predicate alongside the behavior of interest when only looking at the subset of the output data that included the failing observations for the behavior, as shown in Equation 2.

| \[ coverage_{s} = \frac{\# \; of \; failing \; observations \; including \; s}{\# \; of \; failing \; observations}\] | \[(2)\] |

The suspiciousness for a predicate statement, represented as \(suspiciousness_{s}\), is a combined metric for \(correlation_{s}\) and \(coverage_{s}\). This reflects the situation that the predicate is true in instances where the behavior of interest is present, as shown in Equation 3.

| \[ suspiciousness_{s} = \frac{2 \cdot correlation_{s} \cdot coverage_{s}}{correlation_{s} + coverage_{s}}\] | \[(3)\] |

As an example, assume that there are 100 observations, that a behavior is being explored that manifests in 25 of the observations and that the predicate \(S = (Agent \; Age > 0)\) is one of the predicates being evaluated. Assuming that \(S\) is always true, then there are 100 observations where this predicate exists within the data set. This includes the 25 observations where an outcome of interest occurs as well as the 75 where the outcome does not occur. As a result, \(S\)’s correlation is 0.25 (25/100), its coverage is 1.0 (25/25), and its suspiciousness is 0.4 ((\(2 \times 0.25 \times 1.0\))/(\(0.25 + 1.0\))). This yields insight supporting that Agent Age is not a likely contributor to the overall behavior being explored even though its coverage alongside the behavior is high (e.g., it is observed every time the behavior of interest occurs). More details can be found in Shults et al. (2018a).

The statistical debugging concepts have been extended for simulations in general (Gore et al. 2015), formed into a general-purpose V&V Calculator tool for use by experts and non-experts (Diallo et al. 2016a), and further extended with respect to tracing agent-based models (Gore et al. 2017). This approach has been applied to explore generative ABMs (Shults et al. 2018a) and in a validation exercise with tens of thousands of rows of output data for evaluation (Diallo et al. 2016b; Diallo et al. 2021). A freely available, web-accessible version of the V&V Calculator is available at: https://vmasc.shinyapps.io/VandVCalculator/. This tool allows for increased flexibility in creating predicate combinations and exploration spaces based on the user’s knowledge of the simulation space, the simulation context, and what their expectations for a simulation’s behaviors should look like.

Benefits and limitations of causal analysis

This approach can be used to quickly sort a large output space to identify conditions that frequently co-occur alongside unexpected outcomes (Diallo et al. 2016b). Since the generation of elastic predicate values are based on summary statistics from the actual data contained in the output set, the central tendency (i.e., the mean) and variation (i.e., standard deviation) of each variable changes each time the approach is applied, assuming the simulation is stochastic. This allows for effective application on multiple samples pulled from a simulation or for the aggregation of samples pulled from multiple runs. The suspiciousness metric helps to mitigate the impact of cases that have perfect correlation or coverage as a result of being present within the entire output set.

The predicate-based form of the analysis places a larger burden on properly structuring or wrangling the simulation data. This can add a significant volume of overhead in properly structuring files for evaluation. Large volumes of trace data can be difficult to analyze, incur large amounts of overhead processing, and can be burdensome to interpret (Courdier et al. 2002; Gore et al. 2017; Xiang et al. 2005). Additionally, the semi-automated measures utilized within the publicly accessible tool are setup to explore elastic predicates under an assumption of normality. This will not be a good fit for data affected by outliers, or that should be explored with assumptions that utilize the median of the data. Whether the data meets the assumptions should be manually explored by the user. Finally, these predicates can suffer from confounding bias due to logic statements (i.e., IF-THEN-ELSE) within the source code of the simulation and a lack of balance in the inputs for the simulation runs (Gore & Reynolds 2010, 2012).

Inverse generative social science

Inverse Generative Social Science is a broad method that shifts the analysis from the data a simulation produces to the agents the simulation contains. This is a way to create agent-based models that behave “well enough” with respect to some referent. It is a valuable technique in that it allows one to explore more than one agent-based model and expand to many more that address the question at hand. It may be a viable validation-supporting method if (a) you are able to a priori specify all possible individual behaviors an agent may use, and (b) have a referent or can define a function to articulate what a “good” run is.

Inverse Generative Social Science (iGSS) is an emerging field made up of various techniques that attempt to “grow” the rules an agent uses in an agent-based model (Epstein 2023). In this sense, the agents’ rules are the output rather than some collections of data. The motivation for iGSS is to address a common simulation critique: that the created simulation is only one possible configuration that may give rise to a particular phenomenon. IGSS attempts to discover all agent configurations giving rise to a particular phenomenon (www.igss-workshop.org). Examples of techniques that fall into iGSS include Evolutionary Model Discovery (Gunaratne et al. 2023; Gunaratne & Garibay 2020), Rule Induction (Rand 2019), Computational Abduction (Ren et al. 2018), and Inductive Game Theory (DeDeo et al. 2010).

Evolutionary Model Discovery (EMD) is the focus here as it is one of the most mature and accessible of the iGSS techniques, see Gunaratne & Garibay (2020) and https://github.com/mitre/strategy-mining. In essence, EMD uses a Genetic Program (GP) to automatically create rule sets for the agents to use. The simulation is then run, output data is collected and post-processed into a score that is used within a fitness function to evaluate the performance of the automatically created agent-based model. Well-performing rule sets are then mutated and recombined to create the next generation of simulations. This process is repeated until a population of simulations are discovered that perform well, as defined by the user, in comparison to the referent.

Given the purpose of a validation exercise, how then does iGSS (and specifically to this discussion EMD) relate to the validation process? First, the process requires a referent against which to compare. Therefore, the process itself includes a “built-in” validation-supporting method in the form of the fitness function used by the GP. Upon completion, the user will be able to make quantitative claims about how well the resulting simulations relate to the referent. This process helps to build credibility, between the simulation and its stakeholders, by producing many simulations of the system in question and, thereby, decreasing the chance that any given simulation is an outlier representation of the system in question.

Benefits and limitations of inverse generate social science

The main strength of iGSS is that it produces a set of ABMs that all correspond to some referent to a specified degree. This provides an automated process to allow one to make meaningful statements about the uniqueness (or lack thereof) of the initially specified model. This is important as there may be many potential ABMs that correspond to a referent.

There are some weaknesses to this approach. Two of them are potentially significant. The first is that all potential behaviors must be specified a priori. The EMD system will only find combinations of existing behaviors and will not create new behaviors. Secondly, one must analyze potentially very large collections of agent rules and reason about them. This is a nontrivial endeavor. From a validation perspective, this provides one with the ability to get out from under the critique of a single model with no sense of how unique it may be but then presents the opposite problem of needing to make sense of a potentially vast collection of ABMs.

Role-playing

Accurately modeling human behavior and human decision-making is a current challenge for ABMS (An et al. 2020; Cheng et al. 2016). Human behavior is heavily dependent on unaccountable knowledge like emotions and trust. As such, it is reasonable to question the appropriateness of any human behavior represented in an ABMS. Collecting real-world data on human behavior, within the simuland, is a difficult and time-consuming task; in large complex systems, which ABMS is attuned to modeling, this task is impossible. As such, the authors argue that empirical validation-supporting methods do not apply for simulations focused on human behavior, and alternatives must be used. Alternatives could include using surveys or role-playing. Ligtenberg et al. (2010), geographers, say that surveys are of limited use as stated preferences and not actual preferences. They suggest that real human behavior needs to be observed and collected, for comparison with the simulations output and dynamic behavior. They suggest that role-playing could be used to do this and outline an approach for using role-playing in the validation process. Their approach to obtaining data, for use in the validation process of a particular simulation, is to have humans role-play the agents; this includes giving the humans the same options, goals, etc. Their approach involves creating a roleplaying scenario that emulates the computerized agents and letting groups of human participants “play” the scenario out. The outputs from the human subject experiment can be compared to the outputs of simulation (or part of it, if a large simulation is being used). The resultant behavior that the human participants display can be compared to those generated by the computerized agents. They provided a case-study of their approach, which was used to show fitness for purpose of an ABMS of land use planning in the Netherlands.

Role-playing can be employed in the validation process in many different forms, for example, to support the training of stakeholders to explain model content, to facilitate stakeholders’ assessments of model assumptions, and to lead the exploration of model theories (Barreteau, Bousquet, & Attonaty, 2001). Roleplaying has been used within the validation process of several ABMS.

There are different ways that games can be used in the validation process (Szczepanska et al. 2022). A variation on role-playing games with ABMS, is participatory simulation. Participatory simulation is when human participants play the role of one of the computerized agents (Castella et al. 2005). In a recent paper, Grigoryan et al. (2022) used participatory simulation to determine if the rates of finding a solution, to a hedonic game called the glove game, were similar between the computerized agents and human behavior. This was achieved by having a human participant play the role of a single agent in the simulation. The intention of this comparison was to help show fitness for purpose of a modeling approach to strategic group formation that had been developed (Collins & Frydenlund 2018; Vernon-Bido & Collins 2021).

There are a number of ways that the data collected from the role-playing trials could be used in the validation process. These can be categorized as myopic or hyperopic comparison data. Myopic comparison data relates to decision points that the roleplayed agents make during the scenario, i.e., which decisions were made. Myopic comparison data at the micro-level can be used to compare the frequency of similar choices between the role-playing humans and the simulated agent. Collins et al. (2020) consider this direct comparison in a participatory simulation. Hyperopic comparison data looks at the final outcomes of the scenario, i.e., whether the same emergent behavior is observed in both. Hyperopic comparison is at the macro-level and might not even be possible to collect if only a small subset of the simulated scenario is considered in the roleplaying scenario.

Benefits and limitations of role-playing

Roleplaying methods are not exclusive validation-supporting methods within ABMS. Barreteau et al. (2001) advocated that roleplay could be used to generate input data on human behavior. Role-play scenarios could be generated for particular instances of the simuland and the human behavior, displayed by the participants, could be recorded and used to generate input models in the ABMS. However, creating input scenarios without bias would be a difficult task due to what Salt (2008) calls the Jehovah problem in simulation development; that is, a simulation developer is, and always will be, biased.

Creating the roleplaying scenario can be difficult and must be done in such a way that the human subjects are really able to understand it so that they can effectively play the roles. Ligtenberg et al. (2010) found that the terminology used in the roleplaying scenario can cause confusion and it is better to be precise with the terminology over being accurate. For example, Collins & Etemadidavan (2021) had to provide extensive training for the mechanics of the game they used in their roleplaying effort.