Scott Moss (2008)

Alternative Approaches to the Empirical Validation of Agent-Based Models

Journal of Artificial Societies and Social Simulation

vol. 11, no. 1 5

<https://www.jasss.org/11/1/5.html>

Received: 24-Jun-2007 Accepted: 12-Nov-2007 Published: 31-Jan-2008

Abstract

[s]ome AB economists, engaged in qualitative modelling, are critical of the suggestion that meaningful empirical validation is possible. They suggest there are inherent difficulties in trying to develop an empirically-based social science that is akin to the natural sciences. Socio-economic systems, it is argued, are inherently open-ended, interdependent and subject to structural change. How can one then hope to effectively isolate a specific 'sphere of reality', specify all relations between phenomena within that sphere and the external environment, and build a model describing all important phenomena observed within the sphere (together with all essential influences of the external environment)? In the face of such difficulties, some AB modellers do not believe it is possible to represent the social context as vectors of quantitative variables with stable dimensions…. (Windrum et al., 2007, paragraph 4.2)

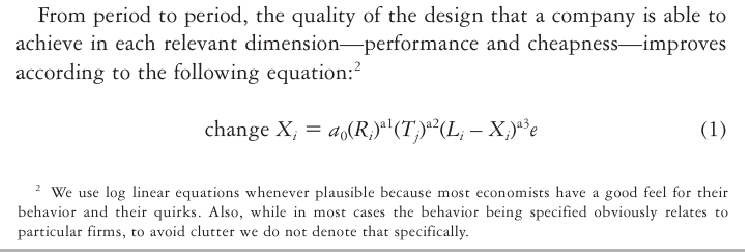

Figure 1. The strongly quantitative approach; reproduced from Malerba et al. (1999, p. 15)

To deduce real assumptions about sellers, we interviewed 40 grocery stores in the Seoul metropolitan area that sell perishable goods such as vegetables, fish, fruit, and dairy products. The store managers (or owners) were asked questions regarding list prices, their experience negotiating the price of perishable goods with buyers, and the appropriate range of product freshness levels, among other things. Thirty buyers (Seoul housewives) were also selected, and were asked to describe the utility factors they considered when negotiating with sellers. Based on these interview results, we established assumptions about sellers and buyers which we incorporated into our …experiment… (Lee and Lee, 2007, paragraph 4.5)

The SSE [the artificial stock exchange] operates in the following four steps: (1) every investor in the market receives a personal signal (information on the next period's expected price) and observes the current market price, (2) depending on the confidence of the investor, the personal signal is weighted to a greater or lesser extent with the signal that neighbouring agents have received, and based on this an order is forwarded to the stock market, (3) a new market price is calculated based on the crossing of orders in the SSE's order book, and (4) the agent's rules can be updated according to their results. (Hoffmann et al., 2007, paragraph 2.3)
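The four steps quoted above can be read as a single simulation loop. The following is only a minimal sketch under stated assumptions, not the implementation of Hoffmann et al.: the ring neighbourhood, the Gaussian signal around a fixed `fundamental` value, the one-unit buy/sell orders, the price adjustment from net order flow (used here in place of a real order book) and the confidence update rule are all hypothetical choices made for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Investor:
    confidence: float      # weight on own signal, in [0, 1] (assumed form)
    signal: float = 0.0    # personal signal: expected next-period price
    order: int = 0         # +1 = buy one unit, -1 = sell one unit


def step(investors, price, rng, fundamental=100.0, noise=5.0, impact=0.05):
    """One period of the four-step cycle; all parameters are illustrative."""
    n = len(investors)
    # (1) each investor receives a personal signal and observes the price
    for inv in investors:
        inv.signal = fundamental + rng.gauss(0.0, noise)
    # (2) weight own signal against the neighbours' signals, then place an order
    for i, inv in enumerate(investors):
        neighbours = (investors[i - 1].signal + investors[(i + 1) % n].signal) / 2
        expected = inv.confidence * inv.signal + (1 - inv.confidence) * neighbours
        inv.order = 1 if expected > price else -1
    # (3) new price from net order flow (a stand-in for order-book crossing)
    excess = sum(inv.order for inv in investors)
    new_price = price * (1 + impact * excess / n)
    # (4) update rules: raise confidence if the order direction paid off
    for inv in investors:
        paid_off = inv.order * (new_price - price) > 0
        delta = 0.05 if paid_off else -0.05
        inv.confidence = min(1.0, max(0.0, inv.confidence + delta))
    return new_price


def run(periods=50, n_investors=20, seed=1):
    """Run the loop with a seeded generator so results are repeatable."""
    rng = random.Random(seed)
    investors = [Investor(confidence=rng.random()) for _ in range(n_investors)]
    price, history = 90.0, []
    for _ in range(periods):
        price = step(investors, price, rng)
        history.append(price)
    return history, investors
```

Every quantity in this sketch is numerical, which is the point made in the surrounding discussion: even a socially embedded investor is reduced here to a weight, a signal and an order.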

Note that even the papers that specified agents on the basis of interview and/or survey data converted their qualitative data to numerically defined utility functions and the like to drive the agent behaviour. This can present problems when the results of the model are sensitive to the particular form and parameterisation of the functions (Edmonds, 2006). In response to this criticism, Deffuant (2006) argued that, in the face of such sensitivity, parameters and functional forms should be chosen to give the required result. If the required result is in conformity with some macro level social statistics, then Deffuant's response is what Windrum et al. would have offered.

Certainly there are those … who have taken the step of accepting they are constructing and analysing synthetic artificial worlds which may or may not have a link with the world we observe (Doran 1997). Those taking this position open themselves to the proposition that a model should be judged by the criteria that are used in mathematics: i.e. precision, importance, soundness and generality. This is hardly the case with [agent based] models! The majority of [agent based] modellers do not go down this particular path. (Windrum et al., 2007, paragraph 4.3)

[L]ogical proofs embody certain constructions which may be interpreted as programs. Under this interpretation, propositions become types. …[I]n different contexts this is in fact an isomorphism: in a certain fragment of logic, every proof describes a program and every program describes a proof. (Pfenning, 2004, emphasis added)

In fact, the term validation is no longer adequate, as many interactions are beyond such an experimental approach. Authentication seems a better approach, as it requires forensic abilities and witnessing.

For example, we have crosschecked the simulated cropping pattern with the ones coming from remote sensing mapping. From 20 repetitions, the average proportions of the different crop types overlap with the actual ones with 80% accuracy. We have also compared the average simulated yields with those provided by local Thai Agencies. In the case of rice, soybean and onion, mean yields are simulated with, at least, 70% accuracy. From an economic viewpoint, the emergence of a small group of wealthy "entrepreneurial" farmers appears to correspond well with the actual situation emerging in the catchment. The continuous impoverishment of the Poor category is less realistic but it has already prompted further consideration, along with our Thai colleagues, about the role of credit in relatively poorly performing households.

Concerning the Farmers profiles, part of the initial material was derived from field surveys. But it is crucial to have direct feedback from the stakeholders themselves regarding the social and individual rules implemented (Barreteau et al., 2001). This recognition by the concerned actors is the best-known authentication …. (Becu et al., 2003, p. 329)
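The quantitative cross-check in the passage above, averaging crop-type proportions over repeated runs and comparing them with observed proportions, might be computed along the following lines. This is a sketch only: the passage does not define its accuracy measure, so the overlap score used here (the sum over crop types of the smaller of the simulated and observed shares) is an assumption, and the function names and any numbers passed to them are hypothetical.

```python
def mean_proportions(runs):
    """Average each crop type's share of land over repeated simulation runs.

    `runs` is a list of dicts mapping crop type to its proportion in one run.
    """
    crops = runs[0].keys()
    return {crop: sum(run[crop] for run in runs) / len(runs) for crop in crops}


def overlap(simulated, observed):
    """Assumed overlap score: shared mass between two sets of proportions.

    Equals 1.0 when the proportions coincide and 0.0 when they are disjoint.
    """
    return sum(min(simulated.get(crop, 0.0), share)
               for crop, share in observed.items())
```

With made-up inputs, two runs giving rice shares of 0.5 and 0.7 average to 0.6; against an observed rice share of 0.5, rice contributes 0.5 to the overlap score. A score of this kind says nothing about whether stakeholders recognise the rules that generated it, which is the sense of authentication stressed in the quotation.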

Essentially, a model is more concrete as it captures in more detail the entities contributing to the social process and it is more isolated as it reduces the set of such entities in order to concentrate on particular causal mechanisms. Windrum et al. identify two "open questions" in this regard. These are:

When models are embedded in a participatory planning process, these questions are of little relevance. The entities to be included and how they are to be included (whether as agents or as patterns of interactions amongst agents) are issues for discussion with the planners and perhaps other stakeholders. It is the views of these principals that determine whether "the mechanisms isolated by the model resemble the mechanisms operating in the world". It is also very unlikely that, in these circumstances, stakeholders would knowingly agree to "assumptions that contradict the knowledge we have of the situation under discussion".

This is not an issue for a modeller designing and validating a model with stakeholder participation. The stakeholders and modeller agree on the forces that are to be isolated and then assess the outputs from simulation experiments with the model. If the outputs are not deemed plausible or the numerical outputs carry statistical signatures that are inconsistent with real social statistics, then one consequence might be a reassessment of the "real forces" (i.e., forces considered to be real by participating stakeholders). The identification of the important forces is a part of the discussion and is an important element in the construction of policy scenarios.

"Apriorism is a commitment to a set of a priori assumptions. A certain degree of commitment to a set of a priori assumptions is both normal and unavoidable in any scientific discipline. This is because theory is often developed prior to the collection of data, and data that is subsequently collected is interpreted using these theoretical presuppositions." (Windrum et al., 2007, paragraph 2.3) The distinction between "strong" and "weak" apriorism is that the latter "allows more frequent interplay between theory and data."

Embedding the modelling process in a policy process with stakeholder participation always puts evidence before theory. There is no commitment to a priori assumptions. All assumptions, whether drawn from theory or from prior modelling experience, are provisional and subject to abandonment or modification in the face of stakeholder-provided or other evidence. Windrum et al. would probably consider this to be weak apriorism. However, it is not an option but is rather in the very nature of companion modelling.

This is really an issue for economists since "[t]he neoclassical [economic] paradigm comes down strongly on the side of analytical tractability, the [agent based] paradigm on the side of empirical realism" and we are all agent based modellers.

The issue here is that "different models can be consistent with the data that is used for empirical validation." Econometricians call this the identification problem and philosophers of science call it the underdetermination problem. Econometricians address the problem by adding equations to their models until the rank of the coefficient matrix is equal to the number of independent variables. The equations are based on economic theory.
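A toy illustration of the underdetermination problem may help. In the sketch below, two structurally different models fit the same observations exactly and so cannot be separated by goodness of fit, yet they disagree as soon as they are applied outside the observed range. The data points and both model forms are invented for illustration and are not drawn from any of the papers discussed.

```python
# Hypothetical observations (x, y); chosen so that both models fit exactly.
observations = [(0, 0), (1, 1), (2, 2)]


def model_linear(x):
    """First candidate model: y = x."""
    return x


def model_cubic(x):
    """Second candidate: y = x + x(x - 1)(x - 2), equal to x at x = 0, 1, 2."""
    return x + x * (x - 1) * (x - 2)


def sum_squared_error(model, data):
    """Goodness of fit of a model against observed (x, y) pairs."""
    return sum((model(x) - y) ** 2 for x, y in data)


def indistinguishable(model_a, model_b, data):
    """True when the data cannot separate the two models by fit alone."""
    return sum_squared_error(model_a, data) == sum_squared_error(model_b, data)
```

Both models have zero squared error on the three observations, but at x = 3 the linear model gives 3 and the cubic gives 9. Choosing between them requires something beyond the validation data, whether economic theory, as in econometric practice, or the judgement of stakeholders.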

The proposition that other models might be validated equally well on the available data is not an issue because embedded models are only intended to represent individuals, their behaviour and social interactions as perceived by the participating stakeholders. If different stakeholders have different perceptions, they believe their respective models correctly capture those perceptions and the models are equally well validated in relation to macro level quantitative data, then the differences are not a problem for the modeller but for the stakeholders. Perhaps in such circumstances, the models and simulation outputs will not help to resolve issues. Perhaps the differences in the models might still be a useful subject for discussion by the stakeholders. Perhaps in such cases, conflict is more apparent than real. Whatever may be the case, the problem is not one of modelling except to the extent that the modelling exercise might be of no practical use.

AXTELL R L, Epstein J M, Dean J S, Gumerman G J, Swedlund A C, Harburger J, Chakravarty S, Hammond R, Parker J and Parker M (2002) Population growth and collapse in a multiagent model of the Kayenta Anasazi in Long House Valley. Proceedings of the National Academy of Sciences of the United States of America, 99(Suppl. 3), pp. 7275-7279.

BARRETEAU O, Bousquet F and Attonaty J M (2001) Role-playing games for opening the black box of multi-agent systems: method and lessons of its application to Senegal River Valley irrigated systems. Journal of Artificial Societies and Social Simulation, 4(2), p. 5, URL <https://www.jasss.org/4/2/5.html>.

BARRETEAU O, Le Page C and D'Aquino P (2003a) Role-playing games, models and negotiation processes. Journal of Artificial Societies and Social Simulation, 6(2), p. 10, URL https://www.jasss.org/6/2/10.html.

BARRETEAU O et al. (2003b) Our companion modelling approach. Journal of Artificial Societies and Social Simulation, 6(2), p. 1, URL https://www.jasss.org/6/2/1.html.

BECU N, Perez P, Walker A, Barreteau O and Le Page C (2003) Agent based simulation of a small catchment water management in northern Thailand: Description of the CATCHSCAPE model. Ecological Modelling, 170(2-3), pp. 319-331.

BOLLERSLEV T (1986) Generalized autoregressive conditional heteroskedasticity. Journal of Econometrics, 31, pp. 307-327.

BOUSQUET F, Barreteau O, Le Page C, Mullon C and Weber J (1999) An environmental modelling approach: the use of multi-agent simulations. In Blasco F (ed.) Advances in environmental and ecological modelling, Paris: Elsevier, pp. 113-122.

CHMURA T and Pitz T (2007) An extended reinforcement algorithm for estimation of human behaviour in experimental congestion games. Journal of Artificial Societies and Social Simulation, 10(2), p. 1, URL https://www.jasss.org/10/2/1.html.

CLEMENTS M and Hendry D (1996) Intercept corrections and structural change. Journal of Applied Econometrics, 11(5), pp. 475-494.

CONTE R and Castelfranchi C (1995) Cognitive and Social Action. UCL Press.

CONTE R, Edmonds B, Moss S and Sawyer R K (2001) Sociology and social theory in agent based social simulation: A symposium. Computational and Mathematical Organization Theory, 7(3), p. 183.

DEFFUANT G (2006) Comparing extremism propagation patterns in continuous opinion models. Journal of Artificial Societies and Social Simulation, 9(3), p. 8, URL https://www.jasss.org/9/3/8.html.

DOWNING T E, Moss S and Pahl Wostl C (2000) Understanding climate policy using participatory agent based social simulation. In Moss S and Davidsson P (eds.) Multi Agent Based Social Simulation, Berlin: Springer Verlag, Lecture Notes in Artificial Intelligence, volume 1979, pp. 198-213.

EDMONDS B (1999) Syntactic Measures of Complexity. Doctoral Thesis, University of Manchester, Manchester, UK, URL http://cfpm.org/~bruce/thesis.

EDMONDS B (2006) Assessing the safety of (numerical) representation in social simulation. In Billari F, Fent T, Prskawetz A and Schefflarn J (eds.) Agent-based computational modelling, Heidelberg: Physica Verlag, pp. 195-214.

ENGLE R (1982) Autoregressive conditional heteroskedasticity with estimates of the variance of United Kingdom inflation. Econometrica, 50, pp. 987-1007.

EPSTEIN J M and Axtell R (1996) Growing artificial societies: social science from the bottom up. Complex adaptive systems, Washington, D.C.; Cambridge, Mass.; London: Brookings Institution Press: MIT Press.

FAMA E F (1963) Mandelbrot and the stable paretian hypothesis. Journal of Business, 36(4), pp. 420-429.

GELLER A (2006) Macht, Ressourcen und Gewalt: Zur Komplexität zeitgenössischer Konflikte. Eine agenten-basierte Modellierung [Power, Resources, and Violence: On the Complexity of Contemporary Conflicts. An Agent-based Model]. Zurich: vdf.

GELLER A and Moss S (2007a) The Afghan nexus: Anomie, neo-patrimonialism and the emergence of small-world networks. CPM Report 07-179, Centre for Policy Modelling, Manchester Metropolitan University Business School, URL http://cfpm.org/cpmrep179.html.

GELLER A and Moss S (2007b) Growing qawms: A case-based declarative model of Afghan power structures. CPM Report 07-180, Centre for Policy Modelling, Manchester Metropolitan University Business School, URL http://cfpm.org/cpmrep180.html.

GRANOVETTER M (1985) Economic action and social structure: The problem of embeddedness. American Journal of Sociology, 91(3), pp. 481-510.

HESSE M B (1963) Models and Analogies in Science. London: Sheed and Ward.

HOFFMANN A O I, Jager W and Von Eije J H (2007) Social simulation of stock markets: Taking it to the next level. Journal of Artificial Societies and Social Simulation, 10(2), p. 7, URL https://www.jasss.org/10/2/7.html.

JENSEN H (1998) Self-Organized Criticality: Emergent Complex Behavior in Physical and Biological Systems. Cambridge: Cambridge University Press.

JUDD K L and Tesfatsion L (eds.) (2006) Handbook of Computational Economics, Vol. 2: Agent-Based Computational Economics. Handbooks in Economics Series, North-Holland.

LEE K C and Lee N (2007) Cards: Case-based reasoning decision support mechanism for multi-agent negotiation in mobile commerce. Journal of Artificial Societies and Social Simulation, 10(2), p. 4, URL https://www.jasss.org/10/2/4.html.

MACKENZIE D (2003) Long-Term Capital Management and the sociology of arbitrage. Economy and Society, 32(3), pp. 349-380.

MÄKI U (2005) Models are experiments, experiments are models. Journal of Economic Methodology, 12(2), pp. 303-315. Cited by (Windrum et al., 2007).

MALERBA F, Nelson R R, Orsenigo L and Winter S G (1999) 'History-friendly' models of industry evolution: the computer industry. Industrial and Corporate Change, 8(1), pp. 3-41.

MANDELBROT B (1997) Fractales, Hasard et Finance. Paris: Flammarion.

MAYER T (1975) Selecting economic hypotheses by goodness of fit. The Economic Journal, 85(340), pp. 877-883.

MOSS S (2002) Policy analysis from first principles. Proceedings of the US National Academy of Sciences, 99(Suppl. 3), pp. 7267-7274.

MOSS S and Edmonds B (2005) Sociology and simulation: Statistical and qualitative cross-validation. American Journal of Sociology, 110(4), pp. 1095-1131.

NEWELL A (1990) Unified Theories of Cognition. Cambridge MA: Harvard University Press.

PEFFER G and Llacay B (2007) Higher-order simulations: Strategic investment under model-induced price patterns. Journal of Artificial Societies and Social Simulation, 10(2), p. 6, URL https://www.jasss.org/10/2/6.html.

PFENNING F (2004) Lecture Notes on The Curry-Howard Isomorphism. Carnegie Mellon University, Pittsburgh PA, URL http://www.cs.cmu.edu/~fp/courses/312/handouts/23-curryhoward.pdf.

POLHILL J G, Pignotti E, Gotts N M, Edwards P and Preece A (2007) A semantic grid service for experimentation with an agent-based model of land-use change. Journal of Artificial Societies and Social Simulation, 10(2), p. 2, URL https://www.jasss.org/10/2/2.html.

VATTIMO G (1988) The End of Modernity: Nihilism and Hermeneutics in Postmodern Culture. Baltimore MD, USA: Johns Hopkins University Press. Translated by Jon R. Snyder.

WEISBUCH G, Kirman A and Herreiner D (2000) Market organisation and trading relationships. The Economic Journal, 110(463), pp. 411-436.

WESTERA W (2007) Peer-allocated instant response (pair): Computational allocation of peer tutors in learning communities. Journal of Artificial Societies and Social Simulation, 10(2), p. 5, URL https://www.jasss.org/10/2/5.html.

WINDRUM P, Fagiolo G and Moneta A (2007) Empirical validation of agent-based models: Alternatives and prospects. Journal of Artificial Societies and Social Simulation, 10(2), p. 8, URL https://www.jasss.org/10/2/8.html.

© Copyright Journal of Artificial Societies and Social Simulation, [2008]