An Objective-Based Perspective on Assessment of Model-Supported Policy Processes

Journal of Artificial Societies and Social Simulation

12 (4) 3

<https://www.jasss.org/12/4/3.html>


Received: 14-Aug-2009 Accepted: 22-Aug-2009 Published: 31-Oct-2009

Abstract


|

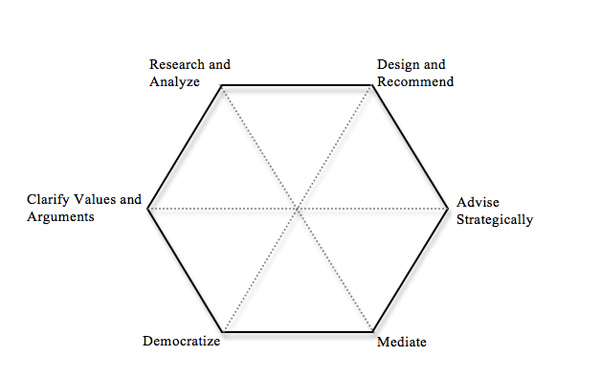

| Figure 1 Overview of activities of policy analysis (after Mayer et al. 2004) |

|

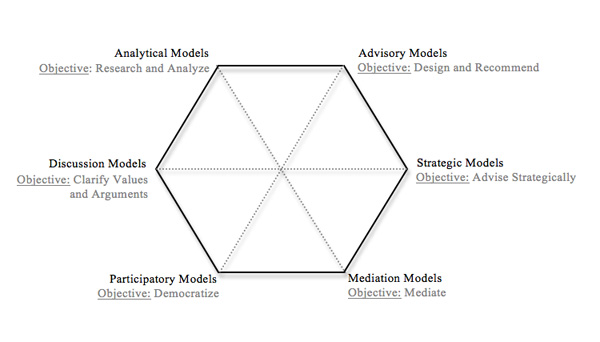

| Figure 2. An overview of the types of models for supporting policy-making |

|

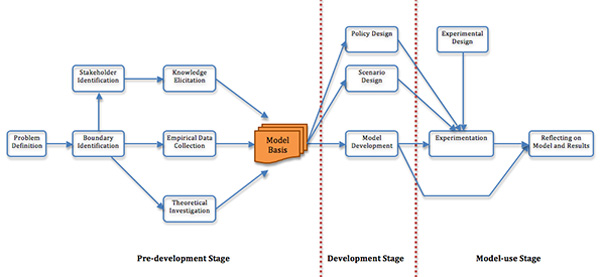

| Figure 3. Modeling stages in general |

2 The reader may refer to Mayer et al. (2004) for an extensive introduction to the hexagon framework.

3 Several different classifications of validation types, and different sets of labels for those types, can be found in the literature, as will be discussed later. In this work, we follow the classification discussed by Barlas (1996).

ANDERSEN D F, Richardson G P and Vennix J A M. Group model building: adding more science to the craft. System Dynamics Review 1997;13(2); 187-201.

ARGOTE L. Organizational learning: creating, retaining, and transferring knowledge. Kluwer Academic: Boston;1999.

ARGOTE L and Epple D. Learning curves in manufacturing. Science 1990;247; 920-924.

BALCI O. Validation, verification, and testing techniques throughout the life cycle of a simulation study. Annals of Operations Research 1994;53; 121-173.

BARLAS Y. Formal aspects of model validity and validation in system dynamics. System Dynamics Review 1996;12(3); 183-210.

BARRETEAU O. Our companion modelling approach. Journal of Artificial Societies and Social Simulation 2003;6(2); 1 https://www.jasss.org/6/2/1.html.

BARRETEAU O, Bousquet F and Attonaty J M. Role-playing games for opening the black box of multi-agent systems: method and lessons of its applications to Senegal River Valley irrigation system. Journal of Artificial Societies and Social Simulation 2001;4(2); 5 https://www.jasss.org/4/2/5.html.

BECKER J, Niehaves B and Klose K. A framework for epistemological perspectives on simulation. Journal of Artificial Societies and Social Simulation 2005;8(4); 1 https://www.jasss.org/8/4/1.html.

BOERO R and Squazzoni F. Does empirical embeddedness matter? Methodological issues on agent-based models for analytical social science. Journal of Artificial Societies and Social Simulation 2005;8(4); 6 https://www.jasss.org/8/4/6.html.

BOTS P W G and van Daalen C E. Participatory model construction and model use in natural resource management: a framework for reflection. Systemic Practice and Action Research 2008;21; 389-407.

BRENNER T and Werker C. A taxonomy of inference in simulation models. Computational Economics 2007;30; 227-244.

BRENNER T and Werker C. Policy advice derived from simulation models. Journal of Artificial Societies and Social Simulation 2009;12(4); 2 https://www.jasss.org/12/4/2.html.

CARSON E R and Flood R L. Model validation: philosophy, methodology and examples. Transactions of the Institute of Measurement & Control 1990;12(4); 178-185.

CHECKLAND P and Scholes J. Soft systems methodology in action: a 30-year retrospective. Wiley: Chichester, Eng.; New York;1999.

COYLE R G. System dynamics modelling: a practical approach. Chapman & Hall: London;1996.

D'AQUINO P, Le Page C, Bousquet F and Bah A. Using self-designed role-playing games and a multi-agent system to empower a local decision-making process for land use management: the SelfCormas experiment in Senegal. Journal of Artificial Societies and Social Simulation 2003;6(3); 5 https://www.jasss.org/6/3/5.html.

DALKIRAN E. Scuba diving simulator: Testing real time decision making in a feedback environment. 2006. MSc Thesis; Bogazici University, Istanbul.

DUIJN M, Immers L H, Waaldijk F A and Stoelhorst H J. Gaming approach route 26: a combination of computer simulation, design tools and social interaction. Journal of Artificial Societies and Social Simulation 2003;6(3); 7 https://www.jasss.org/6/3/7.html.

DUNN W N. A pragmatic strategy for discovering and testing threats to the validity of socio-technical experiments. Simulation Modelling Practice and Theory 2002;10; 169-194.

EAC. FORWARD - Freight Options for Road, Water and Rail for the Dutch, Final Report. RAND: Santa Monica;1996.

EXELBY M J and Lucas N J D. Competition in the UK market for electricity generating capacity. A game theory analysis. Energy Policy 1993;21(4); 348-354.

FERGUSON N M, Cummings D A T, Fraser C, Cajka J C, Cooley P C and Burke D S. Strategies for mitigating an influenza pandemic. Nature 2006;442(7101); 448-452.

FORRESTER J W. Urban dynamics. M.I.T. Press: Cambridge, Mass.;1969.

FORRESTER J W and Senge P M. Tests for building confidence in system dynamics models. In: A. A. Legasto, J. W. Forrester and J. M. Lyneis (Eds). System Dynamics. North-Holland: Amsterdam;1980.

FRANK U and Troitzsch K. Epistemological perspectives on simulation. Journal of Artificial Societies and Social Simulation 2005;8(4); 7 https://www.jasss.org/8/4/7.html.

GILBERT N and Troitzsch K. Simulation for the social scientist. Open University Press: Berkshire;2005.

GURUNG T R, Bousquet F and Trebuil G. Companion modeling, conflict, and institution building: sharing irrigation water in the Lingmuteychu watershed, Bhutan. Ecology and Society 2006;11(2); 36.

HOBBS B F and Kelly K A. Using game theory to analyze electric transmission pricing policies in the United States. European Journal of Operational Research 1992;56(2); 154-171.

KÜPPERS G and Lenhard J. Validation of simulation: Patterns in the social and natural sciences. Journal of Artificial Societies and Social Simulation 2005;8(4); 3 https://www.jasss.org/8/4/3.html.

LE BARS M and Le Grusse P. Use of a decision support system and a simulation game to help collective decision-making in water management. Computers and Electronics in Agriculture 2008;62(2); 182-189.

LUNA-REYES L F and Andersen D L. Collecting and analyzing qualitative data for system dynamics: methods and models. System Dynamics Review 2003;19(4); 271-296.

MAYER I S, van Daalen C E and Bots P W G. Perspectives on policy analyses: a framework for understanding and design. International Journal of Technology, Policy and Management 2004;4(2); 169-190.

MENNITI D, Pinnarelli A and Sorrentino N. Simulation of producers behaviour in the electricity market by evolutionary games. Electric Power Systems Research 2008;78(3); 475-483.

MISER H J and Quade E S. Validation. In: H. J. Miser and E. S. Quade (Eds). Handbook of Systems Analysis: Craft Issues and Procedural Choices. Elsevier Science Publishing;1988. p. 527-563.

MOSS S. Alternative approaches to the empirical validation of agent-based models. Journal of Artificial Societies and Social Simulation 2008;11(1); 5 https://www.jasss.org/11/1/5.html.

MOSS S and Edmonds B. Sociology and simulation: statistical and qualitative cross-validation. American Journal of Sociology 2005;110(4); 1095-1131.

PRELL C, Hubacek K and Reed M. 'Who's in the network?' when stakeholders influence data analysis. Systemic Practice and Action Research 2008;21; 443-458.

RAMANATH A M and Gilbert N. The design of participatory agent-based social simulations. Journal of Artificial Societies and Social Simulation 2004;7(4); 1 https://www.jasss.org/7/4/1.html.

ROTMANS J. IMAGE: An Integrated Model to Assess the Greenhouse Effect. Kluwer Academic Publishing: Dordrecht;1990.

ROUWETTE E. Group Model Building as Mutual Persuasion. 2003. PhD Thesis; Radboud University, Nijmegen.

ROWE G and Frewer L J. Public participation methods: a framework for evaluation. Science, Technology, & Human Values 2000;25(1); 3-29.

SARGENT R G. Validation and verification of simulation models. Winter Simulation Conference 2004.

SAYSEL A K, Barlas Y and Yenigün O. Environmental sustainability in an agricultural development project: a system dynamics approach. Journal of Environmental Management 2002;64(3); 247-260.

SCHELLING T C. Dynamic models of segregation. Journal of Mathematical Sociology 1971;1; 143-186.

SCHMID A. What is the truth of simulation? Journal of Artificial Societies and Social Simulation 2005;8(4); 5 https://www.jasss.org/8/4/5.html.

SOARES-FILHO B S, Nepstad D C, Curran L M, Cerqueira G C, Garcia R A, Ramos C A, Voll E, McDonald A, Lefebvre P and Schlesinger P. Modelling conservation in the Amazon basin. Nature 2006;440(7083); 520-523.

STADLER M, Kranzl L, Huber C, Haas R and Tsioliaridou E. Policy strategies and paths to promote sustainable energy systems - the dynamic Invert simulation tool. Energy Policy 2007;35(1); 597-608.

STAVE K A. Using system dynamics models to improve public participation in environmental decisions. System Dynamics Review 2002;18(2); 139-167.

STERMAN J. Business dynamics: systems thinking and modeling for a complex world. Irwin/McGraw-Hill: Boston;2000.

STROUD P, Del Valle S, Sydoriak S, Riese J and Mniszewski S. Spatial dynamics of pandemic influenza in a massive artificial society. Journal of Artificial Societies and Social Simulation 2007;10(4); 9 https://www.jasss.org/10/4/9.html.

STRUBEN J. Essays on transition challenges for alternative propulsion vehicles and transportation systems. 2006. PhD Thesis; M.I.T., Boston.

TAKADAMA K, Kawai T and Koyama Y. Micro- and macro-level validation in agent-based simulation: reproduction of human-like behavior and thinking in a sequential bargaining game. Journal of Artificial Societies and Social Simulation 2008;11(2); 9 https://www.jasss.org/11/2/9.html.

TROITZSCH K. Validating simulation models. 18th European Simulation Multiconference 2004.

WINDRUM P, Fagiolo G and Moneta A. Empirical validation of agent-based models: Alternatives and prospects. Journal of Artificial Societies and Social Simulation 2007;10(2); 8 https://www.jasss.org/10/2/8.html.

YILMAZ L. Validation and verification of social processes within agent-based computational organization models. Computational and Mathematical Organization Theory 2006;12; 283-312.

YÜCEL G and Barlas Y. Pattern-based system design/optimization. 25th International System Dynamics Conference 2007; Boston, USA.

ZAGONEL A. Model conceptualization in group model building: a review of the literature exploring the tension between representing reality and negotiating a social order. International System Dynamics Conference 2002; Palermo, Italy.

ZEIGLER B P. Theory of modelling and simulation. Wiley: New York;1976.

ZHAO H. Simulation and analysis of dealers' returns distribution strategy. Winter Simulation Conference 2001.


© Copyright Journal of Artificial Societies and Social Simulation, [2009]